6

Understanding the Causes

[H]ow dishonesty works . . . depends on the structure of our daily environment.

Synopsis: Improving understanding of why researchers commit misconduct and detrimental research practices (DRPs) is important because this understanding should inform the responses of the research enterprise and its stakeholders. For instance, if the only perpetrators of research misconduct and DRPs are a very small number of bad people engaged in self-interested deception and shortcuts, then the potentially useful responses of the research enterprise might be limited to increased vigilance in uncovering these “bad apples” and ending their research careers. To the extent there are other factors contributing to research misconduct and DRPs, such as institutional environments for research integrity or incentive structures significantly shaped by the policies and practices of journals and funding agencies, then other responses are required. Recent advances in understanding human cognition have implications for the response of the research enterprise to problems.

Why people engage in criminal or other pathological behavior and the conditions that encourage or discourage such behavior are issues of perennial interest in the behavioral and social sciences. Recent work provides some useful insights on these questions that are relevant to understanding why and under what conditions researchers commit misconduct and engage in detrimental research practices. Current patterns of U.S. research funding and organization are contributing to research environments with characteristics that behavioral and social sciences research suggests facilitate and encourage detrimental behavior in science, with some evidence of negative effects. More research on the causes of research misconduct and DRPs is needed in order to develop better strategies for prevention.

WHY IS IT IMPORTANT TO BETTER UNDERSTAND THE CAUSES OF RESEARCH MISCONDUCT?

Beliefs and assumptions about the causes of research misconduct can shape the responses of the research enterprise and its constituent stakeholders. For example, one theory that has been expressed by scientists is that misconduct is rare and due to the “ineradicable presence of fraudsters” (James, 1995). Under this formulation, not much can be done by research institutions or others to prevent misconduct or foster integrity; the fraudulent “bad apples” can only be discovered and removed from the scientific barrel through the process of others trying and failing to replicate their work.

Indeed, current policies and practices for addressing research misconduct, described in Chapter 4 and Chapter 7, largely focus on the behavior of individuals. Specifically, federal policy defines prohibited individual behaviors as research misconduct and sets out procedures for investigating the individuals alleged to have engaged in this behavior. The policy also covers the corrective actions that might be taken against individuals in response. In the view of one expert, “The ‘bad-apple’ metaphor represents an old ideology, protective of science but, at the same time, perpetuating an ineffective way of dealing with research misconduct” (Redman, 2013).

Alternatively, a broader understanding that includes theories of misconduct in which individual failings interact with aspects of the immediate lab or institutional research environment—or even with larger structural conditions in research such as competition for funding or workforce imbalances—to cause a higher or lower incidence of misconduct would lead to different response strategies than those based on the bad-apple theory. Interventions directed at individual researchers that go beyond the investigation of alleged misconduct, such as better education and training or closer supervision, might be combined with efforts to improve research environments or even address structural issues.

When Responsible Science was released, the potential but as yet undocumented and little-understood importance of environmental factors in affecting integrity in science was acknowledged in the statement that “factors in the modern research environment contribute to misconduct in science” (NAS-NAE-IOM, 1992). A range of possible reasons were posited: (1) career and funding pressures, (2) institutional failures of oversight, (3) commercial conflicts of interest, (4) inadequate training, (5) erosion of standards of mentoring, and (6) part of a larger pattern of social deviance. Responsible Science specifically “made no judgment about the significance of any one factor,” concluding that the alternative “bad person” and “environmental factors” hypotheses are “possibly complementary.”

A similar stance is seen in Integrity in Scientific Research: Creating an Environment That Promotes Responsible Conduct (IOM-NRC, 2002). This report explicitly recognized the important role of the local environment—the lab, the department, the university—in shaping the behavior of scientists. Like the 1992 report before it, the 2002 Institute of Medicine–National Research Council report took an essentially agnostic stance about larger structural influences on the integrity of research practices, citing the lack of specific empirical evidence to guide policy.

If more reliable knowledge about the causes of misconduct can be attained, including the likely role of environmental factors and their interaction with the psychology and cognitive limitations of individuals, the research enterprise and its constituent stakeholders will be able to use this knowledge to refine their approaches to preventing misconduct as well as to discovering and addressing misconduct after it has occurred. Examining the evidence on this topic bears directly on several elements of the committee’s task statement, including the need to assess “the impacts on integrity of changing trends in the dynamics of the research enterprise,” “the advantages and disadvantages of enhanced educational efforts,” and “the appropriate roles for government agencies, research institutions and universities, and journals in promoting responsible research practices.”

Choosing to stick with assumptions that are not supported by evidence as the basis for strategies to prevent and address research misconduct and detrimental research practices (DRPs) may perpetuate suboptimal responses on the part of the community, causing the negative consequences and damage resulting from misconduct that are described in Chapter 5 to be greater than they need to be.

INSIGHTS FROM THE SOCIAL AND BEHAVIORAL SCIENCES ABOUT RESEARCH MISCONDUCT AND DETRIMENTAL RESEARCH PRACTICES

Decades of research in the social and behavioral sciences have generated important insights on why humans deviate from the behavioral norms of the groups to which they belong. Recent research has yielded insights with implications for understanding the causes of research misconduct and DRPs. While some work has been done to apply broader social and behavioral sciences insights to researchers and research environments, further efforts along these lines hold the promise of helping the research enterprise to better address research misconduct and DRPs and even reduce their incidence.

For decades, examinations of research misconduct and DRPs have been framed around concepts of deviance, explained most simply by reference to psychopathology, moral defect, or poor upbringing—in short, factors residing primarily within the individual, and typically seen as predating their involvement in research. This parallels the evolution of understanding of human conduct in other arenas, but this perspective falls short in explaining research misconduct and DRPs for several reasons: (1) the individual defects that supposedly lead to deviant behavior in science are vague and unmeasured; (2) the characteristics that supposedly define defective individuals may also be characteristics that are highly valued among eminent scientists, such as creativity, original thinking, and self-assurance; and (3) many of these traits have been observed among “scientists whose actions or ideas are controversial or inconvenient, including whistleblowers” (Gino and Ariely, 2011; Hackett, 1994). Hackett also asserts “the individualistic explanation is too convenient and too self-serving of the interests of established scientists to be accepted on faith and assertion without evidence” (Hackett, 1994).

Evolution of Thinking on Causes of Deviant Behavior

Across a diverse range of fields and theories, a broad spectrum of causes of deviant behavior has been explored, including mental illness and moral defect, criminality, institutional failures, rational response to perverse incentive structures, and nonrational behavior arising from cognitive limitations, biases, or impaired decision making. Considerations of potentially motivating factors, as well as potentially mitigating influences, have been similarly broad, ranging from avarice to hubris to loss aversion to maladaptive coping. Additionally, views of human behavior as being largely intentional and rational have evolved to recognize the frequent presence of unintentional and nonrational elements in human behavior. Just as we now know that people tend to eat more when food is placed on larger plates, evidence is emerging that the conduct of those around us and the structure of the environment in which we work affect the integrity of choices made in performing work (Ariely, 2012; Wansink and van Ittersum, 2006). Examining these past conceptualizations can inform thinking about research misconduct and DRPs and demonstrate that this is an arena worthy of further empirical investigation.

The deviance approach that is prominent in fields such as the sociology of crime and delinquency generally holds that there are “good” people, who behave well, and there are “bad” people, who behave badly, or good people who make bad choices for reasons of personal defect or gain (Ben-Yehuda, 1986; Folger and Cropanzano, 1998; Hirschi, 1969; Matza, 1964, 1969; Sovacool, 2008). A second group of theories seen in fields such as organizational psychology, behavioral economics, and decision science is aimed primarily at understanding behavior that, while it may still be somewhat intentional, may result from biased, nonrational, and in some cases subconscious cognitive processes (Kahneman and Tversky, 1979; Thaler and Sunstein, 2008; Vaughan, 1999). Despite the diversity in viewpoints, these theoretical perspectives mainly conceive of deviance as arising from the interaction of individuals with salient aspects of their social environments.

Considerations of human behavior frequently speculate about motives for behavior. Among the motivating factors for deviance, perhaps the most commonly suggested is avarice. The simple desire for personal gain seems a natural explanation for the behaviors of self-interested individuals, not sufficiently held in check whether by self-control or threat of punishment. In fact, greed intuitively fits the “bad actor” individual defect explanation of deviant behavior, since moral defect may allow for the unhealthy expression of self-interest as greed. But while avarice may explain some deviance, it is likely too simple and convenient an explanation for most deviance in science.

Some other proposed motivating factors are thought to operate through mechanisms of human emotion or cognition. Motives consonant with recognized features of human psychology include the blockage of legitimate goals, leading to desperation, alienation, or other aversive affective states (Agnew, 1992, 2006; Cohen, 1965; Merton, 1938). Some theories posit that deviant behavior will not result unless environmental conditions also lead to the activation of “will” and the neutralization of moral reasoning, or through the generation of negative affect (i.e., the experience of negative emotion) in individuals and their attempts at coping with that affect (Agnew, 1992, 2006; Ben-Yehuda, 1986; Matza, 1969). Other theories have suggested the importance of an intrinsic sense of justice or fairness (typically in terms of perceived violations with respect to the individual in question) (Colquitt et al., 2001; Tyler and Blader, 2003).

The theory of ego depletion has recently been posed by social psychologists to understand poor decision making. It sees the availability of individual willpower or self-control as varying over time as a function of factors such as sleep deprivation, low blood glucose, or resource scarcity (Baumeister and Tierney, 2011; Baumeister et al., 2000; Gino et al., 2011; Mani et al., 2013).

Kahneman and Tversky’s (1979) Prospect Theory addresses decision making under uncertainty, focusing attention on the “bounded rationality” of actors. This theory appears particularly relevant due to the parallel ideas that, on the one hand, fear of loss (loss aversion) tends to be a much stronger motivator of behavior than does the potential for gain, and on the other hand that individuals tend toward risk aversion when confronted with potential gains but bias toward risk seeking when confronted with avoiding potential losses. Applied to a research setting, this theory would imply that, other things being equal, researchers facing a potential loss of position or resources would be more inclined to take risks—including research misconduct or DRPs—than those seeking to gain status or resources.1
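The asymmetry described above can be made concrete with a small numerical sketch. The functional form and the parameter values below (a curvature of 0.88 and a loss-aversion coefficient of 2.25) are assumptions taken from commonly cited later estimates (Tversky and Kahneman, 1992), not from this chapter, and probability weighting is deliberately left out. The function names and the stakes of 50 and 100 are hypothetical and chosen only for illustration.

```python
# Illustrative prospect-theory value function (no probability weighting).
# ALPHA and LAMBDA are assumed, commonly cited estimates; they do not come
# from this chapter and are used here only to show the shape of the argument.
ALPHA = 0.88   # curvature: diminishing sensitivity to larger gains and losses
LAMBDA = 2.25  # loss aversion: a loss weighs more than an equal-sized gain


def value(outcome: float) -> float:
    """Subjective value of a gain (positive) or loss (negative) relative to the status quo."""
    if outcome >= 0:
        return outcome ** ALPHA
    return -LAMBDA * (-outcome) ** ALPHA


def prospect_value(gamble: list[tuple[float, float]]) -> float:
    """Probability-weighted subjective value of (probability, outcome) pairs."""
    return sum(p * value(x) for p, x in gamble)


# Hypothetical choices: a sure outcome versus a 50/50 gamble with the same expected amount.
sure_gain = [(1.0, 50.0)]
risky_gain = [(0.5, 100.0), (0.5, 0.0)]
sure_loss = [(1.0, -50.0)]
risky_loss = [(0.5, -100.0), (0.5, 0.0)]

prefers_sure_gain = prospect_value(sure_gain) > prospect_value(risky_gain)
prefers_risky_loss = prospect_value(risky_loss) > prospect_value(sure_loss)
print(prefers_sure_gain, prefers_risky_loss)
```

Under these assumed parameters the sure gain is valued above the gamble, while the gamble is valued above the sure loss. This is the pattern the paragraph describes: caution when something can be gained, risk seeking when something may be lost.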

Experimental work in the social and behavioral sciences has shed light on how these theoretical perspectives can be applied to specific problems such as cheating by students that could carry implications for research practices. Ariely and his colleagues, in a series of experiments, have found that the extent to which human beings are willing to cheat and engage in dishonest behavior “depends on the structure of our daily environment” (Ariely, 2012). A key finding is that maintaining a self-image of honesty is important to people, but many are able to engage in very low levels of cheating and simultaneously adjust their explanations to retain their own self-regard (Mazar et al., 2008).

A recent compilation of decades of research on cheating by students, for example, focused on five elements that combine to contribute to an environment that is conducive to cheating: (1) a strong emphasis on performance, (2) very high stakes, (3) extrinsic motivation, (4) a low expectation of success, and (5) a peer culture that accepts or endorses corner cutting or cheating (Lang, 2013). Among the top rationalizations that students offer for cheating are the perceptions that the teacher or assessment system is unfair, that there is little chance of success, or both (Brent and Atkisson, 2011). This tracks with the literature on organizational justice showing that when humans perceive a workplace as arbitrary and unfair, they find it more justifiable to cheat by stealing or by calling in sick, and are more likely to do so, than when the workplace is perceived as being fair (Folger and Cropanzano, 1998).

___________________

1 This is not meant to imply that researchers facing a potential loss would choose risky research topics. Indeed, such a researcher might exhibit risky behavior in the form of misconduct while working on a topic currently popular in his or her field.

Implications for Understanding and Preventing Research Misconduct and Detrimental Research Practices

The foregoing sketches out an evolution of thinking over time—supported by empirical work—from a focus on deviance as stemming from a bad actor’s rational set of choices to a more nuanced understanding of the multifactorial influences on human decision making. Humans are influenced by a wide range of cognitive biases and errors that infect and disrupt rational thought—even when we think we are being rational. No single theory is likely to be adequate to completely explain the full spectrum of behavior encompassed by research misconduct and detrimental research practices, but to assume that researchers are not subject to the same kinds of influences and defects in their decision making that afflict humans more generally would also be a mistake.

Some preliminary, limited research has attempted to bring some of this theoretical richness to bear directly on the questions of research misconduct and detrimental research practices, but the existing research has not been well positioned to provide compelling tests of the hypotheses suggested by these perspectives (Antes et al., 2007; DuBois et al., 2016; Martinson et al., 2006, 2010; Medeiros et al., 2014; Mumford et al., 2007, 2008). An analysis of research misconduct case files showed that a variety of causes and rationalizations could be identified, including personal and professional stressors, organizational climate, and personality factors (Davis et al., 2007). Generating more precise insights and more adequate tests of the theoretical frameworks useful for understanding research misconduct and detrimental research practices would require far more detailed longitudinal, perhaps experimental (and perhaps social-network–based, in some cases) data than have been amassed and examined in the study of research integrity.

While questions of the prevention of research misconduct are implicit in most, if not all, of the theoretical perspectives discussed above, some who have studied the topic have been more explicit in differentiating perspectives on prevention. In 2005, Douglas Adams and Kenneth Pimple offered a criminological perspective on the topic of prevention of research misconduct, arguing that any instance of misconduct can be described as having two essential elements: a propensity on the part of the individual to engage in a deviant behavior, and the opportunity to do so (Adams and Pimple, 2005). This is a somewhat different take on the joint person/environment explanatory framework seen in other theories, and these authors argue that anticipated difficulty in altering the propensity of individuals to deviate from norms suggests that opportunities for misbehavior should be reduced. They do not address the topic seen in many other theories of trying to address motivations for deviant behavior. This line of work is largely consistent with situational prevention approaches in criminology that seek to increase the risks and costs of specific categories of crime while reducing rewards through manipulation of the environment and other techniques (Clarke, 1995).

In contrast to a narrow focus on misconduct, Nylenna and Simonsen have offered an epidemiologic perspective that draws attention to the entire distribution of behavior composed of research misconduct and detrimental research practices (Nylenna and Simonsen, 2006). These writers start from Geoffrey Rose’s now-classic population health perspective, which argued that when a disease risk factor is widespread in a population (e.g., hypertension), attempting to bring the entire risk distribution down should be the objective: reducing the overall risk distribution only slightly may be a more effective approach than simply trying to eliminate the risk only in the most “high risk” individuals (Rose, 1985). That is, Rose argued that reducing blood pressure population-wide by only a few mm Hg is a more effective way to reduce heart disease than merely bringing the blood pressure of a smaller group of hypertensives below the 140/90 threshold. Nylenna and Simonsen’s insight was that, like disease risks in a population, there is a range of DRPs in science beyond the most extreme examples of misconduct that meet the federal definition, and that focusing on the broader range of undesirable, research-related behavior might be more beneficial than a single-minded focus on fabrication, falsification, and plagiarism.
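The premise behind Rose’s argument, that a large number of people at modest risk can account for more cases than a small number at high risk, can be illustrated with a minimal sketch. Every number below is hypothetical: the mean and spread of blood pressure, the slope of the risk curve, and the helper names are assumptions made for illustration, not estimates from Rose (1985) or from this chapter. The sketch does not compare specific interventions; it only shows where the expected cases sit in the distribution.

```python
import math
from statistics import NormalDist

# All numbers below are hypothetical and chosen only for illustration.
MEAN_BP, SD_BP = 130.0, 15.0  # assumed population distribution of systolic pressure (mm Hg)
THRESHOLD = 140.0             # "high risk" cutoff mentioned in the text
RISK_SLOPE = 0.03             # assumed log-linear rise in risk per mm Hg

population = NormalDist(MEAN_BP, SD_BP)


def relative_risk(bp: float) -> float:
    """Hypothetical individual risk, relative to a person at 120 mm Hg."""
    return math.exp(RISK_SLOPE * (bp - 120.0))


def expected_cases(low: float, high: float, step: float = 0.1) -> float:
    """Risk-weighted population mass between low and high (midpoint rule)."""
    total = 0.0
    n = int(round((high - low) / step))
    for i in range(n):
        bp = low + (i + 0.5) * step
        total += relative_risk(bp) * population.pdf(bp) * step
    return total


# Integrate over roughly six standard deviations on each side of the mean.
below = expected_cases(40.0, THRESHOLD)
above = expected_cases(THRESHOLD, 260.0)

share_of_people_above = 1.0 - population.cdf(THRESHOLD)
share_of_cases_below = below / (below + above)
print(round(share_of_people_above, 2), round(share_of_cases_below, 2))
```

With these assumed inputs, roughly a quarter of the population sits above the cutoff, yet more than half of the expected cases arise among people below it. By analogy, a strategy aimed only at the most extreme research behavior may leave much of the overall burden of DRPs untouched, although the size of that effect in research settings is an empirical question this sketch cannot answer.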

Like Nylenna and Simonsen, Weed brings an epidemiologic perspective to the prevention of research misconduct (Weed, 1998). Weed distinguishes three types of prevention, analogous with concepts of prevention in medicine: primary prevention, secondary prevention, and tertiary prevention. He sees primary prevention as “identifying and removing causes of events and as identifying factors whose presence (rather than absence) actively reduces the occurrence of those events” (Weed, 1998). He discusses secondary prevention as early detection to increase opportunities for discovering instances of misconduct and “treatment” through “procedures for investigating cases as well as the sanctions delivered to those responsible for the misconduct” (Weed, 1998). Auditing and increased monitoring of junior researchers are cited as examples of secondary prevention. In terms of tertiary prevention, Weed suggests that it “can also be applied to scientific misconduct, inasmuch as those who commit such misconduct may require rehabilitation before they return to scientific practice” (Weed, 1998).

Weed notes the difficulties in knowing anything about scientific misconduct with any level of certainty, owing to multiple factors, including the hiddenness of the behaviors in question, but also the lack of existing data and general absence of resources devoted to their study. In particular, he notes:

Indeed, in the foregoing analysis, a host of such questions have emerged. Answers will be difficult to obtain, especially if precise scientific methodologies are to be employed. But then, we are scientists, and solving difficult empirical problems is what we do best. Perhaps the essential question is less methodological than motivational: Are we as scientists willing to study our conduct as scientists? If so, then one day we may discover why we suffer from an important and sometimes disabling professional affliction and what works to prevent it.

I am not suggesting, however, that we should postpone interventions until we fully understand the etiology, including the underlying biological, behavioral, and social mechanisms involved in the range of activities we call scientific misconduct. (Weed, 1998)

Reason’s work on high-reliability systems offers a framework for treating certain behaviors as possibly amenable to quality improvement and quality assurance mechanisms, and suggests that doing so may be a more effective way of reducing undesirable behavior than the historical focus on criminality (Reason, 2000). Such a perspective may be particularly salient in considering the potential role of sloppy research practices in contributing to the reproducibility problem, which is discussed in Chapter 5 and Chapter 7. Reason distinguishes a “person approach” to error from a “system approach” (Reason, 2000). He notes that “high reliability” organizations (including air traffic control centers, nuclear power plants, and nuclear aircraft carriers) are characterized by a focus on error management at the systems level more than the individual level. Reason’s logic has also been adopted in widespread attempts to reduce medical errors and to move medicine from a “blame and shame” culture to a “reporting and feedback” culture, with public reporting of individual and organizational performance being crucial (Leape, 2010). There is potential value in bringing such a perspective to bear, particularly in the promotion of research best practices and other quality improvement efforts recently being considered to improve the reliability and reproducibility of research findings, as discussed in more detail in Chapter 7.

CURRENT FUNDING AND ORGANIZATIONAL TRENDS AND THEIR NEGATIVE IMPACTS ON RESEARCH ENVIRONMENTS

Research environments at institutions and laboratories that produce outstanding work have long been characterized by significant competitiveness and pressure to perform. However, patterns of funding and organization that have emerged over the past few decades in the United States have created environments increasingly characterized by elements identified above that are associated with cheating, such as very high stakes, a very low expectation of success, and peer cultures that accept corner cutting. These conditions are best documented in the single largest component of the research enterprise in the United States—biomedical research—but aspects of these problems are appearing in other disciplines (Alberts et al., 2014; Casadevall and Fang, 2012; Stephan, 2012b; Teitelbaum, 2008).

Data strongly suggest that some fields have been producing more highly trained students with specialized research training than can be absorbed in research. According to the report of a National Institutes of Health (NIH) working group that examined the biomedical research workforce, the production of PhDs in the biomedical sciences has closely tracked the NIH budget (NIH, 2012b). Thus, the number of basic biomedical PhDs began to increase substantially in 2004, just as the doubling of the NIH budget was ending, which reflects the 5- to 7-year graduate student cycle. Over the past two decades, the number of basic biomedical graduate students has doubled (NIH, 2012b). Yet the percentage of PhDs who move into tenure-track positions has dropped from 34 percent in 1993 to 26 percent today, and the percentage of graduates in the biomedical sciences who say that they are employed in occupations closely related to their PhD field dropped from 70 percent in 1997 to 59 percent in 2008 (NIH, 2012b).

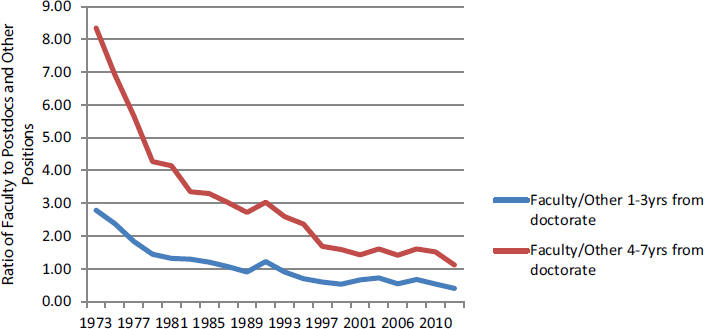

Across all of science and engineering, annual production of PhDs in the United States is roughly 10 times the number of open faculty positions (Schillebeeckx et al., 2013). Figure 6-1 shows the ratio of younger researchers who hold faculty positions to those holding postdoctoral fellowships and other temporary positions and how that ratio has declined over time. As discussed further below, the purpose of examining and highlighting labor market trends and changes for PhD scientists is not to imply that all or most science and engineering PhD recipients should ultimately be employed in academic research. Yet the scope of the discrepancy must lead us to ask: For what careers are all of these PhDs being trained?

In addition to the low probability of success, achieving a tenure-track position is a lengthy process. While the time to degree and age at degree have remained stable over the past 15 years, the overall length of training in the biomedical sciences, including graduate school and postdoctoral appointments, is longer than for comparable disciplines, and those who go on to tenure-track positions do so at an older age than PhDs in other disciplines. The average age at which PhD biomedical investigators get their first R01 grant from NIH is 42, an age at which investigators in other disciplines, not to mention nonacademic professionals, typically are already well established in their careers. The overall age profile of principal investigators has also risen.

This is not to say that all of those who have completed the education and training required of biomedical research principal investigators necessarily want to or have to go into research. Some medical schools have made it a high priority to train their PhD candidates for a variety of careers over the past decade. And students want other kinds of careers. Some institutions do a commendable job of communicating career information to students. Many trained researchers can and do find rewarding and successful careers in industry, government, and nonprofit organizations.

However, PhD scientists have received specialized training for this work, and academic research has traditionally employed a significant percentage of biomedical PhD recipients. If the number of PhDs rises while the number of tenure-track positions goes down, this implies that there will be heightened competition for those positions and that a growing fraction of PhD scientists will need to find employment in other sectors. Some alternative careers require fewer years of education and training (a professional science master’s, for example) than a tenure-track position in biomedical research. In aggregate terms, the end result is a system that relies more heavily on postdocs, graduate students, and other nonfaculty researchers relative to faculty than it did in the past.

Surveys have been taken to better understand the causes and consequences of apparent workforce imbalances in academic research and the challenges facing early-career researchers, with particular attention focused on the postdoctoral experience (Sauermann and Roach, 2012). For example, are imbalances primarily caused by supply-side or demand-side conditions? A 2014 report proposed both supply-side interventions, such as providing better education to graduate students and postdocs about career possibilities, and demand-side measures, such as limiting the length of postdoctoral service and raising minimum salaries (NAS-NAE-IOM, 2014). The evidence from surveys indicates that this is a complex issue. Graduate students and postdocs may have an accurate understanding of the abstract probability of attaining a tenured research position in their fields but may overestimate their own chances (Sauermann and Roach, 2012).

SOURCE (Figure 6-1): Data taken from NSB, 2016.

Even for the minority of researchers in biomedical fields who are eventually able to secure tenure-track faculty positions, the research environment continues to be characterized by hypercompetition. The success rate for NIH grant applications has fallen from 32 percent in 2001 to 18 percent in 2015. The average size of grants has risen in current dollars but has declined in real terms between 1999 and 2015 (NIH, 2015). The success rate at the National Science Foundation has experienced a more modest decline, from 27 percent in 2001 to 20 percent in 2014, with the size of the average award not showing a significant trend (in real dollars) over that period (NSB, 2014a, 2016). As a consequence, faculty members have to spend more time writing grants and less time doing research.
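A rough sense of what the falling success rates mean for grant-writing effort can be obtained with simple arithmetic. The sketch below assumes, purely for illustration, that each application is an independent attempt with the same chance of success, so that the average number of applications per award is the reciprocal of the success rate. Real applications are not independent (resubmissions, varying quality), and the function name is hypothetical.

```python
def expected_applications(success_rate: float) -> float:
    """Mean number of applications per award, assuming independent, identical attempts."""
    if not 0.0 < success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    return 1.0 / success_rate


# Success rates quoted in the text.
rates = {
    "NIH 2001": 0.32,
    "NIH 2015": 0.18,
    "NSF 2001": 0.27,
    "NSF 2014": 0.20,
}

for label, rate in rates.items():
    print(f"{label}: about {expected_applications(rate):.1f} applications per award")
```

Under this simplifying assumption, the quoted NIH decline corresponds to a rise from about 3 to more than 5 applications per award, and the NSF decline to a rise from under 4 to 5.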

While success rates have been declining, external funding has become an important source of coverage for faculty salaries. To be sure, charging faculty salaries to grants is a proper and legitimate expense for federally funded work. And reviewers might question a principal investigator’s commitment to a project if his or her salary allocation is too low. At the same time, the emergence of a situation at some institutions and departments where the salaries of a substantial fraction of the research workforce are heavily or even entirely dependent on grant funding is a relatively recent phenomenon.

A survey undertaken by the Association of American Medical Colleges showed just under half of the salary support for non-MD faculty at responding institutions came from sponsored programs in fiscal 2009 (Goodwin et al., 2011). Aggregate data obscure the fact that conditions may vary greatly for individuals within an institution, with some faculty completely dependent on grant funding. They also mask differences between institutions. For example, 2013 Association of American Medical Colleges data from 72 academic medical centers showed that while most institutions fell in a range where 40 to 60 percent of non-MD faculty salaries were covered by sponsored programs, a minority of institutions (fewer than 10) relied on sponsored programs for up to 70 percent of non-MD faculty salaries (Levine et al., 2015). Anecdotal evidence reveals that reliance on sponsored programs for salary coverage is a considerable source of stress and anxiety at some institutions, particularly for younger faculty (Hellweg, 2015).

Some experts have pointed to the reliance on “soft money” faculty positions supported by grant funding as an important indicator that incentives are not aligned to support high-quality research (Alberts et al., 2014; Stephan, 2012b; Teitelbaum, 2008). The potential drawbacks of such salary arrangements have also been questioned by the NIH leadership (Collins, 2010). The thinking goes that researchers who are dependent on grant funding to support their salaries will be inclined to propose safer research that is more likely to receive funding but less likely to result in significant advances (Stephan, 2012b). This concern was actually raised in a 1960 report of the President’s Science Advisory Committee, which warned of “the need for avoiding situations in which a professor becomes partly or wholly responsible for raising his own salary” (PSAC, 1960).

A study that examined differences between researchers funded by the Howard Hughes Medical Institute, “which tolerates early failure, rewards long-term success, and gives its appointees great freedom to experiment,” and grantees of NIH, “who are subject to short review cycles, predefined deliverables, and renewal policies unforgiving of failure,” appears to bear this out (Azoulay et al., 2011).

As noted above, an analysis of actual research misconduct cases implicates factors related to a hypercompetitive research environment in many of the cases (Davis et al., 2007). In another study conducted through a series of focus groups with early- and mid-career scientists, Anderson et al. (2007b) found that competition in research “can skew this system in unanticipated and perverse ways, with negative consequences for science as well as for the lives and careers of scientists.” Several of the interviewees pointed to a dramatic increase in the competitiveness of research in recent years. As one said, “It’s so much more competitive than it used to be. When we were first starting out, it was more collegial. You gave reagents away freely. Now there’s more at stake. There’s patents at stake. There is getting yourself funded.”

One of the negative consequences of competition cited by scientists is an inducement to engage in careless or detrimental research practices. While none of the interviewees said that they had committed or witnessed misconduct in science, they cited the temptation to behave irresponsibly. As one said, “There is a lot of pressure for people to come out with things in a very short time-frame. The likelihood that corners are cut, is real.” These incentives can operate internationally, as some institutions and governments provide large bonuses to researchers who are able to publish work in certain prestigious international journals. Also, empirical findings have shown a strong positive relationship between the level of competition perceived in an academic department and the likelihood of misconduct being observed by departmental members (Louis et al., 1995).

Appendix D discusses a specific case in which Elizabeth Goodwin, a faculty member at the University of Wisconsin who oversaw a number of graduate students and postdocs, was found to have falsified information contained in a federal grant application she submitted in 2005 (ORI, 2010). No evidence has emerged indicating that Goodwin committed research misconduct in other applications or in her publications. Philip Anderson, a faculty member in Goodwin’s former department, took one of the graduate students into his lab to finish work on her PhD dissertation. Anderson provided his perspective in an interview several years later: “I’ve thought about Betsy a lot through this process…. What she did, I believe, happened because of the extreme pressure we’re all under to find funding” (Allen, 2008). Certainly, this is one opinion, and it is not cited to rationalize or justify misconduct. However, as discussed above, there is empirical and theoretical grounding for concerns about the potentially detrimental effects of competition for resources on the behavior of scientists.

Recalling the discussion from Chapter 1 that described the research enterprise as a complex system, the accumulating evidence seems to suggest that some aspects of this system are not functioning well, at least in some disciplines, with implications for the ability of the system’s stakeholders to foster research integrity. If producing the next generation of highly trained and educated scientists is an important function of the system, then there is reason to question whether current funding policies and the incentives that they create for institutions and individuals are resulting in the right quantity or quality of that output. The structural challenges facing U.S. biomedical research and the resulting perverse incentives and unintended negative consequences have been described by leading scientists (Alberts et al., 2014; Casadevall and Fang, 2012). These scientists have linked structural challenges to incentives affecting whether researchers commit misconduct or engage in DRPs, and they assert that these challenges will not be solved simply through increased funding. While developing approaches to remedy these structural challenges is beyond the scope of this report, the linkages between structural features of the research enterprise such as funding mechanisms, research environments, incentives, and behavior need to be better understood.

THE VALUE AND IMPORTANCE OF RESEARCH ON RESEARCH INTEGRITY

The discussion in this chapter illustrates that the causes of research misconduct and detrimental research practices are complex. The current state of knowledge, while incomplete, supports several propositions:

- Rather than focusing exclusively on fabrication, falsification, and plagiarism, the breadth of research misconduct and detrimental research practices should be taken into account and addressed.

- Efforts need to target more than just bad actors and should attend to the salient features of local organizational environments and settings, as well as to the structural arrangements of research and the incentive structures that confront the various actors in the research enterprise.

- Additional theoretically grounded research is warranted to more completely inform all such efforts. Important areas of focus include why researchers commit misconduct and DRPs—both theoretical and empirical work—as well as the strategies and interventions that could prevent or reduce the incidence of these actions.

These propositions underlie the committee’s Finding C, discussed in Chapter 11, which addresses the need for more knowledge to develop evidence-based approaches to research misconduct and detrimental research practices.