Computational Near-Eye Displays: Engineering the Interface to the Digital World

GORDON WETZSTEIN

Stanford University

Immersive virtual reality and augmented reality (VR/AR) systems are entering the consumer market and have the potential to profoundly impact society. Applications of these systems range from communication, entertainment, education, collaborative work, simulation, and training to telesurgery, phobia treatment, and basic vision research. In every immersive experience, the primary interface between the user and the digital world is the near-eye display. Thus, developing near-eye display systems that provide a high-quality user experience is of the utmost importance.

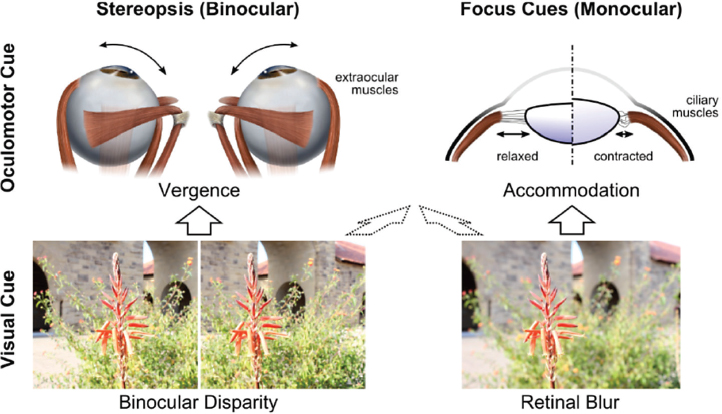

Many characteristics of near-eye displays that define the quality of an experience, such as resolution, refresh rate, contrast, and field of view, have been significantly improved in recent years. However, a significant source of visual discomfort prevails: the vergence-accommodation conflict (VAC), which results from the fact that vergence cues (e.g., the relative rotation of the eyeballs in their sockets), but not focus cues (e.g., deformation of the crystalline lenses in the eyes), are simulated in near-eye display systems. Indeed, natural focus cues are not supported by any existing near-eye display.

Using focus-tunable optics, we explore unprecedented display modes that tackle this issue in multiple ways with the goal of increasing visual comfort and providing more realistic visual experiences.

INTRODUCTION

In current VR/AR systems, a stereoscopic near-eye display presents two different images to the viewer’s left and right eyes. Because each eye sees a slightly different view of the virtual world, binocular disparity cues are created that generate a vivid sense of three-dimensionality. These disparity cues also drive viewers’ vergence state as they look around at objects with different depths in the virtual world.

However, in a VR system the accommodation, or focus state, of the viewer’s eyes is optically fixed to one specific distance. This is because, despite the simulated disparity cues, the microdisplay in a VR system is actually at a single, fixed optical distance. The specific distance is defined by the magnified image of the microdisplay, and the eyes are forced to focus at that distance and only that distance in order for the virtual world to appear sharp. Focusing at other distances (such as those simulated by stereoscopic views) results in a blurred view.

In the physical world, vergence and accommodation work in harmony (see Figure 1), and the systems that drive the vergence and accommodative states of the eyes are neurally coupled.

VR/AR displays artificially decouple vergence and focus cues because their image formation keeps the focus at a fixed optical distance but drives vergence to arbitrary distances via computer-generated stereoscopic imagery. The resulting discrepancy between natural depth cues and those produced by VR/AR displays, the vergence-accommodation conflict, may lead to visual discomfort and fatigue, eyestrain, double vision, headaches, nausea, compromised image quality, and even pathologies in the developing visual system of children.
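The size of the conflict is commonly expressed in diopters (inverse meters), as the difference between the distance to which the eyes converge and the distance at which they must focus. The following Python sketch illustrates this under simplifying assumptions: the fixated point lies straight ahead of the viewer, and the 63 mm interpupillary distance and the function names are illustrative choices, not values taken from this article.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.063) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m (ipd_m is an assumed interpupillary distance)."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))


def conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Vergence-accommodation mismatch in diopters (1/m)."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


if __name__ == "__main__":
    # Hypothetical case: stereo imagery simulates an object at 0.5 m while
    # the magnified microdisplay image sits at a fixed 2.0 m.
    print(vergence_angle_deg(0.5))
    print(conflict_diopters(0.5, 2.0))  # 2.0 D - 0.5 D = 1.5 D
```

In a conventional display the second argument of `conflict_diopters` is constant, so the mismatch grows as simulated objects approach the viewer.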

The benefits of providing correct or nearly correct focus cues include not only increased visual comfort but also improvements in 3D shape perception, stereoscopic correspondence matching, and discrimination of larger depth intervals. Significant efforts have therefore been made to engineer focus-supporting displays.

However, every technology that might support focus cues involves undesirable tradeoffs: compromised image resolution, a larger device form factor, or reduced brightness, contrast, or other important display characteristics. These tradeoffs pose substantial challenges for high-quality AR/VR visual imagery with practical, wearable displays.

BACKGROUND

In recent years a number of near-eye displays have been proposed that support focus cues. Generally, these displays can be divided into the following classes: adaptive focus, volumetric, light field, and holographic displays.

Two-dimensional adaptive focus displays do not produce correct focus cues: the virtual image of a single display plane is presented to each eye, just as in conventional near-eye displays. However, the system is capable of dynamically adjusting the distance of the observed image, either by actuating (physically moving) the screen (Sugihara and Miyasato 1998) or by using focus-tunable optics (programmable liquid lenses). Because this technology only enables the distance of the entire virtual image to be adjusted at once, the correct focal distance at which to place the display will depend on where in the simulated 3D scene the user is looking.

Peli (1999) reviews several studies that proposed the idea of gaze-contingent focus, but I am not aware of anyone having built a practical gaze-contingent, focus-tunable display prototype. The challenge for this technology is to engineer a robust gaze and vergence tracking system in a head-mounted display with custom optics.

A software-only alternative to gaze-contingent focus is gaze-contingent blur rendering (Mauderer et al. 2014), but because the distance to the display is still fixed in this technique it does not affect the VAC. Konrad and colleagues (2016) recently evaluated several focus-tunable display modes in near-eye displays and proposed monovision, in which each eye is optically accommodated at a different depth, as a practical alternative to gaze-contingent focus.

Three-dimensional volumetric and multiplane displays represent the most common approach to focus-supporting near-eye displays. Instead of using 2D display primitives at a fixed or adaptive distance to the eye, volumetric displays either mechanically or optically scan the 3D space of possible light-emitting display primitives (i.e., pixels) in front of each eye (Schowengerdt and Seibel 2006).

Multiplane displays approximate this volume using a few virtual planes generated by beam splitters (Akeley et al. 2004; Dolgoff 1997) or time-multiplexed focus-tunable optics (Liu et al. 2008; Llull et al. 2015; Love et al. 2009; Rolland et al. 2000; von Waldkirch et al. 2004). Whereas implementations with beam splitters compromise the form factor of a near-eye display, temporal multiplexing introduces perceived flicker and requires display refresh rates beyond those offered by current-generation microdisplays.
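The refresh-rate requirement of temporal multiplexing follows from simple arithmetic: each focal plane must be redrawn within every perceived frame. The Python sketch below illustrates this. The 60 Hz per-plane rate, the even spacing of planes in diopters, and the function names are assumptions made for illustration, not parameters of any cited system.

```python
def required_refresh_hz(num_planes: int, per_plane_hz: float = 60.0) -> float:
    """Display refresh rate needed to show every plane once per perceived frame."""
    if num_planes < 1:
        raise ValueError("need at least one focal plane")
    return num_planes * per_plane_hz


def plane_powers_diopters(num_planes: int, near_d: float, far_d: float) -> list[float]:
    """Focal plane positions in diopters, spaced evenly from near_d to far_d
    (even dioptric spacing is an assumption of this sketch)."""
    if num_planes < 1:
        raise ValueError("need at least one focal plane")
    if num_planes == 1:
        return [near_d]
    step = (far_d - near_d) / (num_planes - 1)
    return [near_d + i * step for i in range(num_planes)]


if __name__ == "__main__":
    # Four planes between 3 D (about 0.33 m) and 0 D (optical infinity).
    print(plane_powers_diopters(4, 3.0, 0.0))  # [3.0, 2.0, 1.0, 0.0]
    print(required_refresh_hz(4))              # 240.0
```

Under these assumptions, even a modest four-plane design needs a 240 Hz display and a tunable lens that settles within each subframe.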

Four-dimensional light field and holographic displays aim to synthesize the full 4D light field in front of each eye. Conceptually, this approach allows for parallax over the entire eyebox to be accurately reproduced, including monocular occlusions, specular highlights, and other effects that cannot be reproduced by volumetric displays. Current-generation light field displays provide limited resolution (Hua and Javidi 2014; Huang et al. 2015; Lanman and Luebke 2013), whereas holographic displays suffer from speckle and require display pixel sizes to be on the order of the wavelength of light, which currently cannot be achieved at high resolution for near-eye displays, where the screen is magnified to provide a large field of view.
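The pixel-size requirement for holographic displays can be made concrete with the grating equation. As a sketch, assume a pixelated modulator with pixel pitch $p$ illuminated at normal incidence by light of wavelength $\lambda$. The finest grating it can display has a period of two pixels, so the largest first-order diffraction half-angle is:

```latex
\sin\theta_{\max} = \frac{\lambda}{2p}
```

With illustrative values (not taken from this article) of $\lambda = 532$ nm and $p = 8\,\mu$m, this gives $\theta_{\max} \approx 1.9^\circ$. Only when the pitch shrinks to $p = \lambda$ does the half-angle reach $30^\circ$, which is why wavelength-scale pixels are needed to steer light over a wide range of angles.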

EMERGING COMPUTATIONAL NEAR-EYE DISPLAY SYSTEMS

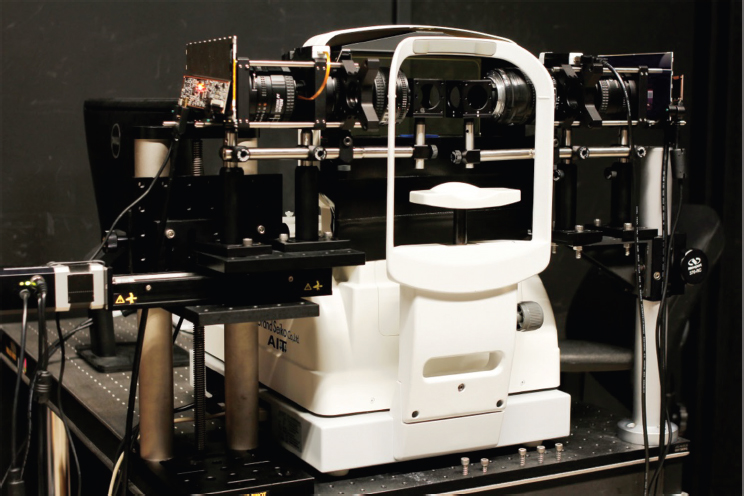

In our work, we ask whether it is possible to provide natural focus cues and to mitigate visual discomfort using focus-tunable optics, i.e., programmable liquid lenses. For this purpose, we demonstrate a prototype focus-tunable near-eye display system (Figure 2) that allows us to evaluate several advanced display modes via user studies.

Conventional near-eye displays are simple magnifiers that enlarge the image of a microdisplay and create a virtual image at some fixed distance to the viewer.
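The fixed distance follows from the thin-lens equation. A minimal Python sketch, assuming an ideal thin lens with the eye placed at the lens (eye relief ignored), is given below. The 40 mm focal length and 38 mm display distance are hypothetical values chosen for illustration.

```python
def virtual_image_distance_m(focal_length_m: float, display_distance_m: float) -> float:
    """Distance from the lens to the magnified virtual image (thin-lens model).

    Derived from 1/f = 1/d_display - 1/d_image for a display inside the focal length.
    """
    if not 0.0 < display_distance_m < focal_length_m:
        raise ValueError("display must sit inside the focal length to form a virtual image")
    return focal_length_m * display_distance_m / (focal_length_m - display_distance_m)


def lateral_magnification(focal_length_m: float, display_distance_m: float) -> float:
    """Ratio of virtual image size to microdisplay size in the same model."""
    if not 0.0 < display_distance_m < focal_length_m:
        raise ValueError("display must sit inside the focal length to form a virtual image")
    return focal_length_m / (focal_length_m - display_distance_m)


if __name__ == "__main__":
    # Hypothetical headset: 40 mm lens, microdisplay 38 mm behind it.
    print(virtual_image_distance_m(0.040, 0.038))  # about 0.76 m
    print(lateral_magnification(0.040, 0.038))     # about 20x
```

In this model, moving the display toward the focal plane pushes the virtual image toward optical infinity, so small mechanical or optical changes shift the image distance substantially.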

Adaptive depth of field rendering is a software-only approach that renders the fixated object sharply while blurring other objects according to their relative distance. When combined with eye tracking, this mode is known as gaze-contingent retinal blur (Mauderer et al. 2014). Because the human accommodation system may be driven by the accommodation-dependent blur gradient, this display mode does not reproduce a physically correct stimulus.
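How much blur such a renderer applies to an out-of-focus object can be approximated with a common small-angle model: the angular diameter of the blur circle is roughly the pupil diameter multiplied by the defocus in diopters. The sketch below assumes this model, a 4 mm pupil, and a display resolution expressed in pixels per degree. None of these values or names come from the cited work.

```python
import math


def blur_diameter_rad(pupil_diameter_m: float,
                      object_distance_m: float,
                      fixation_distance_m: float) -> float:
    """Approximate angular blur-circle diameter (radians) of an object when the
    eye is focused at fixation_distance_m: pupil diameter times defocus in diopters."""
    defocus_d = abs(1.0 / object_distance_m - 1.0 / fixation_distance_m)
    return pupil_diameter_m * defocus_d


def blur_diameter_px(pupil_diameter_m: float,
                     object_distance_m: float,
                     fixation_distance_m: float,
                     pixels_per_degree: float) -> float:
    """Blur-circle diameter converted to display pixels."""
    angle_deg = math.degrees(
        blur_diameter_rad(pupil_diameter_m, object_distance_m, fixation_distance_m))
    return angle_deg * pixels_per_degree


if __name__ == "__main__":
    # Viewer fixates at 2.0 m; how blurred should an object at 0.5 m be drawn
    # on a hypothetical display with 15 pixels per degree?
    print(blur_diameter_px(0.004, 0.5, 2.0, 15.0))
```

The fixated object itself has zero defocus in this model and is therefore rendered sharply.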

Adaptive focus display is a software/hardware approach that changes either the focal length of the lenses or the distance between the microdisplay and the lenses (Konrad et al. 2016). When combined with eye tracking, this mode is known as gaze-contingent focus. In this mode, the magnified virtual image observed by the viewer can be dynamically placed at arbitrary distances, for example at the distance where the viewer is verged (which requires vergence tracking) or at the depth corresponding to their gaze direction (which requires gaze tracking). No eye tracking is necessary to evaluate this mode when the viewer is asked to fixate on a specific object, for example one that moves.
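One control step of such a gaze-contingent focus mode can be sketched as follows: estimate the vergence distance from the tracked vergence angle, then set the tunable lens power so that the virtual image lands at that distance. This is a minimal illustration, not the implementation used in our prototype. It assumes an ideal thin lens with the eye at the lens, symmetric fixation straight ahead, and a hypothetical 63 mm interpupillary distance and 38 mm display distance.

```python
import math


def vergence_distance_m(vergence_angle_deg: float, ipd_m: float = 0.063) -> float:
    """Fixation distance implied by the angle between the two lines of sight."""
    if not 0.0 < vergence_angle_deg < 180.0:
        raise ValueError("vergence angle must be between 0 and 180 degrees")
    half_angle = math.radians(vergence_angle_deg) / 2.0
    return ipd_m / (2.0 * math.tan(half_angle))


def lens_power_diopters(display_distance_m: float, image_distance_m: float) -> float:
    """Thin-lens power that places the virtual image of a display located
    display_distance_m behind the lens at image_distance_m in front of the viewer."""
    if display_distance_m <= 0.0 or image_distance_m <= 0.0:
        raise ValueError("distances must be positive")
    return 1.0 / display_distance_m - 1.0 / image_distance_m


if __name__ == "__main__":
    # Hypothetical tracker reading of 7.2 degrees of vergence.
    target_m = vergence_distance_m(7.2)
    print(target_m)                              # roughly 0.5 m
    print(lens_power_diopters(0.038, target_m))  # lens power to command, in diopters
```

Actuating the microdisplay instead of the lens would use the same relation, solved for the display distance at a fixed lens power.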

Monovision is a common treatment for presbyopia, a condition that often occurs with age in which people lose the ability to focus their eyes on nearby objects. It entails placing lenses with different prescription values for each eye such that one eye dominates for distance vision and the other for near vision.

Monovision was recently proposed and evaluated for emmetropic viewers (those with normal or corrected vision) in VR/AR applications (Konrad et al. 2016).

HOW OUR RESEARCH INFORMS NEXT-GENERATION VR/AR DISPLAYS

Preliminary data recorded for our study suggest that both the focus-tunable mode and the monovision mode could improve conventional displays, but both require optical changes to existing VR/AR displays. A software-only solution (i.e., depth of field rendering) proved ineffective. The focus-tunable mode provided the best gain over conventional VR/AR displays. We implemented this display mode with focus-tunable optics, but it could also be implemented by actuating the microdisplay in the VR/AR headset.

Based on our study, we conclude that the adaptive focus display mode seems to be the most promising direction for future display designs. Dynamically changing the accommodation plane depending on the user’s gaze direction could improve visual comfort and realism in immersive VR/AR applications in a significant way.

Eye conditions, including myopia (nearsightedness) and hyperopia (farsightedness), have to be corrected adequately with the near-eye display, so the user’s prescription must be known or measured. Presbyopic users cannot accommodate, so dynamically changing the accommodation plane would almost certainly create a worse experience for them than the conventional display mode. For these users it is crucial that the display present a sharp image within their accommodation range.
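One way to picture such personalization is as a small adjustment to the focal plane that the display would otherwise choose. The Python sketch below is a simplified illustration under stated assumptions: the prescription is a single spherical value in diopters (negative for myopia), vertex distance and astigmatism are ignored, and a presbyopic user is modeled by a hypothetical range of accommodative demand they can still reach. The function and parameter names are invented for this example.

```python
from typing import Optional, Tuple


def image_plane_diopters(target_d: float,
                         sphere_d: float = 0.0,
                         reachable_demand_d: Optional[Tuple[float, float]] = None) -> float:
    """Dioptric position at which to place the virtual image for one user.

    target_d: accommodative demand an emmetropic viewer would experience
        (1 / fixation distance in meters).
    sphere_d: spherical prescription; an uncorrected myope with -2 D needs the
        image 2 D closer to see it as an emmetrope would.
    reachable_demand_d: optional (min, max) demand the user can accommodate to;
        the target is clamped into this range before the prescription offset.
    """
    demand = target_d
    if reachable_demand_d is not None:
        low, high = reachable_demand_d
        demand = min(max(demand, low), high)
    return demand - sphere_d


if __name__ == "__main__":
    # Myopic user (-2 D) fixating a virtual object at 0.5 m (2 D).
    print(image_plane_diopters(2.0, sphere_d=-2.0))                    # 4.0
    # Presbyopic user who can only reach demands between 0 D and 1 D.
    print(image_plane_diopters(3.0, reachable_demand_d=(0.0, 1.0)))    # 1.0
```

Clamping keeps the image inside the range where a presbyopic user can still see it sharply, at the cost of leaving some residual vergence-accommodation conflict for near objects.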

In summary, a personalized VR/AR experience that adapts to the user, whether emmetropic, myopic, hyperopic, or presbyopic, is crucial to deliver the best possible experience.

REFERENCES

Akeley K, Watt S, Girshick A, Banks M. 2004. A stereo display prototype with multiple focal distances. ACM Transactions on Graphics (Proc. SIGGRAPH) 23(3):804–813.

Dolgoff E. 1997. Real-depth imaging: A new 3D imaging technology with inexpensive direct-view (no glasses) video and other applications. SPIE Proceedings 3012:282–288.

Hua H, Javidi B. 2014. A 3D integral imaging optical see-through head mounted display. Optics Express 22(11):13484–13491.

Huang FC, Chen K, Wetzstein G. 2015. The light field stereoscope: Immersive computer graphics via factored near-eye light field display with focus cues. ACM Transactions on Graphics (Proc. SIGGRAPH) 34(4):60:1–12.

Konrad R, Cooper E, Wetzstein G. 2016. Novel optical configurations for virtual reality: Evaluating user preference and performance with focus. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 1211–1220.

Lanman D, Luebke D. 2013. Near-eye light field displays. ACM Transactions on Graphics (Proc. SIGGRAPH Asia) 32(6):220:1–10.

Liu S, Cheng D, Hua H. 2008. An optical see-through head mounted display with addressable focal planes. Proceedings of the 7th International Symposium on Mixed and Augmented Reality (ISMAR), September 15–18, Cambridge, UK.

Llull P, Bedard N, Wu W, Tosic I, Berkner K, Balram N. 2015. Design and optimization of a near-eye multifocus display system for augmented reality. OSA Imaging and Applied Optics, paper JTH3A.5.

Love G, Hoffman D, Hands D, Gao J, Kirby J, Banks M. 2009. High-speed switchable lens enables the development of a volumetric stereoscopic display. Optics Express 17(18):15716–15725.

Mauderer M, Conte S, Nacenta M, Vishwanath D. 2014. Depth perception with gaze-contingent depth of field. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 217–226.

Peli E. 1999. Optometric and perceptual issues with head-mounted displays. In: Visual Instrumentation: Optical Design and Engineering Principles, ed. Mouroulis P. New York: McGraw-Hill.

Rolland J, Krueger J, Goon A. 2000. Multifocal planes head-mounted display. Applied Optics 39(19):3209–3215.

Schowengerdt B, Seibel E. 2006. True 3-D scanned voxel display using single or multiple light sources. Journal of the Society for Information Display 14(2):135–143.

Sugihara T, Miyasato T. 1998. A lightweight 3-D HMD with accommodative compensation. SID Digest 29(1):927–930.

von Waldkirch M, Lukowicz P, Tröster G. 2004. Multiple imaging technique for extending depth of focus in retinal displays. Optics Express 12(25):6350–6365.