4

Session 3: Machine Learning from Natural Languages

MACHINE LEARNING FROM TEXT: APPLICATIONS

Kathy McKeown, Columbia University

Kathy McKeown, Columbia University, explained that the natural language processing community uses machine learning techniques to extract information from text to solve problems. The framework for these machine learning techniques includes (1) the data, which is often labeled with the intended output; (2) the extraction of features from the text data using natural language techniques; and (3) the prediction of output. According to McKeown, researchers are interested in what type of data is available for learning, which features produce the most accurate predictions, and which machine learning techniques work best—supervised, unsupervised, and distant learning are currently used.

McKeown noted that core natural language processing technology enables part-of-speech tagging, sentence parsing, and language modeling (i.e., a statistical approach to identify word sequence probability). She detailed applications of natural language technology that use (1) interpretation (e.g., information extraction and sentiment analysis), (2) generation (e.g., summarization), and (3) analysis of social media (e.g., Tweets from gang members).
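As a rough illustration of the language modeling idea mentioned above (a statistical estimate of word sequence probability), the following minimal sketch trains a bigram model on a toy corpus. The corpus, the function names, and the choice of add-one smoothing are assumptions made for illustration; they are not taken from the presentation.

```python
from collections import Counter

def train_bigram_model(sentences):
    """Count context words and bigrams over whitespace-tokenized sentences."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens[:-1])             # every token that has a successor
        bigrams.update(zip(tokens, tokens[1:]))  # adjacent word pairs
    return unigrams, bigrams

def sequence_probability(sentence, unigrams, bigrams, vocab_size):
    """Probability of a sentence as a product of add-one-smoothed bigram terms."""
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    prob = 1.0
    for prev, word in zip(tokens, tokens[1:]):
        prob *= (bigrams[(prev, word)] + 1) / (unigrams[prev] + vocab_size)
    return prob

# Hypothetical toy corpus; a real model would be trained on far more text.
corpus = ["the storm hit the coast", "the storm passed", "aid reached the coast"]
unigrams, bigrams = train_bigram_model(corpus)
vocab = {w for s in corpus for w in s.split()} | {"</s>"}

likely = sequence_probability("the storm hit the coast", unigrams, bigrams, len(vocab))
unlikely = sequence_probability("coast the hit storm the", unigrams, bigrams, len(vocab))
print(likely > unlikely)
```

Under these assumptions, the word order seen in training receives a higher probability than a scrambled version of the same words.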

The purpose of information extraction, according to McKeown, is to extract concrete facts from text (i.e., named entities, relations, events) to create a structured knowledge base of facts. Event discovery and linking includes linking documents as well as linking dimension in the text to a real-world entity. The state of the art for the English language is relatively strong for named entities (89 percent accuracy), though there is room for improvement in both relations (59 percent accuracy) and events (63 percent accuracy). Commonly deployed machine learning methods include sequence labeling, neural nets, and distant learning, while features could be linguistic or based on similarity, popularity, gazetteers, ontologies, or verb triggers.

McKeown pointed out that since 2006, there has been substantial progress in entity discovery and linking as a result of the Defense Advanced Research Projects Agency (DARPA) Deep Exploration and Filtering of Text (DEFT) program.1 Additional short-term goals for entity discovery and linking include improved name tagging, smarter collective inference, and resolution of true aliases and handles used as entity mentions. She added that more long-term goals would increase the number of languages (to 3,000) and entities (to 10,000), eliminate the use of training data, incorporate multimedia and streaming modes, and improve context awareness.

___________________

1 For information about the DEFT program, see DARPA, “Deep Exploration and Filtering of Text (DEFT),” last updated November 13, 2015, https://opencatalog.darpa.mil/DEFT.html. See also the section on Boyan Onyshkevych’s presentation in Chapter 5 of this proceedings.

McKeown defined sentiment analysis as the ability to identify sentences that convey opinions, as well as the polarity of those opinions. Sentiment analysis allows for the detection of online influencers, agreements or disagreements in dialogues, and online conflicts, as well as for the understanding of argumentation. Methods for sentiment analysis are primarily supervised: (1) manually labeled or self-labeled data collections; (2) conditional random fields, support vector machines, and joint learning approaches; (3) recurrent neural networks; and (4) sentiment of words, word categories, parts of speech, and parse features. In the future, McKeown hopes that cross-lingual transfer of sentiment and sentiment toward targets will be possible.

Natural language processing is especially important when disasters happen around the world in places where researchers or aid workers neither know the language nor have access to machine translation, McKeown explained. The DARPA Low Resource Languages for Emergent Incidents (LORELEI) program2 analyzes the subjective posts of everyday people and tries to identify sentiment and emotion in low resource languages. Cross-lingual sentiment models are used to leverage parallel and comparable corpora in low resource languages—multiple transfer model scenarios are created, target languages are engaged, and transfer models are created. These models, then, can be used to create sentiment analysis for languages with no labeled data. McKeown noted that the models perform well in English (80–85 percent) to detect polarity; however, when cross-lingual transfer is done, the success rate is much lower. For cross-lingual transfer, it is 40–50 percent; for sentiment toward targets, it is 59 percent.

McKeown noted that summarization supports browsing and filtering of news, journal articles, emails, and product reviews by enabling quick determination of the relevance of these sources. Methods for summarization are typically extractive: sentences that are representative of the larger document are selected before unsupervised or supervised learning methods are applied. McKeown warned that the resulting summaries are not always coherent or accurate. If an abstractive method is used instead, pieces of the selected sentences are edited, compressed, or fused. There has been quite a bit of success with abstractive approaches, but they still need improvement. McKeown explained that the next step in summarization involves monitoring events over time using streaming data. For example, during disasters such as hurricanes or power outages, it is useful to take in relevant documents every hour and generate updates. Each disaster has a language model and geographic features. She added that summarization of personal experience from blogs or online narrative can also be used during a disaster and is a challenge for the next 5 years. Neural networks are a methodology that is just now being applied to summarization and holds promise for the future. The upcoming Intelligence Advanced Research Projects Activity (IARPA) Machine Translation for English Retrieval of Information in Any Language (MATERIAL) program will conduct search and summarization over low resource languages.
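To make the extractive approach concrete, here is a minimal sketch that selects the sentence most representative of a document. It assumes a simple unsupervised heuristic (average frequency of content words) that is not described in the presentation; the stopword list, function names, and sample text are all hypothetical.

```python
import re
from collections import Counter

# Small illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are",
             "was", "were", "on", "for", "by"}

def split_sentences(text):
    """Split on whitespace that follows sentence-final punctuation."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def content_words(sentence):
    """Lowercased word tokens with stopwords removed."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]

def extractive_summary(text, num_sentences=1):
    """Return the highest-scoring sentences, kept in document order."""
    sentences = split_sentences(text)
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    return [sentences[i] for i in sorted(ranked[:num_sentences])]

document = (
    "The hurricane made landfall on the coast overnight. "
    "Power outages followed the hurricane across the coast. "
    "A local bakery reopened in the morning."
)
summary = extractive_summary(document, num_sentences=1)
print(summary)
```

Because sentences are copied verbatim, a summary built this way can read as disjointed, which is consistent with the coherence concern raised above.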

The third application McKeown discussed was a joint project between the social work and natural language processing communities to analyze tweets from gang members in Chicago.3 This partnership was especially important because the qualitative analysis and annotation would not have been possible without the social workers’ knowledge of language commonly used by gang members. A part-of-speech tagger and bilingual dictionary were created before the tweets could be classified and labeled as either “aggression” or “loss.” Researchers observed that violence escalated after gang members taunted one another on social media. McKeown hopes that this case study will lead to increased community intervention to defuse violence when aggression is detected. She noted that neural network models are needed to exploit large unlabeled data sets and wondered if these methods could be used to detect other instances of violence from nonstandard language.

McKeown’s last topic of discussion was the identification of fake news through classification of statements and articles. She described a system that currently identifies fake news accurately 65 percent of the time, so there is more work to be done in this area as well. McKeown concluded with a discussion of technology capabilities over the next 5 or more years. She explained that while the natural language processing community has strong capabilities for English and formal text, it needs to improve interpretation, analysis, and generation from multi-modal sources, informal texts, and streaming data. It is also important to be able to do learning without big data (e.g., in the case of low resource languages); better understand machine learning, discourse, context, and applications in multi-lingual environments without machine translation; fund research in robust and flexible language generation; and explore new application areas such as fake news, cyberbullying, and inappropriate content.

___________________

2 For more information about the LORELEI program, see B. Onyshkevych, “Low Resource Languages for Emergent Incidents (LORELEI),” DARPA, https://www.darpa.mil/program/low-resource-languages-for-emergent-incidents, accessed August 24, 2017. See also the section on Boyan Onyshkevych’s presentation in Chapter 5 of this proceedings.

3 T. Blevins, R. Kwiatkowski, J. Macbeth, K. McKeown, D. Patton, and O. Rambow, 2016, Automatically processing tweets from gang-involved youth: Toward detecting loss and aggression, pp. 2196-2206 in Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical, December 11–17, available at http://aclweb.org/anthology/C/C16/.

Jay Hughes, Space and Naval Warfare Systems Command, remarked that a common dictionary is needed to avoid miscommunication about concepts that different people describe with different words. McKeown responded that the lack of a common vocabulary is of interest to the natural language processing community, which wants to capture cultural background, as well as perspective and ideology, to generate and understand language. A participant asked if fake news should be considered a computational linguistics problem, a graph analytics problem, or a natural language processing problem. McKeown said that it is a difficult problem, and a solution will likely require all technologies that can be brought to bear.

DEEP LEARNING FOR NATURAL LANGUAGE PROCESSING

Dragomir Radev, Yale University

Dragomir Radev, Yale University, noted that there has been a full-scale revolution in neural networks owing to the large amount of textual data (e.g., email, social media, news, spoken language) and the powerful hardware that are available. Radev remarked that many of the most fundamental natural language processing tasks are classification problems—for example, optical character recognition, spelling correction, part-of-speech tagging, word disambiguation, parsing, named entity segmentation and classification, sentiment analysis, and machine translation.

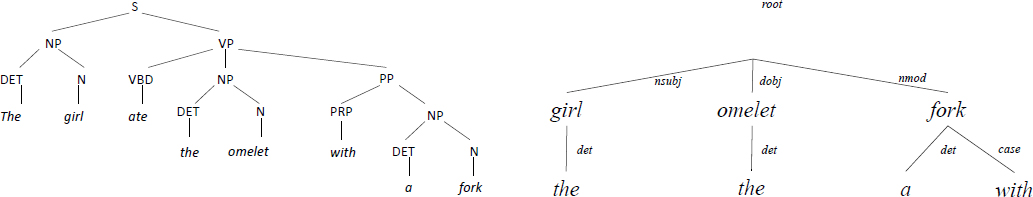

Radev described the traditional natural language processing pipeline. Words in a sentence are tagged with parts of speech and then parsed based on syntactic constituency or dependency (see Figure 4.1). To understand language, Radev explained, it is necessary to perform semantic analysis. After a sentence is converted into a logical form, world knowledge is used to perform inference and reach a conclusion about meaning. Current natural language processing methods include (1) vector semantics for dimensionality reduction or compositionality; (2) supervised learning using deep neural networks; and (3) learning architectures such as recurrent neural networks, long short-term memory networks, attention-based models, generative adversarial networks, reinforcement learning, and off-the-shelf libraries. Radev explained that, with vector semantics, words can be represented as vectors based on correspondence to nearby words, and meaning can be represented in vector form. He noted that this method also works when dealing with multilingual text. Compositional meaning can be expressed as a sum of vectors whereas non-compositional meaning (e.g., idioms) cannot.
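The idea of representing compositional meaning as a sum of word vectors can be sketched as follows. The three-dimensional embeddings here are hand-picked, hypothetical values chosen only for illustration; real embeddings are learned from the contexts in which words occur and have many more dimensions.

```python
import math

# Hypothetical embeddings, hand-picked for illustration (not learned from data).
EMBEDDINGS = {
    "red":    [0.9, 0.1, 0.0],
    "car":    [0.1, 0.9, 0.1],
    "truck":  [0.2, 0.8, 0.2],
    "banana": [0.1, 0.0, 0.9],
}

def add_vectors(*vectors):
    """Compose a phrase vector as the element-wise sum of its word vectors."""
    return [sum(components) for components in zip(*vectors)]

def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

red_car = add_vectors(EMBEDDINGS["red"], EMBEDDINGS["car"])
red_truck = add_vectors(EMBEDDINGS["red"], EMBEDDINGS["truck"])

sim_close = cosine(red_car, red_truck)           # two similar phrases
sim_far = cosine(red_car, EMBEDDINGS["banana"])  # an unrelated word
print(sim_close > sim_far)
```

With these toy values, the two phrase vectors point in nearly the same direction, while the unrelated word does not. A sum of this kind would not recover the meaning of an idiom, which is the non-compositional case noted above.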

Radev defined deep learning as neural networks with multiple layers and non-linearities. Depending on the number of layers in the neural network, deep learning can be used as a simple classifier or to learn more complex features. He added that deep learning works well with high-performance computers using graphics processing units and with large data sets. He shared that industry is investing billions in deep learning, in part because it has led to a significant improvement on many natural language processing tasks, such as a 30 percent decrease in word error rate for speech recognition. Radev next highlighted multi-layer perceptrons, which can be used as classifiers. They are universal function approximators in the sense that they can compute any function that is consistent with the training data. The hidden layers between the input layer and the output layer are tasked with finding features of interest.
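A minimal sketch of a multi-layer perceptron forward pass is shown below. It assumes one hidden layer with ReLU non-linearities and uses hand-set weights (not learned ones) so that the network computes XOR, a function that no single linear layer can represent. All names and weight values are illustrative assumptions.

```python
def relu(x):
    """Rectified linear unit, a common non-linearity."""
    return max(0.0, x)

def identity(x):
    return x

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ inputs + b), row by row."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hand-set parameters (assumed for illustration; normally found by training).
HIDDEN_W = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_B = [0.0, -1.0]
OUT_W = [[1.0, -2.0]]
OUT_B = [0.0]

def mlp_xor(x1, x2):
    """Input layer -> hidden layer (ReLU) -> linear output."""
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUT_W, OUT_B, identity)[0]

predictions = {(a, b): mlp_xor(a, b) for a in (0, 1) for b in (0, 1)}
print(predictions)
```

The two hidden units act as intermediate features (roughly "at least one input is on" and "both inputs are on"), which the output layer then combines.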

Radev gave a brief overview of some important work in the natural language processing and related communities:

- AlexNet. A neural network architecture used for computer vision.

- Embeddings for words. Used to represent singular and plural words, comparative and superlative adjectives, and male and female words, for example.

- Autoencoder. The simplest neural network that can perform dimensionality reduction; when the network is trained, the hidden dimensions find principal components for the lower dimensional representations of the data.

- Embeddings for word senses. Uses vectors to represent multiple meanings associated with individual words.

- Recurrent neural networks. Used to represent and make inferences about sequences of words, such as sentences.

- Long short-term memory networks. Addresses challenges with vanishing or exploding gradients in recurrent neural networks by controlling the flow of information through the network, which enables the modeling of long-distance dependencies. Useful for language identification, paraphrase detection, speech recognition, handwriting recognition, and music composition, for example.

- Attention-based summarization. Through the use of an attention mechanism, text can be processed and sentences simplified, enabling progress on tasks such as summarization.
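The attention mechanism in the last item of the list above can be sketched in a few lines. The presentation summary does not specify a formulation, so this example assumes plain dot-product attention with a softmax over the scores; the vectors and function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax: non-negative weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Dot-product attention: weight each value by how well its key matches the query."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dims = len(values[0])
    context = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dims)]
    return weights, context

# Hypothetical keys and values for three input positions (e.g., three words).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [4.0, 0.0]

weights, context = attend(query, keys, values)
print(weights, context)
```

In this toy case the second position receives almost no weight because its key is orthogonal to the query, so the resulting context vector is dominated by the first and third positions.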

Radev described the challenges that remain in natural language processing, including recognition of non-compositionality, incorporation of world knowledge, semantic parsing, discourse analysis, contextual comprehension, user modeling, database systems access, dialogue modeling, and text generation. There are also challenges with adversarial data when fake examples confuse the classifier.

Joseph Mundy, Vision Systems, Inc., asked why the attempts to introduce world knowledge in natural language processing have struggled to date. Radev noted that manually built ontologies of world knowledge are gradually fading, and the future is in automatically learned resources. Tom Dietterich, Oregon State University, commented that automatically structuring the architecture is important since deep networks require writing a program and that it is important to identify useful models and reusable program components for this new style of differentiable programming.

In conclusion, Radev encouraged the intelligence community to focus on the following research areas:

- Speech analysis,

- Massive multilinguality,

- One-shot learning,

- Understanding short texts,

- Interpretability of models,

- Deep learning personalization,

- Trust analytics, and

- Ethical language processing.

He also highlighted potential enabling technologies such as the following:

- Integrating deep learning and reinforcement learning,

- Automating architecture learning,

- Hyperparameter searching,

- Improving generative models,

- Using memory-based networks and neural Turing machines,

- Semi-supervised learning,

- Mixing and matching architectures, and

- Adopting privacy-preserving models.

MACHINE LEARNING FROM CONVERSATIONAL SPEECH

Amanda Stent, Bloomberg

Amanda Stent, Bloomberg, explained that conversation is a complex problem because while there are accepted rules that conversation participants follow, there are also myriad choices that can be made by these participants. Speech conversation researchers use advanced analytics and machine learning methods but are constrained by a lack of access to data appropriate for the complexity of the problem and thus sometimes work on artificial problems or with artificial data. As a result, according to Stent, speech and dialogue are not solved problems, and a divide exists between research and industrial application in this area.

She said that while conversation is often thought to occur between two or more humans, human–computer conversation is becoming more prevalent, and its most common form is slot-filling dialogue. Conversation is different from text or speech processing because (1) it is collaborative; (2) its contributions are hearer-oriented, tentative, and grounded; and (3) it is incremental, multi-functional, and situated (i.e., there is always other context that must be taken into account). Stent noted that much research in conversation is focused on speech recognition uncertainty and errors because computer systems cannot yet handle these types of miscommunications.
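Slot-filling dialogue, mentioned above, can be illustrated with a minimal rule-based sketch: the system keeps asking until every slot required by the task has a value. The flight-booking task, slot names, patterns, and prompts are hypothetical, and a real system would use a learned language understanding component rather than regular expressions.

```python
import re

# Hypothetical slots for a toy flight-booking task, with naive extraction patterns.
SLOT_PATTERNS = {
    "origin": re.compile(r"\bfrom (\w+)"),
    "destination": re.compile(r"\bto (\w+)"),
    "day": re.compile(r"\bon (\w+)"),
}
PROMPTS = {
    "origin": "Where are you leaving from?",
    "destination": "Where would you like to go?",
    "day": "What day would you like to travel?",
}

def update_slots(slots, utterance):
    """Fill any slot whose pattern matches the user's utterance."""
    text = utterance.lower()
    for name, pattern in SLOT_PATTERNS.items():
        match = pattern.search(text)
        if match:
            slots[name] = match.group(1)
    return slots

def next_prompt(slots):
    """Ask for the first missing slot, or confirm once all slots are filled."""
    for name in SLOT_PATTERNS:
        if name not in slots:
            return PROMPTS[name]
    return "Booking a flight from {origin} to {destination} on {day}.".format(**slots)

slots = {}
update_slots(slots, "I need a flight from Boston to Denver")
first_reply = next_prompt(slots)
update_slots(slots, "on Friday")
final_reply = next_prompt(slots)
print(first_reply)
print(final_reply)
```

The sketch handles only a single domain and ignores recognition errors and wider context, which are the kinds of limitations discussed in this section.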

Stent described three contexts in which machine learning methods would be useful to study conversation: exploration, understanding, and production. For instance, government agencies may be interested in the exploration component because it supports off-line and real-time data mining and analytics to identify issues with products or services. The machine learning methods that focus on understanding conversation support more advanced analytics and could be used to identify where a conversation veers off track, for example. Methods for production, on which researchers tend to focus most of their time, learn conversational agent models and task models. Across industry, these machine learning methods may be used for call center analytics, advertisement placement, customer service analytics, and problematic dialogue identification.

Stent added that there is a large gap between what researchers do and what industry does in the context of building conversational agents, which will hopefully begin to be addressed by the technology capabilities discussed in this workshop. She listed platforms that could serve as examples on which to build, including Microsoft Cognitive Services, Facebook, Slack, Twitter, Reddit, and various open source packages (e.g., rasa.ai and opendial). Evaluation of such systems is based on retrieval (for exploration), precision and recall (for understanding), and task completion and user satisfaction (for production). According to Stent, current threads of research in conversation include domain adaptation for dialogue state tracking and “end-to-end” models for chat dialogue, which predict the next utterance in a conversation, given the previous.
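For reference, the precision and recall measures used to evaluate understanding can be computed as in the following minimal sketch. The example data (indices of dialogue turns flagged as problematic) are hypothetical.

```python
def precision_recall(predicted, gold):
    """Precision = correct predictions / all predictions;
    recall = correct predictions / all gold items."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    return precision, recall

# Hypothetical indices of dialogue turns flagged as problematic.
predicted_turns = [2, 5, 7, 9]   # flagged by the system
gold_turns = [2, 5, 8]           # flagged by human annotators

precision, recall = precision_recall(predicted_turns, gold_turns)
print(precision, recall)
```

Here two of the four system flags are correct (precision of 0.5), and two of the three annotated turns are found (recall of about 0.67).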

Stent emphasized that there are still many large problems in conversation. No general dialogue engine yet exists; an integrated model of comprehension and production at all levels of linguistic processing is needed. Researchers are also still addressing basic architectural and modeling questions, which is very difficult to do with a limited amount of data. While a small number of large companies may have an abundance of data, these data may be of little use to researchers, either because they are uninteresting or because they may contain personally identifiable information.

To advance innovation in conversation, Stent stated that sponsors are needed to support further research on machine learning for conversational analytics; this would give the conversation community access to more problems and more data. In the realm of producing conversational agents, Stent hopes to move beyond single-domain slot filling and context-free chat to the areas of situated, negotiation, and perpetual dialogue, for instance. The community could also expand slot-filling dialogue with more tasks, multiple domains, or multiple genres. Generally, Stent believes that methods combining advanced machine learning neural networks with programmatic control would be useful. She also encouraged the use of methods that include a “human-in-the-loop” so as to collect data and train the models more quickly. Further innovation could come from providing the community with shared portals for evaluation and crowdsourcing of data. Stent hopes that work on world knowledge induction and situational awareness will also be sponsored in the United States, as such work is already under way abroad. Stent would like to see researchers collaborate on issues of data availability and privacy.

Regarding the issue of data availability, a participant noted that some of the large companies that have access to an abundance of data may have more interesting data than we realize; the data may simply be inaccessible. Dietterich asked if planning technology is essential for the study of dialogue. Stent responded that there are many applications for which planning is crucial for the task level of the conversation. However, at the conversation level, Stent remains unconvinced that planning is necessary. Rama Chellappa, University of Maryland, College Park, asked if there will ever be a system that will convert a native language in real time so that everyone else in a country (e.g., India, which has 30 languages) can understand it. Stent responded that the Interspeech Sexennial Survey4 estimated that such progress would be unlikely before the year 2050.

___________________

4 Moore, R.K., and R. Marxer, 2016, Progress and prospects for spoken language technology: Results from four sexennial surveys, Proceedings of INTERSPEECH, September 8-12, https://pdfs.semanticscholar.org/99e8/7769b1d2b27f09012cdf31ea550d00be4c22.pdf.