7

Assessing STEM Learning among English Learners

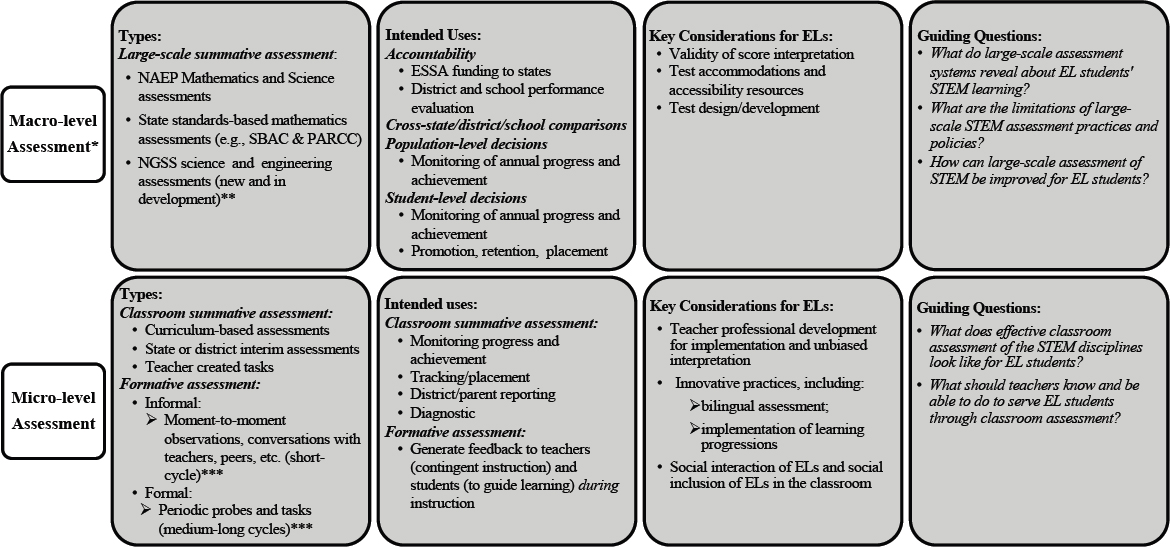

This chapter discusses the role that assessment plays or potentially can play in ensuring English learners’ (ELs’) successful access to science, technology, engineering, and mathematics (STEM). Assessment of STEM subjects, as with other academic areas, can be separated into two broad assessment approaches. Measurement-driven (i.e., macro-level) assessment is used summatively for large-scale accountability of student performances and for evaluating learning across broad intervals (e.g., annual progress). Performance data-driven (i.e., micro-level) assessment can be used for summative purposes at the classroom level, or can provide formative feedback to inform teaching and learning as it happens (Black, Wilson, and Yao, 2011; see Figure 7-1).

Within these broad assessment approaches, the discussion addresses several challenges in EL testing practice and policy. The first challenge concerns large-scale assessment and arises from the fact that tests are administered through language. Professional organizations have consistently recognized that scores on tests confound, at least to some extent, proficiency in the content being assessed and proficiency in the language in which that content is assessed (American Educational Research Association, American Psychological Association, and National Council on Measurement in Education, 2014). This limitation, which is always a concern in the testing of any student population, is especially serious for ELs. While there is a wealth of information on the academic achievement of ELs on tests from large-scale assessment programs, most current testing practices with ELs are ineffective in eliminating limited proficiency in the language of testing as a factor that negatively affects these students’ performance. This in turn limits the extent to which appropriate generalizations can be made about ELs’ academic achievement based on test scores.

* Black, P., Wilson, M., and Yao, S. Y. (2011). Road maps for learning: A guide to the navigation of learning progressions. Measurement: Interdisciplinary Research and Perspectives, 9(2-3), 71-123.

** For example, Washington Comprehensive Assessment of Science (operational 2017-18), California Science Test (operational Spring 2019), Massachusetts Comprehensive Assessment System. See also, National Research Council (2014). Developing Assessments for the Next Generation Science Standards. Washington, DC: The National Academies Press.

*** Wiliam, D. (2006). Formative assessment: Getting the focus right. Educational Assessment, 11(3-4), 283-289.

The second challenge concerns classroom summative assessment and formative assessment—assessment for learning. The past decade has witnessed a tremendous increase in the number of investigations and publications that examine how teachers can obtain information about their students’ progress and, based on that information, adjust their teaching to ensure that learning goals are met. Classroom summative assessment includes more frequent monitoring of student progress on standardized measures such as state or district interim assessments, implementation of commercial curriculum-based assessments, or teacher-made end-of-unit tests. Formative assessment entails generating feedback on student learning using formal activities (such as giving quizzes or assignments that involve the entire class and that are carried out in a purposeful and planned manner) and/or informal activities (such as requesting participation in classroom discussions, or observing student-to-student discussions and asking questions to probe student understanding of the topic).

Much of the research on classroom summative and formative assessment has been conducted without considering linguistic diversity in the classroom. With a few exceptions, this research is mostly silent about the linguistic factors that may shape the effectiveness of formative assessment, or it implicitly assumes that all students in a class are equally proficient in the language of instruction. As a consequence, more research is needed to determine the extent to which current knowledge on formative assessment applies to linguistically diverse classrooms.

The chapter has two main sections. The first examines what is known about ELs on STEM large-scale assessments, the limitations of available information on EL achievement, and the factors that contribute to these limitations. The second section examines what is known about ELs and classroom assessment practices for the STEM disciplines, including teacher preparation and credentialing.

ENGLISH LEARNERS IN LARGE-SCALE STEM ASSESSMENT PROGRAMS

Legal mandates to include ELs in large-scale assessment programs are intended to ensure that states produce indicators of academic achievement for ELs. The quality of such indicators depends, to a large extent, on the ability of assessment systems to support these students in gaining access to the content of items even though they are still developing proficiency in the language in which they are tested. It also depends on the ability of assessment systems to treat this developing proficiency as critical to making valid interpretations of these students’ test scores.

Test scores from assessment programs consistently show that ELs lag behind their non-EL counterparts in science and mathematics achievement (see Chapter 2). Multiple factors may contribute to this achievement gap; among them are the exclusion of students who have become proficient in English from the EL subgroup and, more importantly, the limited opportunities ELs have to benefit from STEM instruction delivered in a language that they are still developing. This section focuses on the limited effectiveness of assessment systems in addressing the complexity of EL student populations in a consistent and cohesive manner (for a review, see Bailey and Carroll, 2015).

Participation of ELs in National and State Assessment

The inclusion of ELs in large-scale assessment programs has been shaped by changes in legislation and by different ways of interpreting and implementing that legislation. The No Child Left Behind Act (NCLB),1 a reauthorization of the Elementary and Secondary Education Act (ESEA),2 required schools to meet growth targets and to report their status regarding students’ progress toward learning English according to English proficiency standards aligned to academic standards. The goal of this effort was to align language proficiency standards under Title III with the language needed to learn academic content under Title I. A potentially unfortunate consequence of these requirements is that they may have unintentionally promoted practices that do not distinguish between developing English as a second language and learning the formal and academic aspects of English encountered in content areas.

States were required to adopt standards that targeted knowledge and skills in mathematics and English language arts deemed necessary to access and succeed in college (e.g., Common Core State Standards [National Governors Association Center for Best Practices and Council of Chief State School Officers, 2010]). As part of the American Recovery and Reinvestment Act,3 Race to the Top—a federal grant intended to promote innovation in education at the state and local levels (U.S. Department of Education, 2017)—funded two state assessment consortia, the Partnership for Assessment of Readiness for College and Careers (PARCC) and the Smarter Balanced Assessment Consortium (Smarter Balanced). These two assessment consortia are required to administer and provide reports on assessments tied to state standards in mathematics and English/language arts once a year in Grades 3–8 and once in high school.

___________________

1 No Child Left Behind Act of 2001. Public Law 107-110.

2 Elementary and Secondary Education Act of 1965. Public Law 89-10.

3 American Recovery and Reinvestment Act of 2009. Public Law 111-5.

Along with the assessment consortia and their focus on mathematics, four major recent developments have helped define the current assessment landscape for ELs as it concerns STEM broadly. The first is the Every Student Succeeds Act (ESSA),4 the latest reauthorization of ESEA, which includes both an indicator of progress in English language proficiency and indicators of academic content achievement within the same accountability system. ESSA also requires states to report on the languages most commonly used by their ELs and to make efforts to assess students in those languages (see Solano-Flores and Hakuta, 2017).

The second development is the creation, by states and consortia, of new English language development or proficiency (ELD/P) standards that attempt to align with the uses of language in the most recent academic content standards, along with newer ELD/P assessments that match these standards (e.g., WIDA Consortium World-Class Instructional Design Assessment, 2007). These assessment efforts have opened the possibility for states to make sound decisions about identifying and supporting ELs based on aspects of language that are relevant to learning content (Council of Chief State School Officers, 2012). They may also help educators meet the academic language demands inherent in college and career readiness (see Frantz et al., 2014; Valdés, Menken, and Castro, 2015).

The third recent development in STEM education is the Next Generation Science Standards (NGSS), which set expectations for the knowledge and skills of students in Grades K–12. The NGSS provide a conceptual organization for large-scale assessment frameworks (NGSS Lead States, 2013). Whether NGSS-based assessments give sufficient consideration to ELs’ needs will depend on the extent to which their development addresses the intersection of science content and its linguistic demands (see Lee, Quinn, and Valdés, 2013).

Finally, a fourth important recent development relevant to ELs is the requirement for states to formalize college- and career-readiness expectations for their students. ESSA gives states more flexibility in designing their accountability systems, which allows more states to refine those systems to include college readiness (see Council of Chief State School Officers, 2012). An important outcome of this development is the link between the Common Core standards and the criteria colleges use to make placement decisions for developmental courses. For example, about 200 colleges are using Smarter Balanced high school test scores on the Common Core (including mathematics) as part of their measures for deciding whether students are ready for credit-bearing courses or need to take developmental courses (Smarter Balanced Assessment Consortium, 2017a).

___________________

4 Every Student Succeeds Act of 2015. Public Law 114-95.

Limitations of Current Large-Scale Testing Policies and Practices

Low performance of ELs on large-scale tests can be regarded as reflecting the challenges inherent in learning in a second language, living in poverty, receiving inadequate support to learn both English and academic content, and having limited opportunities to learn. Performance differences between ELs and non-ELs are further complicated by the routine practice of excluding former ELs who have gained proficiency in English from the EL reporting subgroup. This creaming from the top lowers the reported performance of the EL subgroup and exacerbates differences between ELs and non-ELs (Saunders and Marcelletti, 2013). At the same time, including former ELs in the EL subgroup masks the performance of those ELs who have not yet gained proficiency in English. Both ways of defining the subgroup yield important and useful comparisons, but they address different questions of importance to educators and policy makers, as well as to parents and students.
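How much the reporting definition matters can be illustrated with a small calculation. The sketch below uses invented scale scores (not data from any assessment program) and computes the EL/non-EL gap two ways: counting only current ELs in the subgroup, and counting all students who were ever classified as ELs. The group names and numbers are hypothetical.

```python
from statistics import mean

# Hypothetical scale scores; illustrative values only, not real data.
current_els = [410, 425, 430, 445, 450]      # students still classified as ELs
former_els = [505, 515, 520, 530]            # reclassified as English proficient
never_els = [500, 510, 520, 525, 535, 540]   # students never classified as ELs

def gap(subgroup, comparison):
    """Comparison-group mean minus subgroup mean."""
    return mean(comparison) - mean(subgroup)

# Definition 1: subgroup = current ELs only; former ELs are counted as non-ELs.
gap_current_only = gap(current_els, never_els + former_els)
# Definition 2: subgroup = ever-ELs (current + former) versus never-ELs.
gap_ever_el = gap(current_els + former_els, never_els)

print(f"Gap, current ELs only: {gap_current_only:.1f}")
print(f"Gap, ever-ELs:         {gap_ever_el:.1f}")
```

With these invented numbers, the same students produce a gap of 88 points under the first definition and about 52 points under the second, which is why the two comparisons answer different questions.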

In addition to those sources of low performance in large-scale assessment tests, a discussion of STEM assessment for ELs needs to take into consideration multiple limitations in current testing policies and practices: (1) EL identification and classification practices; (2) the validity of generalizations of test scores for ELs; (3) the process of test development, review, and adaptation; (4) the use of testing accommodations; and (5) the reporting and documentation of ELs’ performance and test scores.

Identifying and Classifying ELs

Decisions relevant to STEM assessment for ELs are affected by the process of identifying students as ELs and determining their level of proficiency in English. Results from this process determine who is tested and how (e.g., the kinds of testing accommodations ELs receive when they take tests in large-scale content assessment programs). Legal definitions of English language learners (e.g., the NCLB definition of students who speak a language other than English at home and who may not benefit fully from instruction due to limited proficiency in the language of instruction) help states and school districts make the classification decisions needed to comply with legal mandates concerning ELs. Yet these definitions are not technical and may produce erroneous classifications: some students may be wrongly classified as ELs, while some ELs may fail to be identified (Solano-Flores, 2009; see also National Academies of Sciences, Engineering, and Medicine, 2017). Because states apply different criteria in implementing legislation regarding the definition of ELs, whether a student is regarded as an EL depends, at least to some extent, on the state in which that student lives. Remarkable collaborative efforts involving states and other stakeholders have recently been undertaken to support states in developing a statewide, standardized definition of ELs (Linquanti et al., 2016). Given the new requirements in the Every Student Succeeds Act for states to adopt standardized, statewide EL entrance and exit procedures and criteria, more states are moving to adopt entrance and exit criteria that focus squarely on the English language proficiency construct and that can be applied consistently by schools and districts across the state.

To complicate matters, practices for testing, and for reporting and using information on English proficiency, are not sensitive to the tremendous heterogeneity of EL populations. An important source of this heterogeneity is, of course, the wide diversity of ELs’ first languages (while Spanish is the first language of the vast majority of ELs in the United States, ELs use hundreds of other languages). However, the kind of linguistic heterogeneity that is perhaps most important in the assessment of ELs is the variation across students in oral language, reading, and writing skills. This variation exists both in English and in students’ first languages, and it is evident even among speakers of the same language. Failure to address this diversity properly greatly undermines the effectiveness of any action intended to support ELs.

These differences are not captured by overall categories of English proficiency, which fail to recognize the strengths a given EL student may have in English. While assessment systems may report English proficiency separately for different language modes (i.e., listening, speaking, reading, writing), this information is not necessarily available to educators or to the professionals in charge of making placement or testing accommodation decisions for STEM assessment, or it is not presented in ways that teachers can easily interpret and use during STEM instruction (see Zwick, Senturk, and Wang, 2001).
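A minimal sketch of how an overall category can hide different domain profiles follows. It assumes a hypothetical 1 to 6 proficiency scale and an equally weighted composite; operational English proficiency assessments may weight domains differently, and the students and threshold are invented for illustration.

```python
from statistics import mean

DOMAINS = ("listening", "speaking", "reading", "writing")

# Hypothetical proficiency levels on an assumed 1-6 scale; not real data.
students = {
    "Student A": {"listening": 5, "speaking": 5, "reading": 2, "writing": 2},
    "Student B": {"listening": 2, "speaking": 3, "reading": 5, "writing": 4},
}

def composite(profile):
    # Assumption: equal weights across domains.
    return mean(profile[d] for d in DOMAINS)

def strengths(profile, threshold=4):
    """Domains at or above an assumed threshold level."""
    return [d for d in DOMAINS if profile[d] >= threshold]

for name, profile in students.items():
    print(name, composite(profile), strengths(profile))
```

Both students receive the same composite (3.5), yet one is strongest in oral language and the other in literacy, a difference that matters for choosing supports during STEM assessment and instruction.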

Validity Concerns

Another limitation in current STEM testing practices for ELs concerns validity. For the purposes of this report, validity can be defined as the extent to which reasonable generalizations can be made about students’ knowledge or skills based on the scores produced by a test (see Kane, 2006). That limited proficiency in the language of testing threatens the validity of test score interpretations has long been widely recognized.

A large body of research has identified language as a source of construct irrelevant variance, that is, variation in test scores due to factors unrelated to the knowledge or skill being assessed (e.g., Abedi, 2004; Avenia-Tapper and Llosa, 2015; Solano-Flores and Li, 2013). Many of these factors have to do with linguistic complexity, for example, complexity due to the use of unfamiliar and morphologically complex words, words with multiple meanings, idiomatic usages, and long or syntactically complex sentences in texts and in accompanying test items and directions (Bailey et al., 2007; Noble et al., 2014; Shaftel et al., 2006; Silliman, Wilkinson, and Brea-Spahn, 2018). However, underrepresentation of the content construct is also a concern if all linguistic complexity is indiscriminately removed from assessments (Avenia-Tapper and Llosa, 2015). If communication of STEM is part of the target construct of the assessment, then some degree of linguistic complexity representing the texts, tests, and discourse of STEM classrooms could be desirable on content assessments (e.g., Bailey and Carroll, 2015; Llosa, 2016). Indeed, it may not be possible to present higher-level STEM content in language that is not complex.
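The surface features listed above (sentence length, long words, idiomatic or multiple-meaning expressions) can be counted automatically, although counts alone cannot tell which complexity is construct-relevant. The sketch below is a heuristic under stated assumptions: the watch list of terms and the nine-letter threshold for a "long" word are hypothetical, and the output is meant to prompt reviewer judgment, not to drive automatic removal of language.

```python
import re

# Hypothetical watch list of idiomatic or multiple-meaning expressions;
# a real list would come from item reviewers, not from this sketch.
FLAGGED_TERMS = ("figure out", "plot", "table", "yield")

def complexity_indicators(text, long_word_len=9):
    """Compute rough surface indicators of linguistic complexity.

    These heuristic counts are prompts for human review, not a validated
    measure of construct-irrelevant language demand.
    """
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    lowered = text.lower()
    return {
        "sentences": len(sentences),
        "mean_sentence_length": len(words) / max(len(sentences), 1),
        "long_words": sum(1 for w in words if len(w) >= long_word_len),
        # Whole-word matches only, so "table" does not match "vegetable".
        "flagged_terms": [t for t in FLAGGED_TERMS
                          if re.search(rf"\b{re.escape(t)}\b", lowered)],
    }

item = ("A student measured the temperature of water in two containers. "
        "Use the table to figure out which container warmed faster.")
print(complexity_indicators(item))
```

Here "table" is flagged because it has an everyday meaning (furniture) as well as its data meaning; whether that is a barrier or part of what science students should know is exactly the construct question raised in the text.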

Another important source of construct irrelevant variance has to do with dialect and culture. Tests are administered in so-called Standard English, a variety of English that is commonly used in formal and school texts and carries high social prestige. Evidence indicates that the lack of correspondence between the Standard English used in tests and the variety of English used by ELs produces a large amount of measurement error (Solano-Flores and Li, 2009). Because of their more limited life experience, younger students may be more sensitive to these dialect differences.

An additional set of validity threats concerns culture and the contextual information (e.g., fictitious characters, stories, situations) used in science and mathematics items with the intent to make them more relatable and thus more meaningful to students. A study found that at least 70 percent of released National Assessment of Educational Progress science items for Grades 4 and 8 provided contextual information, both in the form of text and illustrations. Correlation data suggest that some of those contextual characteristics influence student performance (Ruiz-Primo and Li, 2015; see also Martiniello, 2009). Evidence from research conducted with small samples of science and mathematics items suggests that the contextual information of items reflects mainstream, white, middle-class culture (Solano-Flores, 2011). Certainly, not sharing the communication styles, values, resources, ways of living, objects, or situations of another cultural group does not necessarily mean that an individual is incapable of understanding contextual information. Yet it is not clear to what extent responding to test items that picture situations that are not part of one’s everyday life makes students feel alienated and to what extent that feeling may impact performance. After all, how meaningful something is to a person is not only a matter of familiarity, but also a matter of the person’s identification with a community and their level of social participation in that community (Rogoff, 1995).

Test Development, Review, and Adaptation

Key to properly addressing the linguistic and cultural challenges relevant to validly testing ELs appears to be the process of test development. Ideally, when tests are developed properly, draft versions of items are tried out with samples of pilot students drawn from the target population of examinees. This process involves examination of student responses and even interviews and talk-aloud protocols that provide evidence on the ways in which students interpret items and the reasoning and knowledge they use to respond to them. Based on this information, the content, context, and text of items are refined through an iterative process of review and revision (Trumbull and Solano-Flores, 2011). Even when the target population of students does not include ELs, many of the refinements concern linguistic features (e.g., colloquial words that are not used by students in the ways in which test developers assumed).

Unfortunately, there is no certainty that this process of development takes place for a substantial proportion of items used in large-scale assessment. Of course, many items may undergo a process of formal scrutiny in which committees of reviewers examine them and systematically identify sources of potential bias (Hambleton and Rodgers, 1995; Zieky, 2006). Yet experts’ reviews are only partially sensitive to the features of items that may pose an unnecessary challenge to students due to linguistic and cultural issues (Solano-Flores, 2012).

To complicate matters, even when items are pilot tested with samples of students, ELs are rarely included in those samples. Underlying this exclusion is the assumption that, because of their limited English proficiency, ELs can provide little information on the reasoning they use when they respond to items. Yet there is evidence that most ELs can participate in cognitive interviews and communicate with test developers (Kachchaf, 2011). Non-ELs and ELs may differ in the sets of linguistic features that hamper their understanding of items. This simple notion is extremely important, as there is evidence that changing even one word or slightly rephrasing an expression in a science item may determine whether the item is biased against linguistic minority students (Ercikan, 2002).

Regarding test review, an important aspect of EL assessment is the analysis of item bias. Using item response theory (a psychometric theory of scaling), analysts can examine potential bias in an item by comparing the difficulty of the item for ELs and for non-ELs after controlling for group differences in overall test scores. If the item is more difficult for the sample of ELs than for the sample of non-ELs even though their overall test scores are similar, this is taken as evidence that the item functions differentially for the two populations; that is, it is biased against ELs (Bailey, 2000/2005; Camilli, 2013; Martiniello, 2009). While this procedure has been available for several decades, the extent to which it is used routinely in large-scale assessment programs, and with attention to the heterogeneity of the samples of students compared, is unclear. One reason it may not be used with substantial numbers of items is that item response theory-based item bias analysis is costly and time consuming (Allalouf, 2003). To complicate matters, the effectiveness of this procedure may be limited by the characteristics of the student populations: there is evidence that the rate of detection of biased items declines as the heterogeneity of EL samples increases (Ercikan et al., 2014; Oliveri, Ercikan, and Zumbo, 2014).
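The core idea, comparing item performance for ELs and non-ELs who have the same overall score, can be sketched without a full item response theory model. The example below swaps in the Mantel-Haenszel procedure, a widely used non-IRT method for differential item functioning that conditions on total score; it is not the IRT-based analysis described in the text. The group labels and the small response set are hypothetical.

```python
import math
from collections import defaultdict

def mantel_haenszel_dif(responses):
    """Mantel-Haenszel DIF statistic for a single item.

    responses: iterable of (group, total_score, correct) tuples, where group
    is "ref" (e.g., non-ELs) or "focal" (e.g., ELs), total_score is the
    matching variable, and correct is 1 or 0 for this item.

    Returns (alpha_mh, mh_d_dif). alpha_mh > 1, i.e., a negative MH D-DIF,
    means that among examinees with the same total score, the reference
    group has higher odds of answering the item correctly.
    """
    # One 2x2 table per total-score stratum, stored as [A, B, C, D]:
    # A = ref correct, B = ref incorrect, C = focal correct, D = focal incorrect.
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for group, score, correct in responses:
        if group not in ("ref", "focal"):
            raise ValueError(f"unknown group label: {group!r}")
        cell = (0 if group == "ref" else 2) + (0 if correct else 1)
        tables[score][cell] += 1

    numerator = denominator = 0.0
    for a, b, c, d in tables.values():
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n
    # Sketch only: assumes denominator > 0; operational analyses also need
    # significance tests and rules for sparse strata.
    alpha = numerator / denominator
    return alpha, -2.35 * math.log(alpha)

def block(group, score, n_correct, n_incorrect):
    """Expand counts into response tuples (helper for the example)."""
    return [(group, score, 1)] * n_correct + [(group, score, 0)] * n_incorrect

data = (block("ref", 10, 6, 4) + block("focal", 10, 3, 7)
        + block("ref", 20, 8, 2) + block("focal", 20, 6, 4))
alpha, d_dif = mantel_haenszel_dif(data)
print(f"alpha_MH = {alpha:.2f}, MH D-DIF = {d_dif:.2f}")
```

In these invented data the item is harder for the focal group at both score levels, so the common odds ratio exceeds 1. The sketch also hints at the heterogeneity problem noted above: pooling very different EL subpopulations into one focal group can average away DIF that affects only some of them.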

Testing Accommodations

Legislation contains provisions on testing accommodations for ELs and special education students. These accommodations are modifications to the format of tests or to the ways in which they are administered. They are intended to minimize factors related to being an EL that could adversely affect a student’s test performance but that are not relevant to the constructs being measured. Valid accommodations, then, remove the impact of construct irrelevant variance on test performance without giving the students who receive them an unfair advantage over non-ELs who do not receive them (see Abedi, Hofstetter, and Lord, 2004). Examples of testing accommodations used by states include allowing students extra time to complete the test, assigning students preferential seating, simplifying the text of items, providing students with printed dictionaries and glossaries, and providing them with translations of the test. An investigation of the use of accommodations (Rivera et al., 2006) counted more than 40 testing accommodations used by states with ELs, many of which the authors showed to be inappropriate and likely to fail to address the linguistic needs of ELs. The study also found that accommodations for ELs and accommodations for students with disabilities are often confused. For example, schools may be given lists of authorized accommodations that do not distinguish which ones are intended for each of these two groups of students (Rivera et al., 2006). As a result, ELs may receive accommodations intended for visually impaired students, such as enhanced lighting conditions or large font size.

In spite of the good intentions that drive their use, many technical and practical issues need to be resolved before testing accommodations can reasonably be expected to serve the function for which they were created. An important issue is the defensibility of the assumptions they imply about students’ skills and needs. Because EL populations are very heterogeneous, some accommodations may be effective only for some students. For example, allowing extra time to complete the test will help only students who truly take longer than typical test-takers to read and comprehend the text of test items. If ELs are not literate in their first language or have not been schooled in it, they may be equally slow, or even slower, in completing a test given in their first language (see Chia and Kachchaf, 2018). Likewise, dictionaries or glossaries might benefit only students who have the skills needed to locate words efficiently in an alphabetical list, and translation will benefit only students who have received formal instruction in their first language and know how to read in it.
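The conditions named above can be written as explicit preconditions, which makes the implied assumptions about each student visible. The sketch below is hypothetical: the profile fields and rules merely restate the examples in the text, are not drawn from any state's accommodation policy, and would require exactly the kind of detailed student information that the text notes is often unavailable.

```python
from dataclasses import dataclass

@dataclass
class StudentProfile:
    # Hypothetical fields; real student records may not capture these directly.
    reads_english_slowly: bool          # takes longer than typical peers to read items
    literate_in_first_language: bool
    schooled_in_first_language: bool
    can_use_alphabetical_lookup: bool   # can efficiently look up words in a list

def candidate_accommodations(profile: StudentProfile) -> list[str]:
    """Return accommodations whose stated preconditions the profile meets."""
    options = []
    if profile.reads_english_slowly:
        options.append("extra time")
    if profile.can_use_alphabetical_lookup:
        options.append("glossary")
    if profile.literate_in_first_language and profile.schooled_in_first_language:
        options.append("translated test")
    return options

print(candidate_accommodations(StudentProfile(True, False, False, True)))
print(candidate_accommodations(StudentProfile(False, True, True, False)))
```

The point of the sketch is that a single label such as "EL" supplies none of these inputs, so any assignment rule based only on that label rests on untested assumptions.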

Several reviews and meta-analyses have been conducted to shed light on the effectiveness of different types of accommodations for ELs (Abedi, Hofstetter, and Lord, 2004; Kieffer et al., 2009; Pennock-Roman and Rivera, 2011; Sireci, Li, and Scarpati, 2003; Wolf et al., 2008, 2012). The results indicate that very few accommodations are effective, and those that are effective are only moderately so, in that they reduce only a small portion of the achievement gap between ELs and non-ELs. Also, there is evidence that, in the absence of accurate and detailed information about students’ skills and needs, assigning students to the accommodations that educators or school administrators believe are best for them and randomly assigning students to any accommodation produce similar results (Kopriva et al., 2007). Thus, limited proficiency in English should not be assumed to have been eliminated as a source of measurement error in large-scale assessment programs simply because testing accommodations are used with ELs.

An important aspect often neglected in examining the effectiveness of testing accommodations is implementation (see Ruiz-Primo, DiBello, and Solano-Flores, 2014). Assessment programs’ specifications concerning the accommodations that states or schools are authorized to provide do not specify how those accommodations should be created or provided (Solano-Flores et al., 2014). Test translation is a case in point. Research shows that translation can alter the constructs (skills, knowledge) that tests measure (Hambleton, 2005). Research also shows that translating tests is a very delicate endeavor that, in addition to qualified translators, needs to involve content specialists and teachers who teach the content assessed, all of whom must engage in a careful process of review and revision (Solano-Flores, 2012; Solano-Flores, Backhoff, and Contreras-Niño, 2009). Thus, it is possible that many accommodations are not properly created or provided to students, and that schools and states vary considerably in how they match students to accommodations and in the fidelity with which they implement accommodations.

As large-scale assessment programs transition from paper-and-pencil to computer-based formats, a wide range of tools is emerging that, if developed carefully, could effectively reduce limited proficiency in English as a source of measurement error in testing (Abedi, 2014). The term accessibility resource is appearing increasingly often in the literature on EL assessment. An accessibility resource can be defined as a device that students can use when they need it and that responds to each individual student’s request, for example, by displaying an alternative representation of the text of an item or part of that text (see Chia et al., 2013).

During the past few years, Smarter Balanced5 has been developing accessibility resources for ELs in mathematics assessment (Chia et al., 2013; Chia and Kachchaf, 2018; Solano-Flores, Shade, and Chrzanowski, 2014). Pop-up text glossaries are an example of these accessibility resources. The text of the item highlights select words or strings of words that are available for translation. When the student clicks on one of those words or strings of words, its translation in the student’s first language pops up next to it. Unlike a conventional glossary printed on paper, a pop-up text glossary is sensitive to each individual student’s need in that it is activated only when the student needs it.
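The on-demand behavior described above can be modeled as a small data structure: a set of preselected terms, each mapped to translations by language, that returns a translation only when a term is clicked. The sketch below is a hypothetical model for illustration; the class, method names, and entries are assumptions and do not describe how Smarter Balanced implements its glossaries.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class PopUpGlossary:
    """Hypothetical model of a pop-up glossary attached to one test item.

    Only preselected words or strings of words are glossed, and a translation
    is displayed only when the student clicks on a highlighted term.
    """
    # Highlighted term -> {language code -> translation}.
    entries: dict[str, dict[str, str]]
    # (term, language) pairs in the order the student requested them.
    requests: list[tuple[str, str]] = field(default_factory=list)

    def highlighted_terms(self) -> list[str]:
        return sorted(self.entries)

    def on_click(self, term: str, language: str) -> Optional[str]:
        """Record the request and return the translation, if one exists."""
        self.requests.append((term, language))
        return self.entries.get(term, {}).get(language)

glossary = PopUpGlossary({
    "perimeter": {"es": "perímetro", "vi": "chu vi"},
    "at least": {"es": "por lo menos"},
})
print(glossary.highlighted_terms())
print(glossary.on_click("perimeter", "es"))
print(glossary.on_click("at least", "vi"))   # no Vietnamese entry for this term
```

Recording requests is a design choice in this sketch: a log of which terms students actually open could inform the investigation of sound design and use that the text says is under way.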

This capability to react to the student is critical to designing accessibility resources that are sensitive to individual needs. Other accessibility resources, such as pictorial and audio representations of selected words, are being designed using this capability (see Smarter Balanced Assessment Consortium, 2017b). These accessibility resources hold promise as an alternative to testing accommodations intended to support ELs in gaining access to the content of items. The methods for their sound design and use are currently being investigated.

Reporting and Documentation

Reporting the results of tests poses intricate challenges to supporting ELs’ access to STEM. For decades, test score reporting has been identified as critical to ensuring that assessment programs effectively inform policy and practice (Klein and Hamilton, 1998; Ryan, 2006). Loopholes in legislation, or inappropriate interpretation or implementation of legislation concerning the use of measures of academic achievement, can lead to unfair practices. For example, NCLB mandated that states measure adequate yearly progress in reading, science, and mathematics and report this progress in a disaggregated manner for different groups, one of which was students classified as limited English proficient (LEP). Because of their limited proficiency in English, these students start school with lower scores than their native English-using peers. Under these requirements, many LEP students who made substantial yearly progress in those content areas would likely also have increased their English proficiency and been reclassified as fluent English proficient, thereby leaving the LEP category. As a consequence, as mentioned earlier, school reports for LEP students could never reflect the actual progress of students in this group, because the students who progressed no longer belonged to that reporting category (Abedi, 2004; Saunders and Marcelletti, 2013).

___________________

5 The Smarter Balanced Assessment Consortium distinguishes between universal tools, designated supports, and accommodations. Under Smarter Balanced’s framework, most EL-related accessibility resources are considered designated supports. See Stone and Cook (2018) for a detailed discussion.
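The reporting problem can be illustrated with a deterministic toy model. In the sketch below, which uses hypothetical scores, gains, and a hypothetical reclassification cut score, every student in the subgroup gains the same number of points each year, students who reach the cut leave the subgroup, and a newcomer enters at a low score. The reported subgroup mean never moves, even though every individual improves.

```python
from statistics import mean

def lep_subgroup_means(initial_scores, yearly_gain, exit_cut, newcomers, years):
    """Track the reported mean of a subgroup whose highest scorers exit it.

    Hypothetical, deterministic model: every current member gains
    `yearly_gain` points per year; members at or above `exit_cut` are
    reclassified and leave; `newcomers` (a fixed list of entry scores)
    join each year. Assumes the subgroup never becomes empty.
    """
    members = list(initial_scores)
    reported = [mean(members)]
    for _ in range(years):
        grown = [s + yearly_gain for s in members]
        members = [s for s in grown if s < exit_cut] + list(newcomers)
        reported.append(mean(members))
    return reported

print(lep_subgroup_means([400, 420, 440, 460], yearly_gain=20,
                         exit_cut=480, newcomers=[400], years=3))
```

Real cohorts are not this tidy, but the mechanism is the same: a subgroup defined by low proficiency is continually emptied of its most successful members.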

Of special importance is the kind of information about ELs that is included in technical reports and scientific papers. As discussed before, EL populations are linguistically heterogeneous, even among students within the same linguistic group who use the same language. Along with English proficiency in the different language modalities (i.e., listening, speaking, reading, and writing), first language, literacy in the first language, schooling history, ethnicity, age, socioeconomic status, and geographical region are variables that contribute to this heterogeneity. Unfortunately, how samples of students are composed with respect to those variables, and how large those samples are, often goes unreported. Contributing to this limitation is the fact that no EL population sampling frameworks are available to support institutions and researchers in specifying and drawing representative samples of ELs based on critical sociodemographic variables.

Perhaps the most important aspect yet to be properly addressed in reporting and documentation is heterogeneity in English language proficiency and how content area achievement performance covaries with English proficiency. Aggregating achievement results across language proficiency categories complicates inferences about EL achievement because of the positive covariation between English proficiency and content area achievement measured in English (see Hopkins et al., 2013). In such contexts, the aggregate performance is a function of both the performance of students in each proficiency category and the percentage of ELs in different categories, which complicates the interpretation of comparisons across schools and districts in the same state, and comparisons over time in the same school or district.
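One way to see why aggregation complicates these comparisons is to write the aggregate as a weighted mean. The notation here is illustrative and is not taken from the cited studies: let $\bar{y}_k$ be the mean achievement of a school’s ELs in English proficiency category $k$ ($k = 1, \dots, K$), and let $p_k$ be the proportion of that school’s ELs in category $k$. The aggregate mean $\bar{y}$, and the difference between two schools A and B, can then be written as

$$
\bar{y} = \sum_{k=1}^{K} p_k \, \bar{y}_k, \qquad \sum_{k=1}^{K} p_k = 1,
$$

$$
\bar{y}^{A} - \bar{y}^{B} = \underbrace{\sum_{k=1}^{K} p_k^{A}\left(\bar{y}_k^{A} - \bar{y}_k^{B}\right)}_{\text{within-category differences}} + \underbrace{\sum_{k=1}^{K} \left(p_k^{A} - p_k^{B}\right)\bar{y}_k^{B}}_{\text{differences in composition}}.
$$

Because English proficiency and achievement measured in English covary positively, the category means $\bar{y}_k^{B}$ tend to be larger for higher proficiency categories, so a school with relatively more ELs in those categories gains from the second term independently of the first. Comparisons over time within one school decompose in the same way, with the two time points taking the place of A and B.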

It is easy to construct examples in which performance in each proficiency category is better in School A than in School B, and yet School B has better overall performance because it has a larger percentage of its ELs at higher levels of English proficiency. This problem, known as Simpson’s paradox (Blyth, 1972; Wagner, 1982), can occur in the EL context because the percentage of students at different levels of proficiency varies from school to school and over time for reasons outside the control of the schools. Aggregation of achievement results across language proficiency categories, together with the lack of information on other sources of heterogeneity within EL populations, makes it impossible to judge how reasonable the generalizations made about ELs’ performance on large-scale STEM assessments are, or to properly inform practice and policy for these students.
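A minimal numeric sketch of the situation described above follows. The two schools, the two proficiency categories ("lower" and "higher"), the counts, and the mean scores are all hypothetical, chosen only to reproduce the pattern in the text: School A outperforms School B within each proficiency category, yet School B has the higher aggregate because more of its ELs are in the higher category.

```python
# Hypothetical data: {school: {proficiency_category: (number_of_ELs, mean_score)}}
schools = {
    "School A": {"lower": (80, 40.0), "higher": (20, 70.0)},
    "School B": {"lower": (20, 35.0), "higher": (80, 65.0)},
}


def aggregate_mean(categories):
    """Mean across categories, weighted by the number of ELs in each category."""
    total_n = sum(n for n, _ in categories.values())
    return sum(n * mean for n, mean in categories.values()) / total_n


for name, categories in schools.items():
    by_category = ", ".join(f"{cat}: {mean:.0f}" for cat, (_, mean) in categories.items())
    print(f"{name}: {by_category}; aggregate: {aggregate_mean(categories):.0f}")

# Output:
# School A: lower: 40, higher: 70; aggregate: 46
# School B: lower: 35, higher: 65; aggregate: 59
```

In this sketch the per-category means and counts together make the reversal visible, whereas the aggregate alone suggests the opposite ranking of the two schools.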

CLASSROOM SUMMATIVE AND FORMATIVE STEM ASSESSMENT WITH ENGLISH LEARNERS

In this section, we focus on the micro-level assessment that occurs during instruction in STEM classrooms (see Figure 7-1), specifically on what is known about effective classroom assessment of the STEM disciplines for ELs. We discuss what teachers would benefit from knowing and being able to do to serve ELs through formative assessment. Classroom assessment is important for teacher use in instructional planning and student-level decision making (e.g., Noyce and Hickey, 2011). In addition to the problematic implementation of large-scale assessment of STEM subjects with ELs (outlined in the previous section), large-scale assessment, with its design for signaling strengths and weaknesses in student learning, is not focused on suggesting what kinds of assistance students will need from their teachers to further that learning. This is the role that classroom assessment is designed to play.

There is renewed interest in classroom assessment of the academic content areas as part of a balanced assessment system under ESSA (2015). Such a balance is inclusive of both classroom and large-scale assessment approaches: information on student learning produced by classroom assessment ideally complements that produced by large-scale assessment, and together they can form an academic achievement assessment system that fulfills the comprehensive, coherent, and continuous assessment framing recommended in Knowing What Students Know (National Research Council, 2001; see also Black, Wilson, and Yao, 2011; Songer and Ruiz-Primo, 2012). At the same time, there has been increased interest in, and visibility around, how classroom assessment can be brought into the discussion of educational measurement considerations more broadly, especially in the areas of assessment validity, reliability, and feasibility, which have traditionally been under the purview of large-scale assessment approaches (see Bennett, 2010; Mislevy and Durán, 2014; Wilson, 2016).

In the area of EL student assessment research specifically, classroom assessment is argued to better suit the learning needs of ELs, for whom large-scale assessments have limitations on validity (e.g., Cheuk, Daro, and Daro, 2018). In some instances, research suggests that ELs fail to answer items correctly despite otherwise being able to show their content knowledge and skills, for example, on science multiple-choice tests (Noble et al., 2014). The promise of classroom summative and formative assessment for ELs is that students can demonstrate their content knowledge and language, and that language practices are used in the authentic contexts encountered during content learning (e.g., Mislevy and Durán, 2014). One goal of classroom assessment can be to tease apart STEM knowledge from the language used to display that knowledge.

Furthermore, large-scale assessments of STEM may not provide tractable information that teachers can use in their instruction of ELs (e.g., Abedi, 2010). Durán (2008) has argued that there are “inherent limits of large-scale assessments as accountability tools for ELs as a means for directly informing a deep understanding of students’ learning capabilities and performance that can be related to instructions and other kinds of intervention strategies supporting ELL schooling outcomes” (p. 294). A pertinent illustration of these limitations can be found in recent research by Rodriguez-Mojica (2018) in the area of English language arts. She documented the range of appropriate academic speech acts (i.e., the communicative intents performed by a speaker) naturally occurring in the “real-time talk” between emerging bilingual students. Many of the students who were successfully engaged in interactive classroom activities in English had nonetheless been deemed, on statewide standardized assessments, to be struggling with English language proficiency and reading.

Rodriguez-Mojica’s findings from the discourse analysis of student-to-teacher and peer interactions lend credence to Durán’s suggestion that “assessments can be designed to work better for these students, if we take care to have assessments do a better job of pinpointing skill needs of students developmentally across time, better connect assessments to learning activities across time and instructional units, and better represent the social and cultural dimensions of classrooms that are related to opportunities to learn for ELL students” (Durán, 2008, p. 294; see also Mislevy and Durán, 2014; Wilson and Toyama, 2018). Indeed, there is a small body of research that suggests that classroom-level approaches to assessing STEM disciplines constitute alternative designs that may “work better” for ELs (e.g., Siegel, 2007). However, there are very few studies focusing expressly on ELs and the classroom assessment of their knowledge of the STEM disciplines, particularly of mathematics, engineering, and technology. Where necessary, we have included relevant findings from general education studies of this topic, considering likely implications for ELs and encouraging the urgently needed studies of STEM classroom assessment to fill this lacuna in the EL research base.

Types of Classroom Assessment

Black and Wiliam (2004) cautioned that “[t]he terms classroom assessment and formative assessment are often used synonymously, but . . . the fact that an assessment happens in the classroom, as opposed to elsewhere, says very little about either the nature of the assessment or the functions that it can serve” (p. 183). On this point, Black, Wilson, and Yao (2011) further delineated the different characteristics that classroom assessment can take on. Classroom assessment can have an evaluative function when it adds to the summative information of large-scale academic achievement assessments with interim assessments and teacher-created assessments that may be given at shorter intervals than the annual large-scale assessments (e.g., Abedi, 2010). These classroom summative assessments give teachers and districts information on how well students have learned certain topics sooner than year-end testing does, so that modifications to instruction, curricula, or the planning of future lessons can be made in a more timely fashion. These purposes of classroom assessment contrast with formative assessment, which instead may comprise formal and informal observations of student work and student discussions, student self- and peer assessment, and teacher analysis of student responses to in-the-moment questions or preplanned probes, among other activities (e.g., Ruiz-Primo, 2011, 2017). These are all examples of an assessment approach designed with feedback on individual student learning as a primary target, so that instructional responses can be personalized to the immediate needs of learners (e.g., Erickson, 2007; Heritage, 2010; Ruiz-Primo and Brookhart, 2018).

Our discussion of these two forms of assessment takes into account the nature of empirical evidence that is valued in research on classroom assessment. Whereas research on large-scale assessment tends to use quantitative methods and research on classroom assessment tends to use modest to small-scale quantitative, qualitative, or mixed-methods approaches, there is no set of methods specific to either large-scale or classroom-based assessment (see Ruiz-Primo et al., 2010; Shavelson et al., 2008). Still, it is safe to say that, while randomized controlled trials are highly valued in research on large-scale assessment, they are not common in research on classroom assessment, due to a large extent to the need to be sensitive to classroom context. As a result of this sensitivity to context, studies of classroom assessment may involve different treatments across classrooms, an approach intended to ensure ecological validity, for example by capturing in detail the characteristics of a classroom teacher’s authentic assessment routines and practices with ELs.

Classroom Summative Assessment of STEM with English Learners

There are a number of important initiatives for the summative assessment of STEM subjects at the classroom level. For example, Smarter Balanced has developed interim assessments of mathematics, aligned with both the Common Core and the annual summative assessment, to be administered and scored locally by classroom teachers. In another initiative, the Gates Foundation-supported Mathematics Assessment Project successfully supported the adoption and design of classroom summative assessment tasks by secondary teachers.6 However, there is scant literature on the effectiveness of classroom summative assessment practices in STEM specifically designed with ELs in mind. Some studies have reported on classroom assessment strategies, although few of their findings address the question of effectiveness. A larger body of literature reports on researchers’ creation of classroom assessments as a means of assessing the effectiveness of new STEM interventions or curricula for ELs (e.g., Llosa et al., 2016; see also Wilson and Toyama, 2018). However, because evaluating the technical quality of these assessments for use with ELs is not a main target of that research, we have not included these studies here.

As far back as the early 1990s, Short called for integrated assessment to match integrated language and content instruction (Short, 1993). She illustrated this approach with examples of alternative assessment tasks from a number of content areas, including several integrated language and mathematics tasks. Rather than relying on one type of classroom assessment, Short advocated using several types that span summative and formative purposes (e.g., the teacher’s anecdotal notes on how students draw diagrams to solve word problems, and students’ own self-assessments used to generate formative feedback). The summative purposes include classroom assessments that evaluate students’ performance on a task such as a written essay in which students are asked to explain how other students solved an algebraic word problem. Adding to this repertoire of alternative mathematics assessments with young ELs, Lee, Silverman, and Montoya (2002) found that students’ drawings could reveal their comprehension of mathematics word problems, helping to separate the linguistic challenges of word problems from students’ comprehension of the mathematical concepts in those problems.

___________________

6 See http://www.map.mathshell.org [October 2018].

In a rare large-scale study focused on mathematics assessment and including measures of effectiveness, Shepard, Taylor, and Betebenner (1998) examined outcomes on the Rhode Island Mathematics Performance Assessment for 464 4th-grade ELs, among other student groups. Items on the assessment included matching stories with data in graphic form, representing numbers with base 10 stickers, and representing tangrams with numbers. Students were also required to explain their answers, and a rubric was created for scoring the tasks. Scores on the assessment were highly correlated with scores on the concurrently administered Metropolitan Achievement Test, a standardized assessment of mathematics knowledge. Promisingly, ELs were less far behind non-ELs on the performance assessment than on the Metropolitan Achievement Test, and very little differential item functioning between the student groups was observed on the performance assessment tasks. However, the authors raised questions about the adequacy of the scoring rubric and about the extent to which students’ ability to explain their answers affected their mathematics scores. Elsewhere, Pappamihiel and Mihai (2006) also raised the issue of rubrics used in classroom assessments with ELs and recommended that teachers make the language of rubrics culturally sensitive and provide feedback that is useful and in language ELs can understand.

Addressing EL classroom assessment in both mathematics and science, the ONPAR project7 has demonstrated several techniques effective for measuring the mathematics and science knowledge and abilities of ELs. ONPAR tasks engage students in conveying their mathematical and scientific understanding through a variety of representational formats beyond traditional text-based demonstrations, including technology-enhanced assessments that do not place high language demands on ELs (e.g., Kopriva, 2014). ONPAR uses multisemiotic approaches to task creation, including inquiry-based performances, dynamic visuals, auditory supports, and interaction with stimuli, to support ELs in constructing situational meaning. For example, in a series of experimental studies and cognitive labs with 156 elementary through high school-aged ELs and non-ELs, Kopriva and colleagues (2013) found evidence that ELs were able to demonstrate their science knowledge more effectively on ONPAR tasks. Consistent with the findings reported by Shepard, Taylor, and Betebenner (1998), the ELs in the ONPAR studies scored higher on ONPAR tasks than on a traditional assessment of the same content knowledge. Importantly for construct validity in science, non-ELs and ELs with high levels of English language proficiency performed no differently on the ONPAR tasks than on the traditional assessment.

___________________

7 See http://www.onpar.us [September 2018].

A different science assessment initiative, by Turkan and Liu (2012), presents a mixed set of findings for EL classroom assessment. The authors studied the inquiry science performances of 313 7th- and 8th-grade ELs and more than 1,000 of their non-EL peers. Using differential item functioning analysis to test for the effects of EL status on the performance assessment, the authors found that non-ELs significantly outperformed ELs overall. Turkan and Liu warned that “in addition to the production demands placed on ELLs by inquiry science assessments, the wording of prompts in these assessments may also prove challenging for ELLs, which might influence student performance” (p. 2347). However, the study also captured complexities that speak to the need for cultural sensitivity and awareness of student backgrounds during assessment development, which might help optimize ELs’ performance. For example, the differential item functioning analyses revealed an item that favored ELs; this item provided a graphic representation of a science concept within a familiar context. Furthermore, while a constructed-response task may seem to add language challenges for a student acquiring English, Turkan and Liu reported evidence that this task type gave ELs an opportunity to convey their scientific reasoning in their own words. Collectively, the findings of this study provide direction for assessment developers and teachers, who, the authors cautioned, need to be aware of the likelihood of complex “interactions between linguistic challenges and science content when designing assessment for and providing instruction to ELLs” (p. 2343).
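Differential item functioning (DIF) analyses ask whether students from two groups who have the same overall performance have different chances of answering a given item correctly. The text does not say which DIF procedure Turkan and Liu (2012) used; the sketch below assumes the Mantel-Haenszel procedure, one widely used approach, with non-ELs as the reference group, ELs as the focal group, and students matched on total score. The class and function names and all counts are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Stratum:
    """2x2 counts for students matched at one total-score level (hypothetical data)."""
    ref_right: int    # reference group (non-ELs) answering the item correctly
    ref_wrong: int    # reference group answering incorrectly
    focal_right: int  # focal group (ELs) answering correctly
    focal_wrong: int  # focal group answering incorrectly

    @property
    def total(self) -> int:
        return self.ref_right + self.ref_wrong + self.focal_right + self.focal_wrong


def mh_odds_ratio(strata: list[Stratum]) -> float:
    """Mantel-Haenszel common odds ratio; values above 1 mean the item favors the reference group."""
    num = sum(s.ref_right * s.focal_wrong / s.total for s in strata)
    den = sum(s.ref_wrong * s.focal_right / s.total for s in strata)
    return num / den


def mh_d_dif(strata: list[Stratum]) -> float:
    """ETS delta-scale DIF statistic: negative favors the reference group, positive the focal group."""
    return -2.35 * math.log(mh_odds_ratio(strata))


# Equal odds of success for both groups within every score level: no DIF.
no_dif_item = [Stratum(40, 10, 20, 5), Stratum(30, 30, 10, 10)]
# Matched ELs succeed more often than matched non-ELs on this item.
favors_els_item = [Stratum(20, 20, 30, 10)]

print(round(mh_d_dif(no_dif_item), 2))      # -0.0, i.e., zero: no DIF
print(round(mh_d_dif(favors_els_item), 2))  # 2.58
```

Matching on total score is the design choice that separates DIF from the overall group difference: an item is flagged only if matched ELs and non-ELs differ on it. A positive value here corresponds to an item favoring ELs, like the graphic, familiar-context item described above.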

The remaining studies of classroom summative assessment with ELs reviewed here were also conducted in science. Work by Siegel (2007), who studied the assessment of life sciences in middle school classrooms, suggests ways in which classroom assessments might be designed to optimize student performance. She modified existing writing tasks by adding visual supports and dividing prompts into smaller units. Using a pre-post test design, Siegel documented that ELs with high levels of English language proficiency, along with their non-EL peers, scored higher on the modified classroom assessments. Similarly, Lyon, Bunch, and Shaw (2012) found that the students in their case study were able to navigate the demanding communicative situations of performance assessments. The assessments required students to adapt their language use to a range of participation configurations (e.g., whole group, small group, one-to-one) in order to interpret and present their science knowledge, as well as to use language to engage interpersonally with other students. However, echoing the findings of Siegel (2007), Lyon, Bunch, and Shaw (2012) questioned how well students with lower English language proficiency than their case study students would be able to participate in such assessments. The question is not trivial, as there is evidence that, along with language, epistemology (ways of knowing) and culturally determined practices influence students’ interpretations of science and mathematics test items (Basterra, Trumbull, and Solano-Flores, 2011; Turkan and Lopez, 2017).

This evidence speaks to the need for careful interpretation of students’ performance on assessment activities if those activities are to accurately provide information about student progress toward learning goals (Solano-Flores and Nelson-Barber, 2001). Teachers evaluating their ELs may not have sufficient cultural awareness or familiarity with students’ epistemologies and practices. Also, they may be biased against the culturally bounded responses their ELs offer to specific tasks and regard them as incorrect (Shaw, 1997). Consistent with this, there is evidence that, in evaluating students’ responses to mathematics tasks, teachers tend not to give proper consideration to the cultural backgrounds of their ELs (Nguyen-Le, 2010). Moreover, there is evidence that teachers who share the ethnic and cultural backgrounds of their ELs tend to articulate more complex and sophisticated reasoning about the ways in which culture and language influence students’ interpretations of items and their responses to those items. However, both these teachers and their counterparts who do not share the ELs’ ethnic and cultural backgrounds are equally limited in their ability to address culture in their interpretations of specific scenarios (Nguyen-Le, 2010). These findings indicate that, while necessary, the participation of educators who share ethnic or cultural backgrounds with their students in assessment endeavors is not sufficient to properly address the complex and subtle cultural influences that shape student performance in classroom STEM assessment.

Classroom Formative Assessment of STEM with English Learners

Formative assessment involves gathering data or evidence of student learning as learning occurs, so that teachers and students can benefit from the information generated, either during real-time instruction (i.e., short-cycle formative assessment) or after reflection, to modify later lessons or future curriculum choices (i.e., medium or long cycles) (e.g., Black and Wiliam, 1998, 2009; Heritage, 2010; Swaffield, 2011).

Formative assessment can occur in a variety of ways (Ayala et al., 2008; Ruiz-Primo and Furtak, 2007). As Heritage and Chang (2012) pointed out, “These include informal methods during the process of teaching and learning that are mostly planned ahead of instruction but can occur spontaneously (e.g., observations of student behavior, written work, representations, teacher student interactions and interactions among students) as well as more formal methods (e.g., through administering assessments that are specifically designed for formative purposes for ELL students)” (p. 2). Formal methods of formative assessment may involve giving students commercially available or teacher-created assessments such as quizzes and checklists (Shavelson, 2006). As long as the information they generate is used to inform instruction, rather than to summarize students’ performances with a score or grade, these formal assessments also meet the definition of formative assessment recently revised by the Formative Assessment for Teachers and Students State Collaborative on Assessment and Student Standards (2017) of the Council of Chief State School Officers:

Formative assessment is a planned, ongoing process used by all students and teachers during learning and teaching to elicit and use evidence of student learning to improve student understanding of intended disciplinary learning outcomes and support students to become self-directed learners.

Those who view formative assessment as an approach, rather than as formal tests or tasks administered to students, treat it as a process for generating information about where student learning currently is and where it needs to go next to meet a learning goal (e.g., Hattie and Timperley, 2007; Ruiz-Primo and Furtak, 2007). For example, through episodes of close questioning of ELs’ thinking and planning around their persuasive writing, teachers are able to grasp how they can scaffold learning to take students to the next level of understanding (Furtak and Ruiz-Primo, 2008; Heritage and Heritage, 2013; Ruiz-Primo and Furtak, 2006). The line between instruction and assessment here is blurred: where instruction stops and assessment starts during such interactions is not clear and may not be relevant. Because it involves sustained conversations with individual students, formative assessment is especially suited to assessing ELs’ content thinking and learning (Alvarez et al., 2014; Bailey, 2017; Bailey and Heritage, 2014; Solano-Flores, 2016). Only when they are interacting with students in real time are teachers in a position to modify their own language, as well as to scaffold their students’ language comprehension and production needs if and when those needs arise. In combination, these linguistic adjustments can seamlessly assist in the display of a student’s content knowledge and abilities (Bailey, 2017).

Formative assessment also suits the assessment of STEM with ELs in several additional ways. For example, teachers may ask their students to express their ideas with drawings and to explain those ideas in their own words. Potentially, this approach not only allows students to demonstrate learning in multiple ways, but also allows informal triangulation of data (Alvarez et al., 2014; Ruiz-Primo, Solano-Flores, and Li, 2014). However, research is needed to identify the ways in which these resources can be used effectively with ELs. Unfortunately, research and practice involving the use of visual (non-textual) resources in assessment wrongly assume that visual information is understood in the same way by all individuals, and they are not sensitive to the multiple variations of visual representations (see Wang, 2012) or to the abstractness of many concepts.

Mixed-methods research conducted with science teachers of secondary-level general education students has found that written scaffolding embedded in the formative assessment of written science explanations successfully increased the explicitness of the explanations from students at all levels of science achievement (Kang, Thompson, and Windschitl, 2014). This scaffolding included “focusing” sentence frames such as “What I saw was________” and “Inside [the balloon] the particles were________,” and “connecting” sentence frames such as “Evidence for _________ comes from the [activity or reading] because________” (p. 686). Quantitative analyses revealed that contextualizing the focal science phenomena in the writing tasks was the most effective single scaffold, both on its own and when used in combination with other proven scaffolding types, such as providing rubrics, answer checklists, and sentence frames, and allowing students to diagram their explanatory models as well as explain them in writing. Qualitative analysis of the students’ writing illustrated how contextualization (e.g., students selecting a geographic location of their own choosing to explain seasonal changes), along with combinations of the other scaffolds in this assessment, meant that the “students were invited to engage in a high level of intellectual work” (Kang et al., 2014, p. 696).

This work can be informative for designing effective formative assessment opportunities with ELs. First, by learning from a student’s oral articulation of STEM content at what level of comprehension the student is making meaning of that content, a teacher can devise a contingent pedagogical response: that is, the teacher can tailor the next steps of instruction to match the content and language needs of the student (Bailey, 2017). This approach is consistent with findings that using both written and oral prompts in teaching contributes to understanding where students are in their learning (Furtak and Ruiz-Primo, 2008). A contingent response may take the form of translating key STEM concepts into a student’s first language, allowing students to make connections to their own cultural contexts, and supporting STEM instruction with images, graphics, manipulatives, and other relevant objects and materials from everyday life.

Second, to be inclusive of ELs, formative assessment can be viewed as a form of social interaction through language (Ruiz-Primo, Solano-Flores, and Li, 2014). Formative assessment practices can lead to establishing “a talking classroom” (Sfard, 2015), and thus such practices can also provide a rich(er) language environment in which ELs can participate (Ruiz-Primo, Solano-Flores, and Li, 2014; Solano-Flores, 2016) as they learn the STEM disciplines.

Third, formative assessment can foster students’ agency in their STEM content learning through the key focus that formative assessment approaches place on self-assessment and peer assessment (Heritage, 2013a). Research with the K–12 general population has documented the positive effects of self-assessment in particular on student learning outcomes (e.g., Andrade and Valtcheva, 2009; McMillan and Hearn, 2008). There is also some evidence of positive outcomes for EL learning from formative assessment approaches that include student self- and peer assessment in disciplines that are not STEM related (e.g., Lenski et al. [2006] in the area of literacy).

Self-directed learners are able to monitor and plan for their own learning through the feedback they generate for themselves with self-assessment. Related to the second point above, ELs who are also self-directed learners may be in a position to create their own English language-learning opportunities rather than waiting for such opportunities to come to them. They can deliberately seek out additional language exposure throughout the school day, including during their STEM classes (Bailey and Heritage, 2018).

Only a small number of studies have examined the effectiveness of formative approaches to the assessment of mathematics and science with ELs specifically. The TODOS: Mathematics for ALL initiative conducted a series of research studies on the effectiveness of the interactive interview as a means of uncovering students’ mathematical understanding. In one study, four 6th-grade Spanish-English bilingual students (whose English language proficiency status was not reported) took part in multistage interactive interviews. After an oral think-aloud in which they approximated a problem solution, students first independently wrote draft responses to tasks focused on fractions, mixed numbers, percentages, and proportional reasoning. The interviews encouraged students to consider different problem solutions, test and revise their hypotheses, and use all their linguistic resources (e.g., both Spanish and English) to solve the tasks. Analyses of these interviews showed that this formative assessment approach “provided the means to develop student agency through problem solving, support and encourage mathematical innovation, and cultivate a shared sense of purpose in mathematics” (Kitchen, Burr, and Castellón, 2010, p. 68). While this assessment format yielded promising results with Spanish-English bilingual students, the authors cautioned that the interview format is lengthy and requires a substantial time investment on the part of teachers (Castellón, Burr, and Kitchen, 2011).

In the area of science assessment, one recent mixed-methods study implemented “educative assessments,” writing-rich assessments for Grades 4 through 8. Educative assessments are designed to support teachers’ instructional decision making, in this instance particularly with ELs in the areas of inquiry practices, academic language, and science content, which fits formative assessment as defined here (Buxton et al., 2013). These assessments were implemented as one component of the Language-Rich Science Inquiry for English-language Learners (LISELL) project. The assessments were found to support “teachers in diagnosing their students’ emergent understandings. . . . And interpretation of assessment results led to changes in teachers’ instructional decision making to better support students in expressing their scientific understandings” (Buxton et al., 2013, p. 347). Specifically, the teachers were able to support their students in building connections from everyday language (both English and Spanish) to disciplinary discourse during their science learning. However, Buxton and colleagues noted the time required, as well as the scaffolding teachers needed from the project during focus group sessions, to draw conclusions about their students’ learning.

In a study of two 9th-grade science classrooms in Canada, Slater and Mohan (2010a) reported how an ESL teacher and a science teacher collaborated to formatively assess their ELs to help improve their “use of English in and for science” (p. 93). The science teacher had a mix of English-only students and more proficient ELs, whereas the ESL teacher had a class of relatively recent newcomers who were beginning to acquire English. The newcomer ELs were predominantly users of Chinese as a first language. Both teachers adopted a register approach to unpacking the language of science so that students could use all of their available linguistic resources to demonstrate their content knowledge. The science teacher focused on formatively assessing students’ acquisition of the science register in his class through problem solving, whereas the ESL teacher focused on explicitly teaching and assessing the knowledge structures of the register, namely the language underlying cause-effect reasoning and problem solving/decision making in science, to prepare her students for transfer to the science teacher’s classroom. Slater and Mohan explained that “[w]hen teachers assess learners’ knowledge of science, in [Systemic Functional Grammar] terms they are assessing whether the learners have built up the meaning potential of the science register and can apply it to relevant situations and texts” (p. 92).

Slater and Mohan (2010b) argued that oral explanations provide teachers with the information they need to scaffold student learning. Moreover, these authors provided a clear example of formative assessment that is ideally suited to an EL pedagogy integrating language and content learning: only when teachers engage in an approach to formative assessment that uses oral discourse to generate evidence of science learning can they build contingently on what their students say. This not only helps develop students' English language proficiency but also helps students extend their explanations of "their understanding of cause and effect in order to further their content knowledge" (Slater and Mohan, 2010b, p. 267).

Also using students' explanations as the basis for generating evidence of learning, a study of kindergarten teachers illustrated how, through an intervention, the teachers increased their implementation of simultaneous formative assessment of ELs' science and language knowledge (Bailey, Huang, and Escobar, 2011). This 3-year research-practice partnership helped teachers intentionally plan for and then put into practice formative assessment with Spanish-dominant ELs by implementing three components of a formative assessment approach: (1) setting learning goals for science and language during lesson planning, based on state science and ELD standards, and using self-reflection guides; (2) making success criteria explicit to students during lessons so that they were aware of the desired goals; and (3) evoking evidence of student learning in both science and language in diverse ways, including closely observing students engaged in different tasks and activities, questioning student comprehension and understanding, and inferring student understanding from the questions students asked the teachers and other students. This small-scale qualitative study can only be suggestive of the impacts of formative assessment implementation on science and language learning. Nevertheless, it revealed that, over time, formative assessment was adopted more frequently and enabled teachers to identify gaps between students' current levels of science and language understanding and the desired learning goals, and it documented increased student engagement and talk during science lessons over the same period.

In a recent review of formative assessment practices in science instruction, Gotwals and Ezzo (2018) also reported that teachers' use of scientific phenomena (i.e., observable events that allow students to develop predictions and explanations) to anchor their instruction provides opportunities for rich language use and engagement in science classrooms. The resulting discussions in turn provide opportunities for formative assessment of students' scientific understanding. The review also highlighted the close connections between science instruction and assessment when a formative approach is adopted.

Bailey and Heritage (2018) provided several clinical examples of formatively assessing ELs in mathematics and science, among other content areas, also with a primary emphasis on explanation as a cross-curriculum language practice. These examples describe in detail how teachers experienced in a formative approach to assessment pay close attention both to the current status of students' content learning and to the kinds of language students use to exhibit their understanding (orally and in written tasks). Only with this simultaneous focus, the authors argued, can teachers effectively engage in contingent teaching that takes account of the integration of content and language: that is, make in-the-moment decisions either to address content misconceptions or language ambiguities, thereby completing the formative feedback loop, or to move on and present students with a suitably calibrated next challenge. The centrality of this feedback loop in formative assessment is discussed in the next section.

The Central Role of Feedback in Formative Assessment

Generating feedback, both so that teachers know what to teach next or which pedagogical moves to make and so that students know how their learning is progressing, is central to formative approaches to assessment (Ruiz-Primo, 2017). In a review of the effectiveness of feedback, Hattie and Timperley (2007) defined the purpose of effective feedback as reducing the gap between a student's current understanding and a desired learning goal. Effective feedback answers three main questions for the student (and the teacher): "Where am I going?" "How am I going?" and "Where to next?" (p. 86). Feedback coupled with instruction enhanced learning, whereas feedback consisting only of praise was not effective for learning.

Effective feedback makes partners of the student and the teacher, giving each a role in responding to the same assessment information (e.g., Heritage, 2010; Kitchen, Burr, and Castellón, 2010; Li et al., 2010; Ruiz-Primo and Li, 2012, 2013). While most studies of the positive effects of feedback on the accuracy of students' assessments of their own performance have been conducted with adult and adolescent learners, van Loon and Roebers (2017) reported encouraging findings with German-speaking 4th- and 6th-grade students in Switzerland. Students studied concepts and their definitions, were then tested on their knowledge, and were asked to self-assess their performance. Feedback improved the accuracy of their self-assessments. The authors also reported that initial age differences in selecting which aspects of their work needed restudying disappeared after students received feedback on how to improve their definitions. These findings suggest that students used the information from feedback to become not only better self-evaluators but also better regulators of their own learning.

Use of Learning Progressions with Formative Assessment

For over a decade, there has been much interest within the assessment field in the development of learning progressions, also known in some STEM disciplines as learning trajectories (Shavelson, 2009; Wilson, 2009). Progressions have been used to guide classroom assessment, particularly formative approaches to assessment. Learning progressions are useful to formative approaches because they can provide the necessary detail about how student thinking in a domain develops over time with instruction and experience with tasks, and can thus guide teachers in choosing what to teach next and in giving students feedback on what to learn next.

Learning progressions vary in their design. Some are hypothesized incremental developments in a domain, often based on syntheses of research on children's conceptual knowledge, whereas others are empirically derived from authentic student performances (see Briggs et al., 2006). Some are designed to reference academic content standards, others are based on "big ideas" or concepts within a domain of learning, and still others are based on an analysis of the curriculum to be taught. Empirically based learning progressions trace the pathways of learning in a particular domain that students have demonstrated on tasks devised for that purpose; these tasks may also have been informed by research on children's conceptual knowledge of the domain (Confrey and Maloney, 2010). Progressions that are not empirically based rest on logical analyses (Ayala et al., 2008; Ruiz-Primo, 2016; Ruiz-Primo and Li, 2012).

If they are well devised and implemented, learning progressions can serve as a framework for integrating assessment (both summative and formative) with instruction and can take account of developmental theories of learning (see National Research Council, 2005; Wilson and Toyama, 2018). However, the course of a progression is not developmentally inevitable for every student. Instead, a learning progression offers a sequence of "expected tendencies" along a continuum of increasing expertise (Confrey and Maloney, 2010). While most students will follow the phases of a well-researched and well-designed progression, proponents of learning progressions point out that, because of individual variation in student development and instructional experience, not all students are expected to exhibit every growth point along the route to greater expertise (e.g., Heritage, 2008). Indeed, learning progressions describe typical development within a domain; they are not meant for students and teachers to follow in lockstep through each phase when students have already progressed to more sophisticated levels of understanding and skill.

Moreover, in contrast with state and professional organizations' standards for mathematics and science, many learning progressions or trajectories are not tied to specific grades or to a particular scope and sequence for learning. This is an important consideration for implementation with ELs, who may take different pathways to successful STEM content learning. The learning of ELs with strong literacy skills but still-emerging oral English skills, of newcomers with extensive schooling experience in their first language, or of ELs with interrupted schooling may all be better understood through a learning progression for a specific domain (e.g., proportional reasoning, force and motion) than through summative or formative assessments of STEM content tied to a curriculum aligned with grade-level academic standards that may be out of sync with an EL's school experience.

Unfortunately, much of the work on progressions to date has focused on science and mathematics learning in general education contexts rather than on ELs specifically. In those contexts, progressions have been articulated as a "promising approach" to evidence-based educational reform (see Corcoran, Mosher, and Rogat [2009] for discussion of science learning progressions and Daro, Mosher, and Corcoran [2011] for discussion of mathematics learning progressions).

Working in the area of mathematics, for example, Confrey (2012) and her colleagues have elaborated a learning trajectory for equipartitioning that captures students' initial informal thinking about fairly sharing collections of objects and single wholes as it evolves into the more complex understanding of sharing multiple wholes and "the equivalence of the operation a ÷ b, the quantity a/b, and the ratio a/b:1" (Wilson et al., 2014, p. 151). Subsequent professional learning with the equipartitioning trajectory made teachers aware of their students' mathematical thinking and where it fits on the trajectory. Becoming familiar with the trajectory also allowed teachers to increase their own knowledge of mathematics, although this gain was mediated by the teachers' prior mathematical knowledge for teaching (Wilson et al., 2014).
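As a hypothetical illustration of the equivalence quoted above (the numbers are chosen here for clarity and are not drawn from Confrey's or Wilson et al.'s materials), consider fairly sharing a = 3 pizzas among b = 4 people. The division operation, the size of each share, and the ratio of pizzas to people all express the same relationship:

$$
\underbrace{3 \div 4}_{\text{operation } a \div b}
\;=\;
\underbrace{\tfrac{3}{4}}_{\text{quantity } a/b \text{ per person}}
\qquad\text{and}\qquad
\underbrace{3 : 4}_{\text{pizzas : people}}
\;=\;
\underbrace{\tfrac{3}{4} : 1}_{\text{ratio } a/b : 1}
$$

Dividing both terms of the ratio 3 : 4 by 4 yields 3/4 : 1, that is, three-quarters of a pizza for each person.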

In a review of learning progressions in science learning (including example progressions for buoyancy, atomic molecular theory, and tracing carbon in ecosystems), Corcoran, Mosher, and Rogat (2009) explained that ideally learning progressions “are based on research about how students’ learning actually progresses—as opposed to selecting sequences of topics and learning experiences based only on logical analysis of current disciplinary knowledge and on personal experiences in teaching. These hypotheses are then tested empirically to assess how valid they are (Does the hypothesized sequence describe a path most students actually experience given appropriate instruction?)” (p. 8).

Apart from notable exceptions in a recent volume on STEM and ELs (Bailey, Maher, and Wilkinson, 2018), few studies have expressly included ELs in their descriptions of learning progression development and implementation. In that volume, Covitt and Anderson (2018) described a program of research in science that uses clinical interviews and written assignments with K–12 and university students to develop a comprehensive learning progression framework. Students' oral and written performances in the genres of scientific discussion, namely explanation, argument, and prediction, show trajectories from less sophisticated informal discourse in these genres to more sophisticated scientific discourse. The authors pointed out that ELs face the challenge of acquiring not only a new language for day-to-day purposes but also the characteristics of these different scientific genres. Also adopting a learning progression approach, in this case to describe alignment among science and literacy curriculum, instruction, and summative and formative assessment, Wilson and Toyama (2018) in the same volume articulated how the implementation of learning progressions with ELs might differ from that with non-ELs. First, ELs may follow the same learning progression as non-ELs but be systematically located at lower anchoring points, as measured by assessment items or tasks. Second, ELs may follow the same learning progression while assessment items behave differently for them (i.e., as revealed by differential item functioning [DIF] analysis of summative assessments). Third, ELs may follow a different progression from non-ELs. These hypotheses can guide future research on the creation and validation of learning progressions in STEM with ELs.