4

Research Tools, Methods, Infrastructure, and Facilities

In the past decade, significant advances have been made in the characterization (Section 4.1), synthesis and processing (Section 4.2), and computational (Section 4.3) capabilities available to materials researchers. These new tools have enabled previously unachievable insights into materials, especially when they are used in combination: for example, in situ measurement and control of novel synthetic strategies, or advanced data analytics applied simultaneously with advanced imaging diagnostics (Section 4.4). Development of these tools is a research frontier in its own right and merits further investment. This chapter highlights a number of methodological advances and the impact they have had on the materials community. One consequence of continually improving tools is the need for infrastructure reinvestment to ensure the availability of state-of-the-art tools (Section 4.5); novel modalities for such investment are discussed. Last, the current and emerging capabilities available at intermediate-scale facilities as well as national user facilities are highlighted (Section 4.5).

4.1 CHARACTERIZATION TOOLS

4.1.1 Electron Microscopy

Transmission electron microscopy (TEM) is a key technique in all areas of materials science because it helps reveal how a material’s internal structure is determined by synthesis and processing and how it correlates with its physical properties and performance. Imaging, diffraction, and spectroscopy can all be carried out across length scales ranging from interatomic distances to micrometers, often within a single transmission electron microscope and on the same sample.

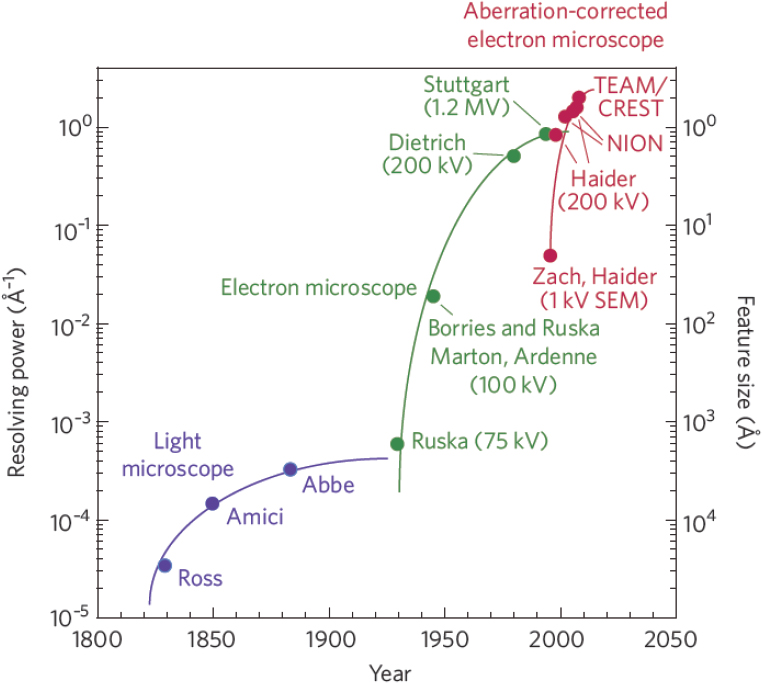

The past decade has seen tremendous advances in instrumentation, in particular spherical and chromatic aberration correctors, monochromators, and new detectors (see Figure 4.1). One example is the continued evolution of aberration-corrected microscopes: advanced aberration-corrected scanning transmission electron microscopes (STEMs) can now achieve 0.5 Å resolution in TEM and STEM modes along with 0.1 eV energy resolution.1,2 Other advances afforded by spherical aberration correction include picometer precision in determining atom column positions and dramatic improvements in the quality of atomic-scale electron energy-loss and energy-dispersive X-ray spectroscopic images, the latter in combination with new large solid-angle detectors. What sets the ultimate limit on the spatial resolution that further instrument improvements can achieve is a topic of ongoing research in the field. Advances in monochromators now allow an energy resolution of 30 meV (or better) in electron energy-loss spectroscopy, sufficient to study phonons. Faster cameras and new types of sample holders developed over the past decade provide new opportunities for in situ studies of a wide range of processes in materials. Important ongoing developments include high-speed pixel array detectors that record scattered electrons as a function of position in the detector plane. These detectors enable new imaging modes (e.g., differential phase contrast to image electric polarization) and, more generally, improve the visibility of features and the interpretability of electron microscope images.
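To see why an energy resolution of about 30 meV is enough for phonon spectroscopy, it helps to convert energy loss into the frequency and wavenumber units in which vibrational spectra are usually reported. The minimal sketch below relies only on the Planck relations E = hν and E = hck (k being the wavenumber) with CODATA values of h and hc; the function names are illustrative and not part of any microscope software.

```python
# Convert electron energy-loss values (meV) into phonon frequency units.
# Uses E = h*nu and E = h*c*k (k = wavenumber); constants are CODATA 2018 values.
PLANCK_EV_S = 4.135667696e-15   # Planck constant h, in eV*s
HC_EV_CM = 1.239841984e-4       # h*c, in eV*cm


def mev_to_thz(energy_mev: float) -> float:
    """Frequency nu = E/h, returned in terahertz."""
    return energy_mev * 1e-3 / PLANCK_EV_S / 1e12


def mev_to_wavenumber(energy_mev: float) -> float:
    """Wavenumber k = E/(h*c), returned in cm^-1."""
    return energy_mev * 1e-3 / HC_EV_CM


if __name__ == "__main__":
    for e in (100.0, 30.0):  # 0.1 eV and 30 meV energy resolution
        print(f"{e:6.1f} meV = {mev_to_thz(e):5.2f} THz = {mev_to_wavenumber(e):6.1f} cm^-1")
```

A 30 meV window corresponds to about 7.3 THz or 242 cm^-1, whereas 0.1 eV corresponds to about 24 THz or 807 cm^-1. Because phonon energies in many inorganic solids lie below roughly 200 meV, the narrower window separates vibrational features that the wider one would blur together.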

In parallel with instrumentation, electron microscopy techniques have also made substantial advances. One of the main advantages of electrons for imaging—namely, their strong interaction with matter—was long thought to pose a challenge in the quantitative interpretation of image intensities. Truly quantitative interpretation of image intensities was demonstrated in the past decade, opening up quantitative analysis of atomic resolution images not only in terms of the position, but also the content, of atomic columns in a sample. Another highly active research area is three-dimensional (3D) imaging (electron tomography)3 of crystalline samples. A number of different approaches are actively being developed in the field. Such approaches have been applied to analyze the 3D positions of atoms in nanoparticles. Tomography has also been used in diffraction contrast imaging of crystal defects and, in combination with in situ straining, has allowed for dynamic visualization of the interactions of dislocations with grain boundaries.4

___________________

1 S.J. Pennycook, 2017, The impact of STEM aberration correction on materials science, Ultramicroscopy 180(1):22-33.

2 Q.M. Ramasse, 2017, Twenty years after: How “Aberration correction in the STEM” truly placed a “A synchrotron in a microscope,” Ultramicroscopy 180(1):41-51.

3 E. Maire and P.J. Withers, 2014, Quantitative X-ray tomography, International Materials Reviews 59(1):1-43.

4 A. King, P. Reischig, S. Martin, J.F.B.D. Fonseca, M. Preuss, and W. Ludwig, 2010, “Grain Mapping by Diffraction Contrast Tomography: Extending the Technique to Subgrain Information,” in Challenges in Materials Science and Possibilities in 3D and 4D Characterization Techniques: Proceedings of the Risø International Symposium on Materials Science, hal-00531696, Risø National Laboratory for Sustainable Energy, Technical University of Denmark.

4.1.2 Atom Probe Tomography

Current and emerging research areas in the physical and life sciences increasingly require the capacity to quantitatively measure the structure and chemistry of materials at the atomic scale. This atomic-scale information enables nanoscience research across a wide range of disciplines including materials science and engineering, fundamental physics, chemical catalysis, nanoelectronics, and structural biology.

Atom probe tomography (APT) is the only currently available material analysis technique offering extensive capabilities for simultaneous 3D imaging and chemical composition measurements at the atomic scale. It provides 3D “maps” that show the position and elemental species of tens of millions of atoms from a given volume within a material, with a spatial resolution comparable to advanced electron microscopes (around 0.1-0.3 nm resolution in depth and 0.3-0.5 nm laterally) but with higher analytical sensitivity (<10 appm). The development of commercially available pulsed-laser atom probe systems now allows for the 3D analysis of composition and structure at atomic resolution in nonconductive systems such as ceramics, semiconductors, organics, glasses, oxide layers, and even biological materials in addition to metals and alloys. New focused ion beam (FIB) methods enable the fabrication of tailored site-specific samples for atomic-scale microscopy with much higher throughput than was previously possible.

The latest generation of atom probe instruments offers increased detection efficiency across a wide variety of metals, semiconductors, and insulators, raising the fraction of atoms detected from approximately 60 percent to approximately 80 percent. This allows greater sensitivity per unit volume, which is extremely useful for measuring trace quantities of materials, for example in geoscience applications such as mineral dating and in the measurement of nanoparticles or quantum devices. Faster and variable repetition rates dramatically increase the speed of data acquisition, and advanced laser control algorithms provide measurably improved sample yields. Because atom probe experiments are inherently low throughput, the rate at which experiments can be conducted limits the outcomes that can be achieved; improvements in acquisition speed and in the yield of successful data sets therefore promise an enormous step forward.
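The benefit of higher detection efficiency for trace analysis can be illustrated with simple counting statistics. The sketch below assumes that detected solute ions follow Poisson statistics (relative uncertainty 1/√N) and uses a hypothetical analyzed volume of 20 million atoms and a hypothetical solute level of 10 appm; neither number describes a specific instrument or experiment.

```python
import math


def detected_solute_ions(total_atoms: int, conc_appm: float, efficiency: float) -> float:
    """Expected number of detected solute ions in the analyzed volume."""
    return total_atoms * conc_appm * 1e-6 * efficiency


def relative_uncertainty(counts: float) -> float:
    """Relative 1-sigma counting error, assuming Poisson statistics."""
    return 1.0 / math.sqrt(counts)


if __name__ == "__main__":
    total_atoms = 20_000_000   # hypothetical: "tens of millions" of atoms
    conc_appm = 10.0           # hypothetical trace solute level
    for eff in (0.60, 0.80):   # detection efficiencies quoted in the text
        n = detected_solute_ions(total_atoms, conc_appm, eff)
        print(f"efficiency {eff:.0%}: {n:.0f} solute ions, "
              f"relative error {relative_uncertainty(n):.1%}")
```

In this example, going from 60 to 80 percent efficiency yields one-third more detected solute ions from the same volume (160 rather than 120) and lowers the counting error from about 9.1 to 7.9 percent; equivalently, a given precision is reached in a smaller analyzed volume.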

One opportunity is the development of new detectors or detector technologies that could push atom detection limits closer to 100 percent and raise the possibility of kinetic energy discrimination, which would permit deconvolution of overlapping isotopes. Another significant opportunity lies in multimodal instruments that incorporate either a transmission electron microscope or a scanning electron microscope (SEM) column directly into an APT system, or vice versa, to provide real-time or intermittent imaging or diffraction data. This could allow assessment of specimen shape and crystallography during APT analysis, which could significantly increase reconstruction accuracy for complex heterogeneous materials. Additional opportunities include automation of procedures such as specimen alignment and application-specific control, which could free the user from monitoring the acquisition and encourage optimized analysis conditions as different material types or interfaces are exposed during analysis.

There is currently significant ongoing discussion about the development of APT standards. Such standards could help establish unified protocols worldwide for APT sample preparation, data collection, data reconstruction and analysis, and reporting of results. Together, these developments could lay the foundation for a bright future for APT as a characterization capability that can bring not only materials scientists but also researchers from a variety of disciplines, including geology, biology, and solid-state materials, closer to the goal of determining the 3D composition, structure, and chemical state of a material atom by atom. Sophisticated data analysis tools are required to extend the reach of the technique beyond visualization and to extract the meaningful, quantitative information required for materials design (e.g., for thermodynamic calculations or grain boundary engineering). Intensive research in this area promises to open this powerful technique to a wide range of scientific research areas.5
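As a minimal example of moving from a reconstructed data set to quantitative information, the sketch below performs ranging: each detected ion's mass-to-charge ratio is assigned to a species using a range table, and the ranged counts are converted to a composition. The species labels A, B, and C and their m/z windows are hypothetical; practical range tables must also handle multiple isotopes and charge states, molecular ions, background subtraction, and peak overlaps.

```python
from collections import Counter

# Hypothetical range table: (species, low m/z, high m/z), in daltons
RANGES = [("A", 25.8, 26.2), ("B", 27.8, 28.2), ("C", 28.8, 29.2)]


def range_ions(mass_to_charge, ranges=RANGES):
    """Count ions per species; ions outside every window are 'unranged'."""
    counts = Counter()
    for mz in mass_to_charge:
        for species, low, high in ranges:
            if low <= mz <= high:
                counts[species] += 1
                break
        else:  # no window matched this ion
            counts["unranged"] += 1
    return counts


def composition_at_pct(counts):
    """Atomic percent of ranged species (assumes one atom per ion)."""
    ranged = {k: v for k, v in counts.items() if k != "unranged"}
    total = sum(ranged.values())
    return {k: 100.0 * v / total for k, v in ranged.items()}


if __name__ == "__main__":
    spectrum = [26.0] * 2 + [28.0] * 7 + [29.1] + [40.0]  # hypothetical ion list
    counts = range_ions(spectrum)
    print(counts)
    print(composition_at_pct(counts))
```

Unranged ions are excluded from the composition here, which is one common convention; whether to report them, and how to split overlapping peaks, are exactly the kinds of choices that shared APT standards would help make consistent.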

4.1.3 Scanning Probe Microscopies

Scanning probe microscopy (SPM) fundamentally relies on atomic interactions between a tip and a surface. In the two decades following its invention, advances focused on increasing spatial resolution, developing a quantitative theory of sample-tip interactions, and improving the robustness of signals. The first wave of SPM techniques extending the range of measurable properties beyond surface structure produced electric force microscopy, scanning Kelvin force microscopy, piezoresponse force microscopy, scanning capacitance microscopy, and others.

The past decade witnessed a dramatic expansion of the properties that could be probed by exploiting the frequency dependence of imposed and detected signals, achieving low detection limits, increasing scan and detection speed, and managing spatially and temporally resolved functional data sets. These advances allowed access to property functions rather than just constants at nm resolution, driving advances including the following:

- Not only capacitance and charge but also the real and imaginary components of the dielectric function in organic, inorganic, and biological systems, from impedance probes;

- Spatially resolved quantum efficiency of photo-generated charge in solar cell materials;

- Ultrasonic force detection for subsurface imaging;

- Electrochemical strain and ionic diffusion in battery materials;

- Dynamic processes including surface diffusion and real-time nucleation and growth in phase transformations;

- Ferroelectric switching and domain wall dynamics;

- Flexoelectricity of organic or inorganic materials in water or ambient conditions;

- Force modulation quantifying local elastic moduli and energy dissipation;

- Nuclear magnetic resonance, spin-resolved scanning tunneling microscopy (STM), and spectroscopy of magnetic atomic particles;

- Multi-tip STM quantifying transport and electronic structure of nanotubes, graphene, and two-dimensional (2D) materials; and

- Switching of polaritons in 2D and other materials with near-field infrared spectroscopy.

___________________

5 A. Devaraj, D.E. Perea, J. Liu, L.M. Gordon, T.J. Prosa, P. Parikh, D.R. Diercks, et al., 2018, Three-dimensional nanoscale characterisation of materials by atom probe tomography, International Materials Reviews 63(2):68-101.

Many of the recent SPM advances have been made routine and will continue to provide facile characterization to drive progress in materials research and application in the next decade.

Some challenges on the horizon require additional advances. The expansion of in situ/in operando measurements that approach realistic conditions in terms of chemical environment, temperature, and pressure would eliminate the need to extrapolate simplified measurements in order to reach the conditions for real applications. Opportunity space in this area includes battery materials, fuel cells, corrosion, catalysis, thin film growth, and nanoelectronics fabrication. Imaging rates are continuously increasing, but they are not routinely at video rates. As more scanning probes achieve this speed, the range of dynamic processes that can be quantified will increase.

While an optimal pathway is not yet clear, the potential implementation of quantum computation requires characterization of quantum mechanics-based behavior in a variety of settings: quantum optics at multiple frequencies, electronic transport in various materials configurations, and manipulation of matter at the atomic scale. The materials sets for this application range from graphene and other van der Waals materials, to topological insulators, to silicon qubits.

SPMs that use mechanical interactions for subsurface imaging can produce tomographic images, taking characterization to an additional dimension. Taking property tomography to the next level will advance understanding of thin film heterostructures, cells and biological materials, composites, and solar cells.

Many SPM techniques have evolved to probe structure and multiple property functions simultaneously, creating large data sets of interconnected information. Integrating the concepts of big data and machine learning could yield unexpected insight into complex behavior in functional materials.

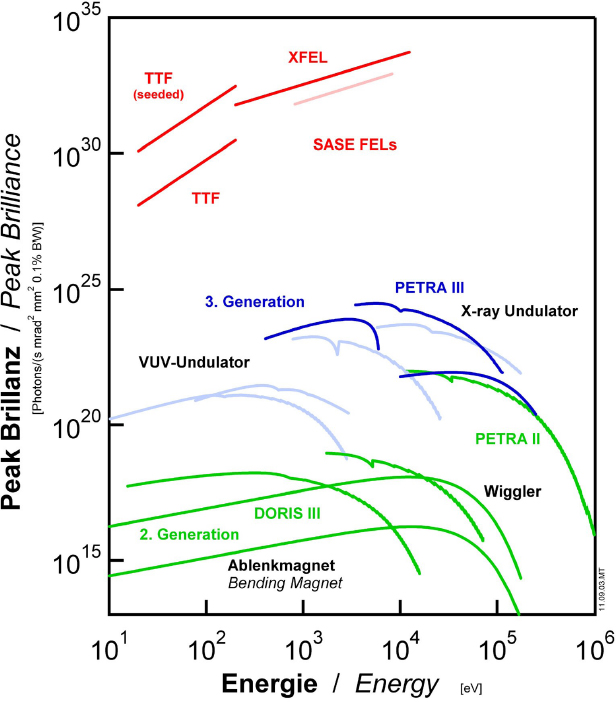

4.1.4 Time-Resolved, Especially Ultrafast Methods

In the past decade, significant advances in time-resolved, ultrafast methods have been achieved, with picosecond resolution now routine, femtosecond resolution common, and attosecond resolution emerging. These methods enable the study of atomic-scale dynamics of materials. Ultrafast, atomic-scale dynamical motions underlie the performance of all functional materials and devices, and the ability to resolve them opens previously untapped potential to enhance materials performance and create new functionality. Such methods are available through table-top systems, owing to advances in laser technology, as well as at a growing number of X-ray free electron laser user facilities, beginning with the Linac Coherent Light Source (LCLS) at Stanford Linear Accelerator Center (SLAC). Upgrades to LCLS are already under way to enhance its capabilities. More intense and coherent synchrotron sources are also becoming available, which will enable a wider variety of time-resolved experiments.

Ultrafast spectroscopy has been very useful for investigating ultrafast biological processes, such as photo-induced proton and electron transfer or excitation energy transfer, and these demonstrations have shown how time-resolved spectroscopic techniques can build understanding of such processes. In photosynthetic reaction centers, for example, all of the electron transfer steps, especially the initial ones, are ultrafast, and early femtosecond pump-probe experiments revealed the details of these steps.6 A more recent example of the impact of ultrafast methods is in atomic and ionic diffusion, which is fundamental to the functionality, synthesis, and stability of a wide range of materials. In particular, diffusion of electroactive ions in complex electrode materials is central to the function of fuel cells, batteries, and membranes used for desalination and separations. While much is known from first-principles modeling and simulation about how ions diffuse through a lattice,7 little is known experimentally about the atomic-scale processes involved in ion diffusion. Individual ion-hopping events between adjacent interstitial sites may occur on time scales approaching 100 fs and are associated with significant changes in the crystal strain field,8 which in turn can influence the dynamics of neighboring ions.

___________________

6 W. Holzapfel, U. Finkele, W. Kaiser, D. Oesterhelt, H. Scheer, H.U. Stilz, and W. Zinth, 1990, Initial electron-transfer in the reaction center from Rhodobacter sphaeroides, Proceedings of the National Academy of Sciences U.S.A. 87(13):5168-5172.

7 G. Sai Gautam, P. Canepa, A. Abdellahi, A. Urban, R. Malik, and G. Ceder, 2015, The intercalation phase diagram of Mg in V2O5 from first-principles, Chemistry of Materials 27(10):3733-3742.

The large response of many complex materials to electromagnetic radiation raises the possibility of ultrafast control of their properties through the application of short, intense photon pulses. This new field of materials research is showing promise in a number of areas, especially strongly correlated electron materials. One example is multiferroic materials, which hold promise for applications in which magnetic order is controlled by electric fields. However, the underlying physics and ultimate speed of magnetoelectric coupling remain largely unexplored. Ultrafast resonant X-ray diffraction has revealed the spin dynamics in multiferroic TbMnO3 coherently driven by an intense few-cycle terahertz light pulse tuned to resonance with an electromagnon mode.9 The results show that atomic-scale magnetic structures can be directly manipulated with the electric field of light on a subpicosecond time scale.

Applications of X rays to quantum materials research include ongoing efforts to understand high-temperature superconductivity, the recent detection and spatial mapping of spin currents using X-ray spectromicroscopy, and the direct demonstration and discovery of new electronic phases of topological quantum matter with angle-resolved photoelectron spectroscopy. Despite this important progress in understanding fundamental material physics, the direct impact of X-ray tools on quantum information technologies has been very low to date. This is because the X-ray tools presently lack the spatial resolution to probe quantum matter on the relevant length scales.

The combined spectral, spatial, and temporal sensitivity enabled by emerging high-brightness X-ray sources will dramatically change this situation. X-ray beams are currently typically 10-100 μm in size. In most cases, this is much larger than the underlying quantum coherence length, so any quantum information is averaged out. The new sources will enable powerful spectroscopic nanoprobes with few-nanometer spatial resolution. These nanoprobes will be able to measure the decoherence of wavefunctions, the influence of device morphology on emergent quantum phenomena, and the motion of quantum information at the heart of emerging quantum technologies. These experiments will investigate not only the spatial and temporal fluctuations of idealized, pure materials but also their manifestation in real-world devices.

___________________

8 A. Van der Ven, J. Bhattacharya, and A.A. Belak, 2012, Understanding Li diffusion in Li-intercalation compounds, Accounts of Chemical Research 46(5):1216-1225.

9 T. Kubacka, J.A. Johnson, M.C. Hoffmann, C. Vicario, S. De Jong, P. Beaud, S. Grübel, et al., 2014, Large-amplitude spin dynamics driven by a THz pulse in resonance with an electromagnon, Science 343(6177):1333-1336.

4.1.5 3D/4D Measurements, Including In Situ Methods

The past decade has seen tremendous growth in 3D and four-dimensional (4D) characterization capabilities that are specifically geared toward quantifying mesoscale microstructure and response under stimuli. This growth was made possible by significant advances in computer-based control, sensing, and data acquisition, and has resulted in novel experimental toolsets and methodologies that were not possible a decade ago. These advances have enabled a move from qualitative observations to digital data sets that can be mined, filtered, searched, quantified, and stored with increased fidelity and operability.

Mesoscale 3D and 4D characterization of materials with X rays can be divided into two subfields, tomography10 and diffraction-based microscopy. The former, commonly referred to as micro-CT, involves the collection of multiple radiographs with microscale resolution and computer-based reconstruction. Laboratory-based systems can readily produce 3D renderings of soft and lattice materials, but hard materials absorb X rays much more efficiently and require higher energy sources and in some cases synchrotron experiments. By comparison, diffraction-based 3D X-ray microscopy involves scanning a beam across a specimen and reconstructing the polycrystalline microstructure from reciprocal lattices. Variation in the placement of the detectors from near-field to far-field positions allows one to determine the orientation of individual voxels within the specimen, and close inspection of the far-field pattern facilitates the measurement of local elastic strains.
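The strain measurement mentioned above rests on Bragg's law: a local elastic strain changes the lattice-plane spacing d and therefore shifts the corresponding diffraction peak. The sketch below computes the normal strain along one diffraction vector from a measured peak position and a strain-free reference. It assumes a monochromatic beam, a first-order reflection, and a known reference angle, and the numbers in the example are hypothetical; diffraction-based 3D X-ray microscopy in practice fits a full strain tensor per grain from many reflections.

```python
import math


def d_spacing(wavelength_angstrom: float, two_theta_deg: float) -> float:
    """First-order Bragg's law, lambda = 2 d sin(theta), solved for d."""
    theta = math.radians(two_theta_deg / 2.0)
    return wavelength_angstrom / (2.0 * math.sin(theta))


def elastic_strain(two_theta_deg: float, two_theta_ref_deg: float,
                   wavelength_angstrom: float) -> float:
    """Normal strain along the diffraction vector: (d - d0) / d0."""
    d = d_spacing(wavelength_angstrom, two_theta_deg)
    d0 = d_spacing(wavelength_angstrom, two_theta_ref_deg)
    return (d - d0) / d0


if __name__ == "__main__":
    # Hypothetical hard X-ray case: lambda = 0.2 angstrom, and a peak that
    # shifts from 5.000 to 4.995 degrees 2-theta relative to the reference.
    eps = elastic_strain(4.995, 5.000, 0.2)
    print(f"strain = {eps:.2e}")
```

A shift to lower angle means a larger spacing and hence tensile strain (here about 1 × 10^-3); at the small angles typical of hard X rays, the strain is approximately equal to the fractional change in θ, which is why precise peak positions in the far-field pattern are needed.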

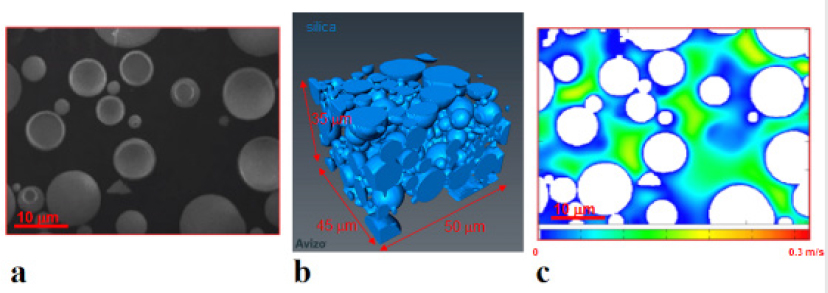

Serial sectioning combined with the acquisition of optical or electron micrographs and/or orientation and chemical maps has emerged as an alternative way of collecting and constructing 3D data sets. FIB-SEMs allow one to shape and extract materials with submicron precision (see, e.g., Figure 4.2), but the milling rates of conventional FIBs are prohibitively slow for mesoscale studies. Faster techniques have emerged and now allow for 3D characterization of volumes ranging from cubic microns to fractions of cubic millimeters. For hard materials, the emergent technologies include ultrashort (femtosecond) laser ablation, plasma-source FIB (P-FIB), broad ion beam sectioning, and mechanical polishing. The maturation of diamond-knife microtome sectioning systems that operate inside the SEM has enabled the structural biology community to gather enormous 3D data sets from SEM imaging at the mesoscale, for example by sectioning the entire brain of a larval zebrafish.11 Microtome sectioning is also emerging in the study of soft materials and metals.12

___________________

10 S.R. Stock, 2010, Recent advances in X-ray tomography applied to materials, International Materials Reviews 53(3):129-181.

11 D.G.C. Hildebrand, M. Cicconet, R.M. Torres, W. Choi, T.M. Quan, J. Moon, A.W. Wetzel, et al., 2017, Whole-brain serial-section electron microscopy in larval zebrafish, Nature 545(7654):345.

12 T. Hashimoto, G.E. Thompson, X. Zhou, and P.J. Withers, 2016, 3D imaging by serial block face scanning electron microscopy for materials science using ultramicrotomy, Ultramicroscopy 163:6-18.

After data collection, a number of steps are needed to extract meaningful information from a 3D data set. The typical data-processing flow involves registration, reconstruction, classification, and analysis. The raw data are often misaligned and distorted, and registration against fiducials must be employed to remove these distortions and misalignments. Reconstruction converts a series of 2D images or maps into a 3D volume of data. In the case of serial sections, whatever property was measured at the surface of a slice is generally assumed to be constant through the entire thickness of that slice. As long as the slice thickness is small compared to the length scale over which the measured features change, this is a reasonable assumption, but one that the practitioner should keep in mind when making measurements within the data. Classification involves unambiguous identification of features of interest within the volume. This can be trivial: for example, precipitates with large density differences can easily be identified by backscatter imaging or tomography, and grain boundaries can be highlighted by placing a threshold on the orientation gradient in an orientation map. In many cases, however, two regions of interest cannot easily be differentiated by their contrast. Human intelligence is extremely well optimized for pattern recognition and classification; it can tolerate a very high level of anomalies within an image and, through context and prior knowledge, readily infer and identify the features of interest within a set of images. Computer-based methods for determining regions of interest in most imaging modes lack this context, however, and segmentation of the volume is often much more difficult than expected.
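The reconstruction and classification steps can be sketched with NumPy. The example below stacks registered serial-section maps into a volume (each voxel inheriting the value measured at its slice surface, the assumption described above) and flags grain-boundary voxels by thresholding the orientation difference between face neighbors. For simplicity it represents orientation as a single angle in degrees; this is an assumption, since real orientation maps require misorientation calculations that account for crystal symmetry. The function names and the 5° threshold are hypothetical.

```python
import numpy as np


def stack_sections(sections, slice_thickness_um, pixel_size_um):
    """Reconstruct a 3D volume from registered 2D serial-section maps.

    Each voxel takes the value measured at the surface of its slice, i.e.,
    the property is assumed constant through the slice thickness.
    """
    volume = np.stack(sections, axis=0)  # shape: (n_slices, ny, nx)
    voxel_size_um = (slice_thickness_um, pixel_size_um, pixel_size_um)
    return volume, voxel_size_um


def boundary_voxels(orientation_deg, threshold_deg=5.0):
    """Mark voxels whose orientation differs from a face neighbor by > threshold."""
    mask = np.zeros(orientation_deg.shape, dtype=bool)
    for axis in range(orientation_deg.ndim):
        jump = np.abs(np.diff(orientation_deg, axis=axis)) > threshold_deg
        before = [slice(None)] * orientation_deg.ndim
        after = [slice(None)] * orientation_deg.ndim
        before[axis] = slice(None, -1)
        after[axis] = slice(1, None)
        mask[tuple(before)] |= jump  # voxel on the low-index side of the face
        mask[tuple(after)] |= jump   # voxel on the high-index side of the face
    return mask


if __name__ == "__main__":
    # Hypothetical data: two grains (10 and 40 degrees) split at column 4, 5 slices
    section = np.where(np.arange(8)[None, :] < 4, 10.0, 40.0) * np.ones((8, 8))
    volume, voxel = stack_sections([section] * 5, slice_thickness_um=1.0, pixel_size_um=0.5)
    gb = boundary_voxels(volume)
    print(volume.shape, voxel, int(gb.sum()))
```

The anisotropic voxel size returned alongside the volume makes the slice-thickness assumption explicit, so later measurements can be scaled correctly along the sectioning direction.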

The final step is analysis of the structures, which can involve a wide variety of measurements, including size, arrangement, shape metrics, and crystallographic orientation textures and gradients. One of the greatest difficulties in 3D data processing and analysis is the lack of well-developed software packages and tools for 3D analysis, so materials researchers must develop custom codes and pipelines. While custom processing tools can have certain advantages, these are counterbalanced by the current massive duplication of effort across separate groups, which is further compounded by the lack of standards for data descriptions and file formats that would make interoperable tools easier to develop. The development of the DREAM.3D software package13 has been extremely beneficial in reversing this trend. Initially developed for the analysis of 3D electron backscatter diffraction data, the platform continues to evolve to analyze multispectral data, providing a set of standards for data and processing formats and documentation.
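As an illustration of the analysis step, the sketch below labels 6-connected features in a segmented 3D volume and reports each feature's equivalent sphere diameter, d = (6V/π)^(1/3). It is a minimal pure-Python/NumPy sketch with hypothetical function names, not code taken from DREAM.3D or any other package.

```python
import math
from collections import deque

import numpy as np

FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def label_features(mask):
    """Label 6-connected features in a 3D boolean array by breadth-first search."""
    labels = np.zeros(mask.shape, dtype=np.int32)
    n_features = 0
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # already part of a labeled feature
        n_features += 1
        labels[start] = n_features
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in FACE_NEIGHBORS:
                nb = (z + dz, y + dy, x + dx)
                inside = all(0 <= nb[i] < mask.shape[i] for i in range(3))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = n_features
                    queue.append(nb)
    return labels, n_features


def equivalent_sphere_diameters(labels, n_features, voxel_volume_um3):
    """Diameter of a sphere with the same volume as each feature, in micrometers."""
    voxel_counts = np.bincount(labels.ravel(), minlength=n_features + 1)[1:]
    return [(6.0 * c * voxel_volume_um3 / math.pi) ** (1.0 / 3.0) for c in voxel_counts]


if __name__ == "__main__":
    mask = np.zeros((6, 6, 6), dtype=bool)
    mask[0:2, 0:2, 0:2] = True   # hypothetical 8-voxel precipitate
    mask[4, 4, 4] = True         # hypothetical 1-voxel precipitate
    labels, n = label_features(mask)
    print(n, equivalent_sphere_diameters(labels, n, voxel_volume_um3=1.0))
```

The choice of 6-connectivity (shared faces only) is itself an analysis decision: 18- or 26-connectivity would merge features that touch only along edges or corners, which can change measured size distributions.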

As an example of the success of these methods, 3D data sets of polycrystalline microstructures have been obtained for a variety of aerospace aluminum, titanium, and nickel alloys, and recent in situ 4D synchrotron experiments have elucidated the importance of residual stress and the redistribution of stresses during plastic deformation.14 A compact ultra-high-temperature tensile testing instrument, built for in situ X-ray microtomography using synchrotron radiation, has been used to obtain real-time X-ray microtomographic images of the failure mechanisms of ceramic-matrix composites under mechanical load at temperatures up to 2300°C in controlled environments.15 It should also be noted that X-ray diffraction studies of hard materials have historically been conducted at multiuser synchrotron facilities, but significantly enhanced laboratory-scale systems have emerged in recent years and hold promise for much more widespread availability and use of this technique.

At the same time, improvements in experimental tools and accompanying modeling of mechanical properties at nanoscale to micron-scale dimensions have enabled mechanical properties to be quantified at a variety of length scales down to ~100 nm, enabling quantitative study of micro- and mesoscale unit deformation processes with unprecedented spatial precision. Similarly, a variety of techniques have been reported that allow bulk physical properties such as thermal diffusivity to be accurately measured over micrometer-scale depths. This allows more comprehensive assessments of the mechanical and physical properties of surface-modified materials treated by case hardening or ion implantation/plasma processing. In addition, these technical advances allow improved quantitative evaluation of the physical and mechanical properties of the near-surface regions of ion-irradiated materials as a proxy for neutron irradiation conditions, which can be difficult, costly, and time-consuming (multiple-year experiments) to obtain. Specifically, in situ measurements and 3D X-ray characterization of individual grains in polycrystalline bulk materials have paved the way to a better understanding of microstructural heterogeneity and localized deformation in irradiated materials. Such information is critical to predicting material aging and degradation in nuclear power plants and to designing new radiation-resistant materials for next-generation nuclear reactors. For instance, researchers have studied in situ heterogeneous deformation dynamics in neutron-irradiated bulk materials using high-energy synchrotron X rays to capture the micro- and mesoscale physics and link it with the macroscale mechanical behavior of neutron-irradiated materials relevant to reactor design.

___________________

13 M. Groeber and M. Jackson, 2014, DREAM.3D: A digital representation environment for the analysis of microstructure in 3D, Integrating Materials and Manufacturing Innovation 3:5, doi:10.1186/2193-9772-3-5.

14 Various works of Carnegie Mellon University, the Air Force Research Laboratory, Los Alamos National Laboratory, and Japanese groups.

15 A. Haboub, H.A. Bale, J.R. Nasiatka, B.N. Cox, D.B. Marshall, R.O. Ritchie, and A.A. MacDowell, 2014, Tensile testing of materials at high temperatures above 1700°C with in situ synchrotron X-ray micro-tomography, Review of Scientific Instruments 85(8):083702.

Volumetric characterization at the meso- to macroscale via destructive and nondestructive experimental methods has clearly matured tremendously in the past decade, enabling workflows that provide high-fidelity microstructural information across multiple length scales in a diverse range of material systems, but many barriers still limit its use within the materials community to early adopters and domain specialists. For both destructive and nondestructive workflows, a sorely needed advance over the next decade is in situ analysis of the data during the collection procedure. The overwhelming majority of volumetric data collection is performed asynchronously and often independently of the analysis and ultimate use of the information. What is needed is “smart” data collection, in which data are refined in key regions to provide additional resolution where required, or additional modalities are added to provide other attributes of materials state. Dynamic sampling approaches, in which data are collected efficiently and iteratively based on prior training using machine learning methods, have appeared in the literature for 2D data collection using a single modality,16 and these methods will provide even greater benefit in 3D, where collection time grows with the number of voxels and therefore with the cube of the linear sampling density.
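The idea of refining data collection where it is needed can be illustrated with a simple quadtree refinement on a 2D grid: measure the corners of a block and subdivide only where the corner values disagree by more than a tolerance. This is a deliberately simple heuristic, not the machine-learning-based dynamic sampling cited in the text; `measure` stands in for a hypothetical instrument call, and the grid size is assumed to be a power of two.

```python
from typing import Callable, Dict, Tuple


def adaptive_sample(measure: Callable[[int, int], float], size: int,
                    tol: float = 0.1, min_block: int = 1) -> Dict[Tuple[int, int], float]:
    """Quadtree refinement over a (size+1) x (size+1) grid of probe positions.

    A block is subdivided only if its corner measurements differ by more than
    `tol`, so uniform regions are sampled coarsely and boundaries finely.
    """
    samples: Dict[Tuple[int, int], float] = {}

    def get(y: int, x: int) -> float:
        if (y, x) not in samples:  # each position is measured only once
            samples[(y, x)] = measure(y, x)
        return samples[(y, x)]

    def refine(y0: int, x0: int, n: int) -> None:
        corners = [get(y0, x0), get(y0, x0 + n), get(y0 + n, x0), get(y0 + n, x0 + n)]
        if n <= min_block or max(corners) - min(corners) <= tol:
            return
        half = n // 2
        for dy in (0, half):
            for dx in (0, half):
                refine(y0 + dy, x0 + dx, half)

    refine(0, 0, size)
    return samples


if __name__ == "__main__":
    # Hypothetical specimen: a phase boundary between columns 10 and 11
    def step_edge(y: int, x: int) -> float:
        return 1.0 if x > 10 else 0.0

    pts = adaptive_sample(step_edge, size=32)
    print(f"{len(pts)} of {33 * 33} positions measured")
```

Every position on both sides of the boundary is measured at full resolution, while the uniform regions are sampled only at coarse block corners. In 3D the same idea would subdivide cubes into octants, and the savings grow accordingly because the full grid grows with the cube of the sampling density.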

Other examples include the ability to detect anomalies and other rare features during data collection using lookup tables and dictionary-based approaches, which may allow analysis to be refined dynamically for unknown features based on prior knowledge of the expected structure. Furthermore, truly incorporating and integrating multimodal information that is “costly” (in instrumentation price, acquisition time, or surface preparation requirements) into 3D experiments can realistically be achieved only with such integrated approaches. Other needed advancements include the use of machine learning in the classification of microstructure; the development of efficient collection methods that more directly measure properties of interest; the collection and use of more signals, such as ultrasound and contact methods (nanoindents); a continued push toward larger volumes to generate better statistics; and closed-loop material removal for serial sectioning.

___________________

16 Charles A. Bouman, School of Electrical and Computer Engineering, Purdue University.

4.2 SYNTHESIS AND PROCESSING TOOLS

Given the increase in characterization tool capability and capacity over the past decade, there has been a corresponding need to advance synthesis and processing capabilities. These advanced tools not only facilitate accelerated materials discovery but also enable materials control with resolution consistent with advanced measurements. Often these synthetic advances are facilitated by advanced computational methods for predicting new materials.

4.2.1 Precision Synthesis

Full realization of the promise of precision materials synthesis (control of size, shape, composition, architecture, etc.) across length scales will transform materials science. Emerging examples of the possibilities and power of precision synthesis include molecular engineering of catalytic materials for selective reactivity, control of electrochemical energy conversion with atomically precise materials, new biodegradable polymers whose degradation rate is set through sequence control, precision placement of nitrogen-vacancy center defects in diamond to create materials for quantum information, and self-assembly of peptide amphiphiles into fibrous and micellar structures with extraordinary bioactivity. These examples are only the tip of an iceberg that is beginning to appear.

Realizing the full “iceberg” is a surpassingly ambitious objective, but it is an enormously promising direction for investment. It means not only putting every atom in a material where you want it, but also knowing where you want to put the atoms, and why, and being able to determine whether you have really put them there. Therefore, what at first sounds like a “synthesis” challenge is actually a challenge for synthetic materials chemistry, theory, simulation, and instrumental characterization. The “across length scales” part adds several new aspects to this challenge. Researchers need to gain the understanding necessary to predict how precisely controlled structure at the atomic and molecular level plays out in macroscopic behavior and properties. They also need to understand what level of precision is needed to achieve particular goals. Furthermore, as the Department of Energy (DOE) Basic Energy Sciences Basic Research Needs report on synthesis science points out, “The challenge of mastering hierarchy [meaning across length scales] crosscuts all classes of syntheses. In interfacial, supramolecular,

biomolecular, and hybrid matter, hierarchy is the characteristic feature that leads to function.”17 This goal will require different synthesis techniques and chemical tools, each operating at a different length scale. For example, covalent chemistry would be used to make the building blocks, followed by noncovalent assembly of the building blocks into larger structures. Such research is already being done, but without the real-time instrumental monitoring and control needed to achieve optimal results. Atomic layer deposition and molecular beam epitaxy (MBE) are atomic-level equivalents of additive manufacturing (AM) at the macroscale.

Hierarchical synthesis processes that have not traditionally been viewed as kinetic processes, such as self-assembly, should be considered as such; the fact that they are driven by thermodynamics does not mean they lack kinetic trajectories (often sluggish ones). Kinetically stabilized and metastable phases may be the desired product. Because a wide variety of material types will be incorporated into one finished product, mastering the creation of these materials will require broader understanding or, more likely, collaboration among synthesizers.

4.2.2 3D Structures from DNA Building Blocks

DNA origami is the folding of a long strand of DNA, the scaffold, into nanoscale objects through the use of short DNA oligonucleotides, the so-called staple strands. The past decade has seen significant advances in the design toolbox for building 3D structures; each development has increased the number of degrees of freedom, enabling construction of more intricate shapes. The first approach to 3D structures bundled DNA helices on a honeycomb lattice. Curved objects were then achieved by inserting or deleting base pairs between crossovers in selected helices of the bundle. Another approach used curved rings of helices to control the shape. Beyond the bundled helical structures came wire-frame designs in either a gridiron pattern or a triangular mesh. This advance has important implications for biomedical applications, because structures created through these approaches offer higher resistance to cation depletion under physiological conditions. The advances in scaffold structures have been complemented by design software packages such as caDNAno, DAEDALUS, and vHelix.
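The basic design rule behind staple strands is Watson–Crick complementarity: each portion of a staple is the reverse complement of the scaffold segment it binds, and a staple that binds two distant segments pins them together (the jump between segments corresponds to a crossover between helices). The sketch below illustrates only that rule, with a toy scaffold and hypothetical segment coordinates; design packages such as those named above also handle lattice geometry, crossover placement, and many other constraints omitted here.

```python
from typing import List, Tuple

COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}

def reverse_complement(seq: str) -> str:
    """Sequence (5'->3') of the strand that pairs antiparallel with seq."""
    return "".join(COMPLEMENT[base] for base in reversed(seq.upper()))

def staple_sequence(scaffold: str, segments: List[Tuple[int, int]]) -> str:
    """Build a staple that binds the given scaffold segments.

    segments: half-open (start, end) index ranges on the scaffold, listed in
    the order the staple visits them starting from its own 5' end.
    """
    return "".join(reverse_complement(scaffold[s:e]) for s, e in segments)

if __name__ == "__main__":
    # Toy 24-nt "scaffold"; real scaffolds are thousands of nucleotides long.
    scaffold = "ATGCGTACGTTAGCCATGGCATCA"
    # Hypothetical staple joining scaffold positions 16-24 and 0-8.
    print(staple_sequence(scaffold, [(16, 24), (0, 8)]))
```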

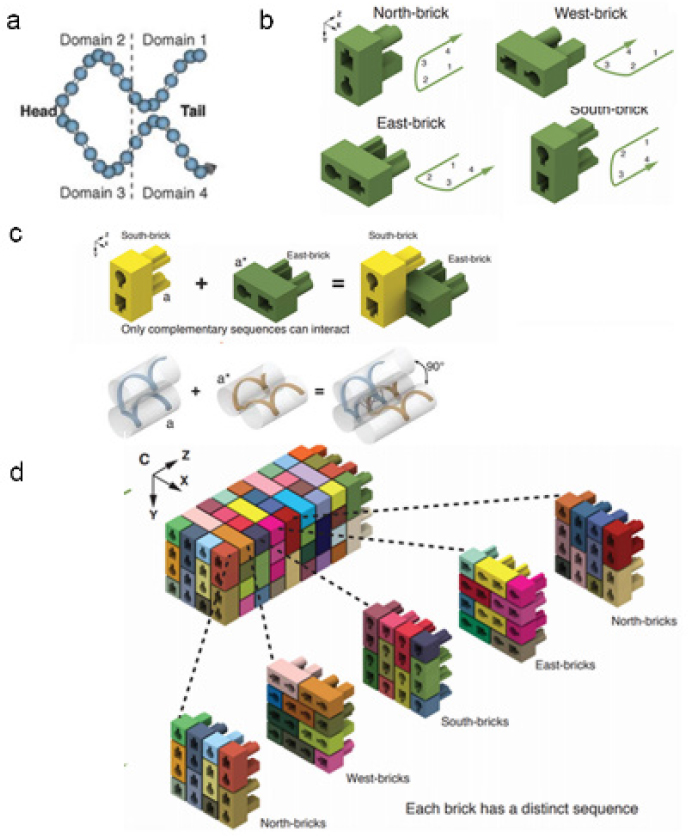

DNA LEGO-like bricks (Figure 4.3) were developed in the past decade.18 Each brick is a single short DNA strand (32 nucleotides in the original design) comprising four 8-nucleotide binding domains: two head and two tail domains. Using

___________________

17 See U.S. Department of Energy, 2017, Basic Research Needs Workshop on Synthesis Science for Energy Relevant Technology, https://science.energy.gov/~/media/bes/pdf/reports/2017/BRN_SS_Rpt_web.pdf, Figure 6.

18 Y. Ke, L.L. Ong, W.M. Shih, and P. Yin, 2012, Three-dimensional structures self-assembled from DNA bricks, Science 338:1177-1183, doi: 10.1126/science.1227268.

a selection of bricks from a menu of preformed motifs, it is possible to self-assemble almost arbitrarily complex 3D structures without the need to use a scaffold structure.
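How bricks find their neighbors can be illustrated by checking domain complementarity: two bricks can hybridize where a domain of one is the reverse complement of a domain of the other. The sketch assumes each brick is split into four equal-length domains and, for brevity, uses hypothetical 4-nt domains and made-up brick names rather than the 8-nt domains of the original design; it only reports which domain pairs can bind and does not model the resulting 3D geometry.

```python
from itertools import combinations
from typing import Dict, List, Tuple

COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}

def reverse_complement(seq: str) -> str:
    return "".join(COMPLEMENT[b] for b in reversed(seq))

def domains(brick: str, n_domains: int = 4) -> List[str]:
    """Split a brick strand into equal-length binding domains (5'->3')."""
    size = len(brick) // n_domains
    return [brick[i * size:(i + 1) * size] for i in range(n_domains)]

def binding_pairs(bricks: Dict[str, str]) -> List[Tuple[str, int, str, int]]:
    """(brick, domain index, brick, domain index) for every fully complementary pair."""
    hits = []
    for (name_a, a), (name_b, b) in combinations(bricks.items(), 2):
        for i, da in enumerate(domains(a)):
            for j, db in enumerate(domains(b)):
                if da == reverse_complement(db):
                    hits.append((name_a, i, name_b, j))
    return hits

if __name__ == "__main__":
    bricks = {"b1": "AACC" "GGTA" "TTTG" "CAGA",
              "b2": "CAAA" "ATCG" "TACC" "GGAT",
              "b3": "TTTT" "GGGG" "ACAC" "TGTG"}  # b3 has no partner here
    print(binding_pairs(bricks))  # prints [('b1', 1, 'b2', 2), ('b1', 2, 'b2', 0)]
```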

The origami structures have been used as templates for producing Au nanoparticles, Au nanorods, and quantum dots; as molds in which to synthesize nanoparticles; as meshes for microlithography; as biosensors; and for drug delivery. In addition, active systems such as walkers, machines, and factories have already been demonstrated on origami platforms.19 One area that has received little attention is the possibility that DNA used as a structural material might unintentionally behave in a signaling fashion.

Origami has also been demonstrated with long-chain RNA scaffolds, first using DNA staples and later using RNA staples.

4.2.3 2D Shape-Changing Materials

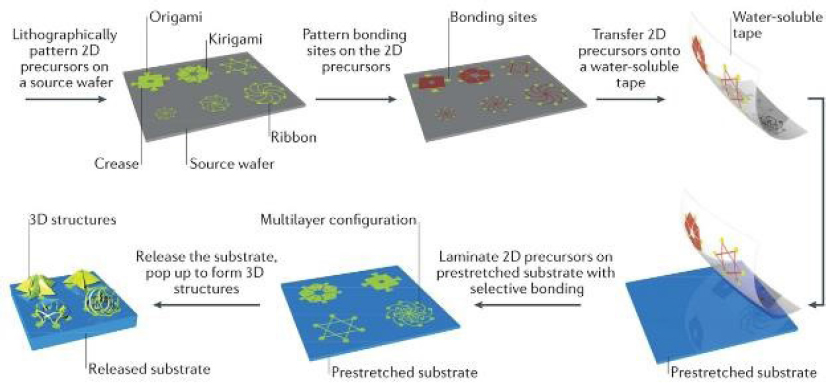

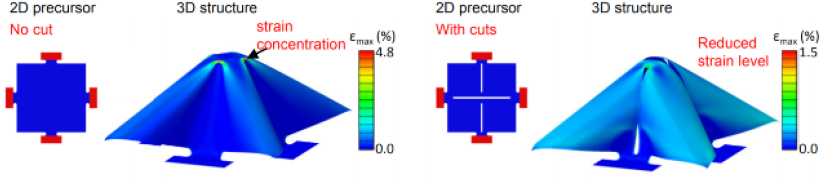

A class of reconfigurable metamaterials that has advanced considerably over the past decade is based on 2D films that fold or bend into predetermined 3D structures. These materials have potential applications in biomedical devices (e.g., self-deployable stents), energy storage (e.g., stretchable Li-ion batteries), robotics, and architecture (e.g., smart window coverings to control light reflection). Considerable progress has been made in fabricating 3D structures at micro- and nanometer length scales,20 including advances in AM techniques and in inks based on metals, metal oxides, biomaterials, and biocompatible polymers. Other approaches to 3D nanostructures exploit the bending and folding of thin plates by residual stresses, capillary effects, or self-actuating materials. It is also possible to generate 3D structures that respond to an external stimulus such as heat or water. Origami (folding) and kirigami (cutting and folding)-inspired designs have expanded the range of structures that can be formed and have broadened the materials space to include materials important for future advanced technologies. A mechanically guided assembly scheme is shown schematically in Figure 4.4. Forming robust 3D structures requires minimizing both the overall strain and strain concentrations. Strain minimization has been achieved with the help of finite element modeling, which has shown that the length and width of the kirigami cuts are important: longer cuts are better than shorter ones, as stress concentrations are avoided, and wider

___________________

19 H. Gu, J. Chao, S.-J. Xiao, and N.C. Seeman, 2010, A proximity-based programmable DNA nanoscale assembly line, Nature 465:202-205, doi: 10.1038/nature09026.

20 Y. Zhang, F. Zhang, Z. Yan, Q. Ma, X. Li, Y. Huang, and J.A. Rogers, 2017, Printing, folding and assembly methods for forming 3D mesostructures in advanced materials, Nature Reviews Materials 2:17019.

cuts better than narrower ones, as the maximum strain is reduced. Figure 4.5 shows the reduction in strain achieved by introducing kirigami cuts in 2D square silicon membranes.21 Finite element modeling of this kind is advancing the design of robust 3D structures.

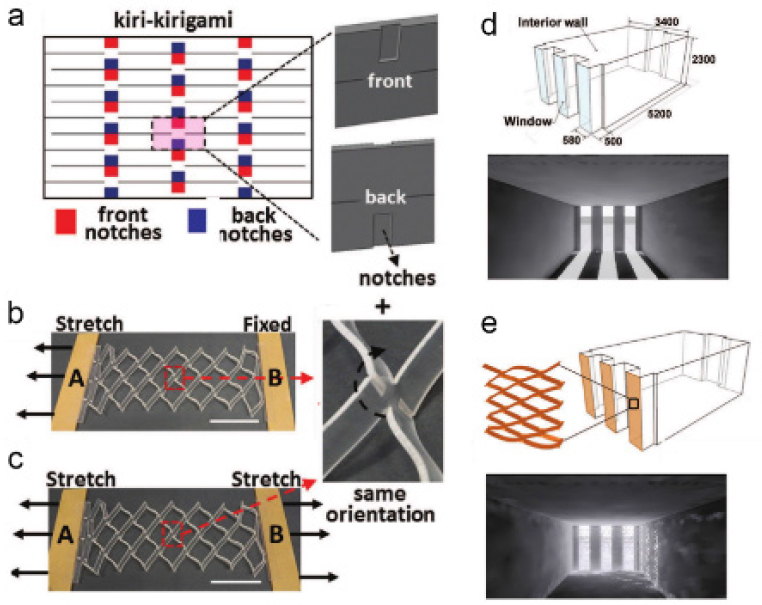

One application of kirigami structures has been as window blinds that control the sunlight entering a room, creating an adaptive, energy-saving structure. To control the tilt of the louvres, a lattice of linear cuts in an elastomeric sheet is augmented by notches on one side or the other of the sheet, a technique referred to as kiri-kirigami to indicate cuts on cuts.22 When the sheet is stretched, the cuts open into diamond-shaped holes bounded by narrow segments that undergo out-of-plane buckling and twisting, with directions controlled by the placement of the notches. Importantly, in this design the direction of twist of the joints is independent of the loading direction. The reflectance of the stretched sheet depends on the direction of the twist, which can be controlled passively through mechanical stretching or by placing cuts on the front and back of the kiri-kirigami structure. Figure 4.6(a) shows a schematic illustration of the effect of loading in one and two

___________________

21 Y. Zhang, Z. Yan, K. Nan, D. Xiao, Y. Liu, H. Luan, H. Fu, et al., 2015, A mechanically driven form of kirigami as a route to 3D mesostructures in micro/nanomembranes, Proceedings of the National Academy of Sciences U.S.A. 112(38):11757-11764.

22 Y. Tang, G. Lin, S. Yang, Y.K. Yi, and R. Kamien, 2017, Programmable kiri-kirigami metamaterials, Advanced Materials 29:1604262.

directions on the distortion of the unit cell, showing that the orientation of the twist is always the same; the figure also includes images of light entering a room through windows without and with the kiri-kirigami louvres. Window treatments based on this design have been tested to assess their ability to control light.

These experimental realizations of origami and kirigami structures have been accompanied by sophisticated theory, for example, work on the special case in which kirigami cuts are constrained by the geometry of a honeycomb lattice and are characterized by the disclinations and dislocations they create in that lattice.23 One outcome of this work is an algorithm24 for arranging kirigami cuts to produce any topographic shape. Similarly, an algorithm has been developed that yields the folding pattern to produce any polyhedral shape with the fewest folds.

4.2.4 Additive Manufacturing

Several fabrication innovations have transformed the approach to producing complex components. During the past decade, AM of metallic components has grown from a fledgling research effort into a high-visibility commercial activity, with particularly high impact for aerospace and medical implant applications. AM builds complex components directly from computer-aided design models by depositing material layer by layer or point by point. An example is provided in Box 2.6. The materials palette for AM extends beyond metals to polymers, ceramics, composites, and biomaterials, employing a variety of assembly techniques. Apartment buildings have even been additively manufactured in China.25 As indicators of the increased interest in AM, the current industrial growth rate is approximately 30 percent per year,26 and the number of peer-reviewed publications per year more than quadrupled between 2006 and 2016.
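The two growth indicators can be put on a common footing with the compound annual growth rate, CAGR = (end/start)^(1/years) − 1, and the corresponding doubling time, ln 2 / ln(1 + r). The snippet below is simple arithmetic on the figures quoted in the paragraph above and adds no new data; treating "more than quadrupled" as exactly a factor of 4 gives a lower bound on the publication growth rate.

```python
import math

def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that takes start to end over the given years."""
    return (end / start) ** (1.0 / years) - 1.0

def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2.0) / math.log(1.0 + rate)

if __name__ == "__main__":
    # Publications: a factor of at least 4 over 2006-2016 (10 years).
    print(f"minimum publication growth: {cagr(1.0, 4.0, 10):.1%} per year")  # 14.9%
    # Industry: roughly 30 percent per year.
    print(f"doubling time at 30%/yr: {doubling_time(0.30):.1f} years")      # 2.6
```

In other words, at about 30 percent per year the industry doubles roughly every two and a half years, about twice as fast as the minimum growth rate implied for the publication count.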

In pursuit of AM for mainstream applications, four goals support more rapid and widespread usage of this technology:

- Enhancing the performance of AM components through materials development;

___________________

23 See L. Hardesty, 2017, “Origami Anything: New Algorithm Generates Practical Paper-Folding Patterns to Produce Any 3-D Structure,” MIT News, June 21, http://news.mit.edu/2017/algorithm-origami-patterns-any-3-D-structure-0622.

24 C. Modes and M. Warner, 2016, Shape-programmable materials, Physics Today 69(1):32-38.

25 See 3ders.org, 2015, “WinSun China Builds World’s First 3D Printed Villa and Tallest 3D Printed Apartment Building,” January 18, http://www.3ders.org/articles/20150118-winsun-builds-worldfirst-3d-printed-villa-and-tallest-3d-printed-building-in-china.html.

26 D.L. Bourell, 2016, Perspectives on additive manufacturing, Annual Review of Materials Research 46:1-18.

- Developing new methodologies for certifying additive components for use;

- Developing integrated computational materials engineering capabilities together with high-throughput characterization techniques to accelerate the development to deployment cycle of AM; and

- Developing new processes and machines with increased deposition rates, larger build volumes, and improved mechanical properties.

Materials development, certification, and integrated characterization and modeling (goals 1-3) represent an important investment opportunity because of the potential to enable disruptive change in manufacturing.

AM is still a very small market, at approximately $4 billion worldwide and growing at approximately 34 percent per year (compared to $11 trillion per year in worldwide manufacturing), but it is estimated to reach $33 billion by 2023.27 AM systems are limited by the costs of materials, rates of fabrication, reliability of processes, integration with other processes, and the constraints of layer-by-layer deposition. Historically, AM has been limited to small build envelopes at low deposition rates, using a limited set of materials sold by the equipment manufacturers. Next-generation systems explore controls, hardware, feedstock condition, and software to develop new machines with high deposition rates, large build volumes, and improved properties using available low-cost feedstocks. As systems improve, additional applications become possible, increasing the number of companies interested in the technology and its potential impact. New control and robotic systems are driving advances in AM that could enable out-of-plane deposition, resulting in true AM, as well as the deposition of multiple materials in the same machine. A wide range of feedstocks, including irregular particulate morphologies and other product forms, could lower the overall cost of materials. Development of smart or enhanced feed mechanisms will also increase reliability and reduce overall cost. All of these enhancements aim to transform AM from a 1- to 2-sigma process (30 to 70 percent success rate) to a 6-sigma process (3.4 failures per million).
Achieving this goal will require a specific focus on identifying design rules for new processes; developing robust tool paths via advanced slicing software to minimize residual stress and part defects; advancing machine design, process monitoring, and control to improve the reliability and repeatability of deposition; and implementing layer-by-layer inspection and adaptive process control to correct part defects during manufacturing. The improvements in AM processes and machines will proceed in parallel with

___________________

27 See Markets and Markets, “3D Printing Market by Offering (Printer, Material, Software, Service), Process (Binder Jetting, Direct Energy Deposition, Material Extrusion, Material Jetting, Powder Bed Fusion), Application, Vertical, Technology, and Geography—Global Forecast to 2024,” https://www.marketsandmarkets.com/Market-Reports/3d-printing-market-1276.html, accessed May 12, 2018.

the materials developments that expand the potential uses of the technology. One example of a needed improvement is better control of surface finish.
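The sigma figures quoted above match the usual Six Sigma convention, in which the long-term process mean is assumed to drift by 1.5 standard deviations toward a specification limit, so a "k-sigma" process has a one-sided defect fraction of 1 − Φ(k − 1.5), where Φ is the standard normal cumulative distribution function. The sketch below assumes that convention and reproduces the roughly 30 percent, 70 percent, and 3.4-per-million figures.

```python
import math

SHIFT = 1.5  # conventional long-term drift of the mean, in standard deviations

def defect_fraction(sigma_level: float) -> float:
    """One-sided tail probability beyond the specification limit for a
    k-sigma process whose mean has drifted 1.5 sigma toward that limit."""
    z = sigma_level - SHIFT
    # 1 - Phi(z) = erfc(z / sqrt(2)) / 2; erfc avoids cancellation in the far tail.
    return 0.5 * math.erfc(z / math.sqrt(2.0))

if __name__ == "__main__":
    for k in (1, 2, 6):
        fail = defect_fraction(k)
        print(f"{k}-sigma: success rate {1 - fail:.1%}, "
              f"{fail * 1e6:,.1f} failures per million")
```

The calculation makes the size of the required improvement concrete: moving from 2-sigma to 6-sigma means cutting the failure rate by a factor of roughly 100,000.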

4.2.5 Cold Gas Dynamic Spraying

Cold gas dynamic spraying, commonly referred to as “cold spray,” is a solid-state material deposition process in which powder particles are sprayed at high velocity onto a substrate. The powder particles deform plastically upon impact, creating a metallurgical bond between the powder and the substrate. The process uses an accelerated gas stream (N2, He, or air) to propel particles at speeds of 300 to 1,200 m/s toward a substrate, resulting in solid-state particle consolidation and rapid buildup of material. Although cold spray is fundamentally a solid-state process, it is performed over a range of temperatures that can exceed 50 percent of the melting point of the material. The advantages of cold spray are low thermal impact on the substrate, no combustion fuels or gases, no melting of the coating material, and a resulting coating with high density and moderate compressive residual stresses. More importantly, cold spray can be used for additive repair in a field environment.
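A back-of-the-envelope energy balance puts the quoted particle velocities in context. The kinetic energy per unit mass of a particle is v²/2; the sketch compares it with the energy needed to heat a particle from room temperature to its melting point and then melt it. It assumes approximate handbook values for copper and holds the specific heat at its room-temperature value; because only part of the kinetic energy ends up as heat in the particle and deformation is far from uniform, the ratio is an order-of-magnitude guide, not a prediction of particle temperature.

```python
def specific_kinetic_energy(v: float) -> float:
    """Kinetic energy per kilogram (J/kg) of a particle moving at v (m/s)."""
    return 0.5 * v * v

def energy_to_melt(cp: float, t_start: float, t_melt: float, latent: float) -> float:
    """J/kg to heat from t_start to t_melt at constant cp and then melt."""
    return cp * (t_melt - t_start) + latent

# Approximate values for copper (assumed for illustration).
CP_CU = 385.0        # specific heat, J/(kg K), room-temperature value
T_MELT_CU = 1358.0   # melting point, K
LATENT_CU = 2.09e5   # latent heat of fusion, J/kg

if __name__ == "__main__":
    e_melt = energy_to_melt(CP_CU, 293.0, T_MELT_CU, LATENT_CU)
    for v in (300.0, 600.0, 1200.0):
        ratio = specific_kinetic_energy(v) / e_melt
        print(f"{v:6.0f} m/s: kinetic energy = {ratio:.2f} x energy to melt")
```

Across the quoted velocity range the ratio rises from below 0.1 to slightly above 1, since kinetic energy scales with the square of velocity.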

As cold spray deposition technology rapidly advances, many critical and intriguing scientific questions have been uncovered and remain to be answered. The physics of a single-particle impact is still very much an open question. New fundamental experiments that combine laser-shock acceleration with ultra-high-speed cameras are allowing single-particle impact dynamics to be imaged and measured. These measurements provide crucial experimental data to support, inform, and validate the many theoretical models that have been, and are being, developed to describe the cold spray deposition process. The sudden impact of metallic particles also reduces the grain (crystallite) size by an order of magnitude, but the mechanism by which this transformation takes place in less than 100 ns is still unknown. To address this, the community needs more quantitative information about the micro- and nanostructures generated by the cold spray process.
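An order-of-magnitude estimate shows why the microstructural transformation must occur within tens of nanoseconds. Taking the characteristic deformation time as roughly the particle diameter divided by the impact velocity, and the average strain rate as its inverse, gives the values below. The 20 µm diameter is an assumed, representative powder size, and the estimate ignores the details of particle deceleration.

```python
def impact_time(diameter_m: float, velocity_m_s: float) -> float:
    """Characteristic deformation time (s): a distance of order d covered at speed v."""
    return diameter_m / velocity_m_s

def mean_strain_rate(diameter_m: float, velocity_m_s: float) -> float:
    """Order-of-magnitude average strain rate (1/s), the inverse of impact_time."""
    return velocity_m_s / diameter_m

if __name__ == "__main__":
    d = 20e-6  # assumed representative particle diameter, m
    for v in (300.0, 600.0, 1200.0):
        print(f"{v:6.0f} m/s: ~{impact_time(d, v) * 1e9:.0f} ns, "
              f"~{mean_strain_rate(d, v):.0e} 1/s")
```

For these assumptions the deformation lasts roughly 17 to 67 ns at strain rates of order 10^7 per second, consistent with the sub-100 ns timescale noted above.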

4.2.6 Nonequilibrium Processing

Materials made either by nature or by manufacturing are seldom the result of equilibrium processes. Among the exceptions are crystals and alloys that are thermodynamically stable and formed by slow cooling. Living systems make their wide variety of functional materials from proteins. They continuously produce these proteins through an active process using ribosomes and genetic information to assemble specified amino acids. More than half of their metabolic energy is consumed in protein production. Manufacturing processes use energy to heat,

cool, mix, stress, chemically change, and otherwise manipulate constituents into materials with desired properties that are not found in thermodynamically stable form. Quenching and deposition techniques such as MBE produce metastable materials.

Although researchers are becoming progressively more sophisticated in using far-from-equilibrium processing, from semiconductors to AM, the underlying science is lacking. Thermodynamics and statistical mechanics provide rules for averaging the well-known dynamics of classical or quantum states to obtain the macroscopic properties of materials and many-particle systems in equilibrium. These rest on a solid foundation of laws that are universal, easily applied, and well tested over the past two centuries. The answers result from extremizing free energies and related state functions. For nonequilibrium processes there are no comparable universal governing principles, nor a set of free-energy-like functions to extremize; for example, in some instances dissipation is maximized, and in others it is minimized. The need for a deeper understanding of nonequilibrium phenomena is nowhere greater than in materials science.

Recent advances, such as AM, present new challenges and opportunities for using and understanding nonequilibrium processes. “Laser-based AM processes generally have a complex nonequilibrium physical and chemical metallurgical nature, which is material and process dependent. The influence of material characteristics and processing conditions on metallurgical mechanisms and resultant microstructural and mechanical properties of AM processed components needs to be clarified.”28 The extreme temperature, solvent, or stress gradients imposed by such processing will provide insight into far-from-equilibrium reactions and the properties of the metastable materials they produce.

Levitation is both a synthesis method and a characterization method, because it removes the influence of a container on the measurement. Initial work in levitation focused on metallic glasses, low-melting-temperature materials, and the creation of high-entropy and nonequilibrium structures. Levitation methods have since expanded to include acoustic, aerodynamic, electromagnetic, and electrostatic levitation, with the choice depending on the heating source and the resulting sample size. Both X-ray and neutron characterization can be applied to levitated materials. Samples produced by levitation can be far from equilibrium, and one must consider whether evaporation plays a role during their characterization. These techniques are helping to elucidate the structural pathways of materials cooled from high temperature along a variety of routes. Characterization of materials synthesized without a container is extremely helpful for understanding and designing novel materials.

___________________

28 D.D. Gu, W. Meiners, K. Wissenbach, and R. Poprawe, 2012, Laser additive manufacturing of metallic components: Materials, processes and mechanisms, International Materials Reviews 57(3):133-164.

4.2.7 Single Crystal Growth

Advances in fundamental and applied materials research have been driven by the development of numerous families of crystalline materials with widely varied functionalities.29 The two main paths involve synthesis of (1) high-purity but chemically simple and abundant materials and (2) systems with complex stoichiometries or structures that feature multiple tunable characteristic energy scales. Examples in the first category include germanium, silicon, and gallium arsenide, while examples in the second include strong rare-earth permanent magnets, high-temperature superconductors, and many other quantum materials. Despite the fundamental importance of crystal growth for many scientific and commercial purposes, it very often remains more of an art than a science. Furthermore, many routinely used synthesis methods have evolved only marginally during the past several decades. General categories include (1) solid-to-solid reactions, (2) liquid-to-solid reactions, and (3) vapor-to-solid reactions. The focus here is on methods to produce bulk crystals, but the arguments below apply to thin films as well. For example, (1) includes solid-state reaction and spark plasma sintering; (2) includes molten flux growth, arc- and induction-furnace melting, Czochralski crystal pulling, and Bridgman growth; and (3) includes vapor transport (e.g., using iodine) and thin film techniques. For a detailed review of most such methods, see B.R. Pamplin’s book, first published in 1975.30 Note that this and other detailed accounts of these synthesis methods were presented at least four decades ago, and in many ways the same methods remain the state of the art. This emphasizes the difficulty of developing new strategies but also highlights that this is an area with significant opportunity for transformative advances.

Despite their usefulness, most crystal growth methods are limited by several practical problems that can now be addressed. First, the progression of events during crystal formation is rarely understood quantitatively. Instead, most processes are developed by trial and error, and even the philosophy of synthesis is guided by the qualitative experience of individuals or isolated groups, that is, through colloquial methodology. To advance beyond these limitations, it is necessary to develop routine methods that provide detailed knowledge of the processes occurring during a reaction, as well as active modeling that allows a growth to be modified in real time. There have been limited recent attempts to do this, for example, observing a crystal growth process through neutron scattering, but this field is wide open for advances. Also important is that most

___________________

29 See National Research Council, 2009, Frontiers in Crystalline Matter: From Discovery to Technology, The National Academies Press, Washington, D.C., https://doi.org/10.17226/12640.

30 B.R. Pamplin, ed., 1980, Crystal Growth, 2nd edition, Pergamon Press, Oxford, U.K. https://www.elsevier.com/books/crystal-growth/pamplin/978-0-08-025043-4.

real materials harbor defects on all length scales that are difficult to characterize and even more difficult to correct. A simple example is seen in single crystals pulled from a melt (e.g., by the Czochralski technique), where chemical gradients along the growth axis are commonplace. For quantum materials, such variations often have a large impact on electronic and magnetic properties; mastering them is necessary but has not yet been achieved. In-depth knowledge and control of the growth process would mitigate these problems and, furthermore, would provide yet another tuning parameter for controlling a material’s properties.
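The axial chemical gradient in melt-pulled crystals can be estimated with the classical normal-freezing (Scheil) relation, C_s = k C_0 (1 − f_s)^(k − 1), where C_0 is the initial solute concentration in the melt, f_s is the fraction solidified, and k is the equilibrium distribution coefficient. The relation assumes complete mixing in the melt, no diffusion in the solid, and a constant k; the value k = 0.1 below is an arbitrary illustration, not a property of any particular material. For k < 1 the growing crystal rejects solute into the melt, so the last-grown end of the crystal is richer in solute than the seed end.

```python
def scheil_concentration(c0: float, k: float, fraction_solid: float) -> float:
    """Solute concentration in the solid forming once fraction_solid of the
    melt has crystallized (normal freezing / Scheil relation)."""
    if not 0.0 <= fraction_solid < 1.0:
        raise ValueError("fraction_solid must be in [0, 1)")
    return k * c0 * (1.0 - fraction_solid) ** (k - 1.0)

if __name__ == "__main__":
    c0, k = 1.0, 0.1  # normalized melt concentration; illustrative k < 1
    for fs in (0.0, 0.25, 0.5, 0.75, 0.9):
        print(f"f_s = {fs:.2f}: C_s/C0 = {scheil_concentration(c0, k, fs) / c0:.3f}")
```

With k = 0.1 the solute level along the boule rises roughly eightfold between the seed end and the point where 90 percent of the melt has solidified, which illustrates why even simple melt growth produces substantial axial gradients.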

To accomplish these goals, a multifaceted and well-funded push to develop new intersections for crystal growth within the materials research (MR) community is needed. In this scenario, crystal growth would be treated as a research area in its own right, not strictly associated with specific classes of materials or topics. Two promising directions of research are (1) methods for rapid-throughput synthesis and rapid characterization and (2) synthesis methods under extreme conditions (e.g., applied pressure, magnetic fields, or electric fields). This research requires access to suites of materials analysis tools (e.g., neutron and X-ray scattering and microanalysis) and computational collaborations to facilitate progress, plus ways to distribute information broadly.

4.3 SIMULATION AND COMPUTATION TOOLS

The nature and benefits of computational capabilities in MR are extensive but vary dramatically, depending on the material class or application. For example, the computational tools useful to the well-developed semiconductor and aerospace industries are very different from those needed for new materials, where basic questions remain open and no elaborate databases exist for mining or for the application of artificial intelligence. However, it is clear that computational capabilities, at both large and small scales, will continue to advance large expanses of the MR landscape.

4.3.1 Integrated Computational Materials Engineering and Materials Genome Initiatives

Two initiatives that began during the past decade aimed to accelerate the timeline from development to deployment of a material by highlighting the benefits of experiment and computation working together and the need for computational materials design at all stages of the manufacturing process.

One initiative, the Integrated Computational Materials Engineering (ICME) approach, was detailed in a National Academies study of 2008.31 The ICME approach seeks to integrate materials models across different length scales (multiscale) and computational methodologies to capture the relationships between synthesis and processing, structure, properties, and performance. The initial successes of ICME were enabled by the existence of a wealth of data on specific materials systems that had been generated over prior decades of research.32

The second initiative began in 2011, when President Barack Obama launched the Materials Genome Initiative (MGI) with the intent “to discover, manufacture, and deploy advanced materials twice as fast, at a fraction of the cost.”33 Central to this vision was the equal weighting and integration of computational tools, digital data, and experimental tools, the latter including synthesis and processing as well as material characterization and property assessment. It was recognized that each area needed new tools and capabilities to advance the field and to explore the intersections among all three. The initiative recognized the importance of data and the need to develop databases and the tools to interrogate and visualize them. This need is particularly important to the success of the initiative, because extensive and accessible databases exist for only a few material systems. The integrated tools were intended to form one framework spanning the seven stages of the materials development continuum (discovery; development; property optimization; systems design and integration; certification; manufacturing; and deployment).34

Following the direction indicated by these two initiatives has led to some notable successes.35 For example, Ford Motor Company used the ICME framework to obtain a 15-25 percent decrease in the product development time of cast aluminum power train products. For cast aluminum components, subtle changes in the manufacturing process or component design can lead to engine durability issues and program delays, but simulating the effect of manufacturing history on the engine durability allowed Ford to avoid these costly delays.

___________________

31 National Research Council, 2008, Integrated Computational Materials Engineering: A Transformational Discipline for Improved Competitiveness and National Security, The National Academies Press, Washington, D.C., https://doi.org/10.17226/12199.

32 G.B. Olson, 2013, Genomic materials design: The ferrous frontier, Acta Materialia 61:771-781, http://dx.doi.org/10.1016/j.actamat.2012.10.045.

33 See Office of Science and Technology Policy, 2011, Materials Genome Initiative for Global Competitiveness, June, https://www.mgi.gov/sites/default/files/documents/materials_genome_initiativefinal.pdf.

34 Includes sustainability and recovery.

35 See B. Obama, 2016, “The First Five Years of the Materials Genome Initiative: Accomplishments and Technical Highlights,” https://www.mgi.gov/sites/default/files/documents/mgi-accomplishments-at-5-years-august-2016.pdf.

Computational methods integrated with material property databases have recently been used to develop two steels that were designed by QuesTek Innovations LLC, licensed to a U.S. steel producer, and then deployed in demanding applications. The first alloy was for the U.S. Air Force (USAF): Ferrium S53, an ultra-high-strength, corrosion-resistant steel that eliminates toxic cadmium plating. It is now flying as safety-critical landing gear on USAF A-10, T-38, C-5, and KC-135 aircraft and in numerous flight-critical SpaceX rocket components. The second alloy was for the U.S. Navy: Ferrium M54, an upgrade from legacy alloys that offers more than twice the lifetime of the incumbent steel while saving $3 million in overall program costs, and is now deployed on the Navy’s T-45 safety-critical hook shank component. As seen in Box 2.2 in Chapter 2, the time from development to deployment was reduced from 8.5 years for Ferrium S53 (deployment in 2008) to 4 years for Ferrium M54, which required only one design iteration (qualification in 2014). QuesTek has also used integrated methods to design a third steel, Ferrium C64, a best-in-class gear steel that allows increased power density, fuel efficiency, and lift in military helicopters. This steel has been patented and is now commercially available.

Integrated computation-experiment-data approaches have also been successful in developing new materials for batteries,36 and many companies, such as Boeing, use integrated approaches to discover and deploy advanced metals and other materials. An example of accelerated materials discovery through a combination of quantum mechanical calculations, synthesis, and experiments is the design and optimization of liquid crystal sensors.37 These sensors work through the selective displacement of liquid crystal molecules by analytes, which produces an optically detectable transition of the liquid crystal. Liquid crystals are generally sensitive to ultraviolet light, poisons and pollutants, and strain. Many liquid crystal sensors now exist, and they hold promise as inexpensive, portable, and wearable sensors for key applications (e.g., poisonous gas detection).

___________________

36 See, for example, G. Ceder, “The Ceder Group,” http://ceder.berkeley.edu/, and the many successes on that website.

37 H. Hu, Z. Lu, and W. Yang, 2007, Fitting molecular electrostatic potentials from quantum mechanical calculations, Journal of Chemical Theory and Computation 3(3):1004-1013.

One challenge for the MGI is that, over time, it has tended to become an interaction among materials modeling, computing, and data communications, leaving experiment behind. Vast databases of purely computational results have been created without experimental validation of stability or accuracy. Moreover, without experimental results, the materials cannot easily be tested or deployed for manufacture. Some of these issues are addressed further in Section 4.3.5 on databases. Another challenge is that it is difficult to maintain modeling continuity across all seven stages from discovery to deployment unless the research group is immersed in a development environment. The MGI goal would be furthered by increased university-industry interaction, which has widespread benefits, as explained elsewhere in this report.

4.3.2 Computational Materials Science and Engineering

Over the past decade, there have been significant improvements in modeling materials across multiple length scales: quantum mechanical, atomic, mesoscale (coarse-grained or phase field), and continuum. Statistical methods have improved as well. Models for specific material classes have also advanced. For magnetic materials, for example, this includes work with the Landau-Lifshitz-Gilbert equation38 and progress in understanding Gilbert damping.39 These advances have been driven by progress in the physical sciences, such as the examples just given. They have also been driven by the vast increase in computing power over the past decade and by the integration with experiment and data described in the previous section. Quantum-level modeling has perhaps seen the greatest advances and offers the greatest opportunity for future improvement, so it is summarized first.
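The Landau-Lifshitz-Gilbert equation describes how a magnetization direction precesses about an effective field while Gilbert damping, set by the parameter alpha, relaxes it toward that field. The following is a minimal macrospin sketch. It assumes a single uniform moment, a static applied field, dimensionless units with gyromagnetic ratio gamma = 1, the equivalent explicit Landau-Lifshitz form of the equation, and a fourth-order Runge-Kutta integrator. None of these choices comes from the text above; they are illustrative assumptions.

```python
import math

Vector = tuple[float, float, float]


def cross(a: Vector, b: Vector) -> Vector:
    """Cross product a x b."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def normalize(v: Vector) -> Vector:
    """Rescale v to unit length (the magnetization direction)."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def llg_rhs(m: Vector, h: Vector, alpha: float, gamma: float = 1.0) -> Vector:
    """dm/dt = -gamma/(1+alpha^2) * [m x h + alpha * m x (m x h)] (Landau-Lifshitz form)."""
    prefactor = -gamma / (1.0 + alpha * alpha)
    mxh = cross(m, h)
    mxmxh = cross(m, mxh)
    return tuple(prefactor * (p + alpha * d) for p, d in zip(mxh, mxmxh))


def integrate_macrospin(m0: Vector, h: Vector, alpha: float,
                        dt: float = 0.01, n_steps: int = 5000) -> Vector:
    """RK4 time stepping; |m| is renormalized after every step."""
    m = normalize(m0)
    for _ in range(n_steps):
        k1 = llg_rhs(m, h, alpha)
        k2 = llg_rhs(tuple(mi + 0.5 * dt * ki for mi, ki in zip(m, k1)), h, alpha)
        k3 = llg_rhs(tuple(mi + 0.5 * dt * ki for mi, ki in zip(m, k2)), h, alpha)
        k4 = llg_rhs(tuple(mi + dt * ki for mi, ki in zip(m, k3)), h, alpha)
        m = normalize(tuple(mi + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
                            for mi, a, b, c, d in zip(m, k1, k2, k3, k4)))
    return m
```

Consider a moment that starts nearly perpendicular to a field along z, as in `integrate_macrospin((1.0, 0.0, 0.1), (0.0, 0.0, 1.0), alpha=0.1)`. With damping it spirals in and ends close to +z. With `alpha=0.0` it only precesses, and its z component stays constant.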

In a significant shift, electronic structure software based on density functional theory (DFT) has become readily available in both commercial packages (CASTEP, VASP, WIEN2k) and open-source packages (Quantum ESPRESSO, ABINIT).40 These packages are well documented online, and some (e.g., VASP) have well-developed user interfaces. The material property calculations they enable are of high fidelity. They are used to predict structure-property relationships for many material types, discover new structures, aid the interpretation of experimental data, and populate databases.

___________________

38 For a recent review, see, for example, M. Lakshmanan, 2011, The fascinating world of the Landau-Lifshitz-Gilbert equation: An overview, Philosophical Transactions of the Royal Society A 369:1280.

39 L. Chen, S. Mankovsky, S. Wimmer, M.A.W. Schoen, H.S. Körner, M. Kronseder, D. Schuh, D. Bougeard, H. Ebert, D. Weiss, and C.H. Back, 2018, Emergence of anisotropic Gilbert damping in ultrathin Fe layers on GaAs(001), Nature Physics 14:490.

40 For the five programs, see the CASTEP website at https://www.castep.org, the Vienna Ab initio Simulation Package (VASP) website at https://www.vasp.at, the WIEN2k website at http://susi.theochem.tuwien.ac.at/, the Quantum Espresso website at https://www.quantum-espresso.org, and the ABINIT website at https://www.abinit.org, all accessed June 6, 2018.

41 B. Himmetoglu, A. Floris, S. de Gironcoli, and M. Cococcioni, 2014, Hubbard-corrected DFT energy functionals: The LDA+U description of correlated systems, International Journal of Quantum Chemistry 114:14.

When magnetic material properties are calculated with DFT, adding a “Hubbard U” term can give very accurate properties for d-electron materials, and there have been many developments in this area over the past decade.41 Modern DFT packages can handle full three-dimensional (noncollinear) spin dependence, not just spin up or down. Including relativistic effects and spin-orbit coupling is now a matter of setting parameters in the input file. DFT is challenged when there are multiple sources of magnetism. This is the case in f-electron materials, which often have unfilled d-electron orbitals as well. For these materials an additional parameter J is often added, and even then comparison with experiment is often needed. DFT is also challenged whenever many-body interactions (superconductivity, metal-insulator transitions, Kondo effects, complex oxides, etc.) cannot be described by single-particle states. The reason is that DFT is built on single-particle states and on functionals of the local density.
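To make the Hubbard correction concrete, consider one widely used simplified, rotationally invariant form, introduced by Dudarev and coworkers. It adds the penalty E_U = (U_eff/2) Σ_σ Tr[n^σ (1 - n^σ)] to the DFT energy, with U_eff = U - J. Here n^σ is the occupation matrix of the localized orbitals for spin σ. The sketch below evaluates that penalty from the eigenvalues of the occupation matrices. The choice of this particular DFT+U flavor, the example occupations, and the U_eff value are assumptions for illustration, not a description of any specific package.

```python
def hubbard_penalty(occupations_by_spin: dict[str, list[float]], u_eff: float) -> float:
    """Simplified (Dudarev-style) DFT+U energy correction.

    occupations_by_spin maps each spin channel to the eigenvalues of its
    localized-orbital occupation matrix; each eigenvalue must lie in [0, 1].
    Returns (u_eff / 2) * sum over spins and orbitals of lam * (1 - lam),
    which equals (u_eff / 2) * sum_sigma Tr[n (1 - n)].
    """
    total = 0.0
    for eigenvalues in occupations_by_spin.values():
        for lam in eigenvalues:
            if not 0.0 <= lam <= 1.0:
                raise ValueError(f"occupation {lam} outside [0, 1]")
            total += lam * (1.0 - lam)
    return 0.5 * u_eff * total


# Hypothetical d-shell examples (five orbitals per spin channel).
integer_d5 = {"up": [1.0] * 5, "down": [0.0] * 5}
fractional = {"up": [0.5] * 5, "down": [0.5] * 5}
```

Each eigenvalue contributes λ(1 - λ). The penalty is therefore zero for fully occupied or empty orbitals and largest at half occupation, which is how the term pushes correlated d states toward integer occupations. With U_eff = 4 (arbitrary energy units), `integer_d5` gives 0 and `fractional` gives 0.5 × 4 × 10 × 0.25 = 5.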

Many useful improvements of DFT have been developed in the past decade, including extensions of DFT to finite temperature, excited states, and time dependence. These extensions often combine many-body perturbation theory with standard methods (as in the GW approximation) or go beyond them,42 and they add considerable computational overhead. Active work also includes improving the exchange-correlation functionals used in DFT codes. It also includes improving the pseudopotentials used to represent the inner cores of atoms in programs such as Quantum ESPRESSO and VASP.
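As a baseline for these functional improvements, consider the simplest exchange functional: the local density approximation (LDA) to exchange, also called Dirac exchange. For a uniform electron gas of density n, its energy per electron is ε_x(n) = -(3/4)(3/π)^(1/3) n^(1/3) in Hartree atomic units. The sketch below evaluates that formula and integrates it over a density sampled on a uniform grid. The grid representation is an illustrative assumption. Production functionals add correlation and, at higher levels, density gradients or exact exchange.

```python
import math

# Prefactor of the Dirac/LDA exchange energy per electron, Hartree atomic units.
EX_PREFACTOR = -0.75 * (3.0 / math.pi) ** (1.0 / 3.0)


def lda_exchange_per_electron(density: float) -> float:
    """eps_x(n) = -(3/4) (3/pi)^(1/3) n^(1/3), in hartree."""
    if density < 0.0:
        raise ValueError("density must be non-negative")
    return EX_PREFACTOR * density ** (1.0 / 3.0)


def lda_exchange_energy(densities: list[float], voxel_volume: float) -> float:
    """E_x = sum over grid points of n * eps_x(n) * dV (simple quadrature)."""
    return sum(n * lda_exchange_per_electron(n) for n in densities) * voxel_volume


def wigner_seitz_radius(density: float) -> float:
    """r_s defined by (4/3) pi r_s^3 = 1 / n, in bohr."""
    return (3.0 / (4.0 * math.pi * density)) ** (1.0 / 3.0)
```

For a uniform gas, these definitions combine to ε_x = -0.4582/r_s hartree. At a density where r_s = 1 bohr, the function therefore returns about -0.458 hartree per electron.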

To model the many-body physics of complex materials such as the rare earths, dynamical mean field theory (DMFT) goes beyond DFT by mapping the lattice problem onto a local impurity model. Although this impurity model is still a many-body problem, well-established methods exist for solving it. The main approximation of the method is that the lattice self-energy is independent of momentum, an approximation that becomes exact in the limit of infinite dimensions. This methodology, on its own and combined with DFT, has had some notable successes. These include the phase diagram of plutonium43 and the superfluid-Mott insulator transition in the Bose-Hubbard model.44 Combining DMFT with time-dependent DFT (TDDFT) could make it possible to calculate properties that depend on the time evolution of electronic states. Examples are bound states of multiple electrons and holes, such as excitons and trions. TDDFT has the advantage of working with a function of a single time argument, the time-dependent charge density.
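The momentum-independent self-energy approximation can be stated compactly through the standard DMFT self-consistency loop. It is written here for a single band in Matsubara frequencies ω_n, with lattice dispersion ε_k, chemical potential μ, local self-energy Σ, and Weiss field 𝒢_0. This single-band form is a textbook simplification assumed for illustration; realistic DFT+DMFT calculations work with orbital matrices.

$$
G_{\mathrm{loc}}(i\omega_n) = \frac{1}{N_{\mathbf{k}}} \sum_{\mathbf{k}} \frac{1}{i\omega_n + \mu - \varepsilon_{\mathbf{k}} - \Sigma(i\omega_n)}
$$

$$
\mathcal{G}_0^{-1}(i\omega_n) = G_{\mathrm{loc}}^{-1}(i\omega_n) + \Sigma(i\omega_n),
\qquad
\Sigma(i\omega_n) \leftarrow \mathcal{G}_0^{-1}(i\omega_n) - G_{\mathrm{imp}}^{-1}(i\omega_n)
$$

The loop runs as follows:

1. Start from a guess for Σ and build G_loc.
2. Extract the Weiss field 𝒢_0.
3. Solve the impurity model defined by 𝒢_0 and the local interaction to obtain G_imp.
4. Update Σ from G_imp and 𝒢_0, then repeat until G_imp = G_loc.

Σ carries no k label; that is exactly the approximation described above. The impurity solver in step 3 is where the remaining many-body problem is handled.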

___________________

42 S.X. Tao, X. Cao, and P.A. Bobbert, 2017, Accurate and efficient bandgap predictions of metal halide perovskites using the DFT-1/2 method: GW accuracy with DFT expense, Scientific Reports 7:14386.

43 N. Lanatà, Y. Yao, C.-Z. Wang, K.-M. Ho, and G. Kotliar, 2015, Phase diagram and electronic structure of praseodymium and plutonium, Physical Review X 5:011008.

44 P. Anders, E. Gull, L. Pollet, M. Troyer, and P. Werner, 2010, Dynamical mean field solution of the Bose-Hubbard model, Physical Review Letters 105:096402.

Quantum Monte Carlo (QMC) is another technique for studying materials with many-body effects. It is generally accurate and reliable, and it is trivially parallelizable, which makes it well suited to high-performance computing (HPC). It is also computationally expensive and complex to apply, which makes it challenging to use. Among the different flavors of QMC, diffusion Monte Carlo (DMC) is the most popular. It is a stochastic method that gives direct access to the ground state, and sometimes to excited states, of a many-body system. In principle, DMC is an exact method that maps the Schrödinger equation, written in imaginary time, onto a diffusion equation. For fermions such as electrons, however, approximations are needed to keep the calculation computationally feasible. The most common is the fixed-node approximation. In this approach, the nodes of the wavefunction are kept fixed at those of the original trial wavefunction during the search for the ground-state wavefunction.
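The diffusion-plus-branching idea behind DMC can be seen in a toy problem. The sketch below applies DMC, in its simplest form without importance sampling, to a one-dimensional harmonic oscillator. It uses units with ħ = m = ω = 1, in which the exact ground-state energy is 0.5. This ground state has no nodes, so no fixed-node approximation is needed. The model problem, the population-control rule, and all parameter values are assumptions chosen for illustration. At each step, walkers take Gaussian diffusion steps of variance Δτ. They are then replicated or removed according to the weight exp[-Δτ(V - E_ref)]. After equilibration, the walker average of V estimates the ground-state energy.

```python
import math
import random


def harmonic_potential(x: float) -> float:
    """V(x) = x^2 / 2 in units where hbar = m = omega = 1."""
    return 0.5 * x * x


def dmc_ground_state_energy(n_target: int = 400, n_steps: int = 1500,
                            n_equil: int = 500, dt: float = 0.01,
                            alpha: float = 10.0, seed: int = 1) -> float:
    """Primitive diffusion Monte Carlo (no importance sampling)."""
    rng = random.Random(seed)
    walkers = [rng.uniform(-1.0, 1.0) for _ in range(n_target)]
    e_ref = sum(harmonic_potential(x) for x in walkers) / len(walkers)
    sigma = math.sqrt(dt)  # kinetic term -(1/2) d^2/dx^2 gives diffusion variance dt
    samples = []
    for step in range(n_steps):
        new_walkers = []
        for x_old in walkers:
            x_new = x_old + rng.gauss(0.0, sigma)
            # Branching weight with the potential averaged over the step.
            v_avg = 0.5 * (harmonic_potential(x_old) + harmonic_potential(x_new))
            weight = math.exp(-dt * (v_avg - e_ref))
            copies = min(int(weight + rng.random()), 3)  # stochastic rounding, capped
            new_walkers.extend([x_new] * copies)
        if not new_walkers:
            raise RuntimeError("walker population died out")
        walkers = new_walkers
        mean_v = sum(harmonic_potential(x) for x in walkers) / len(walkers)
        # Population control: nudge E_ref so the walker count stays near n_target.
        e_ref = mean_v + alpha * (1.0 - len(walkers) / n_target)
        if step >= n_equil:
            samples.append(mean_v)
    return sum(samples) / len(samples)
```

The estimator works because the walkers end up distributed according to the ground-state wavefunction ψ₀ itself. Integrating Hψ₀ = E₀ψ₀ over all space makes the kinetic term vanish, leaving ∫Vψ₀ dx = E₀∫ψ₀ dx. With the default parameters, the estimate should land close to 0.5, with statistical noise and small time-step and population-control biases.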

For molecular solids, quantum chemistry methods tend to be more accurate. These include configuration interaction, coupled cluster, and multireference methods. The price of this accuracy is that they are computationally formidable and not well suited to high-performance computing.
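One way to see why these methods are computationally formidable is through their formal cost scaling with system size N, for example the number of basis functions. The sketch uses commonly quoted textbook exponents, which are assumptions here rather than figures from this report. These are roughly N^3 for conventional DFT with local functionals, N^6 for coupled cluster with single and double excitations (CCSD), and N^7 for CCSD with perturbative triples, CCSD(T). Full configuration interaction grows exponentially and is omitted.

```python
# Commonly quoted formal scaling exponents (illustrative assumptions).
FORMAL_SCALING = {
    "DFT (local functional)": 3,
    "CCSD": 6,
    "CCSD(T)": 7,
}


def cost_ratio(method: str, size_factor: float) -> float:
    """Factor by which nominal cost grows when system size is multiplied by size_factor."""
    return size_factor ** FORMAL_SCALING[method]


if __name__ == "__main__":
    for name in FORMAL_SCALING:
        print(f"{name}: doubling N multiplies cost by {cost_ratio(name, 2):.0f}")
```

Under these exponents, doubling the system size multiplies the nominal cost of CCSD(T) by 2^7 = 128, compared with 2^3 = 8 for DFT.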

Moving from the subatomic scale of the quantum methods to the atomic scale, molecular dynamics is a technique widely used in chemistry for molecules. It is also useful for materials, especially their surfaces. The method uses force fields generated by DFT or by more approximate methods based on tables from vibrational analysis of bonds. Molecular dynamics can follow the time evolution of atomic motion at finite temperature, though only for very short periods. A notable development of the past decade is the availability of highly modular, massively parallel open-source software for large-scale atomic simulations. Such software efficiently computes dynamical properties of materials, including thermal conductivity, mechanical deformation, irradiation effects, and many others. One example of a molecular dynamics package is LAMMPS (Large-Scale Atomic/Molecular Massively Parallel Simulator),45 developed by Sandia National Laboratories. Such atomic-scale simulations have been further enabled by the availability of multiphysics (multiple interacting physical effects) reactive force fields or potentials that provide frameworks for studying heterogeneous material systems. The cataloging of interatomic potentials, through efforts at the National Institute of Standards and Technology (NIST) and the OpenKIM project,46 is providing important ways to track the performance and suitability of these empirical methods. Still needed are simple atomistic potentials that combine DFT-level accuracy with enough computational efficiency to reach realistic (laboratory-scale) length and time scales or to support high-throughput calculations. Machine learning has helped develop such potentials, as described in Section 4.3.3.
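At its core, an MD code repeatedly evaluates forces from a potential and advances Newton's equations with a time-reversible integrator. The sketch below shows that loop for the smallest possible case: two atoms on a line interacting through a Lennard-Jones pair potential in reduced units (ε = σ = mass = 1), integrated with velocity Verlet. The potential, integrator, and parameters are illustrative assumptions, not a description of how LAMMPS is implemented internally.

```python
def lj_pair(r: float, epsilon: float = 1.0, sigma: float = 1.0) -> tuple[float, float]:
    """Return (pair force along the separation, potential energy) for Lennard-Jones."""
    sr6 = (sigma / r) ** 6
    energy = 4.0 * epsilon * (sr6 * sr6 - sr6)
    force = 24.0 * epsilon * (2.0 * sr6 * sr6 - sr6) / r  # equals -dU/dr
    return force, energy


def simulate_lj_dimer(r0: float = 1.3, dt: float = 0.001,
                      n_steps: int = 5000) -> tuple[list[float], list[float]]:
    """Velocity Verlet for two unit-mass atoms on a line, starting at rest."""
    x = [0.0, r0]
    v = [0.0, 0.0]
    f, _ = lj_pair(x[1] - x[0])
    forces = [-f, f]  # a positive (repulsive) f pushes the atom at index 1 toward +x
    energies, separations = [], []
    for _ in range(n_steps):
        for i in range(2):
            v[i] += 0.5 * dt * forces[i]  # first half kick
            x[i] += dt * v[i]             # drift
        f, potential = lj_pair(x[1] - x[0])
        forces = [-f, f]
        for i in range(2):
            v[i] += 0.5 * dt * forces[i]  # second half kick with the new forces
        kinetic = 0.5 * (v[0] ** 2 + v[1] ** 2)
        energies.append(kinetic + potential)
        separations.append(x[1] - x[0])
    return energies, separations
```

Starting at r = 1.3σ, beyond the potential minimum at 2^(1/6)σ, the pair vibrates as a bound dimer. Checking that the total energy in `energies` stays nearly constant is a standard sanity test for the chosen time step.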

___________________

45 See Sandia National Laboratories, “LAMMPS Molecular Dynamics Simulator,” http://Lammps.sandia.gov, accessed January 5, 2018.

46 See NIST, “Interatomic Potentials Repository,” https://www.ctcms.nist.gov/potentials/, and OpenKIM, “Knowledgebase of Interatomic Models,” https://openkim.org, both accessed January 5, 2018.

47 CALPHAD is a computational methodology. See, for example, Thermo-Calc Software, “Thermo-Calc,” http://www.thermocalc.com/products-services/databases/the-calphad-methodology/, or a free development at S. Fries, “Open Calphad,” http://www.opencalphad.com/, both accessed January 5, 2018.

Mesoscale modeling has also advanced significantly over the past decade. One example is the CALPHAD (Computer Coupling of Phase Diagrams and Thermochemistry) methodology,47 which, as implemented in software, enables the prediction of phase diagrams and thermodynamic behavior. A second is phase field modeling,48 which simulates materials growth and mesoscale structure-property relationships. As a third mesoscale example, coarse-grained simulation methods have become much more usable for modeling molecular materials such as polymers.
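CALPHAD assessments describe the Gibbs energy of each phase with parameterized models fitted to experimental and computed data. Phase diagrams then follow from minimizing the total Gibbs energy. A common building block for solution phases is the Redlich-Kister expansion of the excess Gibbs energy. Keeping only its first term, L0, gives the regular-solution model sketched below for a binary A-B solution. The single-term model and the value of L0 in the usage example are hypothetical; real assessments use many temperature-dependent terms per phase.

```python
import math

R = 8.314462618  # gas constant, J/(mol K)


def gibbs_mixing(x_b: float, temperature: float, l0: float) -> float:
    """Molar Gibbs energy of mixing (J/mol): ideal term plus one Redlich-Kister term."""
    x_a = 1.0 - x_b
    ideal = R * temperature * (x_a * math.log(x_a) + x_b * math.log(x_b))
    excess = x_a * x_b * l0
    return ideal + excess


def curvature(x_b: float, temperature: float, l0: float) -> float:
    """Analytic second derivative of gibbs_mixing with respect to x_b."""
    return R * temperature / (x_b * (1.0 - x_b)) - 2.0 * l0


def critical_temperature(l0: float) -> float:
    """Top of the symmetric miscibility gap for this model (requires l0 > 0)."""
    return l0 / (2.0 * R)


def has_miscibility_gap(temperature: float, l0: float) -> bool:
    # The regular-solution curve is least convex at x_b = 0.5.
    return curvature(0.5, temperature, l0) < 0.0
```

With a hypothetical L0 = 20,000 J/mol, the critical temperature is about 1203 K. At 1000 K, the Gibbs energy of mixing has negative curvature at equal composition, so the solution separates into two phases. At 1400 K it does not.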

Over the past decade, computational tools have increasingly been translated to industrial application; the boxes in Chapter 2 provide examples in alloy development and industrial processing. Continuum state-variable process models are used in manufacturing for casting, forging, rolling, vapor deposition, machining, and so on. Further, CALPHAD and the DICTRA diffusion code (the diffusion module of Thermo-Calc) are ubiquitous and heavily supported by industry. The transition of other tools, such as phase field and kinetic Monte Carlo methods, is in progress. Progress has also been made in physics-based multiscale models for predicting mechanical behavior, but these have yet to be adopted by industry.
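Diffusion codes such as DICTRA solve coupled, multicomponent diffusion problems with moving phase boundaries. The much simpler sketch below is not DICTRA's algorithm. It shows only the underlying continuum idea: Fick's second law, ∂c/∂t = D ∂²c/∂x², for a single species with constant diffusivity D. The equation is discretized with explicit finite differences on a 1D grid with zero-flux boundaries. The constant D, the grid, and the initial step profile are assumptions for illustration. The explicit scheme is stable only when D Δt/Δx² ≤ 1/2, which the function checks.

```python
def diffuse_1d(profile: list[float], d_coeff: float, dx: float,
               dt: float, n_steps: int) -> list[float]:
    """Explicit (FTCS) solution of dc/dt = D d^2c/dx^2 with zero-flux boundaries."""
    r = d_coeff * dt / dx ** 2
    if r > 0.5:
        raise ValueError(f"unstable explicit step: D*dt/dx^2 = {r} > 0.5")
    c = list(profile)
    n = len(c)
    for _ in range(n_steps):
        new = c[:]
        for i in range(n):
            # Mirror (ghost) values at the ends impose zero flux, conserving mass.
            left = c[i - 1] if i > 0 else c[i]
            right = c[i + 1] if i < n - 1 else c[i]
            new[i] = c[i] + r * (left - 2.0 * c[i] + right)
        c = new
    return c
```

Starting from a sharp step such as `[1.0] * 10 + [0.0] * 10`, the profile smooths out and eventually becomes uniform at 0.5, while the total amount of solute stays constant.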

Intense effort has gone into developing and improving single-scale and multiscale methods. These may be concurrent, hierarchical, or hybrid, and they may be solved in parallel, sequentially, or in a coupled manner. These methods have enhanced both fundamental science and applied engineering. On the science side, examples include studies of the mechanics of materials and of the physics and chemistry of materials growth; on the engineering side, the manufacture of material parts in industry. How fully these methods can exploit improvements in computer architecture varies with method type and with the length and time scales involved. Modeling of time-dependent material behavior, such as creep, has made great strides over the past decade. As with classical molecular dynamics, however, computational demands on time and memory rise rapidly with system size. Consequently, redesigning software or combining methods (e.g., molecular dynamics and Monte Carlo) is important.
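Hybrid MD/MC schemes typically interleave dynamics with Monte Carlo moves that are accepted or rejected by the Metropolis criterion. This criterion samples configurations with Boltzmann probability without following the true dynamics. The sketch below applies it to a single coordinate in a hypothetical harmonic well, E(x) = kx²/2, at temperature T in reduced units with k_B = 1. The model, step size, and sample count are assumptions. For this model, equipartition gives ⟨x²⟩ = T/k, which the sampled average should approach.

```python
import math
import random


def metropolis_accept(delta_e: float, temperature: float, rng: random.Random) -> bool:
    """Always accept downhill moves; accept uphill moves with probability exp(-dE/T)."""
    if delta_e <= 0.0:
        return True
    return rng.random() < math.exp(-delta_e / temperature)


def sample_harmonic(k: float = 1.0, temperature: float = 1.0, step: float = 1.0,
                    n_steps: int = 200_000, seed: int = 7) -> tuple[float, float]:
    """Return (<x^2> estimate, acceptance ratio) for E(x) = k x^2 / 2."""
    rng = random.Random(seed)
    x = 0.0
    energy = 0.5 * k * x * x
    total_x2 = 0.0
    accepted = 0
    for _ in range(n_steps):
        x_trial = x + rng.uniform(-step, step)  # symmetric trial move
        e_trial = 0.5 * k * x_trial * x_trial
        if metropolis_accept(e_trial - energy, temperature, rng):
            x, energy = x_trial, e_trial
            accepted += 1
        total_x2 += x * x  # a rejected move counts the current state again
    return total_x2 / n_steps, accepted / n_steps
```

Counting the current state again after a rejection is essential: dropping rejected steps would bias the averages away from the Boltzmann distribution.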

Other advances have come from the co-design of experimental and computational infrastructure, which has come to the fore in the past decade. One example is progress in image recognition for microstructure identification. Another is the pairing of parallel advances in the brightness and power of X-ray and neutron scattering sources with computational materials science. This pairing promises to advance scattering science by enabling deeper interrogation of the data from scattering experiments.

___________________

48 L.Q. Chen, 2002, Phase-field models for microstructure evolution, Annual Review of Materials Research 32:113-140.

A grand challenge in computational materials science is to design the electronic structure of materials directly from first principles. That is, the goal is to go from desired physical and mechanical properties to structure and atomic constituents, rather than the usual other way around. Technical roadblocks in the capabilities of computational methods are being addressed in federally supported research centers, as well as in selected academic centers and private companies. Much progress toward this goal is expected in the coming decade.

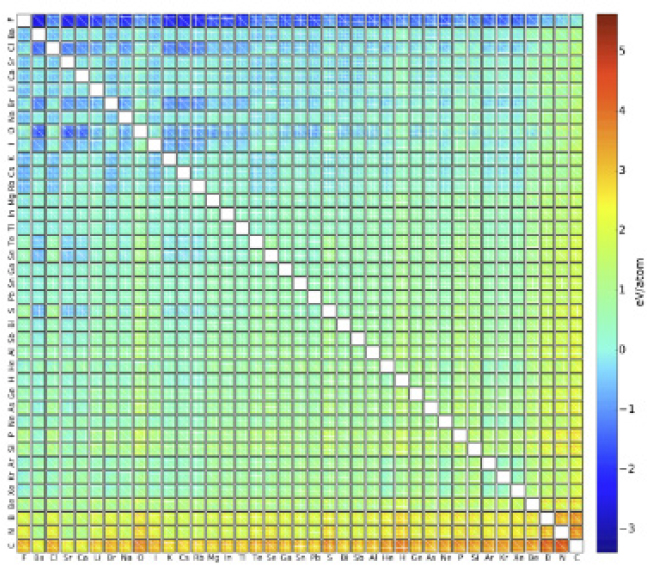

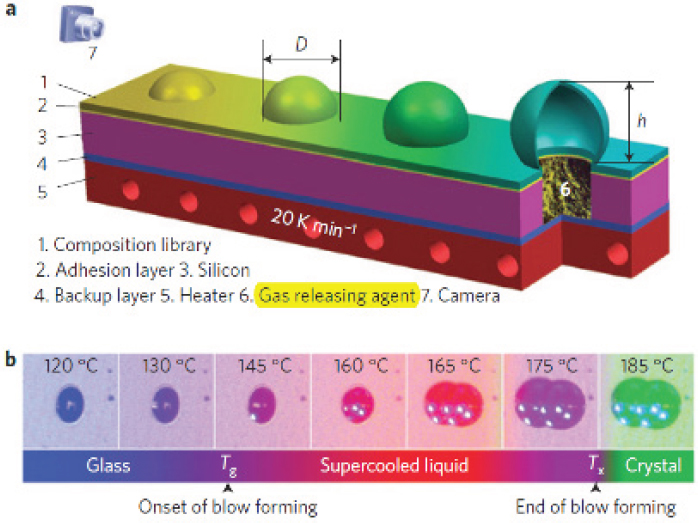

4.3.3 Machine Learning for Materials Discovery

Over the past decade, both supervised and unsupervised machine learning algorithms have been used to calculate materials properties, explore compositional space, identify new structures, discover quantum phases, and identify phases and phase transitions. These models usually must first be trained. Once set up, they can calculate a wide range of properties with high accuracy, at large scale, and at speeds orders of magnitude faster than conventional computational methods. Supervised machine learning algorithms that have been applied to materials include random forests, kernel ridge regression, and multilayer perceptron artificial neural networks. These methods learn a mapping from a set of input features, for example atomic positions, to output values such as material properties and performance.
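Kernel ridge regression, one of the supervised methods named above, can be written in a few lines. Given a kernel k and a regularization strength λ, training solves (K + λI)w = y for the weights w, and a prediction is ŷ(x) = Σᵢ wᵢ k(x, xᵢ). The self-contained sketch below uses a Gaussian (RBF) kernel and a small Gaussian-elimination solver. The kernel, the hyperparameters, and the toy one-feature data set are all assumptions; the data merely stand in for real materials descriptors and target properties. Libraries such as scikit-learn provide production implementations.

```python
import math


def rbf_kernel(a: list[float], b: list[float], length_scale: float) -> float:
    """Gaussian kernel exp(-|a - b|^2 / (2 l^2)) between two feature vectors."""
    sq_dist = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-sq_dist / (2.0 * length_scale ** 2))


def solve_linear(matrix: list[list[float]], rhs: list[float]) -> list[float]:
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        residual = aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = residual / aug[r][r]
    return x


class KernelRidge:
    """Minimal kernel ridge regression: fit solves (K + lambda I) w = y."""

    def __init__(self, length_scale: float = 1.0, regularization: float = 1e-6):
        self.length_scale = length_scale
        self.regularization = regularization
        self.train_x: list[list[float]] = []
        self.weights: list[float] = []

    def fit(self, features: list[list[float]], targets: list[float]) -> "KernelRidge":
        self.train_x = [list(f) for f in features]
        n = len(self.train_x)
        gram = [[rbf_kernel(self.train_x[i], self.train_x[j], self.length_scale)
                 + (self.regularization if i == j else 0.0)
                 for j in range(n)] for i in range(n)]
        self.weights = solve_linear(gram, list(targets))
        return self

    def predict(self, features: list[list[float]]) -> list[float]:
        return [sum(w * rbf_kernel(f, xi, self.length_scale)
                    for w, xi in zip(self.weights, self.train_x))
                for f in features]
```

As a usage example, fitting seven evenly spaced samples of a smooth toy "property" such as sin(x) on [0, 3] and predicting at an unseen interior point recovers the underlying function closely. In real applications, choosing descriptors and tuning the length scale and regularization (for example, by cross-validation) matter far more than the solver.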