6

Integrating Social and Behavioral Sciences (SBS) Research to Enhance Security in Cyberspace

Cyber-related developments have both dramatically altered the nature of security threats and expanded the landscape of potential tools for countering those threats. Experts from multiple disciplines, including electrical engineering, software engineering, computer science, and computer engineering, have a laser focus on cybersecurity, but that focus has been primarily on technical or data challenges, such as identification and prevention of malware, prevention of denial-of-service attacks, self-fixing code, unauthorized data breaches, tools for the cyber analyst, and privacy. Indeed, cybersecurity is often characterized as the set of techniques used to protect the integrity of networks, programs, and data from attack, damage, or unauthorized access.1 These techniques have undisputed value, but they address only technological challenges, not the human behaviors and motivations that shape those challenges.

The tools of cybersecurity have obvious relevance for national security. Intelligence analysts, however, seek to understand a different but related set of critical problems—those that involve cyber-mediated communication (communication that takes place through computer networks). To understand this phenomenon, it is necessary to integrate insights about constantly evolving technology with understanding of fundamentally human phenomena. The emerging field of social cybersecurity science has developed to

___________________

1 For examples, see https://searchsecurity.techtarget.com/definition/cybersecurity [December 2018] and https://www.paloaltonetworks.com/cyberpedia/what-is-cyber-security [December 2018].

fill this need.2 Researchers in this field build on foundational work in the social and behavioral sciences (SBS) to characterize cyber-mediated changes in individual, group, societal, and political behaviors and outcomes, as well as to support the building of the cyber infrastructure needed to guard against cyber-mediated threats. This chapter describes this emerging discipline, explores the opportunities it offers for the Intelligence Community (IC), illustrates its relevance to intelligence analysis with an example, and describes research needed in the coming decade to fully exploit the field’s applications to the work of intelligence analysis.

WHAT IS SOCIAL CYBERSECURITY SCIENCE?

The field of social cybersecurity developed to meet a national need. It was developed by researchers with backgrounds in numerous fields to meet two primary objectives:

- characterize, understand, and forecast cyber-mediated changes in human behavior and in social, cultural, and political outcomes; and

- build a social cyber infrastructure that will allow the essential character of a society to persist in a cyber-mediated information environment that is characterized by changing conditions, actual or imminent social cyberthreats, and cyber-mediated threats.

Scientists in this field seek to develop the technology and theory needed to assess, predict, and mitigate instances of individual influence and community manipulation in which either humans or bots attempt to alter or control the cyber-mediated information environment (Carley et al., 2018). While researchers in the social cybersecurity area come from a large number of disciplines, many identify themselves as computational social scientists. The field is rapidly expanding to meet a growing need; the number of academic papers published in this area has risen exponentially in the past 10 years (Carley et al., 2018). The number of researchers in this area is also

___________________

2 The term “social cybersecurity” is also sometimes used to refer to cyber-mediated security threats themselves, with emphasis on the human, as opposed to the technological, aspects of those threats. Examples of such threats are recruitment of members of covert groups and their training in social media, the spread of fake news and disinformation, attacks on democracy through manipulation of how citizens receive news, the fomenting of crises by creating a perception of the rampant spread of disease or state instability, phishing and spear phishing attacks (i.e., attempts to obtain sensitive or protected information online by posing as a trustworthy entity), recruitment of individuals to act as insider threats (see Chapter 5) through social media, and online brand manipulation and rumors designed to destroy corporations.

growing because of widespread concern about the global consequences of such social cybersecurity attacks as disinformation campaigns, social media manipulation, and phishing to develop insider threats. Many researchers came to this area of study independently, but they are quickly coalescing as a formal discipline through participation in emerging groups, such as the social cybersecurity working group,3 and domestic and international conferences, such as the International Conference on Social Computing, Behavioral-Cultural Modeling, and Prediction and Behavior Representation in Modeling and Simulation.4

Experts in cybersecurity focus on attacks made on and through the cyber infrastructure that are intended to interfere with technology, steal or destroy information, or steal money or identities (Reveron, 2012). While cybersecurity experts do draw on social science research (see Box 6-1), social cybersecurity researchers have a different approach: they focus on activities aimed at influencing or manipulating individuals, groups, or communities, particularly activities that have large consequences for social groups, organizations, and countries. The solutions to some problems, such as denial-of-service attacks, malware distribution, and insider threats, require both types of expertise, but the emphasis in the two fields is quite different. What links researchers in social cybersecurity is that they

- take the sociopolitical context of cyber activity into account both methodologically and empirically;

- integrate theory and research on influence, persuasion, and manipulation with study of human behavior in the cyber-mediated environment; and

- focus on identifying operationally useful applications of their research.

The boundaries between cybersecurity and social cybersecurity are not altogether sharp, but Table 6-1 lists some key differences between the two. Because cybersecurity focuses primarily on technology, for example, a cyberbreach conducted to steal or compromise data would be in that realm (Carley et al., 2018). In contrast, the manipulation of groups to provide funding for covert actors or extremist groups (Benigni et al., 2017b), sway opinion to win elections (Allcott and Gentzkow, 2017), artificially boost the perceived popularity of actors (Woolley, 2016), or build groups so as

___________________

3 Available: www.social-cybersecurity.org [February 2019].

4 See http://sbp-brims.org/2019/about [January 2019].

TABLE 6-1 Key Differences between Cybersecurity and Social Cybersecurity

| Characteristic | Cybersecurity | Social Cybersecurity |

|---|---|---|

| Core Disciplines | Electrical engineering, software engineering, computer science, computer engineering | Computational social science, societal computing, data science, policy studies |

| Illustrative Problems | Encryption, malware detection, denial-of-service attack protection | Spread of disinformation, spam, altering who appears influential, creating echo chambers |

| Core Methods | Cryptography, software engineering, computer forensics, biometrics | Network science/social networks, language technologies, social media analytics |

| Illustrative Level of Data | Packets | Social media posts |

| Focus on the Issue of Insider Threat | Encryption to prevent ease of reading, software to prevent or detect illicit data sharing, firewalls | Social engineering to seduce insiders to share information, information leakage in social media |

| Focus on the Issue of Spreading Malware via Kitten Images on Twitter | How malware is embedded and detected | Use of bots to promote message sharing, what groups are at risk to download |

| Focus on the Issue of Denial-of-Service Attacks | Technology to detect, enable, or prevent denial-of-service attacks | Social media and dark web identification of hackers who perpetrate denial-of-service attacks; analysis of how these hackers are trained |

| Illustrative Tools | SysInternals, Windows GodMode, Microsoft EMET, Secure@Source, Q-Radar, ArcSight | ORA-PRO, Maltego, TalkWalker, Scraawl, Pulse, TweetTracker, BlogTrackers |

| National Infrastructure Support | United States Computer Emergency Readiness Team (US-CERT) | Nothing comparable—emergent self-management by social media providers |

| Illustrative Central Conferences | RSA, Black Hat, DEFCON, InfoSec World, International Conference on Cybersecurity | World Wide Web, SBP-BRiMS, ASONAM, Social Com, Web and Social Media |

to have an audience for recruitment (Benigni et al., 2019) would all best be addressed by social cybersecurity.

Drawing on Other Disciplines

SBS research plays a role in both cybersecurity and social cybersecurity; examples include research on deception and motivations for attacks at the individual and state levels (discussed below) and research on teams (see Chapter 7). Both cybersecurity and social cybersecurity are applied fields in which new technologies are developed and tested. The field of social cybersecurity does not simply supplant the important work of SBS research. Rather, researchers in the field build on some existing work and extend other work to generate new knowledge and in some cases develop new theory and methods that arise from the transdisciplinary approach for studying the cyber environment. Social cybersecurity is a computational social science, one of a growing number of social science fields that are using digital data and developing computational tools and models (Mann, 2016). Computational social science is not the application of computer science techniques to social science problems and data (Wallach, 2018); it is the use of social science theories to drive the development of new computational techniques, combined with further development of those theories using computational techniques for data collection, analysis, and simulation.

In the case of social cybersecurity science, computer scientists and engineers on the one hand and social scientists and policy analysts on the other have not always recognized the implications of each other’s perspectives for their own research. For example, computer scientists’ attempts to identify disinformation usually begin with fact checking. However, most disinformation campaigns rely less on blatant falsehood than on other strategies, such as illogic, satire, facts out of context, misuse of statistics, dismissal of topics, intimidation, appeals to ethnic bias, and simple distraction, all topics of SBS research (Babcock et al., 2018). Similarly, when SBS researchers seek to invent or reinvent computer science techniques, the results typically do not scale, are difficult to maintain, and lack generalizability. For example, affect control theory (a valuable computational model of human emotions based on social psychology) cannot be scaled to handle large social groups and populations. Computational social science, in contrast, requires deep engagement in and integration of knowledge, theories, and methods from both computer science and social science. Social cybersecurity science is often viewed as going beyond the interdisciplinary approach of integrating the methods and knowledge of diverse disciplines, having become a truly transdisciplinary science in the sense that it is creating new knowledge, theories, and methods. The objective of social cyber experts is to account for the peculiarities of the cyber environment and the specific opportunities for exploitation available in the communication and entertainment technology used by actors engaged, explicitly or implicitly, in information warfare or marketing.

As the field has matured, “social cybersecurity” has become the recognized term for this work, but the approach has been associated with other terms, including “social cyberforensics,” “social cyberattack,” “social media analytics,” “cyber-physical-social based security,” “social cyberdefense,” “computational propaganda,” and “social media information warfare,” and a variety of terms are used for key concepts in the field. Table 6-2, although not comprehensive, indicates this variety.

One constant for researchers in social cybersecurity is the application of network science and social network analysis (see Chapter 5), often in combination with other methods. The field also builds on other computational social science methods, including those used in data science, visual analytics, machine learning, text mining, natural language processing, social media analytics, and spatiotemporal data mining. Key methods include detection of change in networks, assessment and forecasting of diffusion, study of belief formation, influence assessment, identification of network elites, group identification, analysis of mergers and breakups, cyberforensics, actor activity prediction, and topic analysis. As evidence mounts that social media manipulation involves manipulation of both social and knowledge networks, researchers in this area increasingly combine social network analysis and narrative methods (see Chapter 5).

Another constant is reliance on social media data. Social cybersecurity experts are particularly concerned with social influence and group manipulation, the emergence of norms within and between online groups, and the formation or destruction of groups that are either receptive to or proponents of particular ideas and willing to engage in particular actions. Thus, key areas of study include models and methods associated with dynamically evolving data, patterns of life, information and belief diffusion, social influence, narrative construction and manipulation, group inoculation, and group resilience. Increasingly, research in the field is concerned with cultural variations, which often manifest as geographically specific enablers, constraints, and variation. Researchers seek to understand differences across groups by exploring variations in how people in different parts of the world generate, consume, and are affected by social media. They also explore how geospatial constraints, such as the location of ports, the existence of water features, the characteristics of landscape, and the types of natural disasters to which an area is prone may influence how information spreads in cyberspace, and why. Research areas include methods of psychological and social manipulation, cognitive biases in information handling, social biases in accessing information, trust building, and disinformation strategies.

TABLE 6-2 Intersections between Social Cybersecurity and Other Disciplines

| Discipline | Key Terms | Key Methods Other Than Network Science/Social Networks | Key Sources of Data Other Than Social Media | Illustrative Question |

|---|---|---|---|---|

| Sociology | Influence in social media, online influence | Language technology | Demographics | Do online groups and group processes resemble those offline? |

| Forensics | Social cyberforensics | Forensics | Website scraping, dark web | Who is responsible for a particular social cybersecurity attack? |

| Political Science | Digital democracy, participatory democracy | Forum creation | Forums, legal and policy documents | How can social media be used to support or cripple democracy? |

| Anthropology | Digital anthropology, online ethnography | Rapid ethnographic assessment, area studies | Interviews, participant observation | How do people in different cultures use social media? |

| Information Science | Cyber-physical-social security, social media analytics | Machine learning | Phone and banking data | How and when does information diffuse in social media? |

| Psychology | Social engineering | Social media analytics, case studies | E-mail, laboratory experiments | When do people contribute to conversations in social media? |

| Marketing | Viral marketing, online marketing | Social media analytics, statistics | Economic indicators, brand diagnostics | How can social media be exploited to market goods and services? |

| International Relations | Social cyberattacks, social cyberdefense, e-government | Case studies, historical and policy assessment | News reports, court cases, dark web | How can state and nonstate actors use social media to gain influence and win battles via nonkinetic activities? |

| Economics | Digital economy, cybersecurity economics | Economic incentive assessment, econometrics | Money trails, price indices, cryptocurrency rates and usage | How do social media influence the economy? |

A Social Cybersecurity Approach to Studying a False Information Campaign

The issue of the spread of false information on Twitter illustrates the distinction between the approaches of social cybersecurity and either pure computer science or pure social science.

Analysis of this problem using a purely computer science machine learning approach would begin with a training set containing tweets that had been marked as containing false information, such as a doctored image or a fact that had been checked and found to be inaccurate. Narrative would be assessed in terms of what words, concepts, sentiment, or gist could be extracted computationally (see Chapter 5). These extracted features would become part of the vector of information used in the machine learning model, and as a result, values for these features would become associated with the presence of false news. A desired end-result might be an automated fact checker, similar to spam checkers, which could run on multiple platforms independently of human intervention.

It is not uncommon for a reliable training set to have 2,000 to 10,000 marked items. This set might be split in half, with one half of the tweets used to train new algorithms and the other half used to assess their efficacy. Algorithms would then be devised for empirically categorizing tweets according to whether, and with what certainty, they contained false information. The utility of the new algorithms would then be determined by comparing their precision and recall against those of older algorithms. The new algorithms would have limited utility in any context other than that in which they had been developed. It is common for other researchers to reuse such training data in developing alternative models for comparison, but a mislabeled training set can yield misleading conclusions. Box 6-2 highlights other data challenges for this research, which would affect all three approaches.
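The evaluation step described above can be illustrated with a minimal Python sketch. It assumes binary labels (True meaning a tweet was marked as containing false information); the function names `split_half` and `precision_recall` and the six example labels are hypothetical and do not come from any study cited in this chapter.

```python
import random


def split_half(items, seed=0):
    """Shuffle labeled items and split them into two halves (train, test)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    mid = len(shuffled) // 2
    return shuffled[:mid], shuffled[mid:]


def precision_recall(y_true, y_pred):
    """Precision and recall for the positive class (True = false information)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Hypothetical human labels for six held-out tweets (illustrative only).
y_true = [True, True, True, False, False, False]
# Hypothetical predictions from an older and a newer algorithm.
old_pred = [True, False, False, True, False, False]
new_pred = [True, True, False, False, False, False]

old_scores = precision_recall(y_true, old_pred)
new_scores = precision_recall(y_true, new_pred)
```

Precision is the share of flagged tweets that were correctly flagged, and recall is the share of tweets with false information that were caught. A new algorithm would be judged an improvement only if it does better on the same held-out half, which is why a mislabeled set can mislead every later comparison that reuses it.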

In contrast, a pure social science approach to the same problem might be to begin by defining false information and its nuances in the context of a set of tweets, so that false tweets relative to that context could be identified. Then a quantitative researcher might statistically assess differences between sets of tweets with false information and sets of tweets without false information, using such metrics as the number of tweets, the topic areas addressed, the number of times tweets were retweeted or liked, and so on. This analysis would test a series of hypotheses derived from theories of human behavior (not technology) about, for example, rumor diffusion, attitude formation, persuasion, and social influence. Given the same set of tweets used by the computer scientists for training, the social scientist might assess the characteristics of the tweets and tweeters that affected interrater reliability5 in determining whether a tweet contained false information.
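Interrater reliability can be quantified in several ways; the text does not name a particular statistic. As one common option, the sketch below computes Cohen's kappa for two raters, which corrects raw agreement for the agreement expected by chance. The function name and the four example ratings are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who labeled the same items."""
    n = len(rater_a)
    if n == 0 or n != len(rater_b):
        raise ValueError("both raters must label the same non-empty set of items")
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: computed from each rater's label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Hypothetical codes from two raters for four tweets (illustrative only).
rater_a = ["false_info", "false_info", "ok", "ok"]
rater_b = ["false_info", "ok", "ok", "ok"]
kappa = cohens_kappa(rater_a, rater_b)
```

Here the raters agree on three of four tweets (0.75), chance agreement is 0.5, and kappa is 0.5. A researcher could then ask which characteristics of tweets or tweeters are associated with the items on which raters disagree.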

Social science researchers would likely use multiple qualitative and/or quantitative methods to support the utility of their theoretical model—for example, to understand whether narratives containing false information were different from those without such information, whether different actors used different narratives, what characteristics of actors or groups made them susceptible to believing false information, or what features of narratives containing false information made them persuasive.

In other words, the computer scientist might seek to develop algorithms for identifying false news and deceptive actors in order to eliminate vulnerabilities in social media technologies to prevent the spread of misinformation. In contrast, the social scientist might seek to understand the differences in types of disinformation; the social, economic, and psychological motivations behind deception; and the aspects of human cognition, social cognition, and attitude formation that affect when an individual or group is susceptible to false information.

Drawing on the potential benefits of both of these approaches, a social cybersecurity researcher would take into account the following:

- how social media technology can be manipulated to affect who receives which messages at which times;

- the way the messages are presented and accessed;

- the way humans, individually and in groups, can create, access, be influenced by, and influence others using these features of the technology;

- how the content of a message can be manipulated to affect its persuasiveness, or the tendency of the technology to suspend the sender or recommend the message;

- the features of the content that affect its longevity (e.g., the presence of images);

- the similarities and differences among messages and the narratives and counternarratives coming from, going to, and being accessed by different users; and

- how the messages and technology could be manipulated to build up, link, or break down groups, and manipulate both the social network and people’s perception of it.

___________________

5 Interrater reliability refers to the level of agreement between those rating or coding a particular item.

Social cybersecurity researchers engage simultaneously in developing both method and theory and determining whether SBS hypotheses hold up in real-world settings. They would use high-dimensional network analytics6 to analyze such questions as who is interacting with whom and who shares what narratives with whom. They would use visual analytics, statistics, and text mining to extract narrative features in order to characterize the empirical profile of messages that do and do not contain false information, the dialogues in which those messages are embedded, the narratives and counternarratives under discussion, the users that do and do not send the messages, the types of users and their motivations for sending those messages, and the groups that are or are not receptive to the messages. New methods would likely be tested on a combination of new and old data. As theoretical accounts are modified, social cybersecurity researchers develop new algorithms for collecting data on specific activities or measuring key features of those activities. The utility of these new methods and theories resides in the extent to which they support explanation and prediction in the wild, are reusable, and can be extended to new domains.

OPPORTUNITIES FOR THE IC

The field of social cybersecurity offers two primary benefits for the IC. First, it provides a means of strengthening the capacity of the United States to assess, predict, and mitigate the impact of attacks in the cyber-mediated environment that are aimed at affecting the hearts, minds, and welfare of U.S. citizens, corporations, and institutions. Second, the field provides a means of increasing U.S. capacity to assess, monitor, and forecast changes in behavior in other countries using social cyberintelligence.

The United States is engaged in an ongoing war in cyberspace, which is being conducted to a significant degree in and through social media (Shallcross, 2017; Waltzman, 2015): social cybersecurity threats are pervasive and on the rise because foreign adversaries and criminals exploit features of social media; 50 percent of the 10 worst social media–based cyberattacks occurred in 2017 (Wolfe, 2017). Spear phishing (sending a malicious file or link through an innocuous message) is also on the rise (Frenkel, 2017).

___________________

6 High-dimensional network analytics is the use of networks with multiple dimensions, such as a series of time-varying networks, networks with geocoordinates (geonetworks), or a set of networks varying in types of nodes and links (a metanetwork) (Carley, 2002).

A key role of the intelligence analyst is to understand, explain, assess, and forecast the social threats in cyberspace and to counter those threats, which include the manipulation of information for nefarious purposes. Russia and China both have and use technologies that can manipulate content on social media by altering or disguising what is being said or who appears to be saying it, and influencing who will read or receive what information. Social cyber-mediated interference in elections is common. Bots, trolls, and cyborgs have supported information and disinformation campaigns aimed at influencing elections in the United States, Britain, Germany, and Sweden. Social cybersecurity attacks are prevalent: by one estimate, as many as one in five businesses have been subjected to a social media–based malware attack.7 Such cyberattacks are conducted by individuals, groups, nonstate actors, state actors, and actors sponsored by states, often supported by the use of bots. Because these actors vary in their capabilities, so, too, does the quality of their information maneuvers (Darczewska, 2014; Snegovaya, 2015; Zheng and Wu, 2005).

Virtually anything that can be represented in digital form can be falsified. Tools for falsifying content include fake actors (personas) (Mansfield-Devine, 2008), fake antivirus software (Stone-Gross et al., 2013), and fake websites (Holz et al., 2009). The spread of such intentionally deceptive material, particularly the spread of false information, has the potential to undermine societies and is a growing concern for governments around the world (Allcott and Gentzkow, 2017; Roozenbeek and van der Linden, 2018; van der Linden et al., 2017). The accuracy of recorded sound and images can no longer be taken for granted. Software can be used to alter digital images, mimic the sounds of human voices, and create simulated videos (e.g., Piotrowski and Gajewski, 2007; Kim et al., 2018). This technology can be used to portray people saying things they did not say and doing things that never actually occurred. The growing ability to fabricate audio and digital information not only complicates the task of societies in distinguishing between reality and false narratives but also complicates the intelligence analyst’s task in detecting deception (Joseph, 2017).

The IC must rely on open-source information in addressing a range of issues (Best and Cumming, 2007; Bean, 2011). Intelligence analysts collect, manage, and assess open-source data, seeking to understand the biases contained in the data, recognize when the data have been manipulated by an adversarial party, and recognize when individuals and communities in the United States are under attack in the open-source information environment (Omand et al., 2012). Social cybersecurity science provides many of the tools and methods that can help meet these challenges.

___________________

7 See https://www.pandasecurity.com/mediacenter/social-media/uh-oh-one-out-of-five-businesses-are-infected-by-malware-through-social-media [July 2018].

Finally, the analyst has a need to understand which individuals, groups, and communities are at risk of being manipulated through social media and how that risk can be mitigated. This task includes understanding when the analyst and the IC organization are at risk. Meeting this challenge requires effective means of training IC analysts to recognize indicators and warnings that social cyberattacks are occurring, to be aware of the kinds of social cyber-mediated attacks that can occur and their consequences, and to operate safely in the social cyberenvironment. The IC needs to recognize quickly when it is under social cyberattack, as well as to identify the ways in which it is susceptible to related risks, such as insider threat, information maneuvers designed to discredit an investigation, or denial-of-service events conducted through social media. The field of social cybersecurity offers important perspectives on how to recognize and respond to such attacks. Other SBS research, particularly in the application of organization theory to high-risk organizations, provides guidance on how to promote heedful interaction in the cyber-mediated realm and how to develop and sustain an effective social cybersafety culture.

EXAMPLE APPLICATION: SOCIAL INFLUENCE ON TWITTER

An example illustrates the contributions of the social cybersecurity approach to intelligence analysis. The Islamic State of Iraq and ash-Sham (ISIS) makes extensive use of social media in its operations (Blaker, 2015; Veilleux-Lepage, 2015). It uses social media for recruitment (Berger and Morgan, 2015; Gates and Podder, 2015); information warfare on local populations (Farwell, 2014); and possibly intelligence gathering and training. Similarly, Russian information operations use social media to influence social opinion and alter behavior. Social cybersecurity theories and methods have been used to identify what tactics are being used for these purposes and to explore their potential impact. Much of this work has been done using data extracted from Twitter, although cyberforensic techniques allow researchers to connect to information in other media (e.g., Facebook and YouTube) as well.

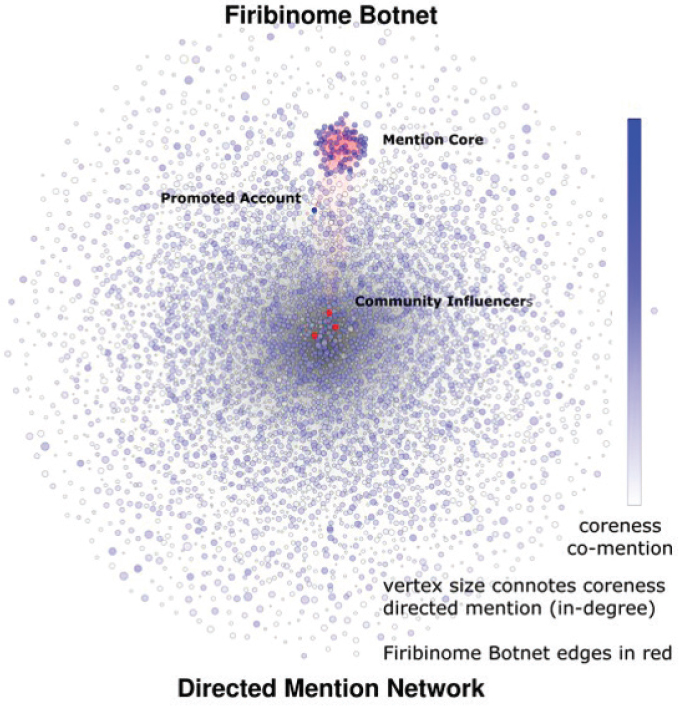

Consider an influence operation using Twitter to benefit ISIS. The high volume of tweets is such that Twitter may not have the resources to send every tweet from a particular user to all of that user’s followers, so prioritization schemes are needed to determine what to send and to whom. Twitter is organized organically into a set of topic-groups, which are dense communities of users that frequently mention each other and share topics, as shown in Figure 6-1 (Benigni et al., 2017a, 2017b). A topic-group is simply the way humans self-organize in many social media systems. Social media platforms often have ways of measuring the size of these topic-groups and use information about the group’s size, membership, and topics of discussion to determine what messages, topics, or people to prioritize in various lists.
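The text does not specify how dense communities of mutually mentioning users are detected. As a stand-in, the sketch below uses a standard graph technique, the k-core, which keeps only users connected by mentions to at least k other retained users. The function name `k_core` and the example edges are hypothetical, and this is not presented as the method used by Benigni and colleagues.

```python
def k_core(edges, k):
    """Return the users in the k-core of an undirected mention graph.

    edges: iterable of (user, user) pairs; a pair means one mentioned the other.
    """
    neighbors = {}
    for u, v in edges:
        if u == v:
            continue  # ignore self-mentions
        neighbors.setdefault(u, set()).add(v)
        neighbors.setdefault(v, set()).add(u)
    # Repeatedly drop users with fewer than k remaining connections.
    changed = True
    while changed:
        changed = False
        for user in list(neighbors):
            if len(neighbors[user]) < k:
                for other in neighbors.pop(user):
                    neighbors[other].discard(user)
                changed = True
    return set(neighbors)


# Hypothetical mention pairs: a tight triangle plus a loosely attached chain.
mention_edges = [("a", "b"), ("b", "c"), ("a", "c"), ("c", "d"), ("d", "e")]
core = k_core(mention_edges, k=2)
```

In this toy graph, users d and e fall away because each ends up with fewer than two connections, leaving the densely connected set a, b, and c.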

In our example, one of these topic-groups is focused on issues related to Syria. Initially, it also included many individuals who were, if not members of ISIS, at least sympathizers, and much of the discussion was related to ISIS recruitment and propaganda. Within Twitter topic-groups, users vary in their communicative power, so some individuals have a disproportionate ability to reach others in the topic-group when they tweet. These individuals are often identified using metrics from social network analysis such as page rank, superspreader, or superfriend. Superspreaders in particular have a large number of followers and are central figures in their topic-group. When such individuals tweet, their messages are more likely to be read and/or retweeted than are the messages of others in the topic-group, and tweets that mention such users are more likely to be retweeted, in part because of the algorithms used by Twitter to prioritize messages and users. The analytic theories and methods used in narrative studies, especially regarding what gives certain narratives and messages power (e.g., an underlying emotional message that leads to specific attitudes and beliefs), are relevant to this challenge (see Chapter 5). However, the application of these theories to social media technologies needs further study, particularly because of the limits in how emotions can be conveyed in or understood from short text statements.
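Of the metrics named above, page rank is the most widely documented. The sketch below is a minimal power-iteration version, assuming a directed network in which a link from one user to another means the first mentions or retweets the second. The account names, the damping value of 0.85, and the iteration count are illustrative assumptions; the sketch says nothing about how Twitter itself ranks users.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a directed mention/retweet network.

    links: dict mapping each user to the list of users they mention or retweet.
    """
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                # Pass this user's score to the users they point to.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A user who points to no one spreads their score evenly.
                share = damping * rank[node] / n
                for other in nodes:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical network: three accounts repeatedly point to one "hub" account.
links = {
    "follower1": ["hub"],
    "follower2": ["hub"],
    "follower3": ["hub", "follower1"],
    "hub": [],
}
scores = pagerank(links)
most_central = max(scores, key=scores.get)
```

The account that receives the most attention from others ends up with the highest score, which is the sense in which a highly ranked user has disproportionate reach within a topic-group.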

Twitter’s algorithms seem to prioritize tweets from superspreaders in the set of tweets received by other users. The term “echo chamber” refers to a set of users who tend to mention one another. The closer a topic-group is to being an echo chamber, the more rapidly information will diffuse within it. In these situations, emotions can escalate rapidly, and contradictory information is less likely to be broadcast. Messages from echo chambers appear to be prioritized in the set of messages Twitter sends to the topic-groups associated with those echo chambers. Thus if an echo chamber retweets a message from a superspreader, the members of the echo chamber are more likely to appear in the list of messages received by members of the topic-group.
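One simple way to express how close a set of accounts is to an echo chamber is the share of their mentions that stay inside the set. The sketch below computes that share; the function name `mention_insularity`, the account names, and the data are hypothetical, and this is not the measure used in the studies cited above.

```python
def mention_insularity(mentions, group):
    """Fraction of mentions sent by group members that target other group members."""
    group = set(group)
    sent = [(src, dst) for src, dst in mentions if src in group]
    if not sent:
        return 0.0
    internal = sum(1 for _, dst in sent if dst in group)
    return internal / len(sent)


# Hypothetical mention records: (sender, mentioned account).
mentions = [
    ("bot1", "bot2"), ("bot2", "bot3"), ("bot3", "bot1"),
    ("bot1", "imam"), ("user1", "bot1"), ("user1", "user9"),
]
bot_network = {"bot1", "bot2", "bot3"}
score = mention_insularity(mentions, bot_network)
print(score)
```

A value near 1.0 indicates that the accounts mention almost no one but each other; a pure echo chamber in the sense used here would score 1.0.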

Within the Syrian topic-group in our example, one superspreader is an imam. At this point, it is not known whether he is associated with ISIS. Enter the Firibi Gnome bot (an automated agent that engages in Internet activity and sends tweets), as shown in Figure 6-1. This bot (actually a network of bots) functions as a pure echo chamber. It retweeted messages from the imam, which caused members of the bot network to appear in the feeds of other members of the topic-group, who were often human. Thus, members of the topic-group began to follow these bots. At some point, the bot network started tweeting messages with a link to a charity website that was ostensibly collecting money for the children of Syria, a site that some believe is linked to money laundering for ISIS. Without any active behavior from the imam, members of the topic-group were swayed by this bot to give money. Retweets by those who sympathized with messages from the bot were sufficient to manipulate the topic-group and to change the Twitter algorithm’s prioritization of messages and their recipients (refer to Figure 6-1).

NOTE: Each dot (vertex) is a Twitter user who has sent a tweet about a topic of interest. Dots in red are linked to the Firibi Gnome bot. The mention core is the set of Twitter users who are densely connected by mentions. The largest, densest mention core is near the top. The Firibi Gnome bot is in that core. The promoted account is the Twitter site associated with a website that is collecting money for the children of Syria. The influencer is the imam’s Twitter account.

SOURCE: Benigni et al. (2019).

Social cyber researchers have developed methods that make it possible to track and understand such online developments. These methods can be used to identify topic-groups and echo chambers (Benigni et al., 2017b); identify influential users in social media, such as superspreaders and superfriends (Altman et al., 2018); identify core topics (Alvanaki et al., 2012); identify cross-media linkages (Dawson et al., 2018); and measure the potential reach of a message (Hong et al., 2011). Research is also under way on technology that could be used to support the identification or spread of false information. Examples include technology for fact checking (Rubin et al., 2015; Snopes8); image modification (Schneider and Chang, 1996); duplication of images (Ke et al., 2004); brandjacking attacks9 (Youngblood, 2016); sentiment mining (Pang and Lee, 2008); stance10 detection (Somasundaran and Wiebe, 2010); personality, gender, and age identification (Schwartz et al., 2013); location identification (Huan and Carley, 2017); and event detection (Wei et al., 2015). This work builds on ongoing computer science research that is well funded and in which advances are already being made.

Social cybersecurity research based on this work uses these computational methods in developing new sociotechnical theories and methods focused on the spread of multiple types of information maneuvers that were previously treated as a single phenomenon.

RESEARCH NEEDED IN THE COMING DECADE

Research in the field of social cybersecurity is needed on two parallel fronts: (1) research to establish new scientific methods and techniques capable of processing and analyzing the new types of data and high-dimensional networks made prevalent by social media; and (2) research to translate the resulting findings and techniques to operational tools that can be used by the IC.

___________________

8 Available: https://www.snopes.com/fact-check/category/fake-news [November 2018].

9 Brandjacking is the practice of mimicking the online identity of a business for the purpose of deceiving or defrauding users.

10 In this context, “stance” refers to a publicly stated opinion, particularly one that is shared by an online community.

Advances in computer science, such as in the use and application of machine learning, have provided powerful tools for analyzing online activity. However, these advances are not readily transferable to the analysis of online activity in real time, nor are they sufficient to illuminate the broader context in which the activity is taking place. Multidisciplinary computational social science research building on both technological advances in computer science and SBS research has the potential to advance the research infrastructure in the field of social cybersecurity and expand the intelligence analyst’s capability to address cybersecurity questions.

Having the tools necessary to predict and prevent attacks in the social cyberspace will require an aggressive research effort to identify, characterize, and understand such attacks. The committee sees opportunities to address a number of issues of concern for intelligence analysis:

- identifying who is conducting social cybersecurity attacks,

- identifying the strategies used to conduct such an attack,

- identifying the perpetrator’s motive,

- tracing the attackers and the impact of the attack across multiple social media platforms,

- quantifying the effectiveness of the attack,

- identifying who is most susceptible to such attacks, and

- mitigating these attacks.

For each of these opportunities, we provide an overview of the challenge, summarize the recent work on which future developments would build, and specify the nature of further work that can pay significant dividends in the coming decade.

Social Cyberforensics: Identifying Who Is Conducting Social Cybersecurity Attacks

One of the keys to mitigating and responding to social cybersecurity attacks is being able to identify the perpetrators and impose sanctions against them. However, identification of perpetrators is a difficult problem in the cyber-mediated environment. Overcoming technical issues such as IP spoofing11 can partly address this problem (Tanase, 2003), but the possibilities for overcoming the problem would be greatly expanded if it were possible to identify behavioral patterns at the individual and group levels, such as those associated with language use, location, credit taking, patterns of verbal communication, and use of images (e.g., Chen, 2015; Krombholz et al., 2015). Yet another need is the capacity to identify two actors appearing in two different media as in fact the same actor (such as when terrorist group members move from Twitter to a site on the dark web) (Maddox et al., 2016).

___________________

11 IP spoofing has been defined as an attack in which “a hacker uses tools to modify the source address in the packet header to make the receiving computer system think the packet is from a trusted source, such as another computer on a legitimate network, and accept it”; see https://usa.kaspersky.com/resource-center/threats/ip-spoofing [January 2019].

To illustrate, a writer’s identity may be revealed through the linguistic style of a piece of text, and some work has suggested that such clues can be traced. Researchers have developed a method of encoding stylistic attributes to develop so-called “writeprints” (markers akin to fingerprints) (Abbasi and Chen, 2008). This method is based on the premise that aspects of any individual writer’s usage (e.g., lexical, syntactic, structural, content-specific, and idiosyncratic features) are unconscious and persist from one document to another, so that they can be used to effectively identify an individual author (Pearl and Steyvers, 2012). Topic models have been built using these features. Thus it is now theoretically possible to develop documents that can exactly match the features of a particular author. Most of this work, however, is in early stages and is limited to English (Mbaziira and Jones, 2016).
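The idea of encoding stylistic attributes can be sketched in a few lines. The example below builds a crude style vector from function-word rates, mean word length, and punctuation rate, and compares two texts by cosine similarity. It is a minimal illustration under stated assumptions, not the writeprints method itself: the feature set, the word list `FUNCTION_WORDS`, and the sample texts are all invented for this example, and published approaches use far richer lexical, syntactic, and structural features.

```python
import math
import re
from collections import Counter

# Illustrative (assumed) list of function words; real systems use much larger sets.
FUNCTION_WORDS = ("the", "of", "and", "to", "in", "that", "is", "it", "but", "however")


def style_vector(text):
    """Encode a text as function-word rates plus two simple structural features."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    total = max(len(words), 1)
    features = [counts[word] / total for word in FUNCTION_WORDS]
    features.append(sum(len(word) for word in words) / total / 10.0)  # scaled mean word length
    features.append(len(re.findall(r"[,;:]", text)) / total)          # punctuation marks per word
    return features


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical writing samples.
sample_a = "The plan is simple; however, it is the timing that matters."
sample_b = "Send money now and tell friends to join and share."
print(cosine_similarity(style_vector(sample_a), style_vector(sample_b)))
```

Attribution systems built on this idea compare a questioned text against vectors for known authors; a high similarity is only suggestive, since short texts provide little stylistic evidence.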

Other detection tools are possible in the near term. Recent advances in social network/network science (Benigni et al., 2019) and social cyberforensic techniques (Al-Khateeb et al., 2017) offer promising possibilities for identifying perpetrators. The social media reach of perpetrators is often enhanced by the use of bot, cyborg, Sybil, or troll techniques (Johansson et al., 2013; Klausen, 2015).12 Indeed, as discussed above, many of the actors in social media may be bots; one study suggests that this may be the case for 48 million Twitter accounts (Varol et al., 2017). And according to a recent Pew Research Center report, an estimated two-thirds of tweeted links to popular websites were shared by suspected bots (Wojcik, 2018). Emerging techniques are making it easier to identify whether perpetrators are humans, bots, or cyborgs, and further research is needed to increase the operational utility of these techniques for the intelligence analyst (e.g., Beskow and Carley, 2018; Morstatter et al., 2016). Bots and cyborgs that are used to influence and manipulate individuals and communities, often by exploiting features of a particular social medium, are evolving in sophistication and form as media platforms and bot-detection techniques evolve. At present, however, understanding of how bots and cyborgs evolve is limited to knowing that they are becoming more sophisticated, and no technology for predicting their evolution exists.

___________________

12 A cyborg is an actor that is part human and part bot, frequently a human assisted by algorithms. Sybil is another, less widely used name for a bot. A troll is a user who posts inflammatory or off-topic messages in an online community in order to start quarrels or upset people; a troll account may be used by a single person, a group, or cyborgs.

Information Maneuvers: Identifying the Strategies Used to Conduct an Attack

Used to manipulate individuals and groups, an information maneuver is any communication strategy intended to exaggerate or mitigate the spread of selected information or opinions, garner information, influence opinion, build or break connections among individuals to enable or prevent the spread of information or opinion, or exaggerate or minimize the influence of key actors (Al-Khateeb and Agarwal, 2016). A typical analytic approach to identifying information maneuvers is to look for something odd in social media posts, such as an increase in messages or the appearance of a new actor, and then collect specific data related to this anomaly. In so doing, an analyst working today would conduct detailed legwork involving tracking and reading messages. This approach is inherently costly, cannot be applied on a large scale, and is difficult to teach. A growing body of multidisciplinary research, however, has laid the foundation for new tools to augment intelligence analysis by detecting information maneuvers in a semiautomated fashion, identifying their intended audience, and classifying them by type. Much of this research has grown out of work on information warfare, marketing studies, and analyses of bot activity.
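The first step of the manual approach described above, noticing an unusual increase in messages, is the part most easily automated. The sketch below flags days on which message volume far exceeds the recent norm. It assumes daily message counts for a topic are already available; the function name, window length, threshold, floor on the standard deviation, and the counts themselves are illustrative choices rather than values drawn from the research cited here.

```python
from statistics import mean, stdev


def volume_spikes(counts, window=7, threshold=3.0):
    """Return indices whose count exceeds the trailing-window mean by
    more than `threshold` standard deviations."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mu = mean(history)
        # Assumed floor of 1.0 avoids division by zero on perfectly flat history.
        sigma = max(stdev(history), 1.0)
        if (counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Hypothetical daily message counts for one topic-group.
daily_counts = [100, 104, 98, 101, 97, 103, 99, 102, 480, 110]
print(volume_spikes(daily_counts))
```

A flagged day is only a prompt for further collection and analysis; classifying the maneuver and identifying its audience require the kinds of methods discussed in the remainder of this section.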

As discussed in Chapter 5, a central research challenge has been to investigate how fragilities of human social cognition and emotion can be exploited in an online context to shape information access and opinions, as well as how primary influencers exert their influence, and to better understand the nature of groups that are influenced through social media. These questions are important in seeking to understand information maneuvers and social cyberattacks, which typically operate at both the social network level (who is communicating with whom/influenced by whom) and the knowledge network level (who shares what information or opinions with whom). Such attacks typically exploit social cognition, including people’s perception of the generalized other (that nebulous entity that represents one’s opinion of what is common across the group), generalization strategies, and social influence procedures (Benigni et al., 2017a).

Information maneuvers can take different forms with very subtle nuances, and they require elaborate setups. Examples include maneuvers to manipulate an election (Metaxas and Mustafaraj, 2012), social engineering campaigns (Kandias et al., 2013), and satire campaigns (Babcock et al., 2018).

A social engineering campaign is the psychological manipulation of individuals to get them to perform specific actions, such as divulging confidential information or state secrets. Social engineering is one of the many tactics used in social influence campaigns on social media, such as those aimed at insiders (Kandias et al., 2013). Social engineering attacks, such as phishing and vishing (voice phishing), exploit not only factors well known to drive people’s responses (see, e.g., Kumaraguru et al., 2007) but also how those responses are constrained and amplified by new technology. Traditional social science theories suggest that, whether they are conscious of it or not, people are motivated by (Cialdini, 2001):

- reciprocity, or a sense of obligation to return favors;

- commitment, or a sense of obligation to do what one says one will;

- authority, or an inclination to obey or follow authority figures;

- social influence, or a tendency to do what others do;

- sociability, or a tendency to do what those one likes suggest; and

- scarcity reduction, or a tendency to desire what is scarce.

In a cyber-mediated environment, these motivations act somewhat differently because of the influence of other factors, such as a preference for easy modes of response, readily available information, and minimization of effort. Further, the features of the communication technologies influence who is motivated by what, and when, by making it possible to alter

- the way information is prioritized;

- constraints on choices;

- the attractiveness of options (e.g., using color, font and images, or repetition); and

- how easy it is to tell whether one is interacting with people, organizations, or bots.

A satire campaign is the use of exaggeration, humor, or irony to expose the inappropriate actions or views of particular people, groups, or organizations. In social media contexts, however, satire often appears out of context and so may not be recognized as such. Satire attacks can go viral and may be mistaken for news and then recharacterized as “fake news.” This latter pattern is sometimes referred to as “the Stewart/Colbert effect,” referring to the unintended persuasiveness of comedians’ personas (Amarasingam, 2011). Satire attacks are among the many tactics used in social influence campaigns on social media, such as those aimed at political groups (Babcock et al., 2018).

The literature on information warfare also sheds some light on new forms of information maneuvers. Research on information warfare typically considers four broad strategies: distort, dissuade, distract, and dismay (Snegovaya, 2015). Classically, these strategies depend on how messages are constructed and communicated; there are well-known rhetorical strategies for persuasion (Ferris, 1994). Although forms of information maneuvers would fit into these four broad strategies, it is not yet known whether there are other strategic purposes to consider, or whether automatic characterization of an information maneuver or social media campaign as representing one of these strategies is possible. In addition, recent research has demonstrated that information maneuvers in social media may not only manipulate what is being said but also foster or undermine online communities or topic-groups associated with a message (Benigni et al., 2017b) or identities and brands (i.e., brandjacking [e.g., Ramsey, 2010]).

Researchers are currently seeking ways to use features of social media posts and actors and the delivery/response sequence in characterizing information maneuvers. They have identified features that could work, including manipulation of emotions (Stieglitz and Dang-Xuan, 2013), presence (Naylor et al., 2012), group formation (Benigni et al., 2019), image manipulation (Tsikerdekis and Zeadally, 2014a), speed of spread (Vosoughi et al., 2018), and manipulation of the message and the group by bots (Benigni et al., 2017b). A large body of research explores the relationships among emotions, emotion manipulation, and the presentation of emotion in social media (Asur and Huberman, 2010; Gilbert and Hutto, 2014; Liu and Zhang, 2012; Pang and Lee, 2008; Stieglitz and Dang-Xuan, 2013). Additionally, particular emotions have specific triggers and functions, all of which lead to different cognitions and prime different actions/decisions. Thus, research on how specific discrete underlying emotional states lead to different consequences would be useful.

Much of the work in this area uses highly simplistic measures of emotion focused on the valence and strength of words in general, in context, or across a body of posts (stance). Meanwhile, more sophisticated approaches to emotion, such as affect control theory and discrete emotions theory (Heise, 1987; Robinson et al., 2006), provide the basis for relating emotions to behavior and identity construction empirically. These approaches, however, are generally not applied to social media (an exception being Joseph and Carley [2016]), and the research on social media and emotions is still not well connected to the research on affect control, emotion management, and group behavior.
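A word-level valence measure of the kind described above can be sketched as follows. The lexicon `VALENCE` and its scores are invented for this illustration and do not come from any published resource; the point is to show both how such a measure works and why it is limited.

```python
import re

# Hypothetical valence lexicon: positive scores for pleasant words, negative for unpleasant.
VALENCE = {"love": 2, "hope": 1, "safe": 1, "fear": -2, "hate": -3, "attack": -2}


def valence_score(post):
    """Mean valence of the lexicon words found in a post (0.0 if none are found)."""
    words = re.findall(r"[a-z]+", post.lower())
    scored = [VALENCE[word] for word in words if word in VALENCE]
    return sum(scored) / len(scored) if scored else 0.0


print(valence_score("We hope the children are safe"))
print(valence_score("no fear"))
```

The second call shows the weakness of this approach: because the measure looks only at individual words, a post that negates fear is scored the same as one that expresses it. Such a score also says nothing about which discrete emotion is involved or how it relates to identity and behavior.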

Another approach to characterizing information maneuvers, the use of images and videos (including doctored or fake images), has become possible with the advent of new platforms that better support images and videos, as well as increasing bandwidth, the prevalence of smartphones, and growing consumer interest in moving from text to images or videos to communicate. Recent studies in this area have explored the use of images and videos in social media by terrorist groups to recruit, spread messages, distort opinions, sow fear, and spread misleading health information (Farwell, 2014; Huey, 2015; Mangold and Faulds, 200; Syed-Abdul et al., 2013). Automated image and video analysis, however, is being carried out largely in the field of computer science and has not made its way to the field of social cybersecurity. Although hundreds of social cybersecurity studies have used computational text analysis methods, there appear to be only a few that have used any form of computational image or video processing.13 An area of research ripe for breakthroughs in the near future, then, is understanding how the presentation of emotion-laden messages and images in social media can influence groups, how such presentation varies across messages containing true and false information, and how the impact of such messages and images can be countered within and through information maneuvers.

___________________

13 This observation is based on an examination of all papers identified by Carley and colleagues (2018) as being in the area of social cybersecurity.

Intent Identification: Identifying the Perpetrator’s Motive

Although progress is being made in the development of methods for identifying when information maneuvers have occurred, understanding the intent behind these maneuvers presents its own challenges (e.g., Sydell, 2016). People choose to deceive others for many reasons, including to avoid something negative; to fulfill a desire for fun, economic benefit, or personal advantage; to bolster self-esteem, make others laugh, or act altruistically; or to be polite. They may also, of course, seek to deceive for malicious reasons (Bhattacharjee, 2017). Research on deception by state and nonstate actors in cyberspace has distinguished among three types of cyberattacks: they may be conducted for economic reasons (Lotrionte, 2014) or strategic cyberespionage and military reasons (Geers et al., 2013), or be opportunistic and politically motivated (Kumar et al., 2016).

An intriguing aspect of the motivation for information maneuvers is that much of the activity in social media is not malicious, but is aimed at spreading news or information on new products, sharing information on social activities, and building communities of like interest and concern. At a high level, bots and information maneuvers have been used in similar ways for both illicit and legal gain and with both malicious and nonmalicious intent. Thus information maneuvers useful for spreading false information are also useful for spreading true information. Tactics used to market real products (e.g., Safko, 2010; Scott, 2015) are also used to market illegal products (Benigni et al., 2019). And procedures used to recruit and support followers for sports teams are also used to recruit and support followers for terrorist groups (compare Henderson and Bowley [2010] and Farwell [2014]). Researchers have suggested that differences in metadata, word choice, and timing of messages may provide clues to the intent behind messages (Java et al., 2007; King, 2008), but determining the intent of a particular actor, or at least distinguishing malicious and nonmalicious activity in an automated fashion, remains a challenge.

Assessment of images and videos is frequently used to develop insight into the intent of those who spread deceptive information. In one example, a dismay maneuver used images of a bomb attack in the White House with the intent to spread terror (Weimann, 2014). In another case, a Russian information operation used fake images, some from video games (Luhn, 2017; Murphy, 2017), in tweets and Facebook posts claiming that the United States was supporting ISIS. Fake images of frightening phenomena, such as sharks in subways or airports flooded with water, are routinely circulated in the immediate aftermath of disasters to contribute to disruption (Gupta et al., 2013). Indeed, compendiums of such images have been developed, so many are reused or doctored and reused whenever disasters occur. Image analysis holds promise for understanding intent in such cases.

Researchers are also exploring other possible indicators that can be used to identify deception, including linguistic markers (Briscoe et al., 2014; Zhou and Zhang, 2008; Zhou et al., 2003); activity indicators (e.g., those used in detecting bots [Subrahmanian et al., 2016]); nonverbal behavior and the use of multiple accounts (Tsikerdekis and Zeadally, 2014a); and social structural behavior (i.e., behaviors that change who is interacting with whom and who is important in the social network) (Pak and Zhou, 2014). However, the ability to engage in deceptive behavior and the types of behaviors possible are dependent on the technology itself (Tsikerdekis and Zeadally, 2014b), language (Levine, 2014), the human social network (Chow and Chan, 2008; Tsikerdekis and Zeadally, 2014b), and human cognition (Spence et al., 2004). Other research has examined the profiles, characteristics, and motivations of hackers or cybercriminals who create fakeries or use deception or deceptive messages (Décary-Hétu et al., 2012; Papadimitriou, 2009; Seigfried-Spellar and Treadway, 2014). Still other work seeks to identify the characteristics of individuals and groups that make them vulnerable to deceptive messaging (Pennycook and Rand, 2018).

Some of this work has led to automated fact checkers that rely on both human- and machine-labeled input (e.g., Snopes;14 Hassan et al., 2015), software tools for identifying deception based on verbal cues in texts (Zhou et al., 2004), tools for creating and detecting fake personas (even those that create personas with disabilities) (DeMello et al., 2005; Schultz and Fuglerud, 2012), and software for modifying text and auditory and video/image data streams to engender trust in the false information (Emam, 2006; Stamm et al., 2010). While there has been a fair amount of work on detecting in-person deception based on auditory and visual cues, tools for autoidentification based on findings about auditory or visual human “tells” are less well developed (Vrij et al., 2010). Thus, ongoing research in social cybersecurity is seeking ways to uncover intent and deception computationally.

___________________

14 Available: https://www.snopes.com/fact-check/fake-news-stories [October 12, 2018].

Cross-Media Movement and Information Diffusion: Tracing the Attackers and the Impact of the Attack across Multiple Social Media Platforms

Classic theories of information diffusion are largely agnostic with respect to what media are used, and those that consider the media used often focus on social presence (Cheung et al., 2011), speed and network externality effects (Lin and Lu, 2011), and media features (Lee et al., 2015). In social media, however, there is not one medium but many. Studies have shown that movement among media or links from a message in one medium to another can increase the spread and reach of messages (Agarwal and Bandeli, 2017; Suh et al., 2010). Such movement among media can be engineered by bots (Wojcik, 2018), and it allows actors to “hide,” taking groups and messages with them when they move between media (Al-Khateeb and Agarwal, 2016; Liang, 2015) and thereby creating safe havens.15 An article on the online news site Wired describes the phenomenon this way:

The Islamic State maximized its reach by exploiting a variety of platforms: social media networks such as Twitter and Facebook, peer-to-peer messaging apps like Telegram and Surespot, and content sharing systems like JustPaste.it. More important, it decentralized its media operations, keeping its feeds flush with content made by autonomous production units from West Africa to the Caucasus—a geographical range that illustrates why it is no longer accurate to refer to the group merely as the Islamic State of Iraq and al-Sham (ISIS), a moniker that undersells its current breadth.16

Some social media platforms are more likely to be used to receive rather than to generate messages. Most rumors on Twitter, for example, originate in other media (Liu et al., 2015), most notably in blogs. People in general use different media for different purposes (Haythornthwaite and Wellman, 1998). To be sure, diffusion models exist for social media such as Twitter (Xiong et al., 2012) and Flickr (Zhao et al., 2010). However, there are only a few theories of or models for information diffusion when multiple social media are present and in use (an exception being a model called Construct [Carley et al., 2009, 2014], and even this model needs to be extended to account for the newer social media platforms). Although technologies are available for tracking a message or an individual across media (e.g., Maltego), theories of such moves and the ability to predict them do not exist (Al-Khateeb et al., 2017). Work in this area is currently limited by barriers to data collection, diffusion theories that do not account for who uses what media when, and a lack of good digital forensic skills and techniques (Bidgoli, 2006; Huber et al., 2011). Thus, most of this research considers only a single medium, such as blogs (Gruhl et al., 2004), Twitter (Romero et al., 2011), or e-mail (Mezzour and Carley, 2014). In stark contrast, most marketing guidance recommends the use of multiple media (e.g., Hovde, 2017). The technology exists to conduct cross-media assessment, but it is in its infancy and not widely available.

___________________

15 For example, “terrorists and extremists are increasingly moving their activities online—and areas of the web have become a safe haven for Islamic State to plot its next attacks, according to a report published last week by the London-based Henry Jackson Society” (quoted from http://www.homelandsecuritynewswire.com/dr20180409-stealth-terrorists-use-encryption-the-darknet-and-cryptocurrencies [April 2018]).

16 See https://www.wired.com/2016/03/isis-winning-social-media-war-heres-beat [April 2018].

Real-Time Measurement of the Effectiveness of Information Campaigns: Quantifying the Effectiveness of the Attack

Real-time measurement of the impacts of information campaigns is a classically difficult problem, as those impacts often are slow to develop. In general, research is sparse on how to assess empirically and in real time the impact or success of an information maneuver (Carrier-Sabourin, 2011). The vast quantity of data and increased speed of communication that characterize social media create an environment in which it may be possible to make progress in this area. A number of metrics for measuring the reach and influence of messages and actors on social media have been suggested (Hoffman and Fodor, 2010; Sterne, 2010). Some of these are predicated on notions of social network influence (Benigni et al., 2019) and still others on rhetoric-based conceptions of reach (Carley and Kaufer, 1993). Nevertheless, there is little consensus among researchers on what to measure, how to use these measures strategically, and whether proposed metrics are valid (Barger and Labrecque, 2013). Furthermore, it is unknown how the data collection strategy affects these metrics and whether, as a consequence, the measurement results could be biased. Another key challenge in this area is the creation and use of measures that can capture the dynamics of the underlying social and knowledge networks. Although approaches for assessing network dynamics exist (Ahn et al., 2011; Carley, 2017; Snijders, 2001), only those that can be used for incremental assessment scale well (Kas et al., 2013). Existing methods also cannot handle high-dimensional networks and so cannot assess impact in the social and knowledge networks simultaneously.
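The appeal of incremental assessment can be seen in a deliberately simple case. The sketch below maintains a network measure as new interactions arrive, rather than recomputing it from the full history each time. Degree centrality is used only because its incremental update is trivial; it is an assumption of this example, as are the class name `StreamingDegree` and the sample interactions, and more demanding network measures require far more elaborate incremental algorithms.

```python
from collections import defaultdict


class StreamingDegree:
    """Maintains undirected degree centrality as interactions stream in."""

    def __init__(self):
        self.neighbors = defaultdict(set)

    def add_interaction(self, a, b):
        # Each new interaction touches only the two accounts involved.
        self.neighbors[a].add(b)
        self.neighbors[b].add(a)

    def degree_centrality(self, user):
        # Share of the other known accounts that this user has interacted with.
        n = len(self.neighbors)
        if n <= 1:
            return 0.0
        return len(self.neighbors.get(user, ())) / (n - 1)


# Hypothetical stream of interactions between accounts.
network = StreamingDegree()
network.add_interaction("a", "b")
network.add_interaction("a", "c")
print(network.degree_centrality("a"))
network.add_interaction("d", "b")
print(network.degree_centrality("a"))
```

Each update costs the same regardless of how much history has accumulated, which is the property that allows incremental methods to keep pace with high-volume social media streams.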

At-Risk Groups: Identifying Who Is Most Susceptible to Attacks

The risk of being susceptible to information maneuvers has traditionally been considered greatest among those who are socially or economically disadvantaged, and risk reduction has been viewed as a function of education, awareness, empowerment, and reduction of disparities. Studies focused on the 2016 elections, however, found that while education was positively associated with accurate recognition of the falsity of news stories, so, too, were age and total media consumption (Allcott and Gentzkow, 2017). Current research suggests several factors that could influence those at risk: lost trust in mainstream media (Ekovich, 2017), overly filtered information through the use of personalized news (Flaxman et al., 2016), being embedded in topic-groups that are echo chambers (Benigni et al., 2019), and the inability to recognize that the information received is from bots (Benigni et al., 2017b). Other research, however, suggests that the majority of people do not trust information on social media (Ekovich, 2017) and that the sparse empirical evidence available is not definitive on the impact of filtering (Zuiderveen Borgesius et al., 2016).

Inoculation techniques to reduce the susceptibility of individuals and groups to the spread of disinformation and to being affected by information warfare activities often take the form of media education. These techniques, however, are not based on empirical evidence and a deep understanding of the features of communication and entertainment technologies that can be exploited to spread disinformation. Such features include the short length of tweets, which makes it difficult to tell whether a message is satire (Babcock et al., 2018); marketing services that use bots to send tweets from an individual’s account as that person (Benigni et al., 2017b); and the removal (by Google) of image information that had made it easier to identify the falsity of information (Stribley, 2018).

SBS research shows that people persist in beliefs even when the evidence for those beliefs is discredited; facts do not change opinions (Kolbert, 2017). Thus knowing that news is manufactured does not keep people from believing it (Lilienfeld, 2014). A variety of mechanisms underlie this phenomenon (Shermer, 2002): for example, (1) the belief that the untrue is fun, (2) the belief that true information from an untrusted source is not trustworthy, (3) social influence, (4) a reduction in cognitive dissonance, and (5) confirmation bias. Given the high volume of data in social media, it is often argued that trust in the source is used as a way of filtering information and reducing cognitive load, in which case false information from a trusted source is more trusted than true information from an untrusted source (Tang and Liu, 2015). Furthermore, a number of mechanisms have been suggested as supporting the sharing of false information, such as a preference for believing and sharing novel over more familiar information; a preference for stories that generate particular emotional reactions, such as surprise or disgust (see, e.g., Aral and Van Alstyne, 2011; Berger and Milkman, 2012; Itti and Baldi, 2009); a preference for believing what others believe (Friedkin, 2006); and appeals to the generalized other (Benigni et al., 2019).

It has become commonplace for social media providers, such as Facebook and Twitter, to use hidden algorithms to guide users to particular types of content and to other users with similar interests. This algorithmic strategy increases the likelihood that users will experience repeated exposure to particular individuals, groups, messages, and narratives. Bots and cyborgs can exploit these algorithms and create online communities in which alternative messages are suppressed, appeals to the generalized other foster group acceptance (Holdsworth and Morgan, 2007; Mead, 1934), images and humor are used to limit discussion (Meyer, 2000), and users are exposed to artificially enhanced social influence (Benigni et al., 2019). Social influence is critical in affecting one’s beliefs and attitudes (Friedkin, 2006), and repeated exposure to these “contained” online communities increases the likelihood that an individual will embrace particular information and messages.17 Spammed messages sent to email or social media accounts are another mechanism that has been instrumental in driving people to fake websites and the adoption of malware (Moore et al., 2009).

Research has explored the spread of false information and has begun to document its potency. In a recent large-scale study, for example, Vosoughi and colleagues (2018) found that false information diffused “significantly farther, faster, deeper and more broadly than the truth in all categories of information” (p. 1147), although other studies have found that this is the case only when an offline receptive group exists (Babcock et al., 2018).

___________________

17 For examples, see work by Unkelbach (2007); Unkelbach and Stahl (2009); Alter and Oppenheimer (2009); and Fazio et al. (2015).

The Most Effective Responses: Mitigating These Attacks

Direct counterattacks on those conducting information maneuvers are often unsuccessful. Terrorists suspended from Twitter, for example, will create new accounts and engage in this activity even more vigorously (Al-Khateeb et al., 2017). Strategies focused on the receivers of the information and countermessages tend to be more effective,18 but the success of such strategies depends on how messages are constructed and communicated vis-à-vis the group that is to be counterinfluenced. Deep understanding of the sociopolitical context is also necessary to keep the messaging attempt from backfiring. Research has yielded numerous guidelines for the creation of effective countermessages: for example, increasing credibility through the use of visuals (Murakami et al., 2009), not engaging in direct confrontation (Goulston, 2015), including a URL (Suh et al., 2010), being unyielding in stance (Lajeunesse, 2008), creating trust in the source (Tarran, 2017), and providing for sufficient resources and planning (Southwell et al., 2017). Because information maneuvers in social media involve manipulation of both groups and messages, moreover, new approaches to countermessages that include attention to the nature of the group are needed. Examples of such approaches include the application of research on participatory democracy and deliberative democracy techniques (Mutz, 2006), as well as influence maximization (Chen et al., 2010).

Although such research provides some information to guide countermessaging, it does not address a key problem occurring in social media—that, as discussed earlier, those with similar opinions form topic-groups through which they receive constant social support for not listening to counterarguments (the echo chamber effect [Bakshy et al., 2015]). Individuals confined to a topic-group may attend selectively only to certain messages and not even be exposed to any counterarguments (what is known as the filter-bubble effect [Flaxman et al., 2016]). One potential countermessaging approach to address this problem is the use of a context-aware system that directs messages from one actor to another (Conroy et al., 2015; Fischer, 2012).

A key limitation of this research, however, is that it tends to focus either on winning the argument or on diffusing the message, and not on winning a diffusion contest against a competing message. The majority of the empirical work on the diffusion of competing ideas has used simulation (e.g., Carley, 1990; Krackhardt, 2001). However, these studies do not address how the type of communication medium affects the spread of ideas. A practical challenge in this area is that even if the perfect countermessaging strategy were known, its use might not be possible under current rules governing the IC.

___________________

18 In operation, policies dictate which kinds of strategies are permitted under the law.

With respect to such issues as the spread of malware through social media and phishing attacks, research has expanded to look at policies, defenses, and engineering solutions that can mitigate the impact of such attacks (Fette et al., 2007; Galbally et al., 2014; Kumar et al., 2016; Lin et al., 2009; Yin et al., 2007; Zahedi et al., 2015; Zargar et al., 2013). At the organizational level, much of this work has focused on technical solutions to preventing or minimizing the impact of malware spread by social media (Timm and Perez, 2010) and on training and toolbars to avoid phishing (Wu et al., 2006). Research is increasingly showing that a mitigation strategy needs to employ a three-pronged approach, encompassing corporate policy, social cybersecurity training, and technology (Cross, 2013; Oxley, 2013). Much of this research has been based in the areas of policy and cybersecurity without drawing on the wealth of research in organizational science. The organizational literature suggests that in general, when in a high-risk situation, an organization needs to have a safety culture (Guldenmund, 2000), elements of which include heedful interaction, awareness of the risk, and support for maintaining a safe environment. Although much of the work in this area has focused on health (Pronovost and Sexton, 2005) and nuclear power plants (Pidgeon, 1991), its general claims are equally relevant to social cybersecurity risks. Engaging in heedful social cyber interaction and developing and maintaining a social cybersafety culture can potentially reduce risks associated with social cyberattacks. The IC has itself been a victim of such attacks, and therefore may wish to explore how an IC-specific social cybersecurity safety culture can be instituted.

CONCLUSIONS

Cyber-mediated threats are a growing area of concern for the IC. Because their use is increasing and their platforms change rapidly, social media serve both as a mechanism for monitoring developments and cyber-mediated threats and as a mechanism that can be manipulated to influence behaviors in ways that may pose threats to national security. We note that current work related to cyberspace issues—including data collection, cybersecurity, and social cybersecurity—is fragmented across a large number of U.S. government agencies and parts of the IC. The tools used by these entities, the authority they have to collect information, and their agreements with third-party vendors to collect data or run assessments all vary. The IC may wish to explore whether a central office to coordinate cyberintelligence efforts is needed. We caution, however, that issues associated with terrorism, social cybersecurity, and cybersecurity each demand distinct sets of skills and authorities.

Designing ways to protect against such threats requires the ability to collect data on and analyze and visualize high-dimensional dynamic networks with both social network and knowledge network components; Twitter networks, for example, generate both social data on who replies, retweets, or mentions or which individuals are quoted, and knowledge data on hashtags or topics that co-occur. However, available machine learning techniques and standard computer science methods are of limited utility for answering nuanced questions about developing situations (Lazer et al., 2014). Nor are traditional social science methods sufficient to address complex issues in today’s information environment.
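
The two network components described above can be illustrated with a minimal sketch. It assumes a hypothetical list of tweet records whose field names (`user`, `retweet_of`, `mentions`, `hashtags`) are invented for illustration and do not correspond to any particular platform's data format. It derives a social network of who retweets or mentions whom and a knowledge network of hashtags that co-occur in the same message.

```python
from collections import Counter
from itertools import combinations

# Hypothetical tweet records; the field names are illustrative assumptions,
# not the schema of any real platform API.
tweets = [
    {"user": "a", "retweet_of": "b", "mentions": ["c"], "hashtags": ["x", "y"]},
    {"user": "b", "retweet_of": None, "mentions": ["a", "c"], "hashtags": ["y", "z", "x"]},
    {"user": "c", "retweet_of": "b", "mentions": [], "hashtags": ["x"]},
]


def build_networks(records):
    """Return (social, knowledge) edge counts from tweet-like records."""
    social = Counter()     # directed edges: (source, target, relation) -> count
    knowledge = Counter()  # undirected edges: (tag_1, tag_2) -> co-occurrence count
    for record in records:
        source = record["user"]
        if record.get("retweet_of"):
            social[(source, record["retweet_of"], "retweet")] += 1
        for target in record.get("mentions", []):
            social[(source, target, "mention")] += 1
        # Sort the de-duplicated tags so each pair has one canonical ordering.
        tags = sorted(set(record.get("hashtags", [])))
        for pair in combinations(tags, 2):
            knowledge[pair] += 1
    return social, knowledge


social, knowledge = build_networks(tweets)
```

Keeping the two edge sets separate but derived from the same records is one simple way to preserve the link between who interacts and what they talk about; a fuller analysis would also track time and location, which this sketch omits.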

The promising next frontier is the combining of computer science techniques with deep understanding of how the media and entertainment technology used to collect these data operate, the sociocultural phenomena being studied, and relevant social and cognitive science theories (Carley et al., 2018; Wang et al., 2007). Social network/network science methods coupled with language technologies, geospatial crowdsourced information, or machine learning and applied to large-scale data form the methodological cornerstone on which new advances will be realized. This kind of data is “big” not only because of the quantity involved, but also because of the number of networks in which the messages are embedded over time (National Research Council, 2013).

Empirical assessment of influence and manipulation in social cyberspace is yielding methods capable of processing large volumes of data, often from multiple media, and carrying out high-dimensional network analysis. Such methods have been used for successfully addressing a number of issues, such as the likelihood of retweeting (Suh et al., 2010), information diffusion (Romero et al., 2011), disaster planning (Landwehr et al., 2016), extremist recruiting (Benigni et al., 2019), and political polarization (Conover et al., 2011). Furthermore, geospatial assessments have shown great diversity in the ways in which social media are used by region, time, and political context (Carley et al., 2015).

This work provides a starting point for the development of tools that could be used by the IC for efficiently identifying propaganda, false information, and other social cyberthreats. In addition to building a body of research in this new field, researchers will need to address a number of methodological and data challenges if social cybersecurity research is to make the progress that is needed in the coming decade. These challenges include the development of both policy solutions for improving researchers’ access to data and more sophisticated techniques for working with large but often incomplete and biased datasets (Tufekci, 2014).

CONCLUSION 6-1: A comprehensive multidisciplinary research strategy for identifying, monitoring, and countering social cyberattacks, predicated on computational social science, would provide significant support for the Intelligence Community’s (IC’s) efforts to address the social cybersecurity threat in the coming decade. The emerging field of social cybersecurity research can yield insights that would supplement the IC’s training and technology acquisition in the area of social cybersecurity threats and foster an effective social cybersafety culture. These insights could support development of the capacity to, for example, detect bots and malicious online actors and track the impact of social cyberattacks.

CONCLUSION 6-2: The Intelligence Community could strengthen its capacity to safeguard the nation against social cyber-mediated threats by supporting research with the objectives of developing

- generally applicable scientific methods for assessing bias in online data, drawing conclusions based on missing data, and triangulating to interpolate missing or incorrect data using multiple data sources;

- new computational social science methods that would simultaneously consider change in social networks and narratives within social media–based groups from a geotemporal social-cyber perspective; and

- operational computational social science theories of influence and manipulation in a cyber-mediated environment that simultaneously take into account the network structure of online communities, the types of actors in those communities, social cognition, emotion, cognitive biases, narratives and counternarratives, and exploitable features of the social media technology.

REFERENCES

Abbasi, A., and Chen, H. (2008). Cybergate: A design framework and system for text analysis of computer-mediated communication. MIS Quarterly: Management Information Systems, 32(4), 811–837.

Agarwal, N., and Bandeli, K.K. (2017). Blogs, fake news, and information activities. In G. Bertolin (Ed.), Digital Hydra: Security Implications of False Information Online (pp. 31–46). Riga, Latvia: NATO Strategic Communications Centre of Excellence.

Ahn, J., Taieb-Maimon, M., Sopan, A., Plaisant, C., and Shneiderman, B. (2011). Temporal visualization of social network dynamics: Prototypes for nation of neighbors. In International Conference on Social Computing, Behavioral-Cultural Modeling, and Prediction (pp. 309–316). Berlin/Heidelberg, Germany: Springer. Available: http://www.cs.umd.edu/hcil/trs/2010-28/2010-28.pdf [December 2018].

Al-Khateeb, S., and Agarwal, N. (2016). Understanding strategic information maneuvers in network media to advance cyber operations: A case study analysing pro-Russian separatists’ cyber information operations in Crimean water crisis. Journal on Baltic Security, 2(1), 6–27.