Proceedings of a Workshop

WORKSHOP OVERVIEW1

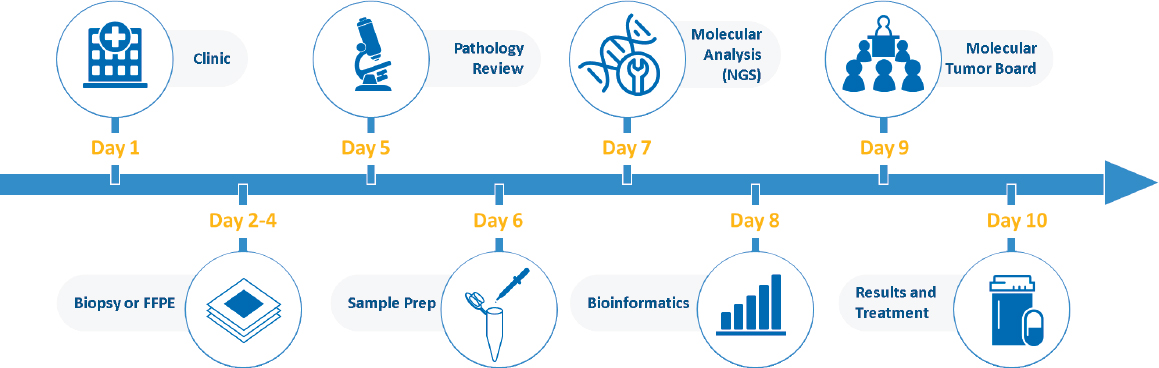

A hallmark of high-quality cancer care is the delivery of the right treatment to the right patient at the right time. Precision oncology therapies, which target specific genetic changes in a patient’s cancer, are changing the nature of cancer treatment by allowing clinicians to select therapies that are most likely to benefit individual patients. In current clinical practice, oncologists are increasingly formulating cancer treatment plans using results from complex laboratory and imaging tests that characterize the molecular underpinnings of an individual patient’s cancer. These molecular fingerprints can be quite complex and heterogeneous, even within a single patient. To enable these molecular tumor characterizations to effectively and safely inform cancer care, the cancer community is working to develop and validate multiparameter omics tests and imaging tests as well as software and computational methods for interpretation of the resulting datasets.

To examine opportunities to improve cancer diagnosis and care in the new precision oncology era, the National Cancer Policy Forum developed a two-workshop series. The first workshop focused on patient access to exper-

___________________

1 The planning committee’s role was limited to planning the workshop, and the Proceedings of a Workshop was prepared by the workshop rapporteurs as a factual summary of what occurred at the workshop. Statements, recommendations, and opinions expressed are those of individual presenters and participants, and are not necessarily endorsed or verified by the National Academies of Sciences, Engineering, and Medicine, and they should not be construed as reflecting any group consensus.

tise and technologies in oncologic imaging and pathology and was held in February 2018.2 The second workshop, conducted in collaboration with the Board on Mathematical Sciences and Analytics, was held in October 2018 to examine the use of multidimensional data derived from patients with cancer, and the computational methods that analyze these data to inform cancer treatment decisions.

The workshop convened diverse stakeholders and experts, including clinicians, researchers and statisticians, and patient advocates, as well as representatives of health care organizations, academic medical centers, insurers, and federal agencies. The workshop included presentations and panel discussions on the current state of computational precision oncology and its opportunities, challenges, and limitations. Topics explored included

- Data quality, completeness, sharing, and privacy;

- Preclinical and clinical validation of the reliability, safety, and effectiveness of diagnostic tests and clinical decision support tools;

- Regulatory oversight and reimbursement;

- Communication of omics findings to clinicians and patients; and

- Lessons from the use of computational precision oncology in clinical practice.

This workshop proceedings highlights suggestions from individual participants regarding potential ways to improve the translation of computational precision oncology into clinical practice. These suggestions are discussed throughout the proceedings and are summarized in Box 1. Appendix A includes the Statement of Task for the workshop. The agenda is provided in Appendix B. Speakers’ presentations and the webcast have been archived online.3 A brief summary of the first workshop in the series can be found in Box 2.

NEW PARADIGM IN CANCER DIAGNOSIS AND CARE

Several speakers described the evolving paradigm in precision cancer diagnosis and care due to the advent of new techniques for the molecular characterization of patients’ tumors, as well as advanced computational methods for interpretation of these complex data.

Christopher Cogle, professor of medicine at the University of Florida College of Medicine, compared computational modeling with the more traditional approaches for preclinical assessment of oncology drugs: in vitro and

___________________

2 See https://www.nap.edu/catalog/25163 (accessed January 2, 2019).

3 See http://nationalacademies.org/hmd/Activities/Disease/NCPF/2018-OCT-29.aspx (accessed January 2, 2019).

in vivo studies. For in vitro studies, tumor cells are removed from patients or animals and tested in the laboratory with a potential drug or drug combination. However, he said in vitro results are not strongly correlated with clinical efficacy (Burstein et al., 2011). For in vivo testing, potential drugs are administered to animals with tumors. In vivo testing may be more predictive of clinical efficacy, but this has not been shown conclusively, according to Cogle.

By contrast, computational modeling uses large sets of patient and tumor data to predict efficacy of specific therapies for different tumor subtypes. Computational modeling can consider multiple tumor characteristics and drug options simultaneously, and findings can be updated with advancing knowledge. Lisa McShane, acting associate director for the Division of Cancer Treatment and Diagnosis and chief of the Biometric Research Program at the National Cancer Institute (NCI), said that computationally intensive methods are best suited to answering complex questions in which there are many variables to consider and data from a large number of patients. She said that a major challenge in the development of complex computational algorithms

in oncology is the lack of sufficiently large datasets; when computational algorithms are derived from an inadequate number of patients, they often fail when tested in independent datasets.

Cogle stressed that the quality of computational modeling output depends on the quality and completeness of the data used. McShane agreed and added that there is a misconception that powerful computational methods can compensate for poor-quality data. However, the application of computational

methods on large quantities of poor-quality data typically lead to poorly performing algorithms (see section on Data Quality and Completeness).

Cogle noted that early precision oncology efforts used diagnostic tests to assess the activity of a single gene (e.g., human epidermal growth factor receptor 2 [HER2] in breast cancer) and to match patients to a single drug targeting that activity. Current precision oncology diagnostic testing is more complex, including multiplex testing to identify the tumor’s genetic sequence (genome), active genes (exome), and proteins (proteome). This complex information has the potential to enable more precise targeting of therapies. However, Cogle stressed that “from a regulatory perspective, there is not much guidance on how to use a more complex system other than single-gene–single-drug matching, and our technology is way beyond that.”

Atul Butte, director of the Bakar Computational Health Sciences Institute at the University of California, San Francisco, noted that molecular characterizations of patient tumors show that although tumors often share several common gene aberrations, many also have rare genetic flaws, with some unique to a single patient. For example, he said there may be nearly 1,000 different subtypes of lung cancer, defined by their unique genetic signatures. Some of these subtypes may include highly specific targets for drug therapy that will be effective in only a small number of patients. Keith Flaherty, director of the Henri and Belinda Termeer Center for Targeted Therapy, said, “We need a strategy, both diagnostic and therapeutic, that is going to allow us to make more rapid inroads into potentially very small patient subpopulations.” He also noted that treatment-salient features can vary across tumors, even within an individual patient. If most of a patient’s tumors have a molecular feature targeted effectively by a drug, their cancer will still eventually progress because of the subpopulation of tumor cells that can resist treatment.

Hedvig Hricak, chair of the department of radiology at the Memorial Sloan Kettering Cancer Center, showed how tumor heterogeneity in an individual patient can be revealed with molecular imaging. This imaging can facilitate appropriate treatment selection and is also used in drug development to optimize target engagement and determine appropriate dosage, she noted. “Molecular imaging is essential in patient- and value-driven care, and imaging in drug development is now slowly being accepted around the Western world,” she said.

Howard McLeod, medical director of the DeBartolo Family Personalized Medicine Institute, said genetic testing of a patient’s tumor and germline genome will be pivotal to the success of precision medicine. He noted that genetic information provides diagnostic, prognostic, treatment, and toxicity risk information, and can also suggest appropriate clinical trials for patients. However, genetic reports on individual patients can be as long as 50 pages, with listings of hundreds of variants that may not have clinical significance.

McLeod noted that it is difficult to interpret this genetic information, even for clinicians trained in molecular oncology. Lincoln Nadauld, executive director of precision medicine and precision genomics at Intermountain Healthcare, said that when his organization first launched their program of tumor genetic testing, clinicians used the test results less than 20 percent of the time, often because they were uncomfortable interpreting the data.

Mia Levy, director of the Rush University Cancer Center, also emphasized the growth of genomic data, with complex tests now able to detect hundreds of genetic changes. She said clinicians need decision support tools that address which tests to order, how to interpret and report test results, and how to combine genetic features with other patient features to determine an appropriate treatment. Lee Newcomer, principal of Lee N. Newcomer Consulting, agreed, saying, “We can’t take the multiple variables coming in now from oncology patients about the microenvironment, genomics, etc., and process those in a human brain. We need computational methods to drive us in the direction of making more subtle clinical decisions.” Hricak stressed, “Only by proper predictive modeling are we going to have personalized treatment.” Levy added, “We’re at this precipice of change in how we think about clinical decision support in medicine, which has evolved from the evidence-driven paradigm, to protocol-driven care, to data-driven approaches.”

Computational Technologies

Machine Learning

Pratik Shah, principal research scientist and a principal investigator at the Massachusetts Institute of Technology (MIT) Media Lab who leads the Health 0.0 research program, classified computational technologies used in precision oncology in a hierarchy of three major types. At the bottom level is automation. An example of automation is a machine learning computer algorithm trained with thousands of photographs that uses prior data to identify specific features in new images. “This low-level intelligence depends on large datasets and is subject to bias and invalid causal inferences,” he said. The middle level is knowledge creation, such as using machine learning to create new knowledge from data that humans might perceive, but do not understand how to use. The highest level is artificial intelligence (AI), in which the algorithm uses computational processes that are beyond human cognitive capacity.

According to Shah, most machine learning applications are in the form of automation. There are few examples of knowledge creation, he said, and even fewer examples of true AI. McLeod noted that fully AI-driven care may be achievable, but is still a distant goal because “there hasn’t been that hard work done to build the knowledge to have the I in AI.” He noted that such

intelligence needs to be able to consider the full patient context, such as kidney function, body weight, comorbidities, patient preferences, and cost.

Shah provided several examples of how computational technologies are being used for knowledge creation to support clinical decisions. In response to a request from clinicians at Brigham and Women’s Hospital, he and his colleagues devised a method to computationally “stain” prostate biopsy tissue slides without physically staining them (Rana et al., 2018). Traditionally, such slides are stained with a dye such as hematoxylin and eosin (H&E) that makes certain diagnostic features more prominent and facilitates cancer diagnosis. Shah and colleagues used a machine learning program to detect those features in tissue samples without staining, and then digitally altered the images akin to what a physical H&E stain would do when applied to the tissue slices. Shah and colleagues also created a neural network system that could digitally remove the stains on old stored samples and digitally re-stain them to identify different features. He is now testing this digital staining with clinicians, most of whom cannot distinguish between the digitally stained and physically stained tissues, and are able to diagnose tumors equally well in both types.

Shah also reported on a project in which he and colleagues used archived clinical trial data to train a computational algorithm to identify novel patterns in treatment toxicity and to use that information to optimize dosing regimens (Yauney and Shah, 2018). The program applies a technique called “reinforcement learning,” which teaches the algorithm to prioritize the goal of tumor reduction while minimizing adverse effects. The computer-driven regimens provided doses at different amounts and intervals and skipped more doses compared to dosing regimens devised by clinicians.

Constantine Gatsonis, founding chair of the Department of Biostatistics and the Center for Statistical Sciences at the Brown University School of Public Health, provided several examples of machine learning programs designed to aid diagnosis and treatment in oncology. One uses magnetic resonance imaging (MRI) features of rectal cancer patients to predict treatment response after chemotherapy and radiation therapy. In a retrospective study of 114 patients, the predictor performed better than qualitative assessments by radiologists (Horvat et al., 2018). Although this study and others have shown promising results, Gatsonis stressed that the clinical utility and stability of the algorithms have yet to be determined. Furthermore, it remains to be seen how reliably the algorithms will perform when tested on patients less similar to those on whose data and imaging they were trained. He also noted that these learning systems will continue to evolve as they are trained on new data.

Wui-Jin Koh, senior vice president and chief medical officer of the National Comprehensive Cancer Network (NCCN), asked how machine learning and AI might help primary care clinicians in the diagnosis of cancers at earlier stages. Bray Patrick-Lake, program director of the Duke Clinical Research Institute

Research Together™ program, responded that computational oncology could aid primary care clinicians when deciding whether a suspicious lesion should be biopsied. Newcomer added that computational technologies are being applied to the analysis of circulating free DNA in blood samples to develop tests to detect early-stage cancers. However, he cautioned that some computational screening technologies may not add significant value. He said one study found that a computational method to improve mammography screening did not reduce the rate of false positives, and only increased the breast cancer detection rate by two-tenths of 1 percent (0.002%). Giovanni Parmigiani, associate director for population sciences at the Dana-Farber/Harvard Cancer Center, noted that machine learning and genetic tests could identify primary care patients at high risk for cancer and might motivate behavior change that could prevent cancer. “As we continue our policy discussions about precision oncology, I hope we don’t leave behind the precision prevention side,” he said.

Machine Learning Applications in Radiology

Hricak stressed that the future of medicine lies in the integration of technology with human skills. She said that the increasing complexity of technologies used in radiology will alter the role of the radiologist, so that instead of searching through images and identifying features, the radiologist will synthesize clinical features identified via technology to interpret the findings. Machine learning will help radiologists to evolve from spending a large portion of their time as “film readers” to functioning more fully as physician–consultants who facilitate diagnosis. Radiologists will need to “adapt or perish,” she added, quoting the motto in the logo of the SSG Heathe N. Craig Joint Theater Hospital at Bagram Airfield.

Hricak also noted that a computer program can reliably identify changes in a longitudinal series of patient images within a few minutes. This will save radiologists time, while allowing them to provide a more precise assessment of the treatment response. Lawrence Shulman, professor of medicine and deputy director of clinical services at the University of Pennsylvania Abramson Cancer Center, agreed. He added that machines are not likely to replace clinicians but will improve efficiency and accuracy in oncology.

Health Apps

Gatsonis noted that numerous smartphone apps allow the user to send a photograph of a mole or other skin feature to be evaluated by a clinician or an algorithm. Gatsonis reported the example of a single convolutional neural network (which has potential for smartphone applications) that performed as well as 21 dermatologists in classifying general skin lesions (Esteva et al., 2017).

Butte noted that a new smartphone app that can provide access to patients’ health records at multiple facilities will facilitate the creation and dissemination of other health apps. “That’s the future—I can imagine hundreds of apps in the next year helping patients navigate through their cancer care because I see people writing these apps to deal with health records directly without having to know about various health systems or medical health record systems,” Butte said.

But Frank Weichold, director for the Office of Critical Path and Regulatory Science Initiatives in the Office of the Chief Scientist and the Office of the Commissioner for the Food and Drug Administration (FDA), expressed concern that health data from apps may not be sufficiently authenticated and vetted for quality. Gatsonis added, “Before we put these to use, we need to know that they perform at a certain level. These apps have a lot of potential, but they can also cause a lot of angst and pressure on health care systems.” He said that few mobile apps have been clinically validated and regulated as they should be, especially if they have diagnostic uses (Chao et al., 2017; Wise, 2018). Although FDA has proposed some guidelines for evaluating such apps, “there are no real quality standards and regulatory framework for them,” he said (Chao et al., 2017). He added that there are also ethical concerns related to where the data go, who has access to them, how data will be mined, and whether the devices adequately protect patient privacy.

TRANSLATION CHALLENGES

Several participants described challenges in translating computational technologies for clinical use, including ensuring data quality and completeness, identifying methods to validate novel computational methods, ensuring appropriate regulatory oversight, communicating results and potential risks to patients, and achieving appropriate reimbursement under patients’ insurance plans.

Data Quality and Completeness

Reliable algorithms depend on reliable data, stressed Amy Abernethy, chief medical officer, scientific officer, and senior vice president of oncology at Flatiron Health.4 She noted that many factors affect the reliability of data used to train an algorithm (i.e., completeness, quality, diversity, relevancy,

___________________

4 In February 2019, Dr. Abernethy became Principal Deputy Commissioner of Food and Drugs at the Food and Drug Administration. The views expressed in this proceedings do not necessarily represent the official views or policies of FDA.

timeliness, and accuracy. Data completeness requires key data elements such as information about cancer diagnosis, treatment, and outcomes. Data quality refers to whether appropriate variables have been measured in valid and reliable ways. Another key feature of data quality is provenance, that is, the original source of the data and how the integrity of the data was maintained as it passed from one record or database to another. Abernethy stressed that potential data for precision oncology should be assessed against each of these requirements before they are used in any clinical or regulatory context, and added that inadequate data quality will result in poorly performing algorithms. McShane agreed, and highlighted the importance of involving individuals with appropriate expertise to make assessments regarding whether data quality is sufficient to be used in the development of algorithms.

Abernethy noted that different types of datasets have different features that affect their reliability. For example, although instrumentation data, such as those generated in genetic tests or by imaging, are initially reliable, they may be compressed, stored, transferred, analyzed, or reported in ways that can introduce error. Often, results from images or tests include textual information that needs to be curated (translated) into an analytical file that can be processed by an algorithm. This curation process can introduce errors if the person performing it misunderstands the material or has incomplete, changing, or conflicting information, Abernethy noted.

Abernethy also stressed the importance of data completeness. She noted that electronic health records (EHRs) are often missing key data that need to be gathered from other sources. For example, a patient whose cancer progresses may have imaging data throughout their treatment, but no pathology data after the initial diagnosis. Increasingly, patient data are not considered complete without information about biological features important for diagnosis and treatment decisions. Levy added that EHRs do not consistently record response to clinical therapy or reasons for treatment discontinuation. In addition, she said, “There’s a lot of missing information, especially when you are limited by the EHR of a single institution, as we miss what happens to patients once they have left the institution, or we’re missing the data that came before they are at the institution.” Furthermore, dates entered into EHRs are often inaccurate when patients are asked to recall past care episodes that occurred at the beginning of their illness.

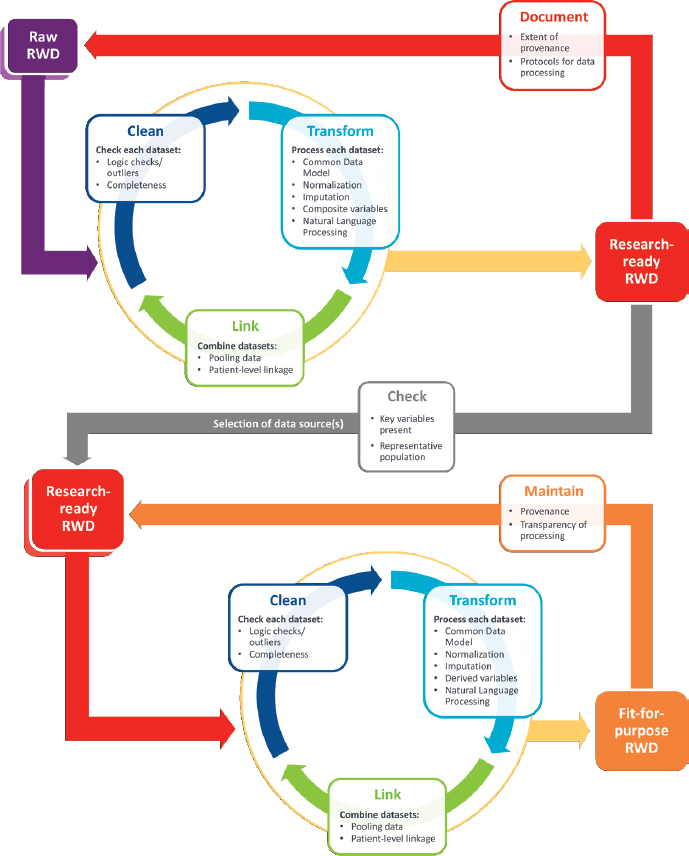

Abernethy noted that data reliability can be described for each data point in a standardized format. “Standardized reporting of data completeness now can be appended directly to datasets, so you have this information in your hands as you start to work with them,” she stressed. The creation of research-ready datasets that are fit for purpose requires documenting data quality, not only in source datasets, but also in the derived datasets used for final analyses and dataset generation, she added (see Figure 1).

NOTE: RWD = real-world dataset.

SOURCES: Abernethy presentation, October 29, 2018; Daniel et al., 2018.

Risk of Bias

Several participants stressed that inadequately representative datasets that do not include diverse populations lead to the creation of invalid and biased algorithms. Kadija Ferryman, postdoctoral scholar at the Data & Society Research Institute, described a study demonstrating how underrepresentation

of black individuals in health research data led to the creation of a genetic test for heart disease that incorrectly classified risk for black patients (Manrai et al., 2016). “A lack of inclusive populations impacts how these genetic variants are classified,” Ferryman noted. She gave another example of databases used to develop algorithms for melanoma detection that included few images of skin lesions in people of African descent (Adamson and Smith, 2018; Lashbrook, 2018). Ferryman noted that since the passage of the 1993 Revitalization Act, which aimed to increase diversity in clinical trials, less than 2 percent of the more than 10,000 cancer clinical trials funded by the NCI included enough minority participants to meet the goals of the National Institutes of Health (NIH) (Chen et al., 2014; Oh et al., 2015). “If this problem of inclusion persists, there are still going to be parts of the population that are going to be left out” when developing precision oncology algorithms with clinical trial data, Ferryman said.

Ferryman stressed that precision medicine focuses on improving care for individuals, but it is also critical to consider its effects on population subgroups before these technologies become widespread. “If we don’t address these issues at the forefront, it’s likely there will be negative impacts as the technologies develop. We need to ensure precision oncology that is equitable and leads to improvement for all of us,” she said.

The need for large and diverse datasets presents a challenge as cancer populations are increasingly divided into small subsets based on genomic classifiers. “More and more big data is moving toward small data,” Abernethy said, so that there are more datasets that represent small numbers of patients. Small datasets run the risk of not being representative of the population. Abernethy also noted that the likelihood that patients have genomic analyses of their tumors differs by race, ethnicity, and age, and that in itself may affect the representation of data in available genomic databases (Presley et al., 2018). “This is particularly important as we think about getting balance in our algorithms going forward,” she said.

Otis Brawley, Bloomberg Distinguished Professor at Johns Hopkins University, also cautioned against drawing population-wide conclusions from analyses performed on small samples of population subgroups, noting that findings from such analyses may not be generalizable. He gave the example of a study of azidothymidine in patients with HIV that concluded the drug was not effective in African Americans based on a subgroup analysis embedded within the larger study. A deeper analysis identified that the apparent effect was confounded by socioeconomic status, and African American patients had failed to respond because they could not consistently access the medication. Brawley concluded, “You can harm the public health by doing these subset analyses just as much as you might be able to help them.”

Validation of Computational Precision Oncology

Prior to entering the market, medical tests need to be validated to ensure that they consistently and accurately measure the intended target and provide clinically meaningful and actionable information. Some workshop participants noted that validation can be especially challenging for omics-based diagnostics and imaging, as well as for the predictive algorithms that drive precision oncology (IOM, 2012; NASEM, 2016). This creates a bottleneck that can slow implementation of new technologies.

One challenge for validation, identified by Gatsonis, is the “moving target” nature of machine learning algorithms. “The software evolves constantly so there’s a big moving target problem,” he said, raising the question “At what point do you evaluate such an algorithm? We need criteria for deciding when a modality is ready to move forward.” Gatsonis noted that one Institute of Medicine report, Evolution of Translational Omics: Lessons Learned and the Path Forward, suggests criteria for omics diagnostics, but not for radiomics (IOM, 2012). Advanced machine learning (i.e., AI) raises additional questions, such as whether there has been appropriate training for the algorithm, and how to calibrate and monitor its performance. Gatsonis stated, “We need to establish processes for monitoring performance and ensuring safety.” He also questioned the reproducibility of some omics tests and algorithms, and recommended that such tests be evaluated both technically and clinically prior to widespread implementation.

Test Development and Analytical Validation Steps

McShane outlined steps for developing and validating an omics predictor prior to clinical testing (McShane et al., 2013a,b). The first step is ensuring the specimen, such as a tumor sample, is adequate for the test. Improper handling and storage can affect the quality of the specimen and outcome of the test. The specimen also needs to be of sufficient size, and should be screened to ensure its DNA or RNA has not degraded. McShane provided the example of a diagnostic study in which 80 of 100 specimens collected were unusable because of improper freezing, and suggested that test developers need to create standard operating procedures to ensure proper handling.

Once a specimen is deemed adequate, the next step is to ensure the test itself is run properly and consistently. Small changes in methodology can have dramatic effects on results. McShane suggested the use of standard operating procedures and quality monitoring, as well as pre-established criteria for assessing specimen quality and batch effects. Researchers also need to assess the analytic performance of a test, including its sensitivity, specificity, bias, accuracy, precision, and reproducibility (Becker, 2015; Jennings et al., 2009).

The next step in test development and validation is to perform preliminary evaluations of the model and algorithm used to make predictions. At this step, researchers need to ensure the quality of the data, and use appropriate statistical methods for model development, performance assessment, and validation. The latter includes defining the clinical context and patient population for the test, and whether its clinical use would be prognostic, predictive, or serve another clinical purpose.

Statistical Validation

McShane noted that a common pitfall in the early validation steps is statistical overfitting, in which an algorithm is trained too closely to a particular set of data, and may therefore fail to fit additional data or reliably predict future observations. Overfitting can occur when the data that were used to develop a predictor are also used to test it. “Plugging in the same data into the model that you used to develop it is a useless thing to do because it will always look like you have a great predictor when in fact you might have a completely useless predictor,” McShane said. She suggested developers test their assays using several different external datasets, as was done for the FDA-approved Oncotype DX test,5 which predicts recurrence in women with early-stage breast cancer (Carlson and Roth, 2013).

Parmigiani also stressed the need to perform cross-study validation using external datasets. He noted, “If you are training a classifier using a machine learning technique, the ability of that classifier to do well on a set-aside piece of the original dataset is going to be way too optimistic in predicting what is going to happen when you take it elsewhere. That is well established.” He added that “if you teach your classifiers on multiple datasets, they will not only learn about what predicts patient outcome, but we will also learn about how that will vary from one context to another and we will only retain the features that are more stable across multiple studies.”

Parmigiani continued, “The ability to take classifiers trained in one context and take them to different contexts is an essential part of the translational process that goes from machine learning to bedside,” adding, “This is one of the important lessons the clinical community has been learning often the hard way over the past few years.” He also noted that the datasets used to train algorithms for health care are often much smaller than those used to develop other applications, and thus the predictions made by these machine learning systems may be less reliable.

___________________

5 See https://www.genomichealth.com/en-US/oncotype_iq_products/oncotype_dx/oncotype_dx_breast_cancer (accessed January 4, 2019).

Parmigiani and Steven Goodman, associate dean for clinical and translational research at the Stanford University School of Medicine, also stressed the importance of ensuring that validation datasets are unbiased. Goodman suggested using real-world data for validation purposes, noting that race, ethnicity, age, and other factors that may not have been in the eligibility requirements for the original training dataset may influence validation. He added that the validation dataset should include real-world variation in the test procedure, including variation in sample preparation, handling, and transport. “You have to make sure that your training and validation sets reflect some sort of reality,” Goodman stressed.

McShane outlined in more detail the steps for the rigorous validation of a predictor (see Box 3). “There are a lot of really important things that can be done with omics. We just have to make sure that we continue to educate people on how to do things correctly and have the data resources, time, and

right kind of expertise for validation for successful development and clinical translation,” she noted.

Clinical Validation

Gatsonis stressed that for clinical validation, algorithms should be evaluated in well-designed prospective studies. McShane added that in a clinical trial, study methodology and endpoints should be defined a priori, and there should be “an honest broker in the form of a statistician or computational specialist, who is the one who can make the link to the outcome data and knows the code, even if he or she can’t look inside it.” But McShane also acknowledged that it may not be possible to design and recruit patients for a clinical trial for every clinical validation study. “That’s why we’re going to have to have some very high-quality databases and specimen banks, so we can do some of these in very carefully designed prospective–retrospective studies,” McShane said. Lukas Amler, senior director of the Late-Stage Oncology Biomarker Development Department at Genentech, agreed with McShane, noting, “We need very significant scale as far as data go.” He suggested that clinical validation for some cancers will likely require a combination of real-world data and data from clinical trials.

With regard to machine learning algorithms that are continually evolving, Gatsonis noted that

we don’t know the way to guarantee that these algorithms are always going to hit a minimum level of performance. There’s no math that can specify conditions and say this is always going to be the case, hence we don’t know that this thing is not going to go off some deep end, depending on what it sees and what it learns. That’s why it’s so important that we need to be able to understand how they work and have new ways to have minimum performance standards as the system evolves.

Gatsonis also suggested establishing processes for monitoring performance and ensuring safety.

Richard Schilsky, senior vice president and chief medical officer of the American Society of Clinical Oncology, stressed that tests need to be validated for a specific use. “People often lose sight of this. They say ‘I’ve got a great technology and it can be used for a million things.’ Well, what should it be used for? Because unless you can declare that up front, then it makes it very difficult to design the appropriate validation studies,” he said.

David Chu, president of the Institute for Defense Analyses, suggested that FDA could rely more on academic partners for validation of new precision oncology technologies because academic institutions should not have a vested interest in the product’s outcome. “Think about the academic establishment as your partner in this to certify results, including the quality of data used for the development of the tools,” he said.

Face Validity

George Oliver, vice president for clinical informatics at the Parkland Center for Clinical Innovation, stressed the importance of transparency and face validity—the perception that an algorithm is taking appropriate computational steps in its decision making. Amler agreed, saying that “A black box isn’t going to work. We have to establish causality so there’s enough reason for us to follow this up to make current drug treatments better.” Gatsonis added, “We care about the face validity in order to be able to educate the physician as to what they have seen and why they’re making a particular diagnosis. If we can’t do that, we’re not going to be able to convince anybody. You cannot make a transparent treatment decision on the basis of a piece of information that is not transparent to you.” Gatsonis stressed that algorithms are decision support tools, and ultimately the clinician retains responsibility for making the appropriate diagnosis or treatment selection. Cogle reinforced this notion, stating, “If a medical oncologist is legally responsible for using the data in an app, we want to make sure we understand how the app or the computational system came to the conclusion it did.” Clinicians need to be able to demonstrate the face validity of decision support algorithms to justify their prescribing choices to insurers, he said.

However, Gatsonis noted that it can be difficult to achieve face validity for complex algorithms. “It is reverse engineering the neural net to be able to understand why it did what it did.” Schilsky pointed out that many oncologists use Oncotype DX, a genomic test to inform breast cancer treatment, without understanding how its algorithm works. “They accept at face value that this is a test that’s gone through an extensive validation process and that the recurrence score actually provides information that can be useful to them,” he said. Shah added, “We think explaining the algorithm is important but should not be rate limiting in creating new knowledge to help patients. We shouldn’t be using the black box phenomena as an excuse to put technology out of the hands of patients.”

Gaps in Reproducibility

Several speakers noted that algorithms for precision oncology often do not hold up to repeated attempts at validation. Goodman provided a number of explanations for this lack of reproducibility, including factors such as:

- Poor training of researchers in experimental design;

- Increased emphasis on making provocative statements rather than presenting technical details;

- Publications that do not report basic elements of experimental design;

- Coincidental findings that happen to reach statistical significance, coupled with publication bias; and

- “Over-interpretation of creative ‘hypothesis-generating’ experiments, which are designed to uncover new avenues of inquiry rather than to provide definitive proof for any single question” (Collins and Tabak, 2014, p. 612).

Goodman added another factor, citing an investigator who had 19 retractions of published research from his laboratory and claimed as his defense: “In these days of complex, interdisciplinary research, one depends on the trustworthiness of colleagues who use the methodologies with which one has no personal experience” (McCook, 2017). As Goodman noted, “This is part of the problem. With computational technologies, even the best of clinical and biologic researchers may have no idea what is going on in the computer and do not know how to look over the shoulders of their colleagues who do have this expertise.” He said in one online survey of 1,576 researchers, nearly 90 percent of respondents agreed that reproducibility of research findings could be improved by having a better understanding of statistics (Baker, 2016). Goodman outlined three types of reproducibility that are relevant in assessing precision oncology tests: methods reproducibility, results reproducibility, and inferential reproducibility.

Methods reproducibility Methods reproducibility is the extent to which research methodology is sufficiently described so that a repetition of the same experiment would produce the same result. Failure to achieve methods reproducibility may be due to a lack of transparency for methods, data and codes, and materials used to conduct the research. Achieving this form of reproducibility requires providing, in a shareable form, the analytic dataset, the methods by which the analytic results were produced, the computer code in human-readable form, the software environment, and documentation so researchers can try to replicate study results (Peng et al., 2006).

Results reproducibility Results reproducibility is the degree of support that subsequent studies provide for the original claim.

Inferential reproducibility Inferential reproducibility is whether the results are interpreted the same way by different people, that is, whether there is consensus in the scientific community about what the results mean. Goodman noted that inferential reproducibility is determined by the strengths of claims made, and the degree of proof, validation, or generalizability.

Regulatory Oversight

Several workshop participants spoke about the importance of regulatory oversight for computational precision oncology and discussed how computational precision oncology’s entry into the clinical market should be regulated. “What level of proof or evidence ought there be for computational algorithms used as treatment decision supports? Is a randomized trial testing their use versus standard of care enough, or do we need a new FDA regulatory mechanism for these algorithms?” Newcomer asked. Cogle added, “At what level do we have reasonable expectation that this technology is ready for prime time? Do we need survival data as a green light or can we use surrogate measures for survival?”

FDA has multiple regulations and standards relevant to omics tests, algorithms, and decision support tools, including regulation of digital data quality, performance standards for diagnostic tests, and regulation of devices, as described below.

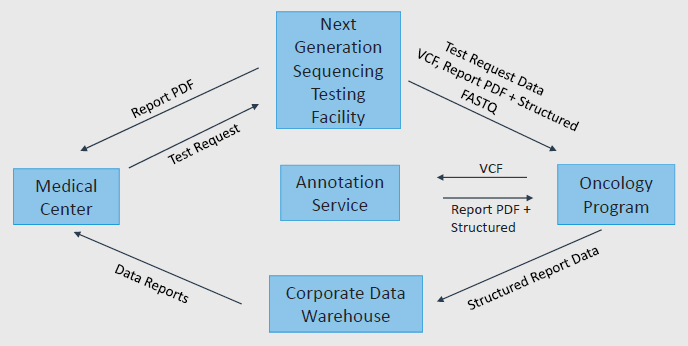

Digital Data Standards

FDA regularly receives outcomes data from sponsors from registration of clinical trials as well as from postmarketing surveillance. To facilitate interpretation and use of these data, the agency established data standards through the Clinical Data Interchange Standards Consortium6 (CDISC) for all data it receives. “Traditionally, we used to get data in a variety of different formats that followed a variety of different standards. Every sponsor had their own data center,” said Sean Khozin, associate director of FDA’s Oncology Center of Excellence. The CDISC standards require a unified framework for organizing study data, including templates for datasets, standard names for variables, and standard ways of creating common derived variables. This standardization allows FDA to receive, process, review, and archive submissions more efficiently and effectively (FDA, 2017b). “We can automate a lot of the functions during the review process because the data can be linked, and we can look at data in a more holistic way by looking across several different studies in meta-analyses,” Khozin said, adding, “We are thinking about using the data we have to train algorithms that can be open source and help the entire drug development community.”

CDISC is primarily a clinical data standard and does not address genomic data. However, Khozin noted that there are also standards for reporting genomic data. For FDA purposes, sponsors are asked to provide a spreadsheet with all the relevant genomic data outputs. Khozin explained that FDA does

___________________

6 See https://www.cdisc.org (accessed January 4, 2019).

not acquire the raw genomic data because of the complexities of data transmission and lack of technology for analyzing such data as part of regulatory decision making.

Khozin pointed out that as oncology drugs become more targeted and effective, fewer participants need to be treated in a clinical trial to demonstrate efficacy, although large numbers of patients may still need to be screened to identify a subset of patients meeting eligibility for enrollment in the trial. “So now there is a greater need to understand the postmarket experience, that longitudinal journey of the patient. And that is one of the areas where real-world evidence has been very useful and promising—in following the patient’s longitudinal journey,” Khozin said. FDA requires long-term patient follow-up that varies depending on the nature of the drug and observations from clinical testing. FDA also receives spontaneous postmarket reports on drugs from clinicians and patients via their website. “We have a continuous cycle for postmarket safety surveillance and pharmacovigilance,” Khozin said.

Devices

Nicholas Petrick, deputy director for the Division of Imaging, Diagnostics and Software Reliability of FDA’s Center for Devices and Radiological Health, reported that for regulation purposes, FDA divides devices into three classes based on the risk they pose to patients (FDA, 2018a) (see Table 1).

For all devices, FDA requires sponsors to follow good manufacturing practices, register the device with FDA, and report any adverse events. Class I devices (e.g., stethoscopes and tongue depressors) pose the least risk, and FDA typically does not require safety data for these devices to enter the market. Class II devices (e.g., computed tomography [CT], MRI, and ultrasound scanners), which are considered to pose moderate risk, may have additional requirements, such as postmarket surveillance or premarket data that may require a clinical study. Typically, Class II devices are able to enter the market

TABLE 1 Device Class and Premarket Requirements

| Device Class | Controls | Premarket Review Process |

|---|---|---|

| Class I (lowest risk) | General controls | Most are exempt |

| Class II | General controls Special controls | Premarket notification 510(k) or de novo |

| Class III (highest risk) | General controls Premarket approval | Premarket approval |

SOURCES: Petrick presentation, October 29, 2018; FDA, 2018a.

following the 510(k) pathway, which allows sponsors to demonstrate that the device is substantially equivalent to a product already on the market. Alternatively, Class II devices may come to market via the de novo pathway if there are no similar devices already in use, and general or special controls are considered adequate for ensuring safety and effectiveness.

Class III devices (e.g., novel imaging systems, leadless pacemakers, some in vitro diagnostic tests, and computer software and algorithms) pose the greatest potential risk. Most Class III devices require premarket approval, for which sponsors must submit clinical evidence of safety and effectiveness. To facilitate identification of the appropriate approval pathway, device makers are able to consult with FDA prior to submitting their device for review. These consults (called Q-subs) provide an opportunity for FDA to advise manufacturers on the appropriate regulatory pathway and suggest whether additional evidence may be required. “With Q-subs, companies have the opportunity to ask questions and get feedback before they delve into a large clinical study and potentially get in the wrong pathway,” Petrick said.

Petrick noted that in FDA decisions regarding validation requirements, the indication for a device is equally as important as the type of technology. “It is not just ‘here is my device and this is what it does,’ but what are the actual intended uses? On what populations will the device be applied? These are really important questions that have a large impact on the type of data we might see,” Petrick stressed.

Software as a Medical Device

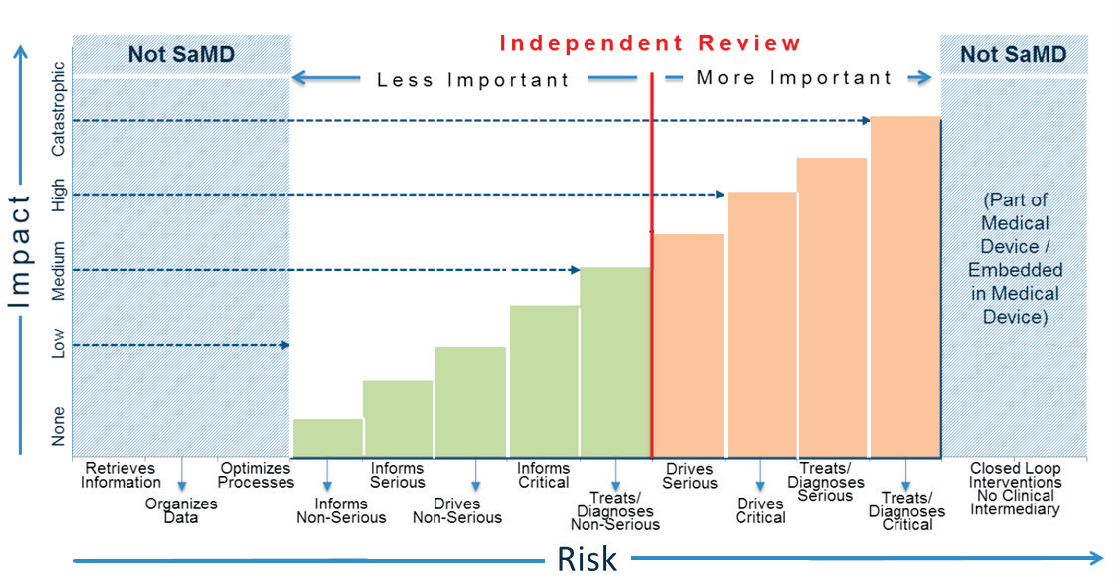

Petrick identified a new category of devices that the International Medical Device Regulators Forum (IMDRF) calls Software as a Medical Device (SaMD). This software (e.g., machine learning algorithms used to diagnose or monitor disease) is intended for medical purposes independent of a hardware medical device (IMDRF SaMD Working Group, 2013). Petrick noted that many precision oncology tools would be classified as SaMDs.

In 2017, FDA adopted the IMDRF’s basic principles for SaMDs as guidance (FDA, 2017a). The principles for clinical evaluation of SaMDs stipulate that there first must be a valid clinical association between the SaMD and a targeted clinical condition. Medical literature and professional guidelines may be used to establish this association, but it also may require a secondary data analysis or a clinical trial. The next step is to demonstrate analytical validity by providing evidence that the software meets various technical requirements and specifications. The final component of a clinical evaluation is demonstrating clinical validation, that is, evidence that the SaMD has been tested in a target population for its intended use and has generated clinically meaningful outcomes.

Petrick noted that FDA has been regulating SaMDs, especially in imaging, for about two decades. Similar to how devices are classified, FDA has been regulating SaMDs according to how much potential risk they may pose. Bakul Patel, associate director of the FDA Center for Devices and Radiological Health, added that when considering how to regulate algorithms, FDA considers unknown risks as well as known risks of implementing the technology in a clinical setting.

Petrick pointed out that there is a wide range of SaMDs, including both software that will be used by patients in their homes (e.g., software that collects and analyzes data about heartbeats and heart rates) and software in devices used in the clinic. He noted that software systems for displaying images may not require clinical data, but clinical data may be required for devices used for diagnosis or treatment (see Figure 2).

Petrick noted that FDA has received substantial input regarding machine learning and AI tools, especially related to imaging, but their approach to regulating these technologies is still evolving. The agency hopes to develop new guidance for the regulation of SaMDs to meet the influx of new technologies expected in the near future. Weichold noted that as FDA builds its knowledge base regarding computational algorithms and how best to regulate them, it maintains open dialogue with stakeholders. “It’s a learning process and not everything is set in stone. We have to learn together,” he said.

Butte noted that in the past 18 months, FDA approved seven machine learning health applications, including one that is cloud based. However, McShane suggested that many computationally based tests are not being evaluated by FDA because they do not fit in its regulatory framework. Consequently, many oncologists rely on NCCN guidelines when deciding whether to use these tests in patient care. These guidelines are informed by findings published in the medical literature, and McShane expressed skepticism about their validity. “We need to be thinking a little bigger about how we might find alternative mechanisms to give good evaluations of some of these algorithms,” McShane said.

There was substantial discussion about the regulatory challenges posed by modeling algorithms used for clinical decision support. Levy said these algorithms should be able to adapt to the latest data and knowledge, noting that “Because of the nature of the changing therapeutic landscape within oncology, we can’t just go to the FDA with a black box algorithm and say ‘I’m going to lock it down forever.’ We need new and adaptive models that allow us to bring decision support tools based on knowledge that is ever-changing.” McShane, however, expressed concern about this flexibility. She said,

These algorithms rely on certain input variables, and if those input variables are coming from assays that are versioned over time, how does that affect these models? As people mine more data and decide to tweak their computational

NOTES: SaMD = Software as a Medical Device. “Serious” and “Critical” refer to health care situations and conditions.

SOURCES: Petrick presentation, October 29, 2018; FDA, 2017a.

algorithm a little bit so it works better, how do we evaluate those kinds of things? I’m concerned there can be drift and we’re never going to know it.”

Petrick said FDA is wrestling with how to regulate algorithms that continually learn from data extracted in clinical settings. “It may be great to have these algorithms change and possibly work better over time, but if I have an algorithm specific for the California population, what happens if a clinician using it moves to New York?” he asked. Schilsky noted that clinicians want assurance that an algorithm can help them make better decisions for their specific clinical context.

Schilsky asked if software would be subject to regulatory oversight if it was used as a clinical decision support tool to select drug treatments for a cancer patient based on the results of a multiplex genomic test. Petrick responded that FDA would likely want to regulate such an algorithm, and would require clinical data to support its use. However, he noted that this regulation is still evolving. He said,

Even if the device is not working completely independently but in conjunction with the clinician, its type of analysis would be something that would be regulated and the question is what type of data is going to be needed for that. That’s the type of question we are trying to work through now—what types of data are needed under what scenarios.

Cogle noted that FDA requires explicit labels for drugs that include indications and mechanisms of action, and asked whether those same labeling requirements could be applied to software. He noted that the goal of these labeling requirements is to achieve transparency. “Would software developers be comfortable putting portions of their code in a prescriber’s label?” Cogle asked. Shah responded affirmatively that computer code and data should be made publicly available, but he reiterated that traditional regulatory approaches may be less relevant for algorithms.

Quantitative Imaging Devices

Petrick also discussed assessment of quantitative imaging devices. Quoting from the Quantitative Imaging Biomarker Alliance, Petrick stated that “quantitative imaging is the extraction of quantifiable features from medical images for the assessment of normal or the severity, degree of change, or status of a disease, injury, or chronic condition relative to normal” (Radiological Society of North America, 2018). Quantitative imaging devices can extract a single feature, such as volume measurements, or multiple features from an image or from non-imaging data. FDA is currently developing guidance for single-feature quantitative imaging measurements, which are not typically regulated. “If a company comes in and says ‘I have this tool that can measure the size of a lesion,’ as long as that is under the clinician’s control and there are

no claims associated with how well it performs, we do not see data on it and it just goes into the market,” Petrick noted. He suggested quantitative imaging tools could undergo technical assessment and clinical validation, which usually requires a randomized clinical study with patient outcome data. He also stressed that the performance of a single marker, such as CT lesion volume assessment, should be consistent when the same tool is used at different clinical sites and incorporated into devices made by different companies.

Petrick noted that technical assessment of quantitative imaging devices includes evaluation of accuracy and precision. The evaluation of accuracy is often complicated by the lack of reference standards against which results from the device can be compared. For example, radiologists’ assessments of lesion size in a CT image often vary, which makes it difficult to assess the accuracy of lesion size determined by a quantitative imaging device. Evaluation of accuracy of quantitative imaging may also involve an assessment of statistical linearity, which Petrick defined as a measure of how change in the reference reflects proportional change in the measurement. Linearity indicates how well measurements reflect clinical realities. Petrick noted that assessment of accuracy should also include consideration of statistical bias (i.e., the difference between the estimated expected value and the reference standard). He noted that bias may be acceptable in longitudinal assessments as long as it is consistent, so that change observed between two measurements is still accurate. Precision is evaluated in the clinical context and involves assessing the repeatability and reproducibility of findings when measurements are repeated under the same conditions or under types of conditions seen in the clinic.

Laboratory Developed Tests

A laboratory developed test (LDT) is “a type of in vitro diagnostic test that is designed, manufactured, and used within a single laboratory” (FDA, 2018b). LDTs are used often in precision oncology to match patients to appropriate treatments. These tests are not currently regulated by FDA,7 but laboratories that conduct LDTs are subject to requirements under the Clinical Laboratory Improvement Amendments (CLIA), which are overseen by the Centers for Medicare & Medicaid Services (CMS) (CMS, 2013). Laboratories conducting LDTs are required to adhere to certain standards and practices to receive CLIA certification. Companion diagnostics,8 which are included in drug labels,

___________________

7 FDA has stated that it has statutory authority for the regulatory oversight of all tests used in patient care, but has used its enforcement discretion (meaning it has chosen to not exercise that authority) for the oversight of LDTs (NASEM, 2016).

8 FDA defines companion diagnostics as diagnostic devices that “provide information that is essential for the safe and effective use of a corresponding therapeutic product” (FDA, 2014).

undergo FDA review as part of the regulatory pathway for the drugs with which they are used. However, Flaherty noted that for “any other form of diagnostic, particularly ones that come post approval of initial therapy, [there is] a woefully inadequate system for how to develop and establish them.” Flaherty called for strengthened regulation of LDTs, stating, “We need to establish a bar for how diagnostic tests need to perform and then we need to raise that bar. We need to aspire, at least in cancer, to have LDTs become FDA-approved tests.”

Patient Privacy

Deven McGraw, general counsel and chief regulatory officer for Ciitizen, reported on the evolving legal landscape for patient privacy. She noted that health care privacy is important to patients, and that patients with concerns about confidentiality may withhold health information or fail to seek treatment. Cancer patients in particular often express concerns about whether their health status will affect future employment or their ability to acquire health insurance. “There’s a population of people for whom assuring them that their data will be kept confidential is really essential to them getting care in the first place. These sensitive populations have fears about how that data might be used against them,” McGraw said. However, she also noted that privacy regulations should still allow legitimate use of data, stating “Privacy is about enabling appropriate use of data and good and responsible data stewardship.”

McGraw noted that privacy laws in the United States and abroad are founded on the Fair Information Practice Principles, which were published by the Department of Health, Education, and Welfare in 19739 and later incorporated into the Organisation for Economic Co-operation and Development guidelines (OECD, 2013). Those principles include informed consent and assurance of autonomous choice for patients with respect to sharing health information, as well as safeguards and accountability to ensure that patients’ privacy wishes are honored. However, McGraw explained that consent is not the overriding principle because it is often difficult or impractical to obtain (e.g., in the conduct of retrospective research). Other principles include transparency about how data are being used and data minimization (using the minimal data needed in order to accomplish a legitimate purpose). De-identifying data, that is, stripping it of identifiers, is one way to accomplish data minimization.

McGraw noted that under U.S. law, it is possible to have informed consent waived for some purposes, including retrospective research on large datasets. However, most organizations prefer to de-identify data to satisfy privacy requirements. McGraw explained that there is also a trend for regulators to enable

___________________

9 See https://aspe.hhs.gov/report/studies-welfare-populations-data-collection-and-research-issues/fair-information-practices (accessed January 7, 2019).

individuals to provide generalized consent for the use of their health information for future research purposes without the need to specify for which projects those data will be used. However, it is unclear how much detail about the nature of future research must be included in consent forms, and it can be difficult to balance the need to provide details about future research with the need for simplicity and conciseness. Other trends in privacy include broadening the definition of “identifiable” or “personal” data and increasing individual rights with respect to data (i.e., right to access and portability, right of amendment, right to restrict uses, right to withdraw consent, and right to be forgotten).

McGraw reported on several recent changes in privacy laws, including the European Union’s (EU’s) General Data Protection Regulation (GDPR) and California’s recent passage of the California Consumer Privacy Act of 2018 (CCPA).

General Data Protection Regulation

GDPR went into effect in the European Union in May 2018. It applies only to data “controllers” and “processors” (European Commission, 2016, 2018). GDPR also covers entities not located in the European Union, but who offer goods and services to EU residents or collect data from subjects within the European Union.

GDPR applies to “personal data” unless the data are made public by an individual or an individual is no longer living. McGraw reported that GDPR defines personal data as

any information relating to an identified or identifiable natural person (“data subject”); an identifiable natural person is one who can be identified, directly or indirectly, by reference to an identifier such as a name, an identification number, location data, an online identifier or to one or more factors specific to the physical, physiological, genetic, mental, economic, cultural, or social identity of that natural person. (European Commission, 2016, Chapter 1, Article 4)

There is some relaxation of individual rights provisions when data are “pseudonymized” or “coded,” but the GDPR does not define “de-identified” data.

McGraw noted that GDPR uses a broader definition of personal data than the Privacy Rule10 promulgated under the Health Insurance Portability

___________________

10 The HIPAA Privacy Rule “establishes national standards to protect individuals’ medical records and other personal health information and applies to health places, health care clearinghouses, and those health care providers that conduct certain health care transactions electronically.” See https://www.hhs.gov/hipaa/for-professionals/privacy/index.html (accessed March 29, 2019).

and Accountability Act of 1996 (HIPAA). “It is generally believed this is a higher standard than [the HIPAA Privacy Rule], but we don’t know enough about how this law is being interpreted by regulatory authorities to know for sure that is the case,” McGraw said. Under GDPR, all data processing must be “lawful,” with the assumption that consent is required (explicit consent in the case of health information) unless there is a lawfully permitted exception. Security safeguards are required, but the expectations for those safeguards are not set out in detail. A Data Protection Impact Assessment (and in some cases regulatory review) is required for certain high-risk processing activities, such as health data processed in large volume. GDPR permits pseudonymization (replacing personal identifiers with pseudonyms) in order for researchers to process data without acquiring consent, but McGraw noted that it is yet to be seen how this will be enforced. She pointed out that with GDPR, patients not only have the right to acquire their own data, but also have the right to acquire data in a machine-readable format, a requirement that is not included in the HIPAA Privacy Rule (see Table 2).

California Consumer Privacy Act of 2018

CCPA will go into effect in January 2020. The regulation applies to businesses that have gross revenues greater than $25 million, as well as to those that buy, sell, or receive large volumes of California consumers’ personal information (California Legislature, 2018). McGraw noted that many businesses located outside of California will be subject to the law. CCPA broadly defines personal information as information that identifies, relates to, describes, is capable of being associated with, or could reasonably be linked, directly or indirectly, with a particular consumer or household. This information includes biometric and genetic data. CCPA defines de-identified data as information that cannot reasonably identify, relate to, describe, be capable of being associated with, or be linked, directly or indirectly, to a particular consumer, provided that a business that uses de-identified information provides technical safeguards, and business practices prohibit re-identification. McGraw said this de-identification standard is believed to be stricter than the HIPAA Privacy Rule; thus, the HIPAA de-identification standard may not be sufficient for future research.

McGraw noted that CCPA provides some exemptions for health care entities, including for limited types of data collected as part of a clinical trial, although this is a narrow exemption that does not apply broadly to medical research. McGraw also noted that protected health information (PHI) subject to HIPAA are exempt from CCPA. However, once data are de-identified according to the HIPAA standard, these data are no longer considered PHI and lose their exemption to CCPA. To make patient data exempt from CCPA,

TABLE 2 Individual Rights Under the General Data Protection Regulation and the HIPAAa Privacy Rule

| General Data Protection Regulation | HIPAA Privacy Rule | |

|---|---|---|

| Right to be informed | Requires detailed disclosures on data practices, including information collected, purposes for processing, categories of recipients, etc. | Notice of Privacy Practices must cover only what entity has the right to use/disclose individual protected health information |

| Right to restriction of processing | Right to get controller to restrict processing under certain circumstances (e.g., where accuracy of data is contested; processing is unlawful); required to inform downstream recipients unless this is impossible or involves disproportionate efforts | Right to request restriction (no requirement to honor except with regard to disclosure to health plans for services paid for in full out of pocket) |

| Right of access/copy | Right to know what information controller has; right to obtain copies (within 30 days; free unless request is excessive) | Right to copy (within 30 days), but reasonable, cost-based fee can be charged for labor associated with making the copies |

| Right of erasure | Also known as the “right to be forgotten;” applies if no longer basis for lawful processing or other reasons; must use reasonable efforts to communicate to downstream recipients | None |

| Right to rectification | Right to obtain rectification of inaccurate personal data (includes right to have incomplete personal data completed through supplementary statement) | Right of amendment is right to “request” amendment; however, must honor individual’s right to submit her version |

| Data portability | Right to receive personal data in a structured, commonly used, and machine readable format and the right to transmit that data to another controller without hindrance, where processing is based on consent and processing is carried out by automated means | Right to digital copy of information maintained digitally; right to copy in form and format requested if reproducible in that form/format |

a Health Insurance Portability and Accountability Act of 1996.

SOURCES: McGraw presentation, October 29, 2018; European Commission, 2016; Federal Register, 2003; U.S. Congress, 1996.

health care entities may opt to de-identify data using CCPA’s more stringent de-identification standard.

Communicating Results and Risks to Patients

Results from genetic analyses and their association with health risks are often quite complex. Patients’ interpretation of these results is complicated by the prevalence of low health literacy11 and numeracy. Galen Joseph, associate professor in the Department of Anthropology, History & Social Medicine at the University of California, San Francisco, noted that more than 36 million U.S. adults, approximately 20 percent of the population, struggle to read, write, or do math above a third grade level (Kutner et al., 2007; Rampey et al., 2016). Even among a college-educated population, 20 percent of adults cannot identify whether 1 percent, 5 percent, or 10 percent indicates a higher level of risk (Lipkus et al., 2001). Despite the likelihood that patients and families struggle with literacy and numeracy, Joseph noted that clinicians commonly overestimate patients’ skills. “Communication is the most common procedure in medicine,” Joseph stressed, so it is important that this is done well.

One component of poor communication between clinicians and patients is clinicians’ frequent failures to address patients’ health concerns. Joseph described a study on communication of genetic breast cancer risk in which she and colleagues identified “a profound mismatch between what the genetic counselors talked about and what the women actually wanted to know about” (Joseph et al., 2017). Participants were most concerned about their cancer’s likelihood of recurrence and whether family members were at risk. By contrast, counselors typically spent 45 minutes explaining basic information about genetics and describing the patient’s risk for a hereditary condition. Genetics was unfamiliar for most of the participants interviewed in the study, and many found the information so overwhelming they did not fully engage in decision making about testing. “All that information kind of shut them down,” Joseph noted. Health care interpreters also had difficulties with the genetic information they were asked to translate for patients. “They were unfamiliar with genetics and genomics because that’s not something they’re trained in,” Joseph said.

Joseph also described the design of an ongoing study to evaluate strategies for genetic counseling communication (Amendola et al., 2018). Participants who elect to undergo whole exome sequencing to assess hereditary cancer risk are randomized to receive either traditional counseling or modified counsel-

___________________

11 Health literacy is defined as the degree to which individuals can access, comprehend, and use information and services needed to make appropriate health care decisions (WHO, 2016).

ing focused on health literacy. In the modified counseling arm, counselors use simple and direct language that emphasizes connection, and work to build rapport between the participant and counselor (see Table 3).

Many of the decisions made in the course of precision oncology therapy require an understanding of risk prediction or probability (e.g., the probability that a treatment will work or the risk that a cancer is aggressive). Many factors affect patients’ risk perceptions in addition to numeracy skills, including the consequences of the risk information, tolerance for uncertainty, prior beliefs about risk level, and cognitive and emotional traits such as pessimism or optimism (Lautenbach et al., 2013). Each patient will interpret information about risk or probability differently depending on these factors, leading them to different treatment decisions, Joseph said. She also noted that some methods of describing risk are easier for patients to understand. For example, most studies of risk communication recommend framing information in terms of absolute risk rather than relative risk.

Joseph concluded by noting that equal access to advanced therapies and technologies is not sufficient to ensure health care equity—effective communication is necessary for the ethical implementation of precision oncology. When considering how to broadly integrate precision oncology into clinical practice, the health care community must develop communication strategies to ensure that information is interpretable by all patients and clinicians. Joseph suggested that strategies for communicating information about precision oncology could be informed by the well-established principles of effective clinician–patient communication. These principles include

- Use plain language that is direct, concrete, jargon free, and communicated in the active voice;

- Verify patient comprehension;

TABLE 3 Results Disclosure Communication Approaches

| Traditional Counseling | Modified Counseling (Literacy Focus) |

|---|---|

Conceptually and linguistically complex

|

Conceptually and linguistically simplified

|

Emphasis on education

|

Emphasis on communication and psychosocial counseling

|

SOURCES: Joseph presentation, October 29, 2018; Joseph et al., 2017.

- Adapt communication and documentation for the patient’s literacy and numeracy; and

- Take responsibility for communicating understandably to patients because clinicians, not patients, are responsible for effective communication.

Challenges for Payers

Newcomer described challenges that precision oncology poses for health care payers. He noted that although the cost of genomic sequencing has declined, the cost of many targeted drugs has not. Some payers that cover the cost of tumor genomic sequencing may not cover therapies indicated by the genetic markers identified. Payers are particularly concerned that reimbursing treatments based on tumor mutation rather than cancer type will set a precedent that is considered a new coverage policy, and that this will lead to expectations of drug reimbursement even in cases where there is no clinical evidence of the drug’s efficacy. Newcomer said insurance payments are highly regulated and expected to be consistent. If a medication to treat a particular cancer is covered by an insurer for one patient, it must be covered for other patients as well.

To address the problem of missing evidence of clinical efficacy, Newcomer suggested a proof-of-concept clinical trial in which all patients would have genomic analyses of their tumors. The control group would be randomized to receive the standard of care, including any FDA-approved therapies for treatment of cancers with the identified mutations. The experimental group would receive any therapy targeting the mutations identified, regardless of whether there was clinical evidence to support that choice of therapy. Newcomer noted that if outcomes in the experimental group surpassed those in the control group, it would provide evidence to support the selection of therapies based on tumor mutation in the absence of other clinical trial data, and would establish a path toward payer coverage for such therapies. Michael Kelley, national program director for oncology for the Department of Veterans Affairs (VA), suggested that clinical data from the VA could be used to conduct a retrospective proof-of-concept study that would approximate the clinical trial suggested by Newcomer.

Newcomer noted that the complexity of genomic sequencing tests also presents a reimbursement challenge for precision oncology. He explained that compensation for clinical laboratories traditionally has been based on a model of low cost and high volume. Genomic tests challenge this model because of the time and expertise required for the interpretation of results. Newcomer said that interpretation of genomic sequencing results can require as much as a full day’s time by a Ph.D. scientist, yet there is no existing mechanism to bill for this effort. Newcomer stated, “We have to think about how we define

complex decision support systems and what we pay for them if we’re going to see this field continue to progress.”

McLeod agreed that reimbursement for complexity will be a key driver of the integration of precision oncology in clinical care. He added that reimbursement policy could provide incentives to reduce complexity of diagnostic readouts provided by new technologies. As an example, he noted that the readouts for electrocardiogram machines have become simpler as the technology has progressed, with much of the complexity of interpretation accomplished by the machine rather than the clinician. Levy added that unless the complex tests are being performed and interpreted at the same institution, as they are at Intermountain Healthcare, there is no way to recoup the expense of providing molecular interpretation services. “The only way we can get reimbursed for these types of things is to actually lay hands on the patient and see them in a clinical environment. There needs to be some way to facilitate reimbursement so that new decision support tools can be developed,” Levy said.

Newcomer responded that a value-based reimbursement model would incentivize precision oncology as a method of identifying effective therapies, and would circumvent the need to find payment within a fee-for-service paradigm. He said that bundled care could provide financial support for these computational tools “because if the tools you use lead to more efficient care, the decision support algorithms would be paid for by the shared savings component.”

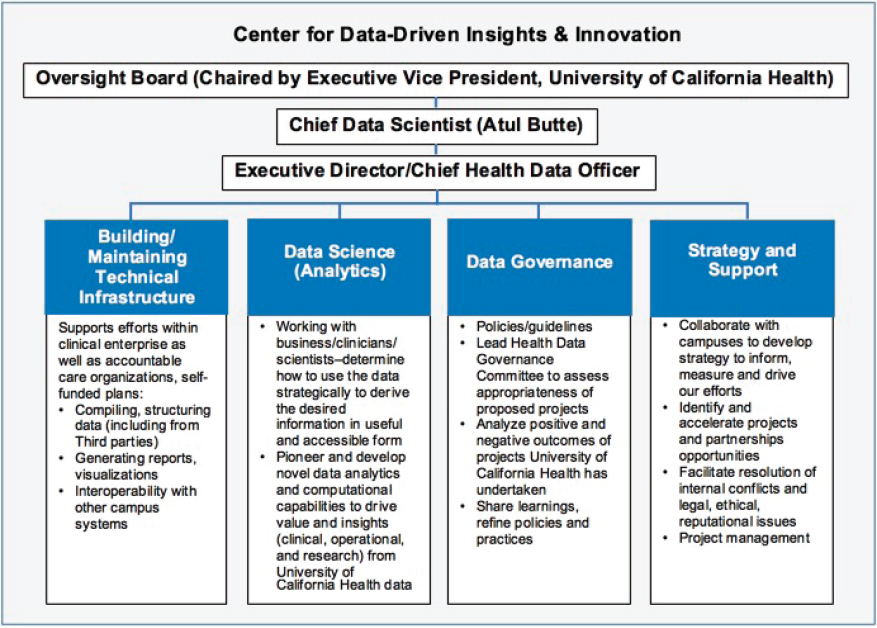

Newcomer also suggested that claims data could be used for post-approval surveillance. He noted that claims data can be used to follow a patient over time, and can serve as powerful tools to study effectiveness and cost-of-treatment regimens if integrated with clinical data. “Think about claims as a tool to integrate into our decision-making process because it gives us a longitudinal record you can’t get anywhere else,” Newcomer stressed.