2

Hardware and Software Engineering Assumptions at Risk

John Manferdelli, Cybersecurity and Privacy Institute at Northeastern University and member of the forum, moderated a panel discussion that focused on the future of chip design, including trade-offs among cost, performance, and security; engineering and design assumptions; and possible responses to Spectre and similar vulnerabilities.

Manferdelli began with a review of how the thinking around security and vulnerabilities has evolved. In the 1980s, he said, people had only a glimmer of potential security issues. Back then, it was possible to examine every detail in 10,000 lines of code, giving programmers a clearer window into potential vulnerabilities. Also, timing information and individual machines were not considered at risk because they could be closed off from a network. In that era, protecting against network attacks was the more immediate concern, he said, and the prevailing thought was that securing hardware completely was neither possible nor necessary.

Over the decades, Manferdelli argued, bad actors have gotten closer and closer to reaching the hardware. Cache and timing attacks have exposed cryptographic keys: despite placing a high priority on securing the hardware and keys, designers succumbed to “the tyranny of performance and capability.” Companies were loath to slow down processors for any reason, including security. Another reason for the focus on speed was more basic: speed is a measurable metric, whereas security is not.

Echoing Kocher’s words, Manferdelli reiterated that hardware and software are more intertwined now than they were decades ago, and he added that most people in the field lack a systemic knowledge of the relationship between the two, with software designers relying on manufacturers to ensure device security.

Manferdelli noted that researchers can point to security questions, but it is not easy to test a design’s resilience until it is used in the real world. With today’s increasingly complex systems, it is unlikely that even the most experienced engineer could examine a processor and determine how safe it is, much less provide a safety guarantee. To build resilient systems that can respond to Spectre, he said, we will have to start balancing trade-offs in a new way.

ERNIE BRICKELL

Independent Security Researcher

Ernie Brickell shared several ideas that he believes can improve the security environment. While at Intel, Brickell ran a security architecture review team that reviewed the architecture of all proposals for improving the security of Intel platforms, as well as any new features that might affect security. His team discovered and fixed many security issues during this architecture design phase, although it did not review designs carried over from older chips. Brickell also led Intel’s research into cache side-channel attacks.

Most hardware flaws are repaired via encrypted microcode patches, which are verified and installed upon rebooting, Brickell explained. Not all hardware can be patched in this way, but Intel, for example, designs its processors to allow as much patching as possible. Patches are encrypted both to protect intellectual property and to ensure patch integrity. For patches that fix a security flaw, encryption has another benefit: it can delay or inhibit public discovery of the underlying flaw. This matters because not all users patch their systems immediately after a patch is released. Patches are encrypted with a global key, meaning the same key exists in many processors, and it may be possible for sophisticated adversaries to discover this key. In the future, Brickell said, designers may switch to physically unclonable functions, which in theory make it harder to extract a cryptographic key.
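To make the single-global-key concern concrete, here is a minimal sketch under stated assumptions: an HMAC tag from Python’s standard library stands in for whatever authenticated encryption a vendor actually uses, encryption of the patch body is omitted, and the names `GLOBAL_PATCH_KEY`, `sign_patch`, and `Processor` are hypothetical. It shows only the structural point Brickell raised: when every chip verifies patches with the same key, extracting that key from one chip lets an adversary produce patches that every chip accepts.

```python
import hashlib
import hmac

# Hypothetical global key: in this toy model every processor in a product
# line carries the same key, mirroring the "global key" described above.
GLOBAL_PATCH_KEY = b"example-global-key"  # placeholder, not a real key


def sign_patch(key: bytes, patch: bytes) -> bytes:
    """Vendor side: compute an authentication tag over the patch body."""
    return hmac.new(key, patch, hashlib.sha256).digest()


class Processor:
    def __init__(self, key: bytes):
        self._key = key
        self.installed: list[bytes] = []

    def install_on_reboot(self, patch: bytes, tag: bytes) -> bool:
        """Device side: verify the tag before installing; reject on mismatch."""
        expected = hmac.new(self._key, patch, hashlib.sha256).digest()
        if not hmac.compare_digest(expected, tag):
            return False
        self.installed.append(patch)
        return True


fleet = [Processor(GLOBAL_PATCH_KEY) for _ in range(3)]
good_patch = b"microcode fix v2"
tag = sign_patch(GLOBAL_PATCH_KEY, good_patch)
results = [p.install_on_reboot(good_patch, tag) for p in fleet]

# An adversary who extracts the shared key from any single chip can forge
# a tag that every chip in the fleet accepts.
forged_patch = b"malicious microcode"
forged_tag = sign_patch(GLOBAL_PATCH_KEY, forged_patch)
forged_results = [p.install_on_reboot(forged_patch, forged_tag) for p in fleet]
```

A per-device key (for example, one derived from a physically unclonable function) would limit a single extraction to a single device, which is the motivation Brickell gave for that direction.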

In the discussion, Eric Grosse, independent consultant, asked Brickell how Intel would know that the global key had leaked, and more broadly if there is a way to detect attacks that exploit a compromised global key. Brickell said that there would likely be no evidence that someone has discovered the key, and Grosse speculated that it might be important to create a mechanism for notification.

Brickell next pointed out that open source systems are not necessarily more secure than proprietary systems. While, in theory, anyone can review open source systems for vulnerabilities, it is unlikely that many people will actually do this, or do it well. Open source can also leave users vulnerable when a patch is issued that fixes a security flaw. Adversaries could quickly examine the patch, discover the underlying flaw, write code to exploit it, and then use that exploit against any unpatched systems. Some critical systems are never patched at all because administrators prioritize availability over security and do not want to risk a system failure caused by applying a patch. Brickell reminded participants that OpenSSL, an open source cryptography library, had flaws that remained undiscovered and unpatched for years.

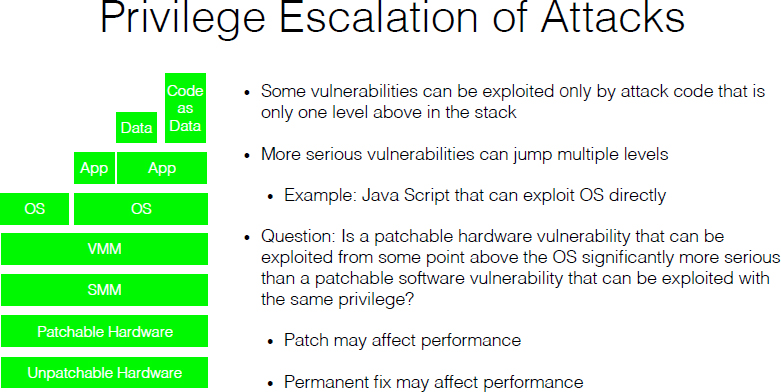

Brickell emphasized that security researchers perform a valuable service by discovering and reporting vulnerabilities. Vulnerabilities can occur at any layer in the software and hardware stack (see Figure 2.1). A vulnerability is more serious if it sits at a low level in the stack but can be exploited by software at a much higher level. Systems are typically designed so that as much of the system as possible can be patched with an update if vulnerabilities are found. But there is always some hardware component that cannot be patched, such as the hardware that validates a hardware patch. When a patch for the patchable hardware is needed, it is distributed to users in the same way as a software patch, such as an operating system (OS) patch or a system management mode (SMM) patch. So, he noted, it is reasonable to ask whether a patchable hardware vulnerability is more serious than a patchable OS or SMM vulnerability. If a patch or permanent fix for a vulnerability affects performance, that is also a factor in the vulnerability’s seriousness.

In cryptography, there has been a long history of algorithms being designed, weaknesses being found, and new algorithms then being designed that are not subject to those weaknesses. This cycle repeated itself many times, but it now appears to have stopped, Brickell said, or at least slowed down considerably. When the Advanced Encryption Standard competition was held in the late 1990s, the top contenders were all designed to be immune to all of the attacks known at the time, and no significant new weaknesses have been found in these algorithms in the past 20 years. Brickell noted that this approach could be applied to hardware. As security researchers discover new attack vectors, they publish them, and hardware vendors can then design their hardware to be immune to those vectors. There may be multiple cycles of this, but over time, as was the case with cryptographic algorithms, the security of hardware will improve.

Recognizing the trade-offs between performance and security, Brickell noted that fixes such as executing protected threads on separate cores or zeroing out affected resources on a context switch would affect performance. However, this tension could be overcome, or at least made transparent, if users were given a choice between increased performance and increased security, depending on the application being used. He suggested that the default should favor security over performance.

Another idea to improve security, Brickell suggested, is to apply mitigations to more sensitive applications, which could be executed on a separate partition, such as a separate processor core that shares no resources with other cores. Multiple protected applications with mutual trust could share a separate core. There could also be separate caches devoted to different security sensitivity levels. Brickell noted that doing so involves a trade-off between security and performance: security improves with fewer applications in each partition, while performance improves with more threads in each partition.
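The partitioning idea, combined with a user-selectable mode that defaults to security, can be sketched as a simple assignment policy. This is a hypothetical illustration only: the `App` fields, the `trust_group` label, and the two mode names are assumptions, not a description of any vendor’s scheduler. In security mode, each mutually trusting group of sensitive applications gets its own isolated partition; in performance mode, everything shares one.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    name: str
    sensitive: bool
    trust_group: str  # hypothetical label: apps in one group trust each other


def assign_partitions(apps, mode="security"):
    """Map partition IDs to the apps placed in them.

    mode="security" (the default): each trust group of sensitive apps gets a
    dedicated partition shared with no one outside that group.
    mode="performance": every app shares a single partition.
    """
    if mode not in ("security", "performance"):
        raise ValueError(f"unknown mode: {mode}")
    partitions = defaultdict(list)
    for app in apps:
        if mode == "security" and app.sensitive:
            key = f"isolated:{app.trust_group}"
        else:
            key = "shared"
        partitions[key].append(app.name)
    return dict(partitions)


apps = [
    App("browser", False, "user"),
    App("wallet", True, "payments"),
    App("keystore", True, "payments"),
    App("drm", True, "media"),
]
secure = assign_partitions(apps)                     # default favors security
fast = assign_partitions(apps, mode="performance")
```

The security mode needs three partitions here versus one in performance mode, which is the trade-off Brickell described: fewer applications per partition means more partitions (and fewer threads sharing each one).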

Many hardware vendors are already designing hardware to partition applications through trusted execution environments (TEEs), which provide a place to execute applications protected from eavesdropping on secret data values or control flow detection by the main operating system. Brickell said that hardware engineers could design TEEs to protect from side-channel attacks if they use separate resources for the TEEs, but right now some of the TEEs share a cache with the main OS and do not clean the cache on a context switch between the TEE execution and the main OS execution. There is a good business reason to pursue expanding the features and uses of TEEs, Brickell noted.
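Why a shared, uncleaned cache undermines a TEE can be shown with a toy model in which the cache is just a set of resident line addresses. The class and function names, the addresses, and the single secret bit are hypothetical simplifications. The TEE touches a line whose address depends on a secret; if the cache is not flushed on the switch back to the main OS, the OS can tell which line is resident by probing for hits.

```python
class SharedCache:
    """Toy model: the cache is the set of line addresses currently resident."""

    def __init__(self):
        self.lines = set()

    def access(self, addr: int) -> bool:
        """Return True on a hit (fast), False on a miss; then cache the line."""
        hit = addr in self.lines
        self.lines.add(addr)
        return hit

    def flush(self):
        self.lines.clear()


def run_tee_then_os(secret_bit: int, flush_on_exit: bool) -> list[bool]:
    cache = SharedCache()
    # TEE code touches a line whose address depends on a secret bit.
    cache.access(0x1000 + 64 * secret_bit)
    if flush_on_exit:
        cache.flush()  # clean shared state on the TEE-to-OS context switch
    # The OS probes both candidate lines; a hit reveals which one the TEE used.
    return [cache.access(0x1000 + 64 * b) for b in (0, 1)]
```

Without the flush, the probe results are `[True, False]` or `[False, True]` depending on the secret; with the flush, both secrets yield `[False, False]`, so the probe learns nothing. Dedicating a separate cache to the TEE would achieve the same effect without the flush cost.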

GALEN HUNT

Microsoft Research

Galen Hunt described how isolation in microcontroller units (MCUs) enables Azure Sphere to create highly secure MCUs for use in Internet of Things (IoT) devices.

Hunt explained that MCUs are low-cost, frequently Wi-Fi enabled, single chips that are powering the IoT device deluge. Nine billion new MCUs are built and deployed every year, and there are 50 billion to 100 billion MCUs already in use, from toys to appliances to office equipment. Hunt explained that almost everything with a status light is using at least one MCU. While MCUs used to be considered “dark matter”—beyond the Internet’s reach—that is no longer the case.

Connected devices make a consumer’s life easier, but their connectivity makes them vulnerable to the growing number of ransomware, botnet, and malware attacks. In 2016, for example, the Mirai botnet attack exploited weaknesses in more than 100,000 IoT security cameras to launch a widespread denial-of-service attack. The attack was not detected for some time and could not be fixed by a remote update, Hunt explained. In a 2017 example, hackers gained access to a casino’s sensitive client information via an IoT thermostat in the casino’s fish tank.

IoT devices can also potentially be turned into physical-world weapons. A gas stove manufacturer discovered (and fixed) a vulnerability that could have allowed a hacker to create an explosion in a user’s home. IoT capabilities in HVAC units could pose similar risks. Yet many manufacturers are so focused on capabilities and sales that they become blind to such dangers, Hunt said, or lack the security expertise to adequately address them.

To counter these dangers, Hunt noted, Microsoft is focused on ensuring that its MCUs satisfy what the company has outlined as “The Seven Properties of Highly Secure Devices.”1 These properties include a hardware root of trust, defense in depth, small trusted computing bases, dynamic compartments, certificate-based authentication, failure reporting, and renewable security. Security must be end to end in this framework, meaning complete throughout hardware, software, and the cloud, because insecurity in one of those areas invariably affects all of them.

___________________

1 See G. Hunt, G. Letey, and E.B. Nightingale, 2017, “The Seven Properties of Highly Secure Devices,” Microsoft Research NExT Operating Systems Technologies Group, MSR-TR-2017-16, March, https://www.microsoft.com/en-us/research/research-area/security-privacy-cryptography/publications/.

Hunt described how Microsoft is trying to bring the best available knowledge about security and connectivity to the world of IoT. Azure Sphere’s MCUs are connected, secure, crossover devices that enable other manufacturers to build highly secured IoT devices. They run in part on cloud services, which form an integral part of the system’s security by brokering hardware trust, detecting emerging threats, and providing software updates. The MCUs also have real-time processing, more sophisticated security, physical segregation, and firewalls. Their security makeup includes several isolated subsystems, such as the application processor, Wi-Fi, and input/output peripherals and processing. Context switching is not allowed; instead, Hunt explained, everything is completely separate, with no shared memory, resources, networking, or processors.

The basis of the security model is Microsoft’s hardware security platform, the Pluton Security Subsystem. It protects the device’s identity, never shares its cryptographic keys with software, and enables secure device reboots. Its separate, isolated core has its own dedicated memory but no cache or speculative execution; that core runs safety-critical code on slower, safer processors. Because of Pluton’s security mechanisms, devices with these MCUs can be considered highly secure, Hunt said. They have been tested and verified to resist data leakage and differential power attacks. Pluton validates all the other code, boots, hashes, and signatures; it also protects firewalls and uses private, self-generated keys for identification.
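The idea of a root of trust that validates each later stage before it runs can be sketched as a hash check over an ordered boot chain. This is a simplified, hypothetical illustration, not Pluton’s actual mechanism: real secure boot typically verifies digital signatures rather than comparing against a fixed table, and the stage names and images in `APPROVED` are invented. The key property is that boot stops at the first stage whose image does not match.

```python
import hashlib


def digest(image: bytes) -> str:
    return hashlib.sha256(image).hexdigest()


# Hypothetical approved digests held by the root of trust.
APPROVED = {
    "bootloader": digest(b"bootloader v1"),
    "os_kernel": digest(b"kernel v5"),
    "application": digest(b"app v3"),
}


def verified_boot(stages):
    """Run stages in order, stopping at the first image that is not approved.

    Returns the names of the stages that were allowed to run.
    """
    booted = []
    for name, image in stages:
        if APPROVED.get(name) != digest(image):
            break  # refuse to hand control to unverified code
        booted.append(name)
    return booted


good_chain = [("bootloader", b"bootloader v1"), ("os_kernel", b"kernel v5"),
              ("application", b"app v3")]
tampered_chain = [("bootloader", b"bootloader v1"), ("os_kernel", b"kernel v5 + implant"),
                  ("application", b"app v3")]
```

With the tampered kernel, only the bootloader runs; the later stages never receive control, so a modified image cannot be used to subvert the rest of the chain.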

Hunt stated that throughout Pluton’s design, Microsoft has applied the principle of “least privilege,” which means that every layer can access information or resources only for its own legitimate purpose. If one part of the stack becomes compromised, least privilege prevents an attack from escalating to other areas.
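Least privilege can be illustrated as an explicit grant table: each component may touch only the resources its purpose requires, so compromising one component yields only that component’s grants. The component and resource names below are hypothetical and do not describe Azure Sphere’s actual configuration.

```python
# Hypothetical grants: each component may access only what its purpose needs.
GRANTS = {
    "wifi": {"network"},
    "app": {"app_storage", "gpio"},
    "updater": {"network", "firmware_slot"},
}


class PermissionDenied(Exception):
    pass


def is_allowed(component: str, resource: str) -> bool:
    return resource in GRANTS.get(component, set())


def access(component: str, resource: str) -> str:
    """Perform an access only if it is explicitly granted."""
    if not is_allowed(component, resource):
        raise PermissionDenied(f"{component} may not access {resource}")
    return f"{component} -> {resource}"


def blast_radius(compromised: str) -> set:
    """Resources an attacker gains by fully controlling one component."""
    return set(GRANTS.get(compromised, set()))
```

Here a compromised Wi-Fi component reaches only the network, not the firmware slot, so the attack cannot escalate into rewriting firmware.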

Security is foundational to Azure Sphere, and separation is foundational to security. As a result, Hunt sees physical isolation as the system’s most critical design feature. The MCUs also use error logs, he said, located in all the chips, to monitor any unexpected problems.

ANDREW MYERS

Cornell University

Andrew Myers presented ideas for designing hardware without the timing channels that are at the crux of Spectre. He noted that he and his colleague, Ed Suh, have carried out some promising research in pursuit of this goal, although more work is needed.

Although many have been inclined to trust that lower levels in the computing stack are adequately secure, Myers said Spectre is a wake-up call that they are not. Indeed, all layers of the stack are vulnerable to side-channel attacks. Spectre raised the security stakes, both because it is so widespread and because it affects a stack’s lowest layer.

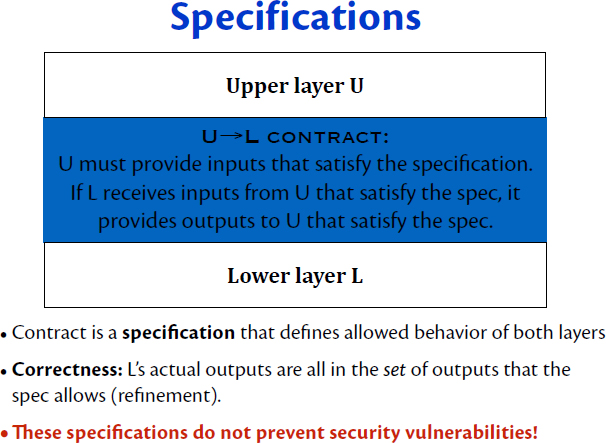

Every level of the stack needs improved security, Myers said, but we also need to consider the system as a whole. Systems are designed in layers as a way to manage complexity. However, there needs to be some kind of contract between layers, with each layer designed to rely on the layer below it satisfying its contract. The usual notion of a contract is a specification that describes the possible behavior and outputs of that layer. As long as the actual behavior of a layer is one of the possible behaviors, it can be said that the behavior refines the specification (see Figure 2.2).
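The notion of refinement can be made concrete with a small sketch: a specification maps each input to the set of behaviors it permits, and an implementation refines it if every behavior it actually exhibits is one of those permitted. The specific `spec`, `impl_a`, and `impl_b` are invented for illustration, and checking a finite set of inputs is a test, not a proof.

```python
from typing import Callable, Iterable

# A specification maps an input to the set of outputs it permits.
Spec = Callable[[int], set]


def refines(impl: Callable[[int], int], spec: Spec, inputs: Iterable[int]) -> bool:
    """True if, on every tested input, impl's output is one the spec permits."""
    return all(impl(x) in spec(x) for x in inputs)


def spec(x: int) -> set:
    # Deliberately nondeterministic: any value from x to x + 2 is acceptable.
    return {x, x + 1, x + 2}


def impl_a(x: int) -> int:
    return x + 1  # chooses one permitted behavior


def impl_b(x: int) -> int:
    return x + 3  # falls outside the specification
```

`impl_a` refines the specification because its behavior is always among the permitted ones; `impl_b` does not. Note that this contract says nothing about how long each call takes, which is exactly the gap Myers identified.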

Before Spectre, contracts defined in terms of refinement of specifications were generally thought to be enough to enforce security. Now, Myers claimed, it is clear that these kinds of specifications are not good enough. The key flaw is that specifications to date have not accounted for the time delay involved in performing computer instructions, and these time delays can be exploited to create dangerous timing channels. The Spectre attack creates a correlation between these time delays and the contents of otherwise inaccessible memory locations. These attacks can completely bypass the normal OS memory protection.
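A pure simulation can show how a read that honors its functional contract still leaks through time. In this toy model (all names, the memory layout, and the cycle counts are hypothetical), `bounded_read` always returns 0 for an out-of-bounds index, exactly as its specification requires. When speculation is modeled, however, a dependent load runs before the bounds check resolves and leaves a cache line indexed by the secret value, so a later timing probe recovers it. Nothing here touches real hardware.

```python
MEMORY = [10, 20, 30, 42]  # indices 0-2: public array; index 3: a "secret" byte
PUBLIC_LEN = 3
LINE = 64                  # toy cache-line size


class ToyCPU:
    def __init__(self, speculative: bool):
        self.speculative = speculative
        self.cache = set()

    def bounded_read(self, i: int) -> int:
        """Contract: return MEMORY[i] if i < PUBLIC_LEN, else 0."""
        if self.speculative:
            # Model speculation: the dependent load executes before the bounds
            # check resolves, caching a line indexed by the (possibly secret) value.
            self.cache.add(MEMORY[i] * LINE)
        if i < PUBLIC_LEN:
            value = MEMORY[i]
            self.cache.add(value * LINE)
            return value
        return 0  # architectural result is squashed; the contract is honored

    def probe_time(self, addr: int) -> int:
        return 1 if addr in self.cache else 100  # simulated cycles


def recover(cpu: ToyCPU, i: int):
    """Infer the byte at index i from timing alone, or None if nothing leaks."""
    cpu.bounded_read(i)
    hits = [v for v in range(256) if cpu.probe_time(v * LINE) < 50]
    return hits[0] if len(hits) == 1 else None
```

Both CPUs return 0 for `bounded_read(3)`, so both refine the functional specification, yet only the speculative one lets `recover` reconstruct the secret value 42 from timing.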

Myers argued that safer contracts must address time. Protecting the timing channel will require designing contracts to constrain information flow between computing layers—in particular, its flow into time. Contents of sensitive memory should be prevented from influencing the amount of time taken by instructions that are observable by untrusted parties. Initial research has demonstrated the potential for designing hardware that obeys this rule, but there are still many technical challenges to address, such as how to specify these information flow constraints between all the layers and how to design software and hardware that will satisfy those contracts.
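The rule that secret contents must not influence observable time can be illustrated in software with the classic comparison example. In this sketch, "time" is modeled as a count of loop steps, and the secret’s length is assumed to be public. The early-exit version takes longer the more leading bytes of a guess are correct, which leaks information about the secret; the constant-time version always does the same amount of work. Python’s standard library provides `hmac.compare_digest` for real use of this idea.

```python
def leaky_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Early-exit comparison. Returns (result, steps); steps model elapsed time."""
    steps = 0
    if len(secret) != len(guess):  # length assumed public in this sketch
        return False, steps
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps  # exits sooner on an earlier mismatch
    return True, steps


def constant_time_equal(secret: bytes, guess: bytes) -> tuple[bool, int]:
    """Examines every byte regardless of where mismatches occur."""
    steps = 0
    if len(secret) != len(guess):
        return False, steps
    diff = 0
    for s, g in zip(secret, guess):
        steps += 1
        diff |= s ^ g  # accumulate differences without branching on them
    return diff == 0, steps
```

With `secret = b"k3y!"`, the leaky version takes 1 step for `b"a000"` but 3 steps for `b"k300"`, revealing that the first two bytes are right; the constant-time version takes 4 steps for both.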

Myers noted that Microsoft’s approach to isolation could satisfy these information flow contracts. However, the complexity of isolation and information flow is problematic. Securing hardware timing channels has to start from a chip’s initial construction, and the approach must be kept simple to keep the hardware safe, he said.

Fixing hardware in response to Spectre requires a change to the chip’s architecture—in particular, partitioning the cache so that it can store different levels of sensitive information, Myers said. Adding information flow policies to the code that defines the hardware implementation makes it possible to verify that information is kept secret, including preventing timing channels. If there are timing leaks in the code for the hardware implementation, then the compiler for the hardware description language will reject that code.
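The way a compiler can reject hardware code with timing leaks can be sketched as a tiny information flow checker. This is a toy stand-in, far simpler than a real secure hardware description language: it assumes a two-level lattice (public `L` below secret `H`), treats a design as a list of `(dest, sources)` assignments, and uses invented signal names. A design is rejected if any signal depends on data more secret than its own label, so a cache-hit signal (which determines observable latency) cannot be computed from a key.

```python
LEVEL = {"L": 0, "H": 1}  # two-point lattice: public (L) below secret (H)


def join(labels):
    """Least upper bound of a collection of labels (L if empty)."""
    return max(labels, key=LEVEL.get, default="L")


def check(labels: dict, assignments: list) -> list:
    """Return violations; an empty list means the design is accepted.

    labels: declared security label of every signal.
    assignments: (dest, [sources]) pairs; dest is computed from the sources.
    """
    errors = []
    for dest, sources in assignments:
        flow = join(labels[s] for s in sources)
        if LEVEL[flow] > LEVEL[labels[dest]]:
            errors.append(f"{dest} ({labels[dest]}) depends on {flow} data")
    return errors


labels = {"key": "H", "addr": "L", "cache_hit": "L", "latency": "L", "data_out": "H"}
leaky_design = [
    ("data_out", ["key", "addr"]),  # fine: secret output may depend on secrets
    ("cache_hit", ["key"]),         # leak: a public timing signal depends on the key
    ("latency", ["cache_hit"]),
]
fixed_design = [
    ("data_out", ["key", "addr"]),
    ("cache_hit", ["addr"]),        # timing now depends only on public data
    ("latency", ["cache_hit"]),
]
```

The leaky design is rejected at the `cache_hit` assignment, before any chip is built, while the fixed design, in which timing depends only on the public address, passes.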

Myers and his team used this methodology to build two relatively simple and safe microprocessors by modifying existing processor designs. Neither processor implements the full kind of speculative execution that Spectre exploits, but their RISC-V processor does perform speculative fetch, a less aggressive form of speculation, and their approach detected timing channel vulnerabilities in that speculation mechanism.

Encouragingly, he said, the added security in these two processors does not greatly affect performance. Although a proof of concept has been demonstrated, Myers acknowledged that his processors are simple, and research is needed to determine whether more features can be incorporated and whether the approach can scale to the complexity of modern commercial microprocessors.

To make secure chips, Myers argued, we must check information within the hardware design, but it is also important to remember that hardware is only one layer of the computing stack. To fully support all layers and guarantee safety, we need a more expressive way of defining contracts between layers (one that can talk about information flow in addition to functional correctness and timing), a better secure hardware description language, full specifications for the layers expressed in this language, and the capability to perform automated verification of the resulting stack of abstractions.

DISCUSSION

Several themes emerged from the question-and-answer period that followed the panelists’ presentations. Participants delved into Myers’s chip design and future chip design more broadly. They also discussed what to do during the wait for more secure chips, the challenges of IoT security, and how to determine whom to trust.

Exploring Myers’s Design

Bob Blakley, Citigroup, asked Myers how his proposed processor architecture differs from older architectures such as IBM System/360. Myers replied that his architecture specifically controls timing channels, whereas those architectures merely made no guarantees about timing while still allowing time to be measured.

Steven Lipner, SAFECode, asked if the design was influenced by computing trends from the 1980s, especially with regard to formally verifying the absence of storage channels and more ad hoc search for timing channels. Myers agreed that several such techniques were used as inspiration, but a key difference is the statically verified information flow, an approach that is less prone to difficulties than a dynamically verified flow, which was more prevalent in the 1980s.

Butler Lampson, Microsoft, asked whether the security provided by the hardware description language would fail to carry over to higher levels of the stack, and Myers replied that contracts between layers could extend the improved information flow policies to the higher levels. Lampson noted that it is impossible to predict how different software timing channels will interact with the improved contracts. Myers agreed but reiterated that the right contracts would make it possible to analyze the software and create any needed mitigations within the microarchitecture. While it is not yet possible to analyze software for timing channels, Myers said that some of his recent work is showing progress in this area.

Tadayoshi Kohno, University of Washington, asked if Myers’s research addresses other types of hardware vulnerabilities. While his team is mostly focused on confidentiality leaks, Myers noted that the approach could potentially be used to resist other types of attacks, such as integrity or availability attacks.

Another participant expressed agreement with Myers’s design in theory but argued that the technology to implement it has not yet been achieved. Myers agreed. For example, he said, there is a need to develop more expressive ways to state information flow policies, although he believes this is possible.

Hunt and Brickell expressed doubt that formal verification of such a system would be possible, but Hunt noted that software fuzzing techniques could be applied. The participant replied that he had in mind not formal verification but a more on-the-fly, OS-level detect-and-defend set of instructions or, at the very least, a red flag. Myers added that it is very difficult to test for information leakage. Software fuzzing catches ordinary violations, but testing for information flow violations is slower because it has to be more thorough. Another promising technique, he suggested, could be relational model checking.
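Testing for leakage is harder than ordinary testing because it is a property of pairs of runs: with the same public input, two different secrets must produce the same observable timing (noninterference). The sketch below checks that relational property exhaustively over a tiny input domain. It is only a small-scale illustration inspired by relational model checking, which in practice works symbolically on the design rather than by enumeration; the comparison functions and the step-count model of time are assumptions made for the example.

```python
from itertools import product


def leaky_equal(secret: bytes, guess: bytes):
    """Early-exit comparison; returns (result, steps) with steps modeling time."""
    steps = 0
    for s, g in zip(secret, guess):
        steps += 1
        if s != g:
            return False, steps
    return True, steps


def constant_time_equal(secret: bytes, guess: bytes):
    """Always examines every byte; returns (result, steps)."""
    diff, steps = 0, 0
    for s, g in zip(secret, guess):
        steps += 1
        diff |= s ^ g
    return diff == 0, steps


def find_leak(program, length=3, alphabet=(0, 1)):
    """Exhaustively check noninterference of timing on a small domain.

    For each public input, every secret must yield the same step count.
    Returns a counterexample (public, secret_a, secret_b) or None.
    """
    domain = [bytes(t) for t in product(alphabet, repeat=length)]
    for public in domain:
        first_secret = domain[0]
        first_time = program(first_secret, public)[1]
        for secret in domain[1:]:
            if program(secret, public)[1] != first_time:
                return public, first_secret, secret
    return None
```

For the early-exit comparison, the check finds two secrets that take different numbers of steps on the same public input; for the constant-time version it finds none. Ordinary single-run fuzzing would not flag either function, because each returns the correct answer every time.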

Options for Built-in Device Expiration

Blakley pointed out that while no system is 100 percent secure, companies must still make decisions and move forward. What can companies do when only imperfect systems and aftermarket mitigations are available? One option, he continued, is to make devices with some sort of expiration date built in, such as through an automatic deactivation or kill-switch mechanism. For example, Hunt said Azure Sphere considered designing devices to auto-disconnect after 10 years. The idea was not popular with vendors and was eventually dropped, although Microsoft did retain the right to disconnect any vendor’s devices if it discovers a vulnerability. Schneider described another potential kill-switch approach: sealing batteries so they cannot be replaced, meaning the whole device must be discarded once the battery life runs out.

Paul Waller, U.K. National Cyber Security Centre, agreed that transparency with regard to a device’s life span and its support system would help. He suggested that instead of asserting that a product will be functional and secure forever, it might be more feasible to assure customers that the company will fix the product if a security vulnerability is discovered. Several participants added that it would be essential to clearly communicate such a guarantee to consumers, perhaps through some sort of standard label.

Katie Moussouris, Luta Security, expressed concern about the environmental impact of a built-in expiration or kill switch, because such an approach could add to the already worrisome burden of electronic waste. Hunt clarified that a kill switch would not necessarily have to mean the device would be nonfunctional, just that it would no longer be connected. That connectivity could even potentially be reinstated after patching or updates are available to ensure continued security.
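Hunt’s distinction between disabling a device and merely disconnecting it can be expressed as a simple policy. This is a hypothetical sketch: the function name, the dictionary keys, and the idea of a fixed end-of-support date are illustrative assumptions, with the 10-year window Azure Sphere considered used only as an example. Local functions keep working after support ends; only network connectivity is gated, and it can be restored once a patch is applied.

```python
from datetime import date


def device_state(today: date, support_ends: date, patched_after_end: bool = False) -> dict:
    """Decide what a device may do on a given day.

    Local functions are never disabled; connectivity lapses at the end of
    support and is reinstated if the device receives a post-expiry patch.
    """
    connected = today < support_ends or patched_after_end
    return {"local_functions": True, "connected": connected}


# Hypothetical device manufactured in 2020 with a 10-year support window.
support_ends = date(2030, 1, 1)
```

Because the device remains usable offline, this design avoids turning expiration into electronic waste, which addresses the concern Moussouris raised.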

Challenges of the Internet of Things

Participants grappled with some of the concerns posed by vulnerabilities in the IoT realm. Noting that Wi-Fi is virtually everywhere, Grosse asked whether it is even possible to isolate oneself from IoT devices. Hunt said it likely is not, although IoT security will improve once Azure Sphere–powered devices come out. Grosse asked whether those devices could at least let a user know what the device is doing. Hunt explained that Azure Sphere intends to add that feature so that users would have more control, but noted that this approach does not address home routers, which represent another source of IoT insecurity. In response to a question from Ed Frank about Azure Sphere’s level of confidence in the security coverage of its MCUs, Hunt noted that the MCUs, like anything, are not 100 percent guaranteed, and the company continues to consider potential areas of concern.

Lampson reiterated Kocher’s idea that different domains require different techniques. To keep IoT devices safe, he continued, both technology and regulation are required because people’s lives are at stake. Effective IoT regulation, he said, should mandate appropriate partitioning so that a safety-critical core, much smaller than the rest of the code but integral to its security, is separate from connected parts. If its vendor cannot demonstrate that baseline, then the product should not be sold, he emphasized, because it will be a danger to the public.

Whom Can We Trust?

Participants discussed the security motivations and capabilities of various stakeholders. Given the diverse landscape of players and an absence of regulation, what assumptions can be made about who can be trusted to protect security and to respond adequately when things go wrong?

Kocher asked the panel if, fundamentally, vendors can be trusted to get security right. Brickell answered that vendors are getting a lot of security right on a broad level, but vulnerabilities change over time and it is impossible for anyone to have a complete picture. Although some do a better job at security than others, in many cases there are not many viable alternatives.

Kocher agreed that neither proprietary nor open source products can be completely trusted, and he added that there is a dearth of regulation requiring disclosure of known problems in both cases. Right now, any acknowledgment is up to the engineers themselves. Kocher wondered if it would be possible for vendors to disclose relevant information about the elements of their system in order to create transparency, and hopefully trust, in device security. No matter what we do, Brickell said, a future without any security problems is highly unlikely, so we must plan to respond adequately to problems as they arise.

Lampson pointed to the 2007 National Academies report Software for Dependable Systems: Sufficient Evidence?,2 which asserted that sellers of safety-critical technology should have to provide sufficient evidence that adequate protections are being built in. To really improve security, he said, we must build with two pillars: better technology and regulation. Technology will improve more quickly, and engineers will have to figure out how to collaborate with the regulation pillar, which is likely to develop much more slowly.

Lipner, who served on the committee that created the Sufficient Evidence? report, noted that the report offered valuable insights but also left some problems unsolved, such as exactly what evidence is convincing enough to determine that software is secure and who should be responsible for developing and documenting that evidence. In addition, he said, the gap between intentions and reality can be wide, and there is more work to be done to define and document security protections.

___________________

2 National Research Council, 2007, Software for Dependable Systems: Sufficient Evidence? The National Academies Press, Washington, D.C., https://doi.org/10.17226/11923.

Moussouris pointed out that commercial realities factor in as well. Device makers are motivated by the desire to sell their products; for a device to be successful, she said, it must have developers eager to create applications for its use. Hunt noted that if security is taken seriously enough, it is possible for a company to change its practices, even in quite dramatic ways. For example, Microsoft once stopped work on a product for a relatively long period in order to address security vulnerabilities before moving forward. However, such events are uncommon, and Moussouris emphasized that security also needs to be a priority among application developers. Another participant noted that until businesses see losses, they are not likely to be willing to change. In some cases, it may take actual fatalities—for example, caused by breaches in the IoT realm—for developers to start prioritizing security.

Rethinking Assumptions

Manferdelli asked whether, in this climate of overcomplexity, it is even possible to design a resilient system. Myers asserted that it is possible, explaining that the first step is to create technologies to support secure systems and verify that their information flow properties work as they are supposed to. But technology alone is not necessarily sufficient in the absence of a regulatory environment that incentivizes companies to take security seriously. Kocher pointed out that thus far, security design has been a very closed, opaque process, with little or no regulatory framework.

Kocher brought up a broader question: Can we accept a world with inevitable security vulnerabilities, or should we pursue intentionally simpler processors that increase security? Brickell pointed out that the complexity of the whole system is at the crux of the issue. Perhaps, he suggested, we should save complexity for applications that really need it and keep others as simple as possible. On the flip side, Blakley wondered if simplicity was a plausible goal, given the complexity of existing systems and the reasons they became so complex in the first place.