3

Adversarial Attacks

MEDIA FORENSICS

Matthew Turek, Defense Advanced Research Projects Agency

Matthew Turek, manager of the Media Forensics (MediFor) program at the Defense Advanced Research Projects Agency, provided an overview of how the program detects manipulations of media assets such as images and video. He began by describing the program’s background and telling the MediFor story, using a slide with several images and the question “Do you believe what you see?” He highlighted mantras such as “a picture is worth a thousand words” and “seeing is believing” that shape the current narrative around visual media and noted that this narrative will need to evolve. Turek then walked through several slides of images that were either authentic or manipulated.

It is useful to understand whether and how an image has been manipulated and to be able to describe how assets are related. Turek noted that media manipulation is not a new problem, but the dramatic growth over the past 20 years in digital content from a host of sources, such as social media, YouTube, and surveillance video, has changed the landscape. With the rise of digital media, media assets can now be manipulated with less skill, effort, and resources. He pointed out that although an individual with a gaming-level personal computer can generate a convincing manipulation, this cannot yet be done reliably and with targeted intent, though the field is heading in that direction.

Before MediFor, the integrity of image and video assets could not be assessed at scale, even with sufficient expertise. Most assessments were manual, and more software was available to create manipulations than to detect them. Turek hopes that MediFor will raise awareness in the research community and encourage the development of software and techniques for automatically detecting manipulations in media.

To illustrate manual assessment, he showed a manipulated image of a hovercraft landing and described how manual indicators such as the wakes, shadow consistency, and Sun angle can be used to judge the integrity of the image. Turek described two underlying challenges of assessing manipulation: (1) there is not enough time or expert personnel to analyze individual images or videos manually, and (2) no one technique will work. He then pointed out that broad-based capabilities for detecting manipulations in media are needed.

MediFor detects media manipulations by examining three essential elements of media integrity: digital integrity, physical integrity, and semantic integrity. He pointed out that semantic and physical integrity indicators are needed because digital integrity indicators, although strong, can break down under certain circumstances (e.g., high levels of compression). Turek described a fourth element, integrity reasoning, which combines the digital, physical, and semantic integrity elements into one integrated assessment. The MediFor system was built to perform this integrated assessment of all the digital, physical, and semantic analytics and indicators and ultimately to produce an integrity report. The report consists of the media integrity indicators, an integrity score (derived from the individual media integrity indicators), and the integrity basis (e.g., camera geometry). The system also has a console through which a user or analyst can interact with it and upload media assets.
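A minimal sketch of the integrated assessment and report described above, assuming each analytic emits a manipulation score in [0, 1] and that a weighted average is an acceptable fusion rule. The names `IntegrityReport` and `fuse_indicators`, the weights, and the score orientation (1.0 meaning no evidence of manipulation) are hypothetical illustrations, not MediFor’s actual design.

```python
from dataclasses import dataclass, field


@dataclass
class IntegrityReport:
    """Hypothetical container for the report fields described in the talk."""
    indicators: dict            # indicator name -> manipulation score in [0, 1]
    integrity_score: float      # 1.0 = no evidence of manipulation (assumed orientation)
    basis: list = field(default_factory=list)  # evidence the assessment rests on


def fuse_indicators(indicators, weights, basis=None):
    """Fuse per-element manipulation scores into one report by weighted average.

    Assumption: a score of 1.0 means strong evidence of manipulation.
    Every indicator must have an entry in `weights`.
    """
    total_weight = sum(weights[name] for name in indicators)
    if total_weight <= 0:
        raise ValueError("indicator weights must sum to a positive number")
    manipulation = sum(weights[name] * score
                       for name, score in indicators.items()) / total_weight
    return IntegrityReport(indicators=dict(indicators),
                           integrity_score=1.0 - manipulation,
                           basis=list(basis or []))
```

Under these assumptions, `fuse_indicators({"digital": 0.2, "physical": 0.8, "semantic": 0.5}, {"digital": 1.0, "physical": 2.0, "semantic": 1.0})` gives an integrity score of about 0.425. A weighted mean is only the simplest choice; calibrated or learned fusion would be the natural refinement.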

Turek described the important indicators for each of the four elements: digital integrity, physical integrity, semantic integrity, and integrity reasoning. For each, he covered the underlying questions, examples of manipulation detection, the previous state of the art, the work of MediFor researchers, and the remaining challenges.

- Digital integrity indicators. The underlying questions of digital integrity are as follows: Are the pixels or their representations inconsistent, and is the metadata consistent? Examples that indicate manipulation include blurred edges from object insertion, differing camera properties, replicated pixels, and mangled compression. MediFor researchers’ work includes detecting color shifts, copy-paste operations, and composites. Turek highlighted one researcher’s observation that computers can pick up on low-level signals that humans cannot, such as rounding differences arising from different implementations of compression. Before MediFor, manipulations were not classified or characterized in detail; doing so was manually intensive, and evidence from multiple sources was hard to combine analytically. Classifying imagery as real, simulated (e.g., deepfakes), or composite remains challenging. Currently, MediFor researchers are gathering evidence to determine how an image or video was manipulated and where the manipulation occurred.

- Physical integrity indicators. The main underlying question of physical integrity is as follows: Are the laws of physics violated? Examples that indicate manipulation include inconsistent shadows and lighting, elongation or compression, and multiple vanishing points. Previously, most detection technology focused on the digital level, and the processes were manual and time-consuming. MediFor analyzes shadows, highlights, and scene dynamics such as abrupt, inexplicable changes in motion. Turek highlighted the difficulties MediFor researchers face with several aspects of reflection analysis, such as automatic detection, understanding the geometry, and checking consistency. Automatically detecting shadows and reflections and assessing scene consistency remain challenging.

- Semantic integrity indicators. The main underlying question of semantic integrity is as follows: Are the hypotheses about a visual asset supported or contradicted? Questions that help determine manipulation include the following: Is there contradicting evidence in associated images? Was the media asset repurposed? Are dates, times, and locations verifiable? Examples of MediFor researchers’ work include combining information from different assets and establishing media provenance. Previously, association and classification worked well only with dense coverage and unique features such as text. MediFor researchers are working on the difficult problem of media provenance, including analysis with fewer images, less overlap, and subtler image cues. Challenges include verifying spatiotemporal assumptions, such as where on Earth an image was taken at a particular time, uncovering the provenance of an asset, and analyzing the space-time aspects of video.

- Integrity reasoning indicators. The underlying question of integrity reasoning is “How can digital, physical, and semantic integrity indicators be assimilated into an integrated assessment?” Previously, most measurements were taken manually, were subjective, and had few quantitative standards. MediFor researchers are working on fusing integrity indicators using contextual information because each algorithm looks for specific elements. Establishing confidence measures for the various analytics and combining the scores of different integrity modules remain challenges.
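Replicated pixels, one of the digital indicators listed above, can be illustrated with a minimal copy-move detector that looks for identical pixel blocks at different positions. This is a sketch under strong assumptions (a grayscale image given as a 2D list, exact matches, a hypothetical `find_duplicated_blocks` helper); it is not how MediFor performers detect copy-paste, and exact matching breaks down under recompression and produces false matches in flat regions.

```python
from collections import defaultdict


def find_duplicated_blocks(image, block=4, min_offset=4):
    """Return pairs of top-left corners whose block-by-block patches are identical.

    `image` is a 2D list of grayscale values. Pairs closer than `min_offset`
    (Manhattan distance) are ignored because overlapping blocks in smooth
    areas match trivially. Uniform regions far apart will still match.
    """
    height, width = len(image), len(image[0])
    seen = defaultdict(list)  # patch contents -> list of (row, col) positions
    for r in range(height - block + 1):
        for c in range(width - block + 1):
            patch = tuple(tuple(image[r + i][c:c + block]) for i in range(block))
            seen[patch].append((r, c))
    matches = []
    for positions in seen.values():
        for i, (r1, c1) in enumerate(positions):
            for r2, c2 in positions[i + 1:]:
                if abs(r1 - r2) + abs(c1 - c2) >= min_offset:
                    matches.append(((r1, c1), (r2, c2)))
    return matches
```

For example, on a 12-by-12 image with all-distinct pixel values in which the 4-by-4 block at (1, 1) was pasted at (7, 7), the function returns `[((1, 1), (7, 7))]`. Practical detectors match robust block features or keypoints instead of raw values so that the duplicate survives compression.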

The MediFor program collects data through annual challenges, with the goal of targeting particular applications. At the start of the program, the data set comprised a few thousand images. The National Institute of Standards and Technology, an evaluator of the MediFor program, conducted the Nimble Challenge in 2017. Its data set contained 13,500 images and 1,151 videos and focused on problems such as overhead imagery, commercial satellite data, and images embedded in scientific documents. Turek highlighted the auto journaling tool, which captures the history of manipulations in graphical form; some of the journaling steps can be automated. In 2018, the Media Forensics Challenge data set included 151,200 images and 3,628 videos. Problems extended to computer-generated imagery, green-screen manipulations, Photoshop manipulations, audio manipulations, and images and video altered by generative adversarial networks (GANs), the technology that underlies deepfakes. Future challenge problems will include the camera ID challenge and event recognition. The camera ID challenge will identify methods for telling whether an image was taken by a particular cell phone; event recognition is the ability to sort images into known events. Turek suggested that these capabilities will assist with provenance, which he described as the ability to understand the content of images and video.
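The journaling tool’s graphical history can be thought of as a directed acyclic graph in which nodes are asset states and edges are operations. The sketch below assumes a hypothetical dictionary encoding (each child asset id mapped to a list of `(parent_id, operation)` edges) and recovers the operations in an order consistent with how they were applied; the real tool’s format is not described in the talk.

```python
def manipulation_history(journal, final_id):
    """List (parent, operation, child) edges leading to `final_id`, base assets first.

    `journal` is a hypothetical encoding: child id -> [(parent_id, operation), ...].
    A composite has several parents, so the history is a DAG, not a chain.
    """
    ordered, visited = [], set()

    def visit(asset_id):
        if asset_id in visited:
            return
        visited.add(asset_id)
        edges = journal.get(asset_id, [])
        for parent_id, _ in edges:          # record every parent's history first
            visit(parent_id)
        for parent_id, operation in edges:  # then the operations producing this asset
            ordered.append((parent_id, operation, asset_id))

    visit(final_id)
    return ordered
```

For a composite made by cropping a base image, selecting a region from a donor image, pasting both, and blurring the seams, the function lists the crop and the selection first, then the two paste edges, then the blur.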

Annual evaluations comprehensively assess the MediFor program’s capabilities. In 2017, the evaluations focused on image manipulation detection. In 2018, they were expanded to include video manipulation detection as well as image manipulation detection and localization, which can identify the specific pixels in an image that have been manipulated. Turek explained that future evaluations may include work from the challenge problems and extend to video manipulation localization (both temporal and spatial), which could identify the specific ranges of frames that have been manipulated.
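Temporal localization of the kind Turek described could be produced by post-processing per-frame detector scores into frame ranges. The following is a minimal sketch assuming a detector that outputs one manipulation score per frame; the threshold, the minimum run length, and the function name are hypothetical.

```python
def manipulated_frame_ranges(frame_scores, threshold=0.5, min_length=1):
    """Merge consecutive frames scoring >= threshold into inclusive (start, end) ranges.

    Runs shorter than `min_length` frames are discarded as likely noise.
    """
    ranges, start = [], None
    for index, score in enumerate(frame_scores):
        if score >= threshold and start is None:
            start = index                      # a flagged run begins
        elif score < threshold and start is not None:
            if index - start >= min_length:
                ranges.append((start, index - 1))
            start = None                       # the run ended
    if start is not None and len(frame_scores) - start >= min_length:
        ranges.append((start, len(frame_scores) - 1))  # run reaches the last frame
    return ranges
```

For per-frame scores `[0.1, 0.7, 0.9, 0.2, 0.6, 0.1, 0.8, 0.9]`, the default settings return `[(1, 2), (4, 4), (6, 7)]`; requiring at least two consecutive frames drops the isolated frame 4.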

Turek presented a series of receiver operating characteristic1 (ROC) curves to show the quantitative results of the MediFor program. The curves assessed Media Forensics Challenge algorithms on Nimble Challenge data (4,000 images) spanning a variety of manipulations. The results show significant improvement in detecting image manipulations over time. Turek also introduced ROC curves that use the opt-in feature, which allows algorithms to choose which images to assess based on the features they were designed to evaluate. He explained that fusing media integrity indicators yields a significant performance gain. Turek noted that detecting video manipulations remains a challenge.
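ROC curves like these can be computed directly from detector scores. The sketch below assumes labels of 1 for manipulated and 0 for authentic, that higher scores mean “more likely manipulated,” and that both classes are present; it sweeps the decision threshold, integrates the area under the curve, and mimics the opt-in feature by scoring only the trials an algorithm chose to assess. The helper names are hypothetical, and this is not NIST’s scoring software.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) points as the threshold is lowered.

    Trials with tied scores are added together, giving one point per threshold.
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    pairs = sorted(zip(scores, labels), key=lambda p: p[0], reverse=True)
    points, tp, fp, i = [(0.0, 0.0)], 0, 0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x2 - x1) * (y1 + y2) / 2
               for (x1, y1), (x2, y2) in zip(points, points[1:]))


def opt_in_roc(scores, labels, opted_in):
    """ROC restricted to the trials the algorithm chose to score."""
    kept = [(s, l) for s, l, keep in zip(scores, labels, opted_in) if keep]
    return roc_points([s for s, _ in kept], [l for _, l in kept])
```

On six trials with scores 0.9, 0.8, 0.7, 0.4, 0.3, 0.2 and labels 1, 1, 0, 1, 0, 0, the area under the curve is 8/9; if the algorithm opts out of the 0.7 trial, it rises to 1.0. Opt-in results are therefore not directly comparable with full-scoring results, since each algorithm is scored on a different subset.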

Turek presented the GAN challenge, which addressed manipulation detection and localization on GAN-modified data. The challenge used three data sets: the full image set, containing images partially altered by GANs; the crop image set, containing images that are either entirely GAN-generated or real; and the video set, including deepfakes. Turek showed the full-scoring and opt-in ROC curves for the full and crop image data sets. He highlighted that only one researcher achieved an ideal result on images fully generated by GANs.

Turek showed a GAN video example of a face swap, in which one person’s face is pasted onto another person. He explained that for a face manipulation to be convincing, the original subject and the manipulated subject should share characteristics such as face shape, features, skin color, lighting, and background. The MediFor research groups observed that automated manipulations work best when the training and target data are well aligned. Turek showed the ROC curves with full scoring and the opt-in feature and noted that the results showed credible performance but also a need to reduce the false-alarm rate. Turek believes that future manipulations will become more robust and more widely shared, and he cited promising unpublished work from a group that hopes to attribute specific manipulations to specific GANs.

In response to a participant’s assertion that a manipulation-detection arms race exists, Turek agreed and added that the MediFor program has been open about its work and has published its results. He further emphasized that defenses consist of a broad range of capabilities (the digital, physical, and semantic layers) and that the burden will be on manipulators to obtain enough training data to produce a convincing manipulation. He also remarked that MediFor is investigating how easily a detector could be built into a GAN framework, noting that GANs are difficult to optimize and train. Asked whether downstream editing or other tools degrade sensor signatures, Turek said yes, but that such tools sometimes leave their own “fingerprints.” He said that MediFor researchers are investigating how to identify the software packages used to manipulate images or video. He added that the camera ID can often be recovered, even after some compression and manipulation; however, it degrades when the noise artifacts are removed.

___________________

1 The receiver operating characteristic curve plots the true positive rate of detecting media manipulation against the false positive rate. Ideal results have a true positive rate of 1 and a false positive rate of 0, which corresponds to the upper left corner of the plot.

Another participant asked why detection accuracy drops so sharply for video manipulations. Could the techniques that work well for images be applied to video, or is the gap a matter of scalability or computational complexity? Turek responded that video detection capabilities are newer, less robust, and less mature.
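Turek’s remark that the camera ID degrades when noise artifacts are removed can be illustrated with one widely used family of camera-identification techniques (not necessarily MediFor’s): estimate a camera’s sensor-noise fingerprint, often called photo-response non-uniformity (PRNU), by averaging noise residuals from images known to come from that camera, then correlate a query image’s residual with it. This simplified sketch assumes grayscale images as 2D lists, uses a 3x3 local mean as a stand-in for a proper denoiser, and uses hypothetical helper names. Anything that strips the noise residual also strips the signal being correlated.

```python
from statistics import fmean


def noise_residual(image):
    """Image minus its 3x3 local mean: a crude stand-in for a real denoising filter."""
    h, w = len(image), len(image[0])
    residual = []
    for r in range(h):
        row = []
        for c in range(w):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(h, r + 2))
                      for cc in range(max(0, c - 1), min(w, c + 2))]
            row.append(image[r][c] - fmean(window))
        residual.append(row)
    return residual


def camera_fingerprint(images):
    """Average the noise residuals of several images known to come from one camera."""
    residuals = [noise_residual(img) for img in images]
    h, w = len(residuals[0]), len(residuals[0][0])
    return [[fmean([res[r][c] for res in residuals]) for c in range(w)]
            for r in range(h)]


def normalized_correlation(a, b):
    """Normalized cross-correlation of two equally sized 2D arrays (range -1 to 1)."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    mx, my = fmean(xs), fmean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = (sum((x - mx) ** 2 for x in xs) * sum((y - my) ** 2 for y in ys)) ** 0.5
    return num / den
```

In a synthetic setting with flat scenes, a fixed per-pixel pattern scaled by brightness, and Gaussian shot noise, an image from the fingerprinted camera correlates strongly with the fingerprint while an image from a second camera correlates near zero. Real photographs need far better suppression of scene content than a local mean provides.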

FORENSIC TECHNIQUES

Hany Farid, Dartmouth College

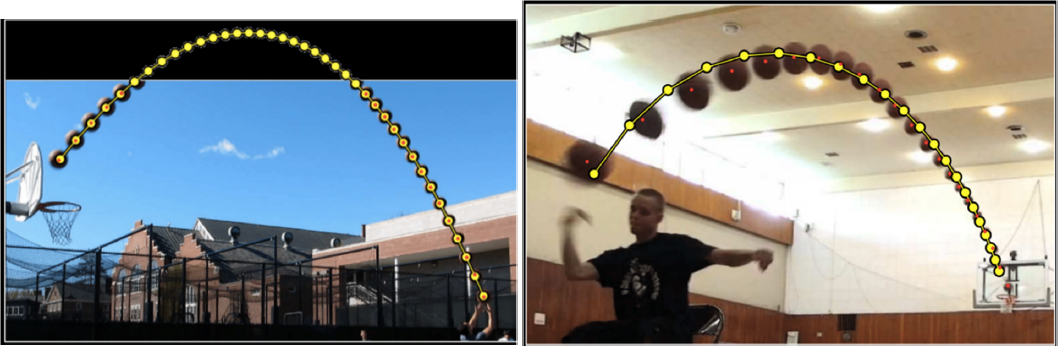

Hany Farid, Dartmouth College, explained that because visual media are so powerful, questions of media authenticity are serious and widespread. His presentation focused on three forensic techniques that can be used to determine the authenticity of digital videos. First, he shared a video of a man sliding off a ramp, flying through the air, and landing in a pool. Determining the video’s authenticity requires understanding the physics of the scene, and Farid explained how to reason about that type of motion in a video. An object launched in the three-dimensional (3D) world follows a ballistic trajectory, which is a parabola (assuming no significant effects of wind or other external forces). In a two-dimensional (2D) video, this parabola is evident if the camera views the plane of motion head-on, but the analysis becomes more challenging when the camera views the motion at an angle, because the projected path in the 2D video is then generally no longer a parabola. However, assuming the camera is static, one can parameterize the projection of a 3D parabola into a 2D camera and determine whether a purported ballistic trajectory is consistent with the physics of a 3D projectile. If the motion is physically plausible, the video is likely authentic (see Figure 3.1a); otherwise, it might be manipulated (see Figure 3.1b) (see Conotter et al., 2012). Although the initial analysis assumed a stationary camera, Farid noted that these techniques could also be applied to moving cameras. Each such technique applies to a narrow set of videos, but Farid said that researchers are developing a number of different forensic techniques that each apply to a small number of cases and that, combined, can be used to analyze a broad range of videos.
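The consistency test can be sketched as a linear-algebra check, assuming a static pinhole camera, known frame times `t`, and 2D image positions that have already been tracked. Because each 3D coordinate of a ballistic path is quadratic in time, the projected image coordinates are ratios of quadratics sharing one denominator, and the test asks whether such a model fits the track. This is a simplified illustration with a hypothetical function name, not the formulation of Conotter et al. (2012); it checks only a necessary condition, since it accepts any path with constant 3D acceleration, not just gravity. It requires NumPy.

```python
import numpy as np


def projected_parabola_residual(t, x, y):
    """Relative residual of fitting a projected 3D parabola to a tracked 2D path.

    Model (static pinhole camera): x(t) = a(t) / c(t), y(t) = b(t) / c(t),
    where a, b, c are quadratics in t and c(t) is proportional to depth.
    Multiplying out gives x*c(t) - a(t) = 0 and y*c(t) - b(t) = 0, which are
    linear in the nine coefficients, so a consistent track leaves a near-zero
    smallest singular value. Needs at least five frames to be informative.
    """
    t, x, y = (np.asarray(v, dtype=float) for v in (t, x, y))
    T = np.stack([np.ones_like(t), t, t ** 2], axis=1)   # columns 1, t, t^2
    Z = np.zeros_like(T)
    rows_x = np.hstack([-T, Z, x[:, None] * T])           # x*c(t) - a(t)
    rows_y = np.hstack([Z, -T, y[:, None] * T])           # y*c(t) - b(t)
    A = np.vstack([rows_x, rows_y])
    s = np.linalg.svd(A, compute_uv=False)                # descending order
    return s[-1] / s[0]
```

With 20 frames of a synthetic ballistic path viewed obliquely (time rescaled to [0, 1], normalized image coordinates), the residual is at round-off level, whereas a sinusoidal track yields a residual several orders of magnitude larger. Four frames give only eight equations for a model with eight degrees of freedom and always fit, so at least five are needed; with real, noisy tracks the decision threshold has to be calibrated.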

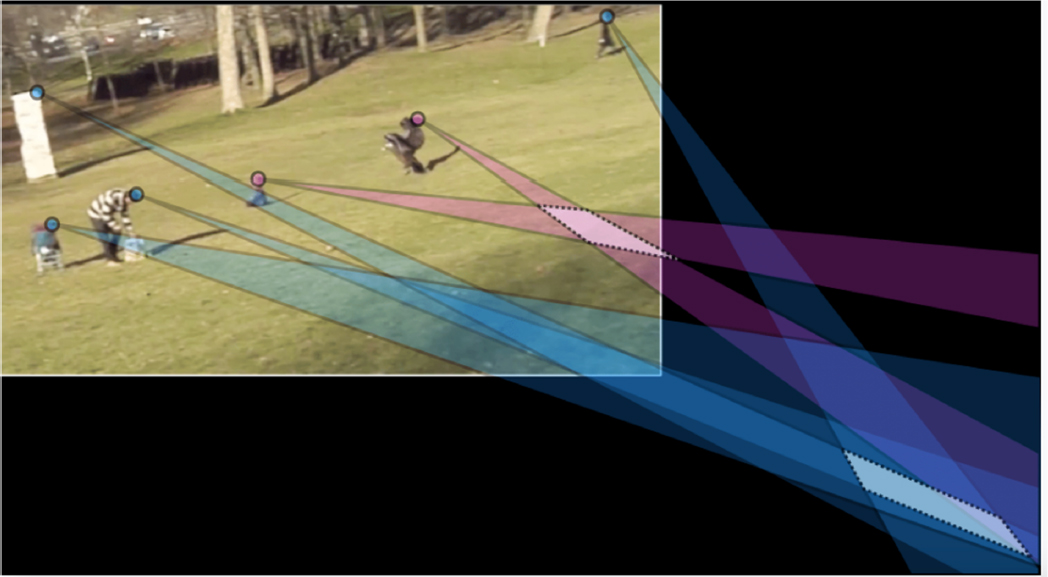

In the next example, Farid showed a video in which an eagle appears to swoop down and grab a child in a park. He explained that this motion is much more complex than the ballistic motion in the previous example because an eagle’s flight cannot be easily modeled. However, outdoor scenes provide reliable information about lighting because the Sun is the dominant light source. To determine the authenticity of this video, one can reason about the physical plausibility of its shadows. In the 3D world, a point on a shadow, the corresponding point on the object casting it, and the light source lie on a single line. Because perspective projection maps straight lines to straight lines, this linear constraint also holds in each 2D frame of a video. As shown in Figure 3.2, the linear constraints in blue for five objects in the scene are inconsistent with the constraints in purple for the eagle and the child. The eagle and the child are computer generated and were digitally inserted into a live-action scene.
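The shadow constraint can be turned into a simple check: draw the image line through each object point and its shadow point, find the point closest to all lines in the least-squares sense (the candidate projected light source), and measure how far each line misses it. This minimal sketch assumes hand-matched object and shadow points in pixel coordinates and a finite projected light position; the helper names are hypothetical, and published versions of this analysis treat uncertainty in the matched points more carefully than a least-squares fit does.

```python
import math


def line_through(p, q):
    """Line through image points p and q as (nx, ny, d) with unit normal: nx*x + ny*y = d."""
    (x1, y1), (x2, y2) = p, q
    nx, ny = y1 - y2, x2 - x1            # perpendicular to the direction q - p
    norm = math.hypot(nx, ny)
    nx, ny = nx / norm, ny / norm
    return nx, ny, nx * x1 + ny * y1


def shadow_consistency(pairs):
    """Least-squares common point of object-to-shadow lines and each line's distance to it.

    pairs: list of (object_point, shadow_point) in pixel coordinates.
    """
    lines = [line_through(o, s) for o, s in pairs]
    # Normal equations for minimizing sum_i (n_i . p - d_i)^2 over p = (px, py).
    sxx = sum(nx * nx for nx, _, _ in lines)
    sxy = sum(nx * ny for nx, ny, _ in lines)
    syy = sum(ny * ny for _, ny, _ in lines)
    bx = sum(nx * d for nx, _, d in lines)
    by = sum(ny * d for _, ny, d in lines)
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("lines are (nearly) parallel; light projects to infinity")
    px = (syy * bx - sxy * by) / det
    py = (sxx * by - sxy * bx) / det
    distances = [abs(nx * px + ny * py - d) for nx, ny, d in lines]
    return (px, py), distances
```

When all pairs are consistent, every distance is essentially zero and the common point is the projected light position. Adding one pair whose shadow points the wrong way produces distances of many pixels. A least-squares fit spreads that error across all lines, so large distances flag an inconsistent scene rather than pinpointing which object was inserted.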

Farid pointed out that adversaries who understand the techniques used to identify manipulations can create more authentic-looking imagery. This growing capability for manipulation is a societal concern.

In the final example, Farid showed a video in which former President Obama appears to be talking, but in reality the actor Jordan Peele is speaking. This is an example of a deepfake. Everything in the video of Obama is real except his mouth, which was altered with deep neural networks to match Peele’s speech. Videos like this could disrupt presidential elections and could give a politician plausible deniability for any real video that shows him or her in a poor light.

To detect this sort of fake, Farid and his team rely on soft biometrics: there is a relationship between what a person says and how he or she says it (e.g., in terms of facial expressions or head movements). Measuring these correlations and building a probabilistic model from them reveals which videos are likely to be authentic and which are likely to be fake. His team is building these models for individual world leaders, though they do not yet know whether the models will generalize to other people. Farid suspects they will, because human faces do not move in completely random ways during speech.
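A minimal sketch of the soft-biometric idea, assuming per-frame facial and head-movement measurements have already been extracted for each clip: summarize a clip by the pairwise correlations between its feature tracks, fit a simple probabilistic model (here a diagonal Gaussian) to clips known to show the real person, and score new clips by how far their correlations fall from that profile. The features, the model, and all function names are illustrative assumptions, not the team’s actual choices.

```python
import math
from itertools import combinations
from statistics import fmean, pstdev


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def correlation_signature(tracks):
    """Pairwise correlations between the per-frame feature tracks of one clip.

    `tracks` maps a feature name (e.g. a facial-expression or head-pose
    measurement, assumed to be extracted elsewhere) to its per-frame values.
    """
    names = sorted(tracks)
    return [pearson(tracks[a], tracks[b]) for a, b in combinations(names, 2)]


def fit_profile(signatures):
    """Diagonal Gaussian (per-dimension mean, std) over authentic-clip signatures."""
    columns = list(zip(*signatures))
    return ([fmean(col) for col in columns],
            [max(pstdev(col), 1e-6) for col in columns])


def anomaly_score(signature, profile):
    """Mean squared z-score; large values mean the clip's mannerisms are atypical."""
    means, stds = profile
    return fmean([((s - m) / sd) ** 2 for s, m, sd in zip(signature, means, stds)])
```

In a synthetic check where authentic clips have strongly coupled features and fake clips do not, the fake clip’s score is orders of magnitude above an authentic clip’s. Because the profile is fit per person, this mirrors the per-leader models described above and leaves open the same generalization question.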

Although many good techniques exist to detect manipulation, Farid emphasized that progress is still needed in the near and long term. Many current techniques require a human in the loop, which does not scale. More automated processes are needed, as are faster approaches that can handle the large volume of available data. Improved accuracy is also desirable; he noted that 99 percent accuracy is actually terrible because it means that 1 out of every 100 fake videos on the Internet goes undetected. Secure-imaging pipelines, such as those developed by companies like Truepic,2 could help address this problem, Farid explained. Such technology could be especially useful for law enforcement agencies because it can prevent the manipulation of digital evidence. Farid foresees that options for secure and unsecure photography will eventually be built into camera apps. That approach still has vulnerabilities, such as a rebroadcast attack, in which a manipulated image is re-imaged to avoid detection, but the risk could be more manageable. Farid asserted that better cooperation from social media companies is needed to reduce deepfakes. He believes that social media platforms have been weaponized and that most have not been responsive enough to misinformation campaigns on their platforms. He added that citizens have to become more informed in the digital age and that guidelines are needed for the responsible deployment of technologies.
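The core idea of a secure-imaging pipeline can be sketched as authenticating the image and its capture metadata at the moment of capture, so that any later edit becomes detectable. This minimal sketch uses an HMAC from Python’s standard library for self-containment; it is an assumption for illustration, not how Truepic or any specific product works, and a deployed system would more plausibly use public-key signatures with keys held in secure hardware so that verifiers never need the secret. The function names and the key are hypothetical.

```python
import hashlib
import hmac


def capture_tag(image_bytes, metadata_bytes, device_key):
    """Tag computed at capture time over the pixels and the capture metadata.

    Hashing each part separately and combining fixed-length digests avoids
    ambiguity about where the image ends and the metadata begins.
    """
    combined = (hashlib.sha256(image_bytes).digest()
                + hashlib.sha256(metadata_bytes).digest())
    return hmac.new(device_key, combined, hashlib.sha256).hexdigest()


def verify_capture(image_bytes, metadata_bytes, device_key, tag):
    """True only if neither the pixels nor the metadata changed after capture."""
    expected = capture_tag(image_bytes, metadata_bytes, device_key)
    return hmac.compare_digest(expected, tag)
```

The tag proves only that the bytes are unchanged since capture. A rebroadcast attack, in which a manipulated image is photographed again, yields a validly tagged capture of fake content, which is consistent with Farid describing the risk as more manageable rather than eliminated.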

In response to an audience participant, Farid said that the spread of misinformation extends beyond a data ownership problem. U.S. citizens have to consider the appropriate balance between individual rights and societal harm, a balance on which perspectives vary across the world. For example, Germany enacted aggressive legislation in an attempt to stop the spread of misinformation. In response to a comment from Ruzena Bajcsy, University of California, Berkeley, Farid said that U.S. citizens have given up privacy on social media without thinking carefully about it and are now trying to backtrack on those decisions. He added that it is preposterous that the majority of Americans get their news from Facebook, a platform whose corporate interests may not align with its users’ interests. For these two reasons, it is crucial to better educate people. A workshop participant raised a question about the right approach to open-source code and research. Farid responded that he has started to question his beliefs about publishing work openly and suggested that holding back code is a good compromise because new technologies can be assimilated into generative adversarial networks quickly. An audience participant expressed concern about finding the right balance among so many competing objectives in maintaining the integrity of communication, while another cautioned that too much obscurity of information could leave us unable to see what our adversaries are doing.

___________________

2 For more information about the company Truepic, see https://truepic.com, accessed April 3, 2019.