Proceedings of a Workshop

IN BRIEF

September 2019

Artificial Intelligence: An International Dialogue

Proceedings of a Workshop—in Brief

On May 24, 2019, the National Academies of Sciences, Engineering, and Medicine, in partnership with the Royal Society, held a symposium entitled Artificial Intelligence: An International Dialogue in Washington, DC. The symposium addressed whether and how artificial intelligence (AI) would benefit from further international cooperation. It focused primarily on machine learning, including deep learning, an area of increasing attention and rapid technological advancement.

The event also summarized discussions at a day-and-a-half meeting on May 23-24, 2019,* convened by the National Academies and the Royal Society, at which 45 scientists, engineers, and other AI experts from the United States, United Kingdom, Canada, China, the European Commission, Germany, and Japan discussed key areas of national and international AI policy where international collaboration would be most beneficial. Among the topics addressed at that meeting and summarized at the symposium were the impacts of AI on the global economy and social cohesion, and the ethical and privacy considerations surrounding its development. Participants in the May 23 meeting also engaged in a broader dialogue on public and private institutional responses to this rapidly changing technology; the roles that governments, companies, and international institutions can play in its stewardship; and what concretely is needed to foster international cooperation.

The symposium was planned by a committee co-chaired by Ajay Agrawal, professor, University of Toronto, and Angela McLean, professor, University of Oxford. It included two keynote presentations on the history of AI, current and near-term capabilities of AI, and the U.S. government’s initiatives to advance AI. A panel of experts then provided a summary of discussions at the May 23 meeting. Comments from the audience sparked further discussion on the state of the technology and questions that remained to be answered, such as how to ensure that AI systems are human-centric.

KEYNOTE SPEAKERS

Jack Clark, policy director, OpenAI, summarized the recent history of AI and its near-term future. He described AI “as a phenomenon where machines can sense the world, observe imagery, and listen and compile observations into structured information.” AI can now be used in applications ranging from environmental preservation to answering spam telephone calls to assisting with medical diagnostics. It is progressing extremely rapidly as a result of five decades of federal and commercial support for research and development, algorithmic advances, the availability of enormous databases, an open-by-default research culture, and increasingly powerful computers. Indeed, he said, the past six years have seen an exponential increase in the computational capacity of large machines, as well as algorithmic innovation around the world. He illustrated the rapid growth in AI by describing improvements in synthetic imagery and language processing, both of which have widespread applications. In Clark’s view, areas in which there are shared incentives to collaborate, such as the safety, predictability, and robustness of AI, are ideal for international collaboration.

In a second keynote, Lynne Parker, assistant director for AI at the White House Office of Science and Technology Policy, described current and near-term capabilities of AI and the federal government’s efforts to advance AI. Parker began by noting that although there is no agreed-upon definition of AI, the John S. McCain National Defense Authorization Act for Fiscal Year 2019 defines AI to include, among other concepts, “an artificial system that performs tasks under varying and unpredictable circumstances without significant human oversight, or that can learn from experience and improve performance when exposed to data sets.”1 AI is a broad set of technologies that takes many forms, Parker said, including perception, natural language processing, machine learning, and robotics. Interest in AI has surged because of improved algorithms, increasing amounts of data, and faster computers that are well suited to deep learning. She noted that AI is transforming the economy and society, and that its applications can be used to improve quality of life and promote economic growth.

* The event also summarized discussions at another meeting convened on May 23 and the morning of May 24 at the National Academies.

Parker then discussed Executive Order 13859 on Maintaining American Leadership in Artificial Intelligence, issued on February 11, 2019.2 The Executive Order establishes an initiative to promote and protect national AI technology and innovation. It adopts a multi-pronged approach, including sustained investment in AI research and development, removing barriers to innovation (while upholding civil liberties, privacy, and American values), enhancing access to high-quality data and cyberinfrastructure, ensuring leadership in technical standards, providing education and workforce training, and protecting U.S. leadership and advancements in AI.

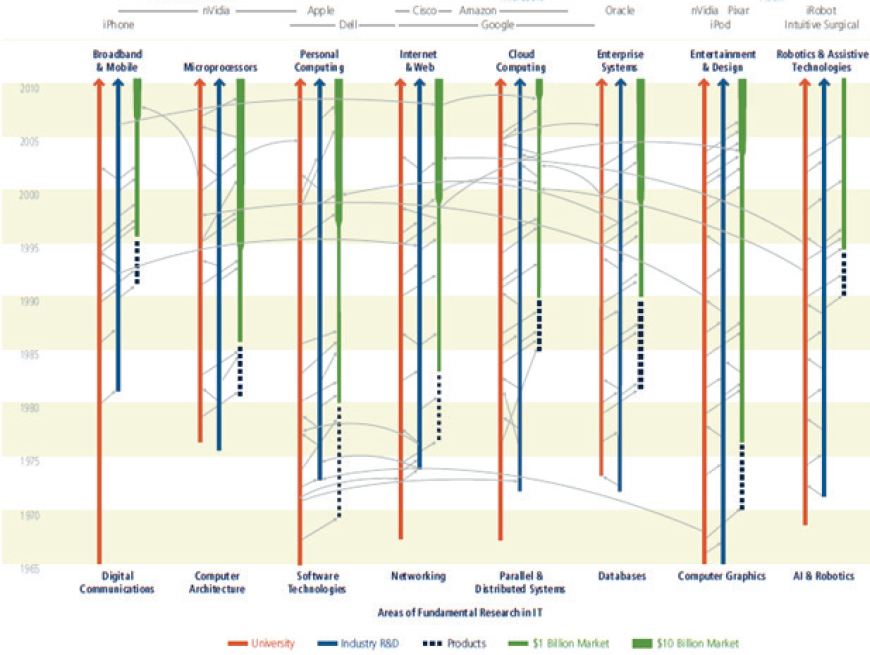

The federal government funds numerous AI projects, Parker said, in areas such as transportation, health care, manufacturing, ocean and environmental monitoring and mapping, and agriculture. Examples of AI policy development include U.S. Department of Transportation policy for integrating unmanned vehicles into the transportation system, a Federal Aviation Administration pilot program to accelerate the integration of drones into U.S. airspace, and improved Food and Drug Administration oversight of the marketing of AI-based tools for medical purposes. Other uses of AI include the National Oceanic and Atmospheric Administration’s use of drones and of surface and underwater vehicles to monitor fish stocks, map coastlines, and count protected marine species. Additionally, the U.S. Department of Agriculture invests in agricultural AI applications such as the removal of fruit stems. She cited a 2012 National Research Council report3 summarizing long-term research and development investments in information technology that have enabled AI advancements (Figure 1). A summary of federal government AI initiatives can be found at AI.gov.

Figure 1 source: National Research Council. 2012. Continuing Innovation in Information Technology. Washington, DC: The National Academies Press. https://doi.org/10.17226/13427.

___________________

1 Pub. L. 115-232. August 13, 2018.

2 See: https://www.whitehouse.gov/presidential-actions/executive-order-maintaining-american-leadership-artificial-intelligence.

3 National Research Council. 2012. Continuing Innovation in Information Technology. Washington, DC: The National Academies Press. https://doi.org/10.17226/13427.

While AI presents numerous opportunities, Parker noted that many challenges remain, including understanding how AI is being used, guarding against adversarial manipulation, assessing the risk of bias (fairness), proving the performance of AI systems (safety, security), and building AI that interacts well with human decision makers. Not all AI is the same, and some use cases pose more risk than others. A risk-based, use-case approach to AI governance is needed, she said, and the administration plans to issue a memo on this topic in the near future.

The Office of Management and Budget’s (OMB’s) Office of Information and Regulatory Affairs is drafting this memo to provide AI guidance to federal agencies. The guidance will help federal regulatory agencies develop and maintain regulatory and non-regulatory approaches for the safe and trustworthy creation and adoption of new AI technologies. A draft guidance memorandum will be published for public comment this summer, and the administration plans to finalize the memorandum later this year. Agencies will then have six months to develop plans to implement the OMB guidance.

In the area of international cooperation, Parker noted the administration’s active participation in the development of the Organization for Economic Cooperation and Development’s (OECD’s) Principles on Artificial Intelligence, issued on May 22, 2019.4 The OECD AI Principles provide high-level guidance for responsible stewardship of trustworthy AI and address inclusive and sustainable growth and well-being; human-centered values and fairness; transparency and explainability; robustness and safety; and accountability. The OECD is also establishing an AI Policy Observatory5 as a next step in sharing information.

PANEL SUMMARIZING MAY 23 MEETING DISCUSSION ON INTERNATIONAL COOPERATION IN AI SCIENCE AND POLICY

An expert panel summarized key discussion points from the May 23, 2019 meeting on AI convened by the National Academies and the Royal Society. The panel was moderated by Ajay Agrawal and Angela McLean, who served as co-chairs of the symposium planning committee. Panelists included Scott Stern, professor, Massachusetts Institute of Technology; Kate Crawford, professor, New York University; Henry Greely, professor, Stanford University; and Gillian Hadfield, professor, University of Toronto. Each panelist summarized key points that arose in one of the four sessions of the previous meeting: AI and the Global Economy; AI and Social Cohesion; AI, Privacy and Bias; and National and International Approaches, Institutions and Cooperation.

AI AND THE GLOBAL ECONOMY

Scott Stern provided a summary of key themes that arose from the earlier meeting’s session on AI and the Global Economy. Stern first summarized the session’s discussion on defining AI. Session speakers noted that AI is a general purpose technology (GPT) that can be applied across numerous areas and is moving very rapidly. GPTs are technologies that are used pervasively across a wide range of sectors and that reshape the economy.6 The steam engine, electricity, and computers are other examples of GPTs.

AI includes symbolic systems and robotics, but the biggest surprise has been recent advances in neural networks and deep learning. AI has a long history, Stern noted. The achievements of IMAGENET,7 an image database organized according to a hierarchy of English-language nouns, raised international awareness of the potential of AI and deep learning. Since IMAGENET’s launch in 2009, the number of academic papers on machine learning has increased. A component of AI that has dramatically improved, Stern added, is prediction, i.e., the ability of algorithms to learn from existing data, classify new data, and generalize decisions. Other key themes that Stern touched upon were the effects of AI on labor markets, global trade, product market competition, and innovation.

The overall impact of AI on the workforce is still unknown. Stern noted that some jobs will likely be replaced by AI, although it is unclear how much of the impact will take the form of job displacement rather than changes in required skills.8 In some cases, AI will augment human work to increase productivity rather than substitute for human labor. AI may also create demand for more labor in certain fields.

___________________

4 Organization for Economic Cooperation and Development, “OECD Principles on AI,” https://www.oecd.org/going-digital/ai/principles.

5 See: https://www.whitehouse.gov/presidential-actions/executive-order-maintaining-american-leadership-artificial-intelligence/

6 Bresnahan and Trajtenberg coined the term “general purpose technologies” in Bresnahan, T. F., and M. Trajtenberg. 1995. “General Purpose Technologies ‘Engines of Growth’?” Journal of Econometrics 65(1):83-108. In their view, GPTs have three characteristics: pervasiveness, improvement over time, and innovation spawning.

7 IMAGENET is an ongoing research effort to provide researchers with access to a large-scale visual database. Organized according to the WordNet hierarchy, it crowdsources its photo annotation process and runs the annual IMAGENET Large Scale Visual Recognition Challenges. See: http://image-net.org/about-overview.

8 Organization for Economic Cooperation and Development. 2018. Survey of Adult Skills (PIAAC), 2012, 2015.

Several speakers opined that the likely labor market impact of AI will be one of significant adaptation rather than immediate disruption. Some participants recommended additional research on the impact of AI on the future workforce.

Session discussions suggested that prospects for global trade agreements addressing regulation of AI may be limited. Past and pending World Trade Organization (WTO) cases indicate that countries have asserted, and may continue to assert, that their regulation of AI is covered by a national security exemption. In addition, some participants noted that differences in political systems, the potential for national rather than global markets for data, and a lack of trust could impede prospects for global trade agreements on AI. Nevertheless, the international scientific community, in both academia and industry, is actively collaborating on AI research.

Session speakers discussed whether AI would lead to natural monopolies for certain products, and whether it might reduce business dynamism in the United States and many OECD countries. They described a possible feedback loop: better data may lead to better predictions, which could give certain companies large market shares, which would in turn generate still better data and could foster monopolization in certain product markets. Stern noted that seven of the eight largest global companies are heavily involved in AI and that these companies are predominantly concentrated in the United States and China. This indicates that significant investments and resources are being devoted to AI innovation, but it raises the question of whether these investments should be characterized as trade or as a trade war.

AI is likely to become a GPT. Unlike other GPTs, however, AI is also likely to be a tool for the process of innovation itself. While patents and publications reflect significant global cooperation and competition in AI, there are few formal international mechanisms for establishing rules for data gathering and ethical application, or norms for collaboration and standardization.

One question raised was whether the World Intellectual Property Organization is sufficiently addressing international cooperation on intellectual property (IP) related to AI, especially because only human creations are eligible for IP protection.

AI AND SOCIAL COHESION

Kate Crawford, professor, New York University, summarized the May 23 meeting’s session on AI and social cohesion. The session centered on the social implications of AI tools, and some participants observed that AI systems can produce biased and discriminatory outcomes. AI can also be a force multiplier for wealth and power in a time of global inequality.

The session highlighted recent examples of AI systems at large high-technology companies that produced biased or discriminatory results. Not all AI systems are biased, but biased systems can have a significant impact in complex domains such as employment, criminal justice, and education. The challenge is how to protect civil rights as AI systems are deployed, and speakers noted that additional research on the topic is needed.

Session participants identified three potential ways to reduce biased or discriminatory impacts from AI systems:

- Algorithmic impact assessment frameworks are tools for assessing whether an AI system at an early stage of development produces bias or discrimination. International work is ongoing in this research field. Public agencies could deploy such assessments for AI systems in high-impact areas to identify disparate impacts.

- Algorithmic auditing aims to enable meaningful examination of an AI system while limiting disclosure of the audited entity’s trade secrets. It is currently an active area of research.

- Governments could notify the public when they plan to deploy AI systems, which would help researchers and journalists assess biased or discriminatory impacts.

On an international level, it would be beneficial for researchers and regulators to have access to AI systems so that they can test whether those systems are working fairly. Crawford noted that no standards currently exist in the AI field for assessing the impacts of systems. In her view, classifications or standards in areas such as training data and models, as well as retention policies for data sets, models, and systems, would be valuable to researchers.

In the past, institutions such as courts and the rule of law have safeguarded due process. However, comparably strong institutions for overseeing algorithmic systems do not yet exist. One session speaker posed the question of how best to develop professional, technical, and social standards for AI, asking how we could make AI serve as a democratic and civil tool rather than just an information technology. According to Crawford, community participation in AI system development and deployment is also important.

Crawford continued her summary of the session by describing a presentation on empirical research on computational propaganda in the United Kingdom and India. These research findings indicated that few regulatory mechanisms exist to prevent algorithmic manipulation of information in public debates.

Session participants noted the value of learning from the history of other GPTs, she said. They also indicated that regulatory approaches should focus on specific applications of AI rather than the general purpose technology itself, and that individuals with domain expertise need to participate in the development of the regulatory framework.

In closing, Crawford posed the question of what checks and balances could be used to prevent bias and discrimination in AI systems. She suggested that researchers and policy makers ask how technology could serve their vision for the world, rather than letting technology drive that vision. In terms of international cooperation, she suggested examining our responsibilities for the AI tools that we are currently building and finding ways to gain a deeper awareness of inequalities.

Pascale Fung, professor and director, Center for AI Research, Hong Kong University of Science and Technology, provided supplemental remarks. Fung emphasized the importance of international sharing of research and datasets in certain fields, such as medicine. She encouraged the use of AI tools to make tangible contributions to solving global challenges and benefiting humanity. For example, in the field of precision medicine, international sharing and aggregation of patient data could create large enough samples for machine learning.

AI, PRIVACY, AND BIAS

Henry Greely, professor, Stanford University, summarized the key themes from the May 23 meeting’s session on AI, privacy, and bias.

One session speaker pointed out that privacy used to be mainly a concern of celebrities, but now information is collected on everyone. There is evidence that Americans care more about privacy than they did previously, and some anecdotal evidence that the same may be true in China. While the right to privacy is generally considered important, there are also direct and indirect costs associated with privacy restrictions. Some regulatory standards could promote AI, while others could create barriers to market entry. There is limited information about the costs and benefits of different privacy and data protection regimes.

Session participants noted the benefits in learning from experiences with earlier technologies, such as biotechnology and nanotechnology, and public utilities such as electricity. One speaker asked, if data is the new oil and AI is the new electricity, what is the new pollution?

Addressing the legal, technical, and ethical implications of AI, a session speaker identified five main challenges: invasion of privacy, challenges to informed consent, personal profiling, re-identification, and discrimination and bias. Legal and technical solutions are therefore needed to address these challenges, he said. From a legal perspective, tiered regulations for the private sector might be useful. From a technological standpoint, privacy by design, federated learning, and data anonymization technologies may be helpful. From an ethical standpoint, AI should follow a human-oriented approach; that is, AI should be fair, reliable, comprehensive, and controllable. The session speaker suggested employing a combination of light-touch rules, mandatory rules, international law, and criminal law. Another speaker noted, as an example of the difficulty of understanding what is happening in AI, that there is currently no standard industry classification code for AI.

Another session speaker proposed a four-layer model for examining AI trust and transparency, consisting of perception (bottom layer), machine learning (second layer), decision (third layer), and action (top layer). Regarding gender bias, Greely noted that a session speaker cited an example from Google Translate in which translating the sentence “he is a nurse, she is a doctor” from English into another language and back into English yielded “she is a nurse, he is a doctor.” The speaker also discussed an Airbnb smart pricing algorithm that resulted in racial bias. The algorithm sets rental rates for Airbnb properties on behalf of landlords who are unsure what to charge. A study of the algorithm showed that it suggested lower rental rates for properties offered by African Americans than for properties offered by whites. Differences in the rate at which minority landlords adopted smart pricing mitigated, but did not eliminate, the racial bias.

Another session speaker noted that biased data lead to biased outputs, i.e., incorrect or unfair results. AI systems can produce biased results, but there is also human bias. When decision makers rely on AI systems, the shortened timeframe can leave less room for thoughtful reflection on, and healthy skepticism of, the results. In the case of AI profiling, some individuals believe that if any AI is used in the process, all of the results should be questioned.

The session also discussed possibilities for further international cooperation. Participants voiced a range of opinions on the importance of an international legal framework for cooperation on AI, and some believed that non-governmental entities could play an intermediary role.

Stuart Russell, professor, University of California, Berkeley, responded to a question from a symposium participant on the broader issue of artificial general intelligence (AGI). In Russell’s opinion, additional technological breakthroughs are still needed before machines can match the many aspects of human-level intelligence. AGI poses three important questions: (1) how to control AI systems that are more capable than human beings; (2) how to avoid deliberate misuse of general AI systems; and (3) how to avoid overuse of those systems so that humans continue to manage the world. In his view, international cooperation will be needed to address malicious use of this technology.

NATIONAL AND INTERNATIONAL APPROACHES, INSTITUTIONS AND COOPERATION

Gillian Hadfield, professor, University of Toronto, provided an overview of the May 23 meeting’s session on national and international approaches, institutions, and cooperation. The session addressed whether current legal and policy infrastructures were sufficient and where additional institutions or regulatory frameworks were needed to advance the development of AI or provide oversight.

Session participants expressed mixed views on whether existing international organizations could fully respond to trade and intellectual property issues. Speakers noted that although there are few formal international mechanisms for establishing rules for the ethical application of AI or norms for standardization, researchers and firms are collaborating internationally by co-authoring papers and patent applications and by forming research alliances. A globally diffuse and connected community of AI researchers works together, and there is an ethos of openness within this community. This serves as a tremendous global resource. Hadfield noted that the demographics of this community (predominantly male, trained in computer science, and based in the developed world) do not reflect the full range of stakeholders affected by AI.

Impersonation was recognized as a serious international challenge for AI. Several session participants stressed the importance of informing individuals when they are dealing with AI systems rather than human beings. Others expressed support for developing international norms, agreements, or treaties that would prohibit AI systems from impersonating humans.

Session speakers examined the processes for developing ethical principles for AI. The discussion focused on the potential benefits of engaging countries with cultural differences to identify shared values as a basis for future AI frameworks. Views differed on whether it is best to find common values as a foundation for international AI cooperation or to pursue AI cooperation regardless of shared values. Most participants supported continued building of science and policy networks, the development of metrics to measure and challenge the reliability of AI, and collaborative data projects for shared public benefit.

Participants in this session noted that intermediary institutions, such as companies and trusted third parties, could also play an important role in international collaboration in AI. They could, for example, serve as global watchdogs or conduct audits. Another suggestion was for more private organizations to participate in developing regulatory structures for AI with appropriate oversight by governments.

Some session participants also noted the important role for AI in science diplomacy. They suggested that the research community select a short-term question in a field such as health or the environment and use this experience to build an AI infrastructure and form a basis for future policy development.

Expanding on Hadfield’s comments, Jennifer Chayes, technical fellow and managing director, Microsoft Research New England, pointed out that although AI is unique in some respects, valuable lessons can be learned from the history of other disruptive technologies. She noted that the community currently working on AI is international. She supported the idea of the community selecting a particular initiative for AI cooperation, such as identifying markers for diseases, to advance the AI community and form the basis for future policy.

BROAD THEMES

In closing the symposium, Ajay Agrawal identified several broad themes regarding international cooperation in AI. The reach of AI technology is vast, affecting many companies, sectors, and consumers. In contrast, the number of producers of AI technologies is small. AI will also influence a wide range of customer services. AI technology is evolving rapidly and, based on past trends, its future development will be exponential rather than linear. Regulatory mechanisms and bodies at the national and international levels move at a much slower pace than the technology.

He then summarized three key challenges: (1) prioritizing the areas on which the international AI community should focus its attention; (2) focusing regulation on specific applications of AI rather than on AI itself, since AI is essentially computational statistics embedded in applications; and (3) mobilizing international cooperation in areas that maximize public benefit, such as health and education.

DISCLAIMER: This Proceedings of a Workshop—in Brief was prepared by Anita Eisenstadt, Gail Cohen, and Anne-Marie Mazza as a factual record of what occurred at the meeting. The statements made are those of the author or individual meeting participants and do not necessarily represent the views of all meeting participants, the planning committee, or the National Academies of Sciences, Engineering, and Medicine.

REVIEWERS: To ensure that it meets institutional standards for quality and objectivity, this Proceedings of a Workshop—in Brief was reviewed by Gina Neff, University of Oxford, and Aude Oliva, Massachusetts Institute of Technology. Marilyn Baker, National Academies of Sciences, Engineering, and Medicine, served as the review coordinator.

SPONSOR: This symposium was supported by the Alfred P. Sloan Foundation.

For additional information regarding the meeting, visit: http://sites.nationalacademies.org/pga/step/index.htm.

Suggested citation: National Academies of Sciences, Engineering, and Medicine. 2019. Artificial Intelligence: An International Dialogue: Proceedings of a Workshop—in Brief. Washington, DC: The National Academies Press. doi: https://doi.org/10.17226/25551.

Policy and Global Affairs

Copyright 2019 by the National Academy of Sciences. All rights reserved.