3

An Evidence Review and Evaluation Process to Inform Public Health Emergency Preparedness and Response Decision Making

The committee was charged with developing a methodology for and subsequently conducting a systematic review and evaluation of the evidence base for public health emergency preparedness and response (PHEPR) practices.1 Specifically, the committee was asked to establish a tiered grading scheme to be applied in assessing the strength or certainty of the evidence (COE)2 for specific PHEPR practices and in developing recommendations for evidence-based practices. This chapter describes the committee’s approach to developing a transparent process for making judgments about the evidence for cause-and-effect relationships and understanding the balance of benefits and harms of PHEPR practices.

The chapter begins with a discussion of the evolving philosophies regarding the identification of evidence-based practices, the challenges of evaluating interventions that are complex or implemented in complex systems, and the developing methodologies to address those complexity issues. It then describes the established evidence evaluation frameworks that informed the committee’s methodology. Next, the chapter details the key elements and approaches of the methodology developed and applied by the committee for reviewing and evaluating PHEPR evidence to inform decision making. Finally, the chapter concludes with lessons learned from the development and application of the committee’s methodology and recommendations for supporting ongoing efforts to build a cumulative evidence base for PHEPR.

___________________

1 The committee defined PHEPR practices as a type of process, structure, or intervention whose implementation is intended to mitigate the adverse effects (e.g., morbidity and mortality, economic impacts) of a public health emergency.

2 “Strength of evidence” and “certainty of the evidence” are often used interchangeably. While the committee’s charge used “strength of evidence,” the committee uses the phrase “certainty of the evidence” throughout this report (except when referring to the grading of qualitative evidence, for which the field-accepted term “confidence” is used). “Certainty of the evidence” can be defined in different ways, depending on the context in which the term will be used. For the purposes of making recommendations, it represents the extent of confidence that the estimates of an effect are adequate to support a particular recommendation or decision. When it is not possible or helpful to generate an estimate of effect size, the certainty of the evidence may reflect the confidence that there is a non-null effect (i.e., the intervention is effective) (Hultcrantz et al., 2017).

EVOLVING PHILOSOPHIES FOR EVALUATING EVIDENCE TO INFORM EVIDENCE-BASED PRACTICE: IMPLICATIONS FOR PHEPR

Systems for evaluating the evidence supporting given practices and interventions are a valued resource for practitioners, policy makers, and others who seek to use the best available evidence for decision making, but who lack the time, resources, or expertise needed to review and interpret a large and potentially inconsistent body of evidence. Moreover, the conduct of such reviews by reputable expert groups can increase the efficiency and consistency of the process. As discussed in Chapter 1, knowledge regarding evidence-based practice is critically needed in PHEPR given the mandate of the PHEPR system to mitigate the health, financial, and other impacts of public health emergencies. To date, however, there has been little effort to develop a rigorous and transparent process for identifying evidence-based PHEPR practices. The development of such a process requires an understanding of the methodological foundation for evidence-based practice, which continues to evolve to meet the evidentiary needs of more complex problems. The following sections describe this evolution and the implications for PHEPR given the complex nature of the PHEPR system, the kinds of questions that are of interest to PHEPR practitioners, and the volume and types of evidence that exist to answer those questions.

Limitations of the Traditional Evidence Hierarchy

Issues concerning how to reach conclusions about cause-and-effect relationships have long been deliberated in the fields of science and health. The foundation for the primacy of the experimental clinical trial in a hierarchy that ranks sources of evidence of effect, generally based on experimental design, and that underpins the rise of evidence-based medicine (EBM), dates back nearly 100 years (Fisher, 1925). This foundation has served the stakeholders in clinical care very well, as a plethora of advances in medicine have been shown to be beneficial in clinical trials (e.g., use of beta blockers following myocardial infarction, colorectal cancer screening in older adults), while other, once-popular interventions have been shown to be ineffective and hence discarded (e.g., extracranial–intracranial bypass to prevent stroke, laetrile to treat cancer). Following on the successes of the EBM model, the evidence hierarchy was subsequently applied in other fields (e.g., public health, education) to support evidence-based practice and policy (Boruch and Rui, 2008; Briss et al., 2000, 2004). In its broader application, however, and increasingly within clinical medicine as well, limitations of the traditional evidence hierarchy were recognized (Durrheim and Reingold, 2010). What works well when the intervention is an immunization or a medication may not work as well when evaluating a multicomponent quality improvement intervention or a systemic organizational change (Walshe, 2007). Notably, the application of EBM methods to research and reviews of public health practice has been challenging because, in addition to variation in effects across population groups and settings, the context in which an intervention is implemented can alter the intervention itself (as may be the case, for example, in organizational interventions) (Booth et al., 2019).
This context sensitivity makes it difficult to draw conclusions about the relevance of findings from an intervention studied in one set of circumstances to the use of the same intervention under different circumstances. The early application of evaluation methods in medicine focused primarily on achieving impact estimates with high internal validity, a goal that is better suited to well-controlled clinical settings and relatively homogeneous physiological systems than to public health (Green et al., 2017). Demonstrating effectiveness in a controlled setting is important, but so, too, is knowing the likelihood that the findings from a study or set of studies conducted in particular contexts would apply to other settings (Leviton, 2017).

Moreover, not all interventions and practices can be studied in the context of a randomized controlled trial (RCT), for practical and/or ethical reasons (WHO, 2015). For example, communities cannot be randomized and assigned to experience a public health emergency, and in many instances, best practices for emergency response have been developed over time and cannot ethically be replaced with a placebo or no response. Nor is it always necessary to conduct an RCT to evaluate whether a cause-and-effect relationship exists. Thus, it is useful to consider any evidence that provides credible estimates of a causal impact (or lack thereof) between an intervention and the outcome of interest.

In 1965, Sir Austin Bradford Hill proposed a set of factors to apply when assessing whether an observed epidemiologic association is likely to be causal in nature. These factors draw on evidence from multiple sources and include (1) the strength of an association; (2) the consistency of the association (i.e., replicability across different studies, settings, and populations); (3) the specificity of the association; (4) the temporality of the association (i.e., whether the hypothesized cause precedes the effect); (5) the existence of a biological gradient (i.e., observation of a dose–response relationship); (6) the plausibility of the causal mechanism; (7) the coherence of the data with other evidence; (8) the availability of supporting evidence from experiments; and (9) the analogy or similarity of the observed associations with any other associations (Hill, 1965). Together these factors make up one of the earliest frameworks for evaluating evidence to reach conclusions about causal effects, and it is still widely applied for the purposes of causal inference. Hill’s criteria, however, were proposed in the context of simple exposure–disease relationships and may be less directly applicable to the evaluation of cause-and-effect relationships for complex or system-level interventions.

Since Hill’s time, the concept of frameworks for evaluating evidence has received increasing attention, and numerous such frameworks have been developed. Importantly, however, some authorities, including Hill himself, have argued that a rigid application of evidence criteria cannot and should not replace a global assessment of the evidence by someone with skills and training in the subject matter and methods used (Hill, 1965; Phillips and Goodman, 2004).

Evolving Methods for Evaluating Complex Health Interventions in Complex Systems

As policy makers and practitioners have increasingly recognized the importance of having an evidence base to tackle complex challenges, there has been a growing movement among those who conduct systematic reviews and develop guidelines to embrace methods that take a complexity perspective and use multiple sources and types of evidence. Early efforts to overcome methodologic challenges related to evaluating evidence for complex, multicomponent, and community-level public health interventions were undertaken during the development of The Guide to Community Preventive Services (The Community Guide) (Truman et al., 2000). More recently, three seminal report series were published that address these complexity issues and informed the committee’s work: the Cochrane series on Considering Complexity in Systematic Reviews of Interventions, the Agency for Healthcare Research and Quality’s (AHRQ’s) series on Complex Intervention Systematic Reviews, and the World Health Organization’s (WHO’s) series on Complex Health Interventions in Complex Systems: Concepts and Methods for Evidence-Informed Health Decisions.3 It should be noted, however, that methods for evaluating complex interventions and systems represent an active area of ongoing development.

The complexity perspective reflects a shift away from a focus on simple, linear cause-and-effect models and has been used increasingly in the health sector, particularly in public health, to “explore the ways in which interactions between components of an intervention or system give rise to dynamic and emergent behaviors” (Petticrew et al., 2019, p. 1). Multiple dimensions of intervention complexity may be considered in the evaluation of evidence, including

- intervention complexity—for interventions with multiple, often interacting, components;

- pathway complexity—for interventions characterized by complicated and nonlinear causal pathways that may feature feedback loops, synergistic effects, and multiple mediators and/or moderators of effect;

- population complexity—for interventions that target multiple participants, groups, or organizational levels;

- contextual complexity—for interventions that are context-dependent and need to be tailored to local environments; and

- implementation complexity—for interventions that require multifaceted adoption, uptake, or integration strategies (Guise et al., 2017).

A complex intervention perspective is different from a complex system perspective, and the choice of which to adopt when conducting a review is appropriately determined by the needs of the policy makers and practitioners. A complex system perspective is appropriate when the focus is on the system and how it changes over time and interacts with and adapts in response to an intervention (Petticrew et al., 2019). In such cases, the objective of the review may shift from determining “what works” to understanding “what happens” and to formulating theories on how those effects are produced (Petticrew, 2015).

Addressing the issues of the complexity of an intervention, the details of the implementation process, and the context in which the intervention is implemented requires the adaptation of existing or the development of new frameworks for assessing evidence. Reviewers and guideline developers have been developing and testing novel quantitative, qualitative, and mixed methods for systematic reviews and evidence synthesis and grading to better capture complexity (Briss et al., 2000; Guise et al., 2017; Noyes et al., 2019; Petticrew et al., 2013a; Waters et al., 2011). The starting point for complex reviews is commonly to develop a logic model as the analytic framework that represents an intervention and how it works in the complex system in which it is implemented as the theoretical basis for subsequent reviews (Anderson et al., 2011; Rohwer et al., 2017). In addition to quantitative reviews of intervention effects using novel methods (Higgins et al., 2019), standalone qualitative evidence syntheses are particularly useful for gaining an understanding of intervention complexity, and of how various aspects of complexity affect the acceptability, feasibility, and implementation of interventions and the way they work in specific contexts with specific populations (Flemming et al., 2019). There exist approximately 20 different qualitative synthesis methods, some of which enable theory development. Given this wide choice of methods, the European Union recently published guidance on criteria to consider when choosing a qualitative evidence synthesis method for use in health technology assessments of complex interventions (Booth et al., 2016).

___________________

3 In 2013, the series Considering Complexity in Systematic Reviews of Interventions was published by the Cochrane Review in the Journal of Clinical Epidemiology. The series Complex Intervention Systematic Reviews, which was published in 2017 in the Journal of Clinical Epidemiology, resulted from an expert meeting convened by AHRQ. In 2019, WHO released the series Complex Health Interventions in Complex Systems: Concepts and Methods for Evidence-Informed Health Decisions, which was published in BMJ Global Health.

Additionally, review methods for complex interventions and systems have focused on the integration of diverse and heterogeneous types of evidence. Qualitative4 and quantitative evidence may both contribute to understanding an intervention or practice and ultimately what works, necessitating synthesis approaches that combine these different types of evidence (Noyes et al., 2019; Thomas and Harden, 2008). In some instances, guideline groups have synthesized across diverse evidence streams by mapping qualitative to quantitative findings or vice versa, so as to better understand the phenomenon of interest (Glenton et al., 2013; Harden et al., 2018; WHO, 2018). For example, to better understand how lay health worker programs work, and particularly how context affects implementation, Glenton and colleagues (2013) mapped findings on barriers and facilitators (mediators and moderators) from a qualitative evidence synthesis onto a causal model derived from a previously conducted quantitative effectiveness review. The authors suggest that this integrative synthesis approach may help decision makers better understand the elements that may promote program success. Realist review methods (a mixed-method approach) are also gaining traction as an alternative to the traditional positivist approach5 (Gordon, 2016), focused on explaining the interactions among context, mechanisms, and outcomes (Wong et al., 2013). Realist review methods yield an evidence-informed theory of how an intervention works. By helping to understand the intervention mechanisms and the contexts in which those mechanisms function, realist reviews can assist decision makers in judging whether an intervention is likely to be useful in their own context(s), considering context-specific tailoring, and determining whether an intervention is likely to scale (Berg and Nanavati, 2016; Greenhalgh et al., 2011; Pawson et al., 2005).

Implications for Evaluating Evidence in the PHEPR System

The evolving methods described above for the review and evaluation of interventions that are complex or implemented in complex systems are of particular relevance to the PHEPR context. As discussed in Chapter 2, the PHEPR system, with its multifaceted mission to prevent, protect against, quickly respond to, and recover from public health emergencies (Nelson et al., 2007b), is inherently complex and encompasses policies, organizations, and programs. This complexity also stems in part from the nature of public health emergencies, which are often unpredictable, may evolve rapidly, and are highly heterogeneous with respect to setting and type (e.g., weather events, disease outbreaks, terrorist events) (Hunter et al., 2013). Setting is not limited to geographic location, but also encompasses the sociocultural and demographic environment, as well as the characteristics of the communities and the responding entities (e.g., organizational structure, managerial experience, staff capabilities, social trust, and other resources). PHEPR practices themselves may also be complex, featuring multiple interacting components that target multiple levels (e.g., individual, population, system), and with implementation that is often tailored to local conditions (Carbone and Thomas, 2018).

___________________

4 While there is general understanding of quantitative evidence as numerical data derived from quantitative measurements, misconceptions regarding what constitutes qualitative research and qualitative evidence are common. Qualitative research uses “qualitative methods of data collection and analysis to produce a narrative understanding of the phenomena of interest. Qualitative methods of data collection may include, for example, interviews, focus groups, observations and analysis of documents” (Noyes et al., 2019, p. 2). Qualitative evidence can also be extracted, for example, from free-text boxes in questionnaires, but this type of qualitative data tends to be less useful as it is thin and lacks context. A questionnaire survey would not, however, be considered a qualitative research study.

5 A positivist approach is anchored in a paradigm that assumes there is an objective truth that can be discovered through empirical evidence derived from quantitative enquiry (Ward et al., 2015).

The questions prioritized by PHEPR stakeholders are not limited to the effectiveness of policies and practices as measured by their effects on health and system outcomes. PHEPR practitioners have identified important knowledge gaps related to implementation, such as understanding the barriers to using information-sharing systems to share data between and among states and localities (Siegfried et al., 2017) and knowing when an emergency operations center (EOC) should be activated. Addressing this wide range of operations-related questions requires assessing evidence beyond that generated through RCTs and other quantitative impact studies: evidence from qualitative studies and other sources is needed to supplement that from quantitative studies to illuminate the “hows” and “whys” in complex systems (Bate et al., 2008; Greenhalgh et al., 2004; Hohmann and Shear, 2002; Petticrew, 2015).

A considerable challenge when reviewing evidence to determine the effectiveness of PHEPR practices and implementation strategies relates to the often indirect links between the practices and primary health outcomes (e.g., morbidity and mortality) (Nelson et al., 2007a). Simple one-to-one linear cause-and-effect relationships between PHEPR practices and outcomes are the exception rather than the rule. In most circumstances, multiple pathways link practices to outcomes. Intermediate outcomes that reflect the array of potential harms and benefits may be organizational or operational, and the balance of benefits and harms is influenced by the various stakeholders’ values and perceptions regarding feasibility and acceptability. Moreover, multiple interacting interventions are often implemented simultaneously, making it difficult to assess the effect of each in isolation and their additive effects, and to distinguish those that are necessary from those that are sufficient, or at least contributory, for any given event (Nelson et al., 2007a). For example, a suite of non-pharmaceutical interventions, including isolation of sick patients, quarantine of contacts, and school closures, may be implemented simultaneously during an epidemic to reduce transmission and morbidity, making the effect of any one intervention difficult to measure. Moreover, for some PHEPR practices, it may be that there is no true effect that is replicable, as effects may be inextricable from the contexts in which a practice is implemented (Walshe, 2007). This way of thinking is a departure from most EBM, which assumes there is an underlying true effect of measurable size. In such cases, traditional evidence evaluation frameworks based on a positivist approach may not be well suited to addressing the review question(s) at hand. 
Questions about when and in what circumstances such practices as activating public health emergency operations are effective, for example, may be better assessed using the realist approach described above.

The PHEPR system draws on a wide range of evidence types, from RCTs to after action reports (AARs),6 and the approach to evaluating the evidence needs to reflect that diversity. In addition to research-based evidence, both quantitative and qualitative, it is important for the approach to make use of experiential evidence from past response scenarios, which offers the potential for validation of research findings in practice settings, as well as improved understanding of context effects, trade-offs, and the range of implementation approaches or components for a given practice.

___________________

6 AARs are documents created by public health authorities and other response organizations following an emergency or exercise, primarily for the purposes of quality improvement (Savoia et al., 2012). They contain narrative descriptions of what was done, but may also contain “lessons learned” (i.e., what was perceived to work well and not well) and recommendations for future responses.

Finally, public health interventions often lie at the intersection of science, policy, and politics, which means that decision-making processes around implementation need to reflect not only scientific evidence but also information related to social and legal norms, ethical values, and variable individual and community preferences. Accordingly, any systematic review of the evidence necessary to make informed decisions related to PHEPR needs also to include an explicit assessment of underlying ethical, legal, and social considerations.

To inform its methodology, the committee began by reviewing existing frameworks for evaluating different sources and types of evidence, both in health care and in other areas in which experimental clinical trials may be impossible or impractical (such as aviation safety), to determine their potential to accommodate the diverse PHEPR evidence base and questions of interest to PHEPR stakeholders. These existing frameworks are described below.

HOW DO DIFFERENT FIELDS EVALUATE EVIDENCE?: A REVIEW OF EXISTING FRAMEWORKS

The charge to the committee specified that in developing its methodology, the committee should draw on accepted scientific approaches and existing models for synthesizing and assessing the strength of evidence. Thus, the committee reviewed the published literature and held a 1-day public workshop on evidence evaluation frameworks used in health and nonhealth fields. (This workshop is reported separately in a Proceedings of a Workshop—in Brief [see Appendix E].7) During the public workshop, the committee also heard from experts on how evidence is assessed in other areas of policy, such as transportation safety and aerospace medicine, where making decisions about cause and effect is crucial for safety but conducting randomized trials, or even concurrently controlled experimental studies, is in most cases impractical. The models for evidence evaluation reviewed and considered by the committee are summarized in Table 3-1.8

For each approach, the committee identified some aspects relevant to a framework for PHEPR evidence evaluation. For example, the framework used by the What Works Clearinghouse (WWC) practice guides includes a mechanism for drawing on the real-world experience of experts to inform recommendations while making clear the limitations of such evidence (WWC, 2020), which the committee thought would be applicable to evaluating evidence from AARs and integrating PHEPR practitioner input. Additionally, the user-oriented presentation of information in the WWC practice guides and the inclusion of implementation guidance were of interest given practitioners’ emphasis on the importance of translation and implementation issues in PHEPR. For these reasons, the committee also carefully considered the Clearinghouse for Labor Evaluation and Research (CLEAR) approach to evaluating implementation studies.

The committee considered the causal chain of evidence approach, which employs analytic frameworks and is used by several groups, including the U.S. Preventive Services Task Force (USPSTF), the Community Preventive Services Task Force (CPSTF), and the Evaluation of Genomic Applications in Practice and Prevention (EGAPP), to be particularly relevant to PHEPR, as it was expected that there would be few, if any, studies that would provide direct evidence demonstrating the effect of a PHEPR practice on morbidity or mortality following a public health emergency. Instead, in most cases, evidence from across a chain of intermediate outcomes would need to be linked together to reach health and other downstream outcomes. Analytic frameworks (examples of which can be found in Chapters 4–7) depict the hypothesized links between an intervention/practice and intermediate and health or other final outcomes. They also provide a conceptual approach for evaluating interventions, guiding the search and analysis of evidence (Briss et al., 2000).

___________________

7 Available at https://www.nap.edu/catalog/25510 (accessed November 7, 2019).

8 This table is not intended to serve as an exhaustive list of all existing evidence evaluation frameworks. The committee’s objective was not to review every published framework but to understand the breadth of approaches in use across diverse fields and their potential application to PHEPR.

TABLE 3-1 Examples of Evidence Evaluation Frameworks Reviewed by the Committee

| Field | Evaluation Framework or Approach | Brief Description |
|---|---|---|
| Education | What Works Clearinghouse (WWC) | The Institute of Education Sciences founded the WWC to provide consistent methods for evaluating interventions, policies, and programs in education. The WWC has published standards, which vary by experimental design, for studies used to determine the strength of evidence.* The WWC publishes two kinds of products: intervention reports, which evaluate the effectiveness of an intervention based on studies that meet the WWC standards, and practice guides. The latter, which draw on expert input in addition to published evidence, are designed to serve as user-friendly guides for educators and provide recommendations on effective education practices, as well as implementation guidance (WWC, 2017a,b). |
| Labor | Clearinghouse for Labor Evaluation and Research (CLEAR) | The U.S. Department of Labor’s clearinghouse adopted and adapted the WWC’s methods to summarize research on topics relevant to labor, such as apprenticeships, workplace discrimination prevention, and employment strategies for low-income adults. Findings from the evidence reviews are made accessible through the agency’s clearinghouse to inform decision making. Individual studies are reviewed and assigned a rating for the strength of causal evidence. Synthesis reports evaluate the body of evidence from only those studies within a given topic area that achieved high or moderate causal evidence ratings and do not make recommendations (CLEAR, 2014, 2015). |
| Transportation | Countermeasures That Work | The National Highway Traffic Safety Administration publishes Countermeasures That Work periodically to inform state highway safety officials and help them select evidence-based countermeasures for traffic safety problems, such as interventions to reduce alcohol-impaired driving. The guide, which does not use a transparent evidence evaluation framework, reports on effectiveness, cost, how widely a countermeasure has been adopted, and how long it takes to implement (Richard et al., 2018). |
| Transportation | National Transportation Safety Board (NTSB) Accident Reports | NTSB investigates aviation and other transportation accidents, reaching conclusions about causes and making safety recommendations, which are detailed in its accident reports (NTSB, 2020). Investigators identify probable causes and make recommendations based on mechanistic reasoning (e.g., theories of action based on knowledge regarding physics or chemical properties of materials), modeling, logic, expert opinion, and after action reporting from those involved in an incident. |
| Aerospace Medicine | National Aeronautics and Space Administration (NASA) Integrated Medical Model | NASA needs to predict and prepare for health issues that arise in space, but conducting experimental studies in this area is often infeasible for a number of logistical and ethical reasons. To overcome that barrier, empirical evidence from past experiences with space travel is integrated with a variety of other evidence sources, including longitudinal studies of astronaut health, evidence from analogous contexts (e.g., submarines), and expert opinion, in a complex simulation model that informs decision making. Each parameter in the model may be adjusted, which allows for analysis of a wide range of decisions (Minard et al., 2011). |
| Health Care and Public Health | U.S. Preventive Services Task Force (USPSTF) | The Agency for Healthcare Research and Quality convenes USPSTF to review evidence and make recommendations on evidence-based practices for clinical preventive services (e.g., screening tests, preventive medications). USPSTF draws on evidence summaries from systematic reviews, which are conducted by evidence-based practice centers, to determine the effectiveness of a service based on the balance of potential benefits and harms. Graded recommendations are made based on the certainty of net benefit (USPSTF, 2015). |
| Health Care and Public Health | Community Preventive Services Task Force (CPSTF) | CPSTF is a Centers for Disease Control and Prevention (CDC)-supported task force that reviews the evidence base for community preventive services and programs aimed at improving population health. Its findings and recommendations are published in The Guide to Community Preventive Services (The Community Guide). CPSTF developed its own methodology for evaluating and assessing the quality of individual studies and bodies of evidence. Because randomized controlled trials (RCTs) are often difficult to conduct for public health interventions, The Community Guide does not automatically downgrade the strength of evidence from non-RCT designs, but considers the suitability of the study design and the quality of execution for each study included in the body of evidence. CPSTF also considers the applicability of the evidence (e.g., to different populations and settings) in developing its recommendations (Briss et al., 2000; Zaza et al., 2000b). |
| Health Care and Public Health | Grading of Recommendations Assessment, Development and Evaluation (GRADE) and GRADE-Confidence in the Evidence from Reviews of Qualitative Research (GRADE-CERQual) | GRADE is a method used to evaluate bodies of evidence to assess the certainty of the evidence (COE), up- and/or downgrading COE based on eight defined domains. In contrast to most other frameworks, GRADE does not set explicit quality standards for study inclusion, but instead adjusts the COE based on the quality and risk of bias of studies included in the analysis. GRADE also utilizes an Evidence to Decision framework for making transparent, evidence-based recommendations in the form of guidelines, considering evidence beyond that related to effect (e.g., feasibility, acceptability). Many international review and guideline groups use GRADE, and the methods are continually updated. Recently, GRADE was adapted for the assessment of qualitative evidence (GRADE-CERQual) (Guyatt et al., 2011a; Lewin et al., 2015). |
| Health Care and Public Health | Evaluation of Genomic Applications in Practice and Prevention (EGAPP) | EGAPP, a CDC initiative, published guidelines on evidence-based processes for genetic testing and implementation in clinical practice. To generate an overall strength-of-evidence rating for a body of evidence, the EGAPP methods use different hierarchies of data sources and study designs for three distinct components of the evaluation (analytic validity, clinical validity, and clinical utility), thereby explicitly linking different evidence types to questions they are well suited to answering. EGAPP methods also consider the ethical, legal, and social implications of the genetic tests (Teutsch et al., 2009). |

* While the committee uses the term “certainty of the evidence” throughout the report, some frameworks report on “strength of evidence.” The summaries in this table reflect the specific terminology used in each framework.

The committee considered the framework developed and continually updated by the Grading of Recommendations Assessment, Development and Evaluation (GRADE) group to be most applicable to those kinds of PHEPR practices for which a biomedical focus is most relevant. Examples of such practices include quarantine or the use of potassium iodide for radiological incidents. The committee’s approach was also informed by a 2018 WHO report containing guidelines for emergency risk communication, which provided a timely example of how GRADE might be adapted and used in conjunction with GRADE-Confidence in the Evidence from Reviews of Qualitative Research (GRADE-CERQual) to evaluate evidence and develop recommendations on a wider range of PHEPR practices (WHO, 2018). The 2018 WHO report presents a model for synthesizing and grading evidence from quantitative and qualitative research studies, and includes guidance on inclusion of such other evidence streams as case reports and gray literature reports with similarity to AARs (e.g., governmental and nongovernmental reports containing lessons learned and improvement plans).

Although the National Transportation Safety Board (NTSB) does not rely on explicit evidence evaluation frameworks, the committee believed that organization’s use of mechanistic evidence to determine the cause of an aviation disaster was relevant to the evaluation of evidence to support decision making in PHEPR. NTSB’s investigation into the cause of the midair explosion of TWA Flight 800 illustrates that process. For example, an examination of the direction in which metal from the fuselage was bent and knowledge of the physics of explosions contributed to a conclusion that the explosion happened within the plane (rather than originating outside the plane, as in the case of a missile) (Marcus, 2018). This conclusion did not depend on a hypothesis-testing study with statistical tests for differences between what was observed and an alternative. This same kind of reasoning has been used to explain why one can have confidence that parachutes are better than uninhibited free fall when jumping out of a plane: it is known from physics that the rate of descent of an object dropped from the sky is slowed by the drag resistance of air, and that a parachute increases that drag such that with a big enough parachute, the descent of a 200-pound man can be slowed sufficiently for him to survive the fall.

For the purposes of this report, the committee defined mechanistic evidence as evidence that denotes relationships for which causality has been established, generally within other scientific fields such as chemistry, biology, economics, and physics (e.g., the accelerating effect of the gravitational attraction of Earth and the slowing effect of air resistance), and that can reasonably be applied to the PHEPR context through mechanistic reasoning, defined in turn as “the inference from mechanisms to claims that an intervention produced” an outcome (Howick et al., 2010, p. 434). For some interventions, such as the placement of auxiliary power units in hospitals at heights above expected water levels in the event of flooding, mechanistic evidence may be a significant contributor to decision making. Such evidence has not traditionally been incorporated into evidence evaluation frameworks, although processes for integrating biological mechanisms with more traditional evidence sources (e.g., data from clinical trials or epidemiological studies) have been developed (Goodman and Gerson, 2013; Rooney et al., 2014) and applied, for example, in systematic reviews of the toxicological effects of exposures (NASEM, 2017). The use of mechanistic evidence, however, can be seen as incorporating principles of a realist approach to evidence synthesis (discussed earlier in this chapter), for which it is established practice to develop theories of how an intervention works and to use diverse types of evidence to explore interactions among context, mechanisms, and outcomes to better understand causal pathways.

Also of interest to the committee was the National Aeronautics and Space Administration’s (NASA’s) use of modeling to understand the trade-offs among different decisions constrained by the weight and volume limitations of a space capsule. Decision models such as the Integrated Medical Model used by NASA may have utility for considering practices, such as quarantine, for which the consequences of trade-offs can be modeled in advance of having to make decisions during emergencies. Although the development of such models was beyond the scope of this study, methods for integrating evidence from existing model-based analyses with empirical evidence were examined. It should be noted, however, that this is a nascent area of methodological development (CDC, 2018a; USPSTF, 2016).

From its review of the literature and discussions with experts, the committee concluded that none of the evidence evaluation frameworks it reviewed were sufficiently flexible, by themselves, to be universally applicable to all the questions of interest to PHEPR practitioners and researchers without adaptation. Furthermore, no one framework was ideally suited to the context-sensitive nature of PHEPR practices and the diversity of evidence types and outcomes of interest, many of which are at the organizational or systems level and thus often difficult to measure. Therefore, the committee developed a mixed-method synthesis methodology9 that draws on (and in some cases adapts) those elements of existing frameworks and approaches that the committee concluded were most applicable to PHEPR. As a starting point, the committee adopted the analytic frameworks from CPSTF and USPSTF and the GRADE evidence evaluation and Evidence to Decision (EtD) frameworks (see Box 3-1), while allowing sufficient flexibility to bring in other evidence types (e.g., mechanistic, experience-based, and qualitative) that are not accommodated by the traditional GRADE approach to the assessment of certainty in quantitative evidence. This approach allowed the committee to use the appropriate methodology to answer different types of questions of interest to PHEPR stakeholders. The development of this methodology and its application to the evaluation of evidence for four exemplar PHEPR review topics were undertaken in parallel using a highly iterative process, the steps of which are described in the sections below.

APPLYING A METHODOLOGY TO REVIEW, SYNTHESIZE, AND ASSESS THE COE FOR PHEPR PRACTICES

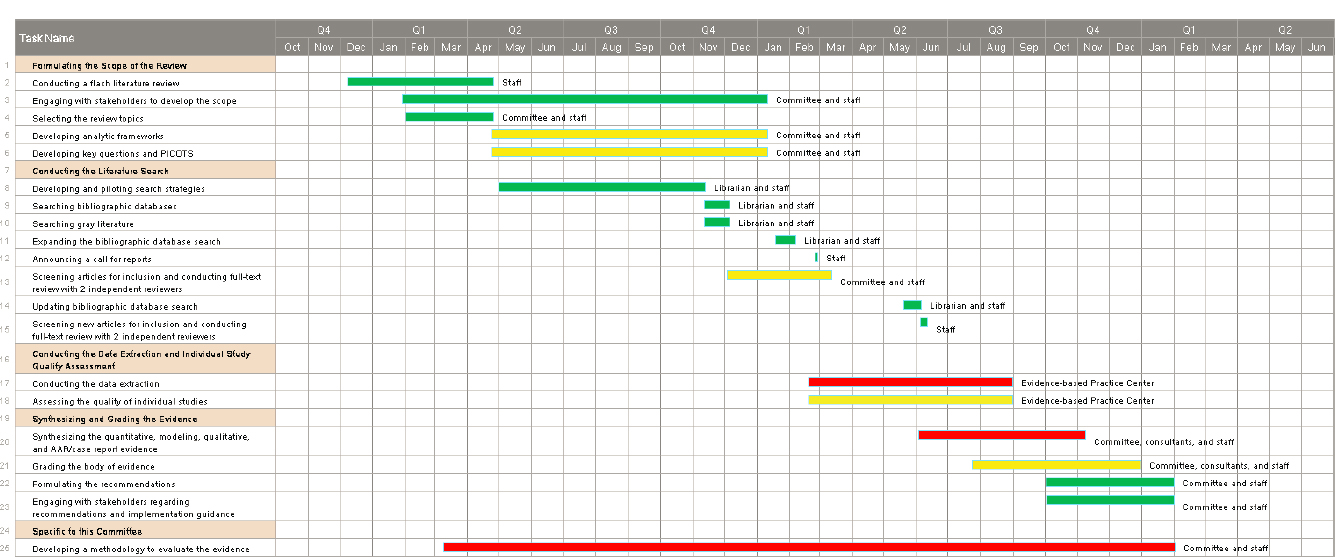

This section outlines the key elements and approaches of the methodology developed and applied by the committee for reviewing and evaluating PHEPR evidence to inform decision making (summarized in Box 3-2). This description is intended to inform future PHEPR evidence reviews and to serve as a foundation for future improvements and modifications to the PHEPR review methodology needed to promote its long-term sustainability.

The sections below briefly describe the committee’s approach to

- formulating the scope of the review and searching the literature,

- synthesizing and assessing the certainty of the evidence, and

- formulating the practice recommendations and implementation guidance.

To allow for a more comprehensive description of the committee’s processes for synthesizing the evidence, grading the evidence, and developing recommendations in this chapter, the relatively standard steps of the systematic review process (formulating the scope of the reviews, searching the literature, inclusion and exclusion, and quality assessment) are only briefly mentioned herein but are described in more detail in Appendix A.

___________________

9 A mixed-method synthesis approach involves the integration of quantitative, mixed-method, and qualitative evidence in a single review (Petticrew et al., 2013b).

| BOX 3-2 | STEPS IN THE COMMITTEE’S PHEPR EVIDENCE REVIEW AND EVALUATION METHODOLOGY |

- Select the review topic, considering published literature on gaps and priorities and stakeholder input.

- Develop the analytic framework and key review questions.

- Conduct a search of the peer-reviewed and gray literature and solicit papers from stakeholders.

- Apply inclusion and exclusion criteria.

- Separate evidence into methodological streams (quantitative studies, including comparative, noncomparative, and modeling studies and descriptive surveys; qualitative studies; after action reports; and case reports) and extract data.

- Apply and adapt as needed existing tools for quality assessment of individual studies based on study design.

- Synthesize the body of evidence within methodological streams and apply an appropriate grading framework (Grading of Recommendations Assessment, Development and Evaluation [GRADE] for the body of quantitative research studies and GRADE-Confidence in the Evidence from Reviews of Qualitative Research for the body of qualitative studies to assess the certainty of the evidence [COE] and confidence in the findings, respectively).

- Consider evidence of effect from other streams (e.g., modeling, mechanistic, qualitative evidence) and support for or discordance with findings from quantitative research studies to determine the final COE.

- Integrate evidence from across methodological streams to populate the PHEPR Evidence to Decision framework and to identify implementation considerations.

- Develop practice recommendations and/or implementation guidance.

Formulating the Scope of the Reviews and Searching the Literature

The committee was charged with developing and applying criteria for the selection of PHEPR practices on which it could apply its systematic review methodology to assess the evidence of effectiveness. Rather than a sequential approach that would involve developing the evidence review and evaluation methodology in the abstract and then applying it to the PHEPR practices selected for review, the committee judged that it would be more fruitful to develop the methodology and test it on the selected PHEPR topics simultaneously. This approach was intended to result in a methodology that would be applicable across a range of different practices for which the evidence base would be expected to differ in nature. As a first step, the committee needed to select a set of review topics that would be illustrative of the diversity of PHEPR practices.

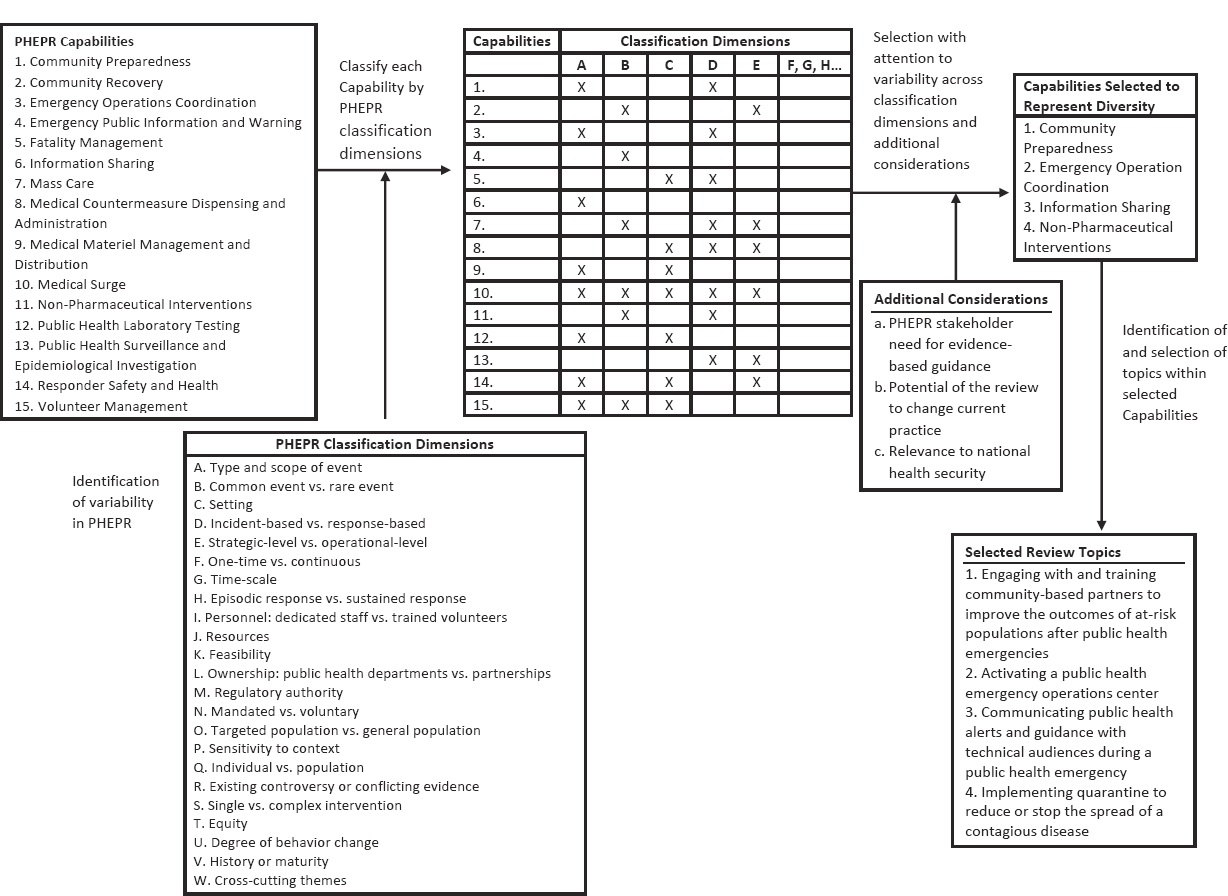

Consistent with its charge, the committee started its topic selection process with a list of the Centers for Disease Control and Prevention’s (CDC’s) 15 PHEPR Capabilities (CDC, 2018b) and developed criteria for prioritizing the Capabilities to select specific PHEPR practices. In considering its selection criteria, the committee sought to select test cases that would capture the expected diversity of the evidence base for various PHEPR practices resulting from different research and evaluation methodologies, as well as variability in practice characteristics. Such characteristics were defined as classification dimensions and included, for example, the type and scope of event in which a practice is implemented, the practice setting, whether the practice is complex or simple, whether it is under the direct purview of public health agencies, and whether it is preparedness or response oriented. The committee applied the classification dimensions to each PHEPR Capability to identify a set of Capabilities that were diverse with respect to those variables (see Figure 3-1). In addition to such diversity, the committee considered as criteria for selection of review topics the current needs for evidence-based guidance among key stakeholders, the potential of the review to change practice, and the relevance of a topic to national health security.10 The committee engaged with stakeholders (PHEPR practitioners and policy makers) to inform topic selection and referred to published literature that identifies practitioners’ research needs. Applying this approach, the committee, in consultation with PHEPR practitioners, selected the following four practices as topics for review:

- engaging with and training community-based partners to improve the outcomes of at-risk populations after public health emergencies (falls under Capability 1, Community Preparedness);

- activating a public health emergency operations center (Capability 3, Emergency Operations Coordination [EOC]);

- communicating public health alerts and guidance with technical audiences during a public health emergency (Capability 6, Information Sharing); and

- implementing quarantine to reduce or stop the spread of a contagious disease (Capability 11, Non-Pharmaceutical Interventions).

This chapter describes the application of the committee’s evidence review and evaluation methodology to these four review topics; the details of the review findings for each topic are presented in Chapters 4–7.

The next steps, standard practice for most systematic reviews and described in more detail in Appendix A, included the development of analytic frameworks and the identification of key questions11 for each topic area to further define the scope of the reviews; the development and execution of a comprehensive search of the peer-reviewed and gray literature; and the screening of titles, abstracts, and full-text articles by two reviewers to identify articles meeting the committee’s inclusion criteria. Of note, determining the eligibility of studies required iterative discussions as the review methods, the scope of the four topics, and the outcomes used to assess effectiveness were refined over time. The analytic frameworks and the key questions were reviewed and informed by a panel of PHEPR practitioners serving as consultants to the committee (the processes for appointing the panel of PHEPR practitioner consultants and for developing the analytic frameworks and key questions are described in Appendix A).

___________________

10 As noted earlier in this report, the review topics were selected prior to the COVID-19 pandemic.

11 Key questions define the objective of an evidence review. In some guideline development processes (e.g., that of USPSTF), each linkage depicted on the analytic framework (between intervention and outcome or between two outcomes) is represented with a separate key question. The committee did not develop a separate key question for each linkage in the analytic frameworks, but instead defined an overarching review question that guided the review process, along with sub-questions of interest generally related to benefits and harms, as well as barriers and facilitators.

Synthesizing and Assessing the COE

To maximize the efficiency of the evidence review and evaluation process for each review topic, different component steps, described in the sections that follow, were commissioned to outside groups and individuals with the appropriate expertise. The initial classification of studies and the data abstraction and quality assessment for quantitative studies (except modeling studies) were performed by the Center for Evidence Synthesis in Health, an AHRQ-funded evidence-based practice center (EPC) at Brown University. The quality assessment and synthesis of qualitative studies were conducted by a commissioned team at Wayne State University. The evaluation and synthesis of selected modeling studies were performed by a modeling expert at Stanford University, and the evaluation and synthesis of AARs and case reports were conducted by a PHEPR expert in evaluation at Columbia University.

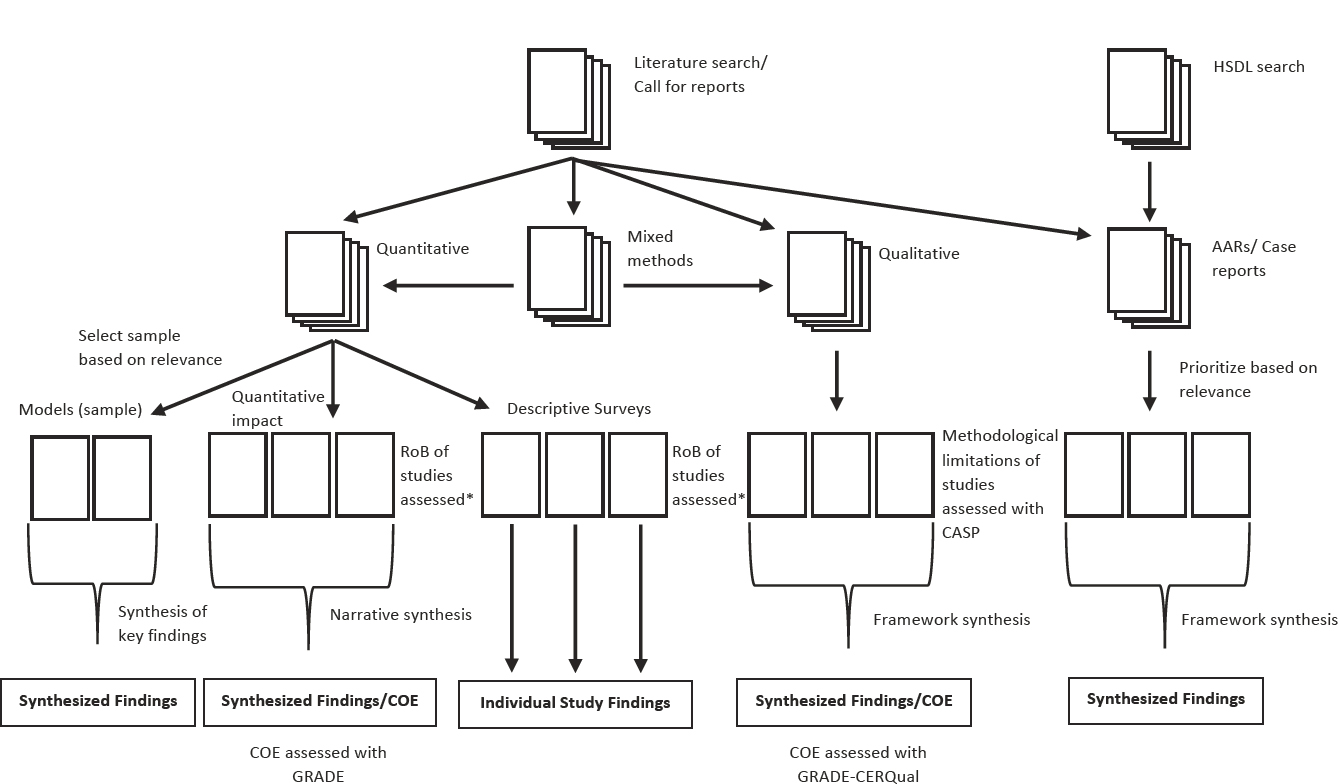

Classification of Studies into Methodological Streams

An overview of the evidence classification process is presented in Figure 3-2, from the point where the studies for inclusion had been identified. The evidence for the four PHEPR test cases was classified into the following categories: quantitative studies, qualitative studies, mixed-method studies, and case reports and AARs. These categories were defined by the methods employed rather than the subject of investigation, and thus encompassed the full range of evaluative studies (e.g., systems research and quality improvement studies in addition to more traditional impact studies). Mixed-method studies could be used in both quantitative and qualitative evidence syntheses, as depicted in Figure 3-2. The committee determined that no single method could be applied across these different types of evidence, and therefore describes later in this chapter separate processes for evaluating the quality and strength of each type.

Quantitative studies

Quantitative studies included articles and reports with quantitative results from the evaluation of a PHEPR practice. This included quantitative comparative studies, for which there was an explicit comparison of two or more groups (or one group at two or more time points) to assess whether they were similar or different, usually with a statistical test, as well as quantitative noncomparative studies (i.e., studies that provided only postintervention results, such as posttraining knowledge scores). Modeling studies were treated as a subset of quantitative studies, as were surveys, which were further classified on the basis of the method and questions asked. Surveys that did not include an evaluation of a practice during or following a public health emergency were categorized as descriptive surveys and were not used in the evaluation of effectiveness (but could be used, for example, to populate the EtD framework or to inform implementation considerations). Modeling studies were identified only for the evidence review on quarantine. Given the diversity of purposes of the modeling studies captured in the review, the committee opted to perform an in-depth assessment of a selected group of models judged to be highly relevant. Twelve modeling studies were selected for detailed analysis based on an assessment of their modeling techniques, data sources, relevance to key review questions, potential implications for public health practice, and disease condition studied. Following a review and assessment of the selected models (described below), a commissioned modeling expert conducted a narrative synthesis of the findings of the models, with attention to common results and themes related to the circumstances in which quarantine was effective. Many other modeling studies have been conducted and may have important findings relevant to the use of quarantine; however, a detailed analysis of a representative subset was pursued based on the resources available for the study.

NOTE: AAR = after action report; CASP = Critical Appraisal Skills Programme; COE = certainty of the evidence; GRADE = Grading of Recommendations Assessment, Development and Evaluation; HSDL = Homeland Security Digital Library; RoB = risk of bias.

* Risk of bias assessment tools were developed by adapting existing tools and/or published methods.

Qualitative studies

Studies were classified as qualitative if they explicitly described the use of qualitative research methods, such as interviews, focus groups, or ethnographic research, and used an accepted method for qualitative analysis (Miles et al., 2014). If studies did not report the application of qualitative research methods but nonetheless collected some qualitative data, they were generally classified as case reports or AARs, depending on the context in which the data were collected (described below). In the classification process, studies were identified that contained a qualitative analysis of free-text responses to a survey. Such studies were not classified as qualitative research studies, but their findings were extracted and considered separately in the qualitative evidence synthesis to affirm or question the findings of the more complete qualitative studies.

AARs and case reports

The committee sought to include a synthesis of AARs for two of its reviews (the EOC and Information Sharing Capability test cases) as an exercise in gauging the potential value of this evidence source to reviews of PHEPR practices. Case reports,12 which included program evaluations and other narrative reports describing the design and/or implementation of a practice or program (generally in practice settings), usually with lessons learned, were grouped with AARs because of similarity of methods and intent. A synthesis of case reports was conducted and included in the evidence reviews for all four test cases. Of note, commentaries and editorials were not included as case reports; such articles were excluded in the committee’s bibliographic database search and during the screening process (see Appendix A). Some case reports and AARs reported quantitative (e.g., from surveys) and/or qualitative (e.g., from interviews or focus groups) data, but such data were not collected in the context of research, and there generally was little to no description of the methods by which the data were collected. While the distinction from quantitative and qualitative studies was considered necessary for the committee’s reviews given the current limitations of these two sources, should their methods and reporting be strengthened, they could conceivably be combined with quantitative or qualitative studies in the future.

Data Extraction and Quality Assessment for Individual Studies

After the included studies13 had been sorted into the categories described above, data were extracted from each study, and each study was assessed for risk of bias and/or other aspects of study quality, as described below. The full list of data extraction elements is included in Appendix A. For most studies of PHEPR practices, details about the practice itself, the context, and the implementation are necessary, and thus the committee selected for extraction some elements from the Template for Intervention Description and Replication checklist (Hoffmann et al., 2014).

The quality assessment approach was determined based on study design. Many standardized tools for assessing quality or risk of bias are available, each with its own merits and shortcomings, and new tools continue to be developed. Described here is the approach taken by the committee and the groups commissioned to assess study quality and risk of bias; however, different tools and methods could reasonably be applied in future PHEPR evidence reviews. Studies were not excluded based on an assessment of the risk of bias or of methodological limitations, but this information instead was considered in the assessment of certainty for the body of evidence.

___________________

12 The synthesis of case reports, as described later in this chapter, is distinct from case study research, which is an established qualitative form of inquiry by which an issue or phenomenon is analyzed within its context so as to gain a better understanding of the issue from the perspective of participants (Harrison et al., 2017).

13 The term “study” is used broadly here to include research studies and reports that may be descriptive in nature (e.g., AARs, case reports, program evaluations).

For quantitative impact studies, the Brown University EPC developed an assessment tool by drawing selected risk-of-bias domains from existing tools, including the Cochrane Risk of Bias version 2.0 tool (Sterne et al., 2019), Cochrane’s suggested risk-of-bias criteria for Effective Practice and Organisation of Care reviews (Cochrane, 2017), and the Cochrane Risk of Bias in Non-Randomized Studies of Interventions (ROBINS-I) tool (Sterne et al., 2016). The Brown University EPC developed and applied a separate tool for the assessment of descriptive surveys, drawing on published methods (Bennett et al., 2010; Davids and Roman, 2014). For qualitative studies, methodological limitations were assessed using the Critical Appraisal Skills Programme qualitative tool (CASP, 2018). Additional detail on these tools and their use in quality assessment of individual quantitative and qualitative research studies is provided in Appendix A.

An expert in modeling methodology assessed the selected group of quarantine modeling studies in detail, including the specific model structures/equations and how the interventions were instantiated within these structures/equations. This assessment was intended to determine whether assumptions encoded in such structures/equations could plausibly have had a strong impact on the results reported in the studies. Likewise, a careful reading of the methods section of each paper was focused on extracting explicitly documented assumptions, as well as other implicit assumptions based on methodological decisions (e.g., no change in mixing rates as the epidemic grows because of such processes as social distancing, perfect versus imperfect case finding to be eligible for quarantine, asymptomatic transmission).

Descriptive case reports do not fit any specific analytic study design and generally report few details concerning methods, and thus are not amenable to quality assessment using tools designed for research studies. Case reports and AARs were categorized as “high” or “low” priority using the significance criterion of the AACODS (authority, accuracy, coverage, objectivity, data, significance) checklist (Tyndall, 2010), an evaluation tool used in the critical appraisal of gray literature sources. This process mirrored the general principles of the approach outlined by Cochrane for selecting qualitative studies for syntheses when a large pool of sources needs to be reduced to a manageable sample amenable to synthesis that is most likely to address the review questions (Noyes et al., 2018). Rigor was not required as a sorting criterion because the primary purpose was to synthesize experiential data to add weight to findings from research studies, provide a different perspective from that of research studies, or provide the only available perspective concerning the specific phenomena of interest. An appraisal tool for evaluating the methodological rigor of AARs, published in 2018 (ECDC, 2018), was applied by the commissioned PHEPR expert to the AARs included in the committee’s analyses (the tool’s criteria are described in Appendix A). While the results of this analysis informed the committee’s recommendations on improving the future evidentiary value of AARs (see Chapter 8), the appraisal tool was not useful in selecting reports to include in the synthesis of AARs and case reports because of the generally low scores for the majority of reports captured in the search. With improvements in the methodological rigor of AARs, however, such tools could be helpful in selecting high-quality AARs for inclusion in future evidence reviews.

Assessment of the Certainty and Confidence in Synthesized Quantitative and Qualitative Findings

After individual studies had been assessed for their quality and risk of bias, the next step was synthesizing and assessing the COE (or confidence in the case of qualitative evidence) across the body of evidence, specific to each key question, outcome, or phenomenon of interest identified in the analytic framework.

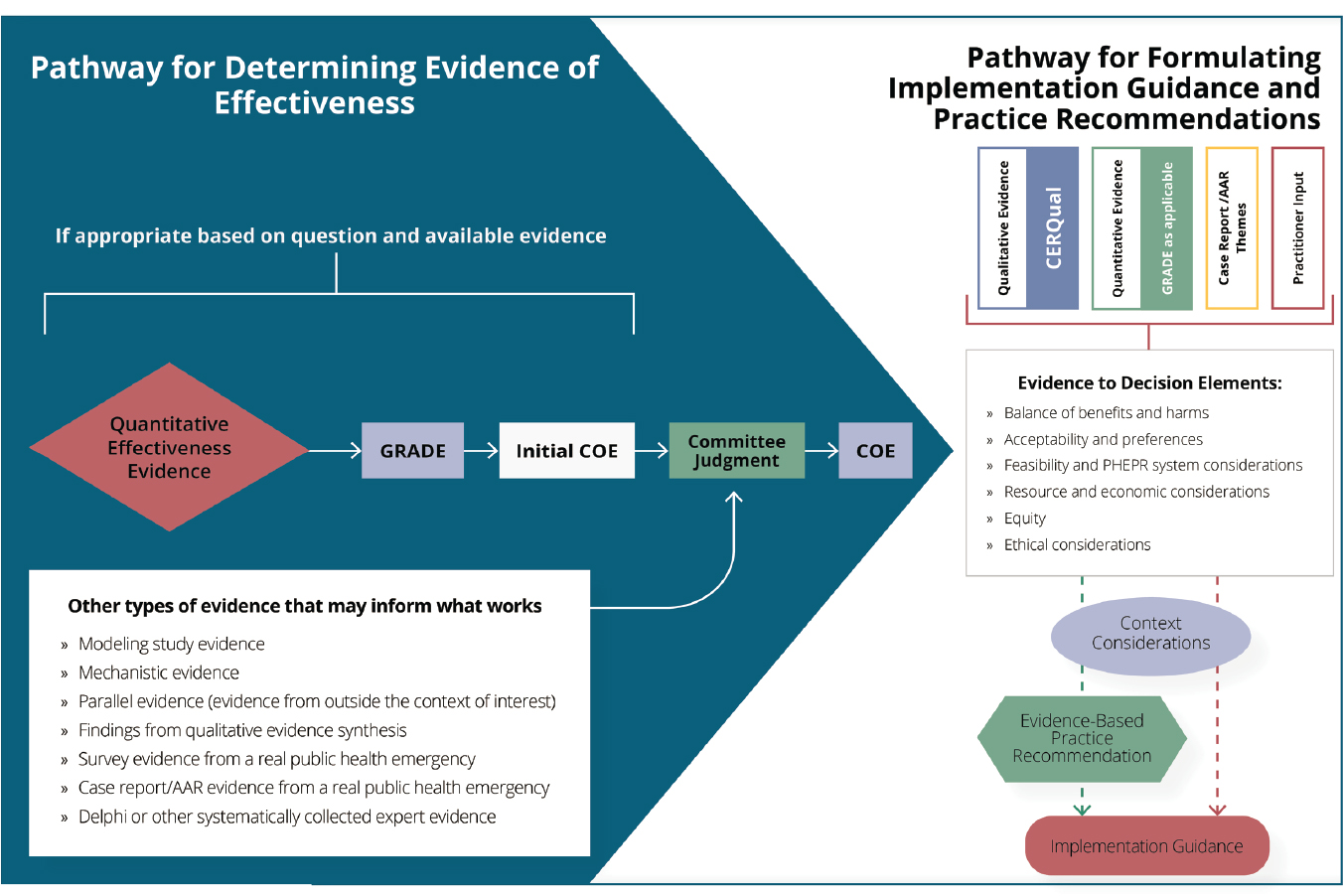

Initially, certainty of the evidence (for synthesized quantitative impact findings) and confidence in the synthesized findings from qualitative bodies of evidence were assessed separately using the GRADE and GRADE-CERQual frameworks, respectively, as discussed below. Subsequently, the coherence of evidence from across methodological streams (including evidence from cross-sectional surveys that evaluated practices,14 modeling studies, mechanistic evidence,15 qualitative studies, case reports, and AARs) was considered in developing summary findings for each key question. The committee employed two similar but distinct processes to integrate evidence from across methodological streams—one for assessing evidence of effectiveness, and the other for populating the EtD framework and developing implementation guidance. For evaluating evidence of effectiveness, the coherence of evidence from other streams was considered in rating the COE for each outcome.

Quantitative evidence synthesis and grading

For each of the test cases, the committee first assessed the body of quantitative impact studies using the GRADE approach (see Box 3-1 earlier in this chapter for a description of the GRADE assessment domains). The committee determined that a quantitative meta-analysis was neither feasible nor warranted based on the expected context sensitivity of the PHEPR practices. Thus, the committee undertook a synthesis without meta-analysis (Campbell et al., 2020) to draw conclusions regarding effect direction. These conclusions represented global judgments based on the number, size, and methodologic strengths of the individual studies, as well as the consistency of the results. If study authors performed statistical testing of a hypothesis, the committee considered the results of such testing when drawing its conclusions about the directionality of effect. However, statistical testing was neither a necessary nor a sufficient condition for drawing these conclusions. The committee did not prespecify a minimum meaningful effect size, as it was generally unclear what would be considered meaningful for the diverse set of outcomes examined by the committee. This poses a challenge for interpreting the importance of an intervention and represents an area for future development.

Existing guidance on the application of GRADE to a narrative synthesis (Murad et al., 2017) was followed to evaluate the certainty that a practice was effective for a given outcome. Consistent with the GRADE methodology, bodies of evidence that included RCTs started at high COE, which was downgraded as appropriate based on the committee’s judgment regarding risk of bias, indirectness, inconsistency, imprecision, and publication bias. Bodies of evidence that comprised only nonrandomized studies started at low COE and could be further downgraded or upgraded.16 Modeling studies were not included in the bodies of evidence assessed with the GRADE domains, but were considered in the COE determination as discussed later in this chapter.
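The starting levels and the direction of adjustment described above can be illustrated with a minimal Python sketch. It is a bookkeeping aid only and does not represent the committee’s procedure: the `COE` enum, the `rate_coe` function, and its parameters are hypothetical names introduced for illustration, and the number of levels moved in each domain is assumed to be supplied by the evaluators as a judgment rather than computed. As a simplification, the sketch accepts upgrades for any body of evidence and keeps the result within the four COE levels.

```python
from enum import IntEnum
from typing import Dict, Optional


class COE(IntEnum):
    """Four certainty-of-the-evidence levels, ordered from lowest to highest."""
    VERY_LOW = 0
    LOW = 1
    MODERATE = 2
    HIGH = 3


# The five downgrading domains named in the text.
DOWNGRADE_DOMAINS = (
    "risk_of_bias",
    "indirectness",
    "inconsistency",
    "imprecision",
    "publication_bias",
)


def rate_coe(
    includes_rct: bool,
    downgrades: Optional[Dict[str, int]] = None,
    upgrade_levels: int = 0,
) -> COE:
    """Record a COE rating from evaluator judgments (hypothetical helper).

    includes_rct:   True if the body of evidence includes RCTs (starts high);
                    False if it comprises only nonrandomized studies (starts low).
    downgrades:     levels rated down per domain, as judged by the evaluators.
    upgrade_levels: levels rated up, as judged by the evaluators.
    """
    downgrades = downgrades or {}
    unknown = set(downgrades) - set(DOWNGRADE_DOMAINS)
    if unknown:
        raise ValueError(f"Unknown downgrade domain(s): {sorted(unknown)}")
    if upgrade_levels < 0 or any(v < 0 for v in downgrades.values()):
        raise ValueError("Levels must be non-negative")

    start = COE.HIGH if includes_rct else COE.LOW
    level = int(start) - sum(downgrades.values()) + upgrade_levels
    # Keep the result within the four defined levels.
    return COE(max(int(COE.VERY_LOW), min(int(COE.HIGH), level)))


if __name__ == "__main__":
    # A body of evidence with RCTs, rated down one level for indirectness.
    print(rate_coe(True, {"indirectness": 1}).name)
    # Nonrandomized studies only, with no adjustments.
    print(rate_coe(False).name)
```

Keeping the judgments as explicit inputs makes the rationale for each up- or downgrading decision easy to record alongside the final rating.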

___________________

14 There was no synthesis of noncomparative, descriptive surveys.

15 As discussed earlier in this chapter, the committee defined mechanistic evidence as evidence that denotes relationships for which causality has been established—generally within other scientific fields, such as chemistry, biology, economics, and physics—and that can reasonably be applied to the PHEPR context through mechanistic reasoning, defined in turn as “the inference from mechanisms to claims that an intervention produced” an outcome (Howick et al., 2010, p. 434).

16 According to GRADE, bodies of evidence comprising nonrandomized studies that were assessed with ROBINS-I (Sterne et al., 2016) could start as high COE, but would then generally be rated down by default by two levels because of risk of bias unless there was a clear reason for not downgrading (Schünemann et al., 2018). However, because ROBINS-I was not used for the quality assessment of nonrandomized studies per se (although domains from the ROBINS-I tool were considered by the Brown EPC in developing its quality assessment tool), the committee started bodies of evidence that comprised only nonrandomized studies at low COE.

TABLE 3-2 Definitions for the Four Levels of Certainty of the Evidence

| COE Level | Definition |
|---|---|
| High | We are very confident that, in some contexts, there are important effects (benefits or harms). Further research is very unlikely to change our conclusion. |
| Moderate | We are moderately confident that, in some contexts, there are important effects, but there is a possibility that there is no effect. Further research is likely to have an important impact on our confidence and could alter the conclusion. |
| Low | Our confidence that there are important effects is limited. Further research is very likely to have an important impact on our confidence and is likely to change the conclusion. |
| Very Low | We do not know whether the intervention has an important effect. |

Table 3-2 defines the four levels of the COE used in the committee’s evidence reviews. Of note, the differences among the levels are not quantitative, and there is no algorithm or set of rules for determining the COE (e.g., based on the number and quality of included studies). As with other systematic review and guideline development processes, the assessment of the COE is based on the judgment of the evaluators. In some cases, a single high-quality study may provide a high COE, while in others, having multiple RCTs with consistent effects could yield a lower COE (e.g., because of indirectness). Transparency is key so that the rationale for up- and/or downgrading decisions and the ultimate COE rating are clear. While this judgment-based approach allows the evaluators flexibility in the COE determination process, a potential limitation is poor interrater reliability (i.e., others could arrive at different judgments given the same set of evidence).

Two operational decisions made by the committee regarding the GRADE process warrant additional explanation. First, for those key questions and outcomes for which the only serious limitation was in the imprecision domain and the evidence came from a single, nonrandomized study of modest size, the committee considered the upgrading domains, in particular the domain for large effect size. The second decision relates to upgrading for nonrandomized studies based on large effect size.17 The quantitative evidence was rated for risk of bias by the Brown University EPC, and based on these ratings, an overall assessment of quality was made using a “good/moderate/poor” set of categories. Studies rated as good quality were considered to have no serious limitations for the risk-of-bias domain in GRADE, whereas those rated as poor quality were considered to have serious or very serious limitations for this domain. For studies that were rated by the EPC as having “moderate” risk of bias and had a large effect size, the committee asked the EPC to assess whether the factors contributing to the “moderate” risk-of-bias rating were likely or unlikely to be responsible for the large effect size. For those cases in which the EPC judged this to be likely, the committee did not upgrade the COE based on the large effect size. For those cases in which the EPC judged this to be unlikely, the committee considered whether to upgrade the COE based on the large effect size.
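The second decision can be read as a small decision rule, sketched below under stated assumptions. The two-fold threshold comes from the accompanying footnote on large effect. The function names and the argument `bias_likely_explains_effect` (standing in for the EPC's judgment) are hypothetical, and the handling of "good" and "poor" studies is an assumption, since the text spells out the upgrade question only for the "moderate" case. The sketch handles relative risk only, although the footnote also allows other measures of effect size. A return value of `True` means only that the committee would go on to consider an upgrade, not that it would apply one.

```python
from typing import Optional


def is_large_effect(relative_risk: float) -> bool:
    """At least a two-fold increase or decrease in relative risk."""
    if relative_risk <= 0:
        raise ValueError("relative risk must be positive")
    return relative_risk >= 2.0 or relative_risk <= 0.5


def consider_large_effect_upgrade(
    quality: str,
    relative_risk: float,
    bias_likely_explains_effect: Optional[bool] = None,
) -> bool:
    """Return True if an upgrade for large effect would be considered."""
    if quality not in {"good", "moderate", "poor"}:
        raise ValueError(f"unknown quality rating: {quality!r}")
    if not is_large_effect(relative_risk):
        return False
    if quality == "good":
        # Assumption: no serious risk-of-bias limitation, so upgrading is open.
        return True
    if quality == "poor":
        # Assumption: serious limitations rule out upgrading.
        return False
    # "moderate": the EPC's judgment is required.
    if bias_likely_explains_effect is None:
        raise ValueError("EPC judgment is required for moderate-quality studies")
    return not bias_likely_explains_effect
```

For example, a moderate-quality study with a relative risk of 2.5 would be eligible for consideration only if the EPC judged that its risk-of-bias concerns were unlikely to account for the effect.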

Qualitative evidence synthesis and grading

For the qualitative evidence synthesis, the primary studies were uploaded into Atlas.ti (Version 8.1, Atlas.ti Scientific Software Development GmbH, Berlin, Germany), and the key findings and supporting information from each study were extracted in the form of key phrases, sentences, and direct quotations. This approach allowed researchers to identify and note evidence that mapped onto the phenomena of interest. The specific phenomena of interest were prespecified as questions around what happened when the practice was implemented, what was perceived to work, and what was perceived not to work. The EtD domains (e.g., acceptability, feasibility, equity) were also phenomena of interest for the qualitative evidence synthesis.

___________________

17 Consistent with GRADE guidelines on rating the COE (Guyatt et al., 2011b), the committee upgraded for large effect when nonrandomized studies showed at least a two-fold increase or decrease in relative risk (or other measure of effect size) associated with implementation of a PHEPR practice.

The Wayne State University team conducted the extraction and used the pragmatic framework synthesis method (Barnett-Page and Thomas, 2009; Pope et al., 2000), which employs an iterative deductive and inductive process to analyze and synthesize the findings. Framework synthesis is a matrix-based method that involves the a priori construction of index codes and thematic categories into which data can be coded. The method allows for

- specifying themes identified a priori as coding categories from the start,

- applying an a priori theoretical framework or logic model to inform the development of index codes and themes,

- incorporating researcher experience, background literature, and expert opinion, and

- combining these a priori themes with other themes that emerge de novo when the data are subjected to inductive analysis.

A five-step process was used for the synthesis: (1) familiarization to create a priori descriptive codes and codebook development, (2) first-level in vivo coding18 using descriptive codes, (3) second-level coding into descriptive themes (families of descriptive codes), (4) analytic theming (interpretive grouping of descriptive themes), and (5) charting/mapping and interpretation (the authors’ more detailed description of each of these steps is provided in Box 3-3). A lead author from the two-person Wayne State University team was assigned for each review topic and was responsible for the synthesis of findings, which were developed through ongoing discussions with the other Wayne State team member and the committee.
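The first three steps can be pictured as operations on a simple codebook structure. The sketch below is illustrative only. The actual coding was carried out in Atlas.ti, and the names `Codebook`, `Extraction`, `code_extraction`, and `group_by_theme` are hypothetical. It shows a priori codes with definitions, emergent codes added when no existing code fits an extraction, and the grouping of coded extractions into descriptive themes. The analytic theming and charting steps are interpretive and are not modeled.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Codebook:
    """Descriptive codes with definitions (step 1), plus emergent codes."""
    definitions: dict = field(default_factory=dict)  # code -> definition
    emergent: set = field(default_factory=set)       # codes added during coding

    def add(self, code: str, definition: str, emergent: bool = False) -> None:
        self.definitions[code] = definition
        if emergent:
            self.emergent.add(code)


@dataclass
class Extraction:
    """A verbatim (in vivo) passage from a study, with its descriptive code."""
    study: str
    text: str
    code: str


def code_extraction(codebook, study, text, code, definition=None):
    """Step 2: assign a descriptive code to an extraction.

    If the code is not yet in the codebook, it is treated as emergent
    and must arrive with a definition.
    """
    if code not in codebook.definitions:
        if definition is None:
            raise ValueError(f"emergent code {code!r} needs a definition")
        codebook.add(code, definition, emergent=True)
    return Extraction(study, text, code)


def group_by_theme(extractions, theme_of):
    """Step 3: group coded extractions into descriptive themes.

    `theme_of` maps each descriptive code to the theme (family of codes)
    that the analysts have assigned it to.
    """
    themes = defaultdict(list)
    for extraction in extractions:
        themes[theme_of[extraction.code]].append(extraction)
    return dict(themes)
```

Keeping emergent codes in the same codebook as the a priori codes mirrors the combined deductive and inductive character of framework synthesis described above.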

GRADE-CERQual was used to assess the confidence in synthesized qualitative findings (analytic and some descriptive themes). CERQual provides a systematic and transparent framework for assessing confidence in individual review findings, based on consideration of four components:

- methodological limitations—the extent to which there are concerns about the design or conduct of the primary studies that contributed evidence to an individual review finding;

- coherence—an assessment of how clear and compelling the fit is between the data from the primary studies and a review finding that synthesizes those data;

- adequacy of data—an overall determination of the degree of richness and quantity of data supporting a review finding; and

- relevance—the extent to which the body of evidence from the primary studies supporting a review finding is applicable to the context (perspective or population, phenomenon of interest, setting) specified in the review question (Lewin et al., 2018).

___________________

18 During in vivo coding, a label is assigned to a section of qualitative data, such as an interview transcript, using an exact word or short phrase taken from that section of the data (Tracy, 2018).

BOX 3-3 Steps for the Synthesis of Findings from Qualitative Studies

Step 1: The familiarization process involved an initial close reading of the project documents and the selected articles to create descriptive codes. The familiarization with the project documents unpacked the key questions, sub–key questions, evidence to decision issues, aims and objectives of the project, and analytic frameworks so that key phrases and words that meaningfully addressed the phenomenon of interest could be identified. The familiarization with the articles similarly identified key phrases and words that described various aspects of the phenomenon of interest. Both sets of key phrases and words were converted to descriptive codes, which captured the essence of the extractions and replaced the in vivo original words with ones that translated across studies, creating a common yet representative nomenclature. A codebook was developed to compile the codes with corresponding definitions, thereby forming a set of a priori descriptive codes.

Step 2: First-level in vivo coding involved multiple close readings of the articles in their entirety, with attention to findings wherever they appeared (particularly in the abstracts, results, discussions, and conclusions). The in vivo findings (consisting of verbatim key phrases, sentences, and paragraphs) related to the key questions, sub–key questions, context questions, or evidence to decision issues were highlighted and assigned a descriptive code. When there were no a priori codes that matched the essence of in vivo extractions, this was considered an emergent code. The emergent code was translated to a new descriptive code, and the code with a corresponding definition was incorporated in the codebook. During this process, attention was paid to all meaningful extractions, whether they appeared to confirm or counter previously coded extractions. For mixed-method studies that had both qualitative and quantitative portions, only the qualitative findings were coded.

Step 3: Second-level coding involved a synthesis process of creating descriptive themes, where a theme was a family of descriptive codes in which codes that formed a cohesive set were grouped together. The themes represented a nuanced description, rather than just a generalized description, of the phenomenon of interest.

Step 4: This step involved a synthesis process of creating analytic themes. This analytic theming relied on a robust interpretation of the descriptive themes and how they intersected relationally with one another. The descriptive themes were grouped together in a nuanced manner to create the analytic themes.

Step 5: Charting/mapping involved explaining how the analytic themes specifically addressed the phenomenon of interest. Additionally, evidence to decision issues were addressed in this step by looking at how the analytic themes were grounded in descriptive themes, codes, and in vivo extractions.

Based on the assessments of the four CERQual components, each synthesized finding was then assigned an overall confidence rating as follows:

- High confidence—It is highly likely that the finding is a representation of the phenomenon.

- Moderate confidence—It is likely that the finding is a representation of the phenomenon.

- Low confidence—It is possible that the finding is a representation of the phenomenon.

- Very low confidence—It is not clear whether the finding is a representation of the phenomenon.

Confidence in the synthesized findings was assessed by the lead author for that review topic. The second author reviewed the assessments, queried the lead author for additional information, and offered suggestions. The discussion culminated in the final assessment of confidence.

Synthesis of evidence from case reports and AARs

For the framework synthesis of findings from case reports and AARs, report characteristics (e.g., type of event, type of report, location) were extracted from the reports. The reports were then coded using a codebook that was developed from the key areas of interest and adapted from the codebook used for the qualitative evidence synthesis (see Box 3-3), so that the two evidence streams aligned where feasible. Although case reports and AARs were analyzed jointly, findings were considered by report type to assess for any differences. No assessment of the confidence in the findings from the synthesis of case reports and AARs was conducted.

Integration of Effectiveness Evidence from Across Methodological Streams

As noted earlier in this chapter and depicted in Figure 3-3, the committee took a pragmatic, layering approach to synthesizing and grading the full body of evidence to determine the COE for the effectiveness of a given PHEPR practice and to inform practice recommendations. After evaluating the body of evidence from quantitative impact studies using the GRADE domains to determine the initial COE for each outcome of interest, the committee reviewed and considered the coherence of evidence from other methodological streams, including findings from the qualitative evidence syntheses (generally related to harms) with associated CERQual confidence assessments; findings from the modeling study analysis; quantitative data from individual cross-sectional surveys, case reports, and AARs regarding practice effectiveness in a real public health emergency; mechanistic evidence (defined earlier in this chapter); and parallel evidence. Although the committee did not undertake to do so, findings from a Delphi-type activity or other systematically collected expert evidence (Schünemann et al., 2019) could be brought to bear in grading the overall body of evidence.