Appendix C

Technical Appendix to Chapter 5

This appendix draws on research from an earlier version of Myers and Lanahan (2020). Specifically, Myers and Lanahan (2020) measured patenting in technologies that DOE was pushing forward through its SBIR/STTR topic announcements, showing that including potential spillovers from firms building on SBIR/STTR awardees’ work substantially reduces the estimated cost per patent from government-funded research. Myers and Lanahan matched the language used in DOE topic announcements with patent classes. They controlled for the possibility that DOE was picking technologies that were already being patented by looking at the differences between states that offer SBIR/STTR Phase I matching grants and states that do not offer such funding. For the purposes of this report (and reflecting the time constraints of the committee reporting process), the committee drew on a subset of their analyses in the main body of the report (particularly Chapter 5). The purpose of this appendix, then, is to provide a clear summary of the data, methodology, and results from Myers and Lanahan (2020) that the committee drew upon in writing the report. For further discussion, as well as a number of extensions of the research design with additional results, see Myers and Lanahan (2020).

INTRODUCTION

Although the SBIR program has been likened to a venture capital arm of the government (e.g., Lerner, 2000), the committee focused on the role of this program as an investor in technologies, not firms. Myers and Lanahan created a new dataset of SBIR/STTR grants and U.S. inventions at the level of “technology class” (e.g., solar panels, wind turbines, electric batteries, and nuclear reactors). Instead of evaluating outcomes for firms that did and did not receive SBIR/STTR awards, as has been done previously (Howell, 2017; Lanahan and Feldman, 2018), Myers and Lanahan evaluated outcomes across technology classes that DOE SBIR/STTR programs invested in over the period of FY 2006 to FY 2017, using patents as the outcome of interest. Patents are a metric of commercialization cited by the Small Business Administration, and recent work has lent further support to the notion that they do capture technological progress to some degree (Igami and Subrahmanyam, 2015).

Myers and Lanahan used textual analysis to map government investments into the patent classification scheme. This allowed them to connect SBIR/STTR awards and patents in the same technology class and ultimately arrive at estimates of the amount of funds needed to stimulate the creation of additional patents. Importantly, the method used to connect grants to patents allowed Myers and Lanahan to look beyond the grant recipients and thereby account for spillovers that may occur between firms and inventors (e.g., Jaffe, Trajtenberg, and Henderson, 1993; Audretsch and Feldman, 1996; Bloom, Schankerman, and Van Reenen, 2013). Furthermore, although the awarding of SBIR/STTR grants is not random, state-specific match policies that award grant recipients additional funds in a non-competitive manner provide a source of variation in funding that is plausibly unrelated to project quality.

There are two main outcomes from the work done by Myers and Lanahan upon which the committee relied:

- Taking a strict stance on the match between new inventions and the objectives of each SBIR/STTR grant yields cost estimates for patents that can be three to four times larger than estimates that would be obtained if the match were loosened.

- Holding the match fixed, the net effect of spillovers from firms building on each other’s work leads to cost estimates that are two to three times lower than what would be estimated if only award recipients’ patents were measured.

DATA AND METHODOLOGY

Data Sources

Topics

Each year, DOE releases several sets of topics that describe the specific technological areas that the agency would like to invest in. All applicants are required to submit their proposals in response to a particular topic. In the past, these topics were released at the same time as the funding opportunity announcement (FOA). More recently, the topics have been released about two months prior to an FOA. Thus, these topics and the topic numbers assigned to them provide the link between the data on ultimately awarded SBIR/STTR grants and the text of the topic or FOA announcement that describes the topic’s objectives. FOAs and topic lists were obtained from DOE for fiscal years (FY) 2006 to 2017.

SBIR/STTR Award Data

All DOE SBIR/STTR awards from FY 2006 to FY 2017 were collected from the public reporting portal at SBIR.gov.

Patent Record

Information on all United States Patent and Trademark Office patents between 2005 and 2017 was collected from the PatentsView database.2 These records contained the year of application, disambiguated assignee and inventor information, and the Cooperative Patent Classification (CPC) scheme classes attributed to the patent. The year of application was used as the timing of the invention. The assignee and inventor data were used to match patents to the firms listed in the SBIR/STTR award data and to determine the locations of the parties. For simplicity, each patent was divided equally among the locations of all parties involved. The CPC scheme was used as the map to link both patents and SBIR/STTR awards to the same technologies through the process outlined below.
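The equal division of a patent across party locations can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `allocate_patent` and the example locations are hypothetical, and it assumes each listed party receives an equal share, so a location with two parties receives two shares.

```python
from collections import defaultdict


def allocate_patent(locations):
    """Split one patent equally across the locations of all listed parties.

    Assumption (not stated in the source): shares are per party, so a
    location that appears twice receives two shares.
    """
    if not locations:
        return {}
    share = 1.0 / len(locations)
    weights = defaultdict(float)
    for location in locations:
        weights[location] += share
    return dict(weights)


# Hypothetical patent with three parties, two of them in the same city.
example = allocate_patent(["Durham, NC", "Durham, NC", "Austin, TX"])
for location, weight in example.items():
    print(f"{location}: {weight:.3f}")
```

Summing these fractional weights by region and year gives regional patent counts in which each patent contributes a total weight of one.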

Mapping Awards to Patent Classes via Text Similarity

Myers and Lanahan (2020) classified SBIR/STTR grants and patents into the same categorization scheme in order to compare changes in investments to changes in patenting rates. To do so, they leveraged the CPC scheme, a hierarchy of categories used to organize the patent record. Much like ZIP codes index geographical space, the CPC provides a discretized version of the technology space.

Myers and Lanahan assumed that if the text of a DOE topic used the same words as the abstract of a particular patent, then the two were related to the same technology. Thus, if a DOE topic used many of the same words as patents that had been assigned a particular CPC class, and those words are mainly used only within that narrow technological area, then it is likely that the objectives of that particular topic were defined specifically to stimulate invention in that CPC class.

To connect each topic with the relevant CPC classes, Myers and Lanahan used modern text analysis tools to estimate the textual similarity between each topic description and all of the abstracts from patents granted in the preceding years. This generated a pairwise dataset of all topics announced in the FOAs and all CPC classes, with a similarity score for each pair. In order to provide the committee with a range of estimates, three degrees of stringency in the text match were used:

- Strict specifications counted only high-match CPC-FOA pairs.

- Medium specifications counted both high- and medium-match pairs.

- Loose specifications counted high-, medium-, and low-match pairs.
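The matching step and the three specifications above can be sketched with a standard TF-IDF and cosine-similarity approach. The appendix does not say which text analysis tools Myers and Lanahan used, so this is an assumed stand-in: the similarity measure, the threshold values, the function names, and the toy texts are all hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and keep purely alphabetic whitespace-separated tokens."""
    return [w for w in text.lower().split() if w.isalpha()]


def tfidf_vectors(docs):
    """Return one {term: weight} dict per document using smoothed TF-IDF."""
    token_lists = [tokenize(d) for d in docs]
    n = len(docs)
    doc_freq = Counter(t for tokens in token_lists for t in set(tokens))
    vectors = []
    for tokens in token_lists:
        counts = Counter(tokens)
        vectors.append({
            term: count * (math.log((1 + n) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse term-weight dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def match_tier(score, high=0.5, medium=0.25, low=0.1):
    """Bucket a similarity score; the cutoffs are hypothetical."""
    if score >= high:
        return "high"
    if score >= medium:
        return "medium"
    if score >= low:
        return "low"
    return None


# Which tiers each specification counts, following the list above.
SPEC_TIERS = {
    "strict": {"high"},
    "medium": {"high", "medium"},
    "loose": {"high", "medium", "low"},
}

# Toy texts standing in for a topic description and pooled patent abstracts.
topic = "advanced battery electrode materials for energy storage"
cpc_abstracts = {
    "H01M": "battery electrode materials and cells for energy storage",
    "F03D": "wind turbine blade rotor control",
}

vectors = tfidf_vectors([topic] + list(cpc_abstracts.values()))
scores = {cpc: cosine(vectors[0], vec)
          for cpc, vec in zip(cpc_abstracts, vectors[1:])}
tiers = {cpc: match_tier(score) for cpc, score in scores.items()}
```

A topic-class pair enters a given specification when its tier is in `SPEC_TIERS[spec]`, so loosening the specification can only add pairs.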

___________________

2 See www.patentsview.org.

Confirming Patent Cost Estimates from Prior Work

As mentioned above, most analyses of the SBIR or other public research and development (R&D) programs have been at the firm level and have focused on outcomes only for subsidy recipients. Myers and Lanahan approximated those studies’ research designs by including patents only for award winners and using the loosest degree of stringency for the match between each CPC class and the FOA objectives. As in those studies, this restricted the analysis to changes in the cumulative number of patents of any kind granted to SBIR/STTR awardees. With this in mind, recall Howell’s (2017) finding that DOE’s SBIR/STTR program must invest as little as $150,000 and at most $1.5 million in a firm to produce an additional patent.3

As a check on their methodology, the committee asked Myers and Lanahan to estimate the cost per patent at the firm level. Their estimate when counting only award recipients’ patents was roughly $1 million, which falls within the range reported by Howell (2017) and provides confidence in their overall research design.

RESEARCH DESIGN

Myers and Lanahan followed a traditional approach to estimating production functions based on patent data, and used a Poisson regression model (Griliches, 1998) that relates the stock of patents in each CPC class to the stock of investments in that same class.

A key advantage of this approach is that no particular connection between patents and investments is assumed beyond their being in the same CPC class. For example, if firm A receives an SBIR/STTR award and develops a new technology, and then firm B builds on that technology and obtains a patent, this approach connects firm B’s patent to firm A’s SBIR/STTR award even if firm A never obtained a patent or there is no codified connection (e.g., a citation) between the two firms’ R&D efforts.
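One way to make this specification concrete is the log-linear Poisson form below. It is a sketch under stated assumptions rather than the exact model in Myers and Lanahan (2020): the symbols $P_{ct}$, $K_{ct}$, $\alpha$, $\beta$, and the year effects $\delta_t$ are notation introduced here for illustration, and the appendix does not state which covariates or fixed effects were used or how classes with zero investment were handled.

```latex
% Illustrative notation (not from the source):
%   P_{ct}: stock of patents in CPC class c in year t
%   K_{ct}: stock of SBIR/STTR investment in class c in year t
\mathbb{E}\left[P_{ct} \mid K_{ct}\right]
  = \exp\left(\alpha + \beta \ln K_{ct} + \delta_t\right)

% Under this assumed form, \beta is an elasticity, so the marginal product
% of a dollar and the implied cost of one additional patent are
\frac{\partial\, \mathbb{E}[P_{ct}]}{\partial K_{ct}}
  = \beta \, \frac{\mathbb{E}[P_{ct}]}{K_{ct}},
\qquad
\text{cost per patent} \approx \frac{K_{ct}}{\beta \, \mathbb{E}[P_{ct}]}
```

Because the outcome is the patent stock of the whole class, any patent in the class counts toward $P_{ct}$ regardless of who holds it, which is how the firm A and firm B example above enters the estimate.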

Accounting for Non-Random Funding

When estimating the productivity of investments, it is important to understand how investment decisions are made. If those investments are made for reasons that are correlated with their effectiveness, then productivity estimates that do not take this correlation into account may be biased. For example, if more SBIR/STTR awards are targeted toward technologies that have the most potential to generate valuable spillovers for the U.S. economy, then it may appear that these investments are more productive than they truly are. This is because it would be impossible for researchers to disentangle effects driven by the investments themselves from effects of the unobservable “potential” surrounding those technologies.

___________________

3 Howell (2017) employed a regression discontinuity design using DOE SBIR/STTR applicants and awardees from the Office of Fossil Energy and the Office of Energy Efficiency and Renewable Energy. Effectively, she examined the differential effect of the SBIR/STTR award by comparing firms just above the award cutoff with applicants just below it.

To address this concern, Myers and Lanahan leveraged the fact that over this study’s period, a number of states enacted policies that provided a match for federal SBIR/STTR awards with additional non-competitive funds. Importantly, those states did so largely for reasons unrelated to the quality or nature of the projects that won the federal SBIR/STTR awards. Rather, this was a state-level effort to support early-stage ventures more broadly.

These policies were used by Myers and Lanahan to create an instrumental variable. Thus, instead of comparing outcomes for two technology classes that received differing levels of federal DOE SBIR/STTR awards, they compared outcomes for two technology classes that received the same amount of federal investments, but for which one received additional investments from a state program, referring to those estimates as state match “windfalls.”
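The logic of the instrument can be illustrated with a deliberately simple linear example. This is not the authors' estimator (their model is a Poisson regression), and the data are a synthetic toy constructed so the answer is known: an unobserved "potential" `u` raises both investment and output, while the windfall indicator `z` shifts investment but is unrelated to `u`. All names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def cov(a, b):
    """Population covariance of two equal-length sequences."""
    mean_a, mean_b = mean(a), mean(b)
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / len(a)


# Synthetic toy data (not from the source).
z = [0, 0, 1, 1]   # state match windfall received (instrument)
u = [0, 1, 0, 1]   # unobserved technological "potential"

TRUE_EFFECT = 2.0
x = [zi + ui for zi, ui in zip(z, u)]                    # total investment
y = [TRUE_EFFECT * xi + 3.0 * ui for xi, ui in zip(x, u)]  # patent output

# Naive regression of output on investment absorbs the effect of u.
ols_estimate = cov(x, y) / cov(x, x)

# The instrumental variable estimate uses only windfall-driven variation.
iv_estimate = cov(z, y) / cov(z, x)

print(f"naive: {ols_estimate:.2f}, IV: {iv_estimate:.2f}")
```

In this toy case the naive slope overstates the effect because investment is larger where potential is larger, while the windfall-based estimate recovers the value used to build the data.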

SUMMARY STATISTICS

Since 2000, the DOE SBIR program has awarded roughly $2.45 billion to 2,064 firms. This comprises approximately 5,600 Phase I awards ($580 million) and 2,400 Phase II awards ($1.87 billion). Conditional on having received a DOE Phase I award, recipients received an average of 2.7 Phase I awards and 1.2 Phase II awards.

Table APP-C-1 summarizes the FOA and award data for FY 2006 to FY 2017. Each year, DOE announces about 60 unique topics and awards four to five new Phase I grants per topic. Including any follow-on Phase II grants (which are awarded in future years), an average of about $2.6 million is invested in each topic.

TABLE APP-C-1 DOE SBIR/STTR Funding Opportunity Announcements and Grant Awards Data, FY 2006-2017

| Category of Data | Per Year | Per FOA Topic |
|---|---|---|
| Phase I grants (Millions of Dollars) | 39.4 (15.1) | 0.66 (0.63) |
| Phase II grants (Millions of Dollars) | 111 (60.0) | 1.86 (2.54) |
| Number of Phase I grants | 276 (126) | 4.61 (4.11) |
| Number of Phase II grants | 117 (60.7) | 1.95 (2.50) |
| Number of unique firms receiving grants | 232 (52.8) | 5.59 (4.22) |
| Number of unique states with firms receiving grants | 39.3 (3.39) | 4.25 (2.90) |
| Number of FOA topics | 60 (7.95) | n/a |
| Observations | 12 years | 720 topic-years |

NOTE: This table reports mean values with standard deviations in parentheses.

Table APP-C-2 details the final dataset of CPC-year-level observations of patenting rates and investments. Of note, in their estimation of the production function, these patenting rates are converted into stocks. The table presents statistics for the key variables when using “strict” and “loose” specifications for determining which CPC classes correspond to which FOA topics and grants. Since all SBIR/STTR grants are awarded to U.S. firms, the patenting rates at this level of geographic aggregation—the largest explored—are independent of the relevance threshold being stricter or looser. By construction, as the relevance threshold is loosened, more CPC classes receive smaller investments.
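Converting annual rates into stocks can be sketched as a running accumulation. The appendix does not say whether the stocks were depreciated, so the `depreciation` parameter below is a hypothetical knob that defaults to a plain cumulative sum; the function name and the example flows are likewise illustrative.

```python
def to_stock(flows, depreciation=0.0):
    """Accumulate annual flows into an end-of-year stock.

    Assumption (not from the source): stock_t = (1 - d) * stock_{t-1} + flow_t,
    with d = 0 giving a simple cumulative sum.
    """
    stock = 0.0
    stocks = []
    for flow in flows:
        stock = (1.0 - depreciation) * stock + flow
        stocks.append(stock)
    return stocks


# Hypothetical patent counts for one CPC class over three years.
annual_patents = [1, 2, 3]
cumulative = to_stock(annual_patents)
depreciated = to_stock(annual_patents, depreciation=0.5)
```

The same function would apply to annual investment amounts, which keeps the patent and investment series on a comparable footing.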

Anywhere from 14 to 50 percent of the full set of CPC classes that Myers and Lanahan explored received DOE investments at some point in time. Patenting rates were highly skewed, with standard deviations often an order of magnitude larger than means. Unlike the patenting rates for geographic regions within the United States (which are based on where funding is directed, which itself is based on the stringency of the match), the U.S.-wide patenting rate is independent of the FOA-CPC match stringency because all SBIR/STTR grants go to U.S. firms.

To get a sense of the types of technologies that the DOE SBIR/STTR grants target, Table APP-C-3 identifies the top 15 CPC classes (aggregated to the three-digit code) by total investment in the sample. The top classes revolve around measurement tools, generating and transmitting electricity, computers, fuels, and engines.

RESULTS

TABLE APP-C-2 DOE SBIR/STTR Funding Opportunity Announcements and Grant Awards Based on Stringency of FOA-CPC Match, FY 2006-2017

| Variable | Strict Match | Loose Match | Match N/A |
|---|---|---|---|
| Any investment, {0,1} | 0.138 (0.345) | 0.482 (0.500) | |
| DOE investment if greater than 0 (Dollars) | 50,087 (80,989) | 14,317 (22,000) | |
| Patent rate for grant recipients | 0.0116 (0.222) | 0.0546 (0.647) | |
| + Firms in the same city | 0.491 (8.601) | 1.820 (22.47) | |
| + Firms in the same state | 3.317 (45.73) | 5.709 (51.16) | |
| + Firms in the rest of the United States | | | 7.682 (54.82) |

NOTE: This table reports means, with standard deviations in parentheses. It is based on FY 2006-2017 data for all 10,378 CPC classes. Total number of observations was 133,705.

TABLE APP-C-3 Top Technology Classes Invested in by DOE SBIR/STTR Programs, FY 2006-2017

| Rank | CPC Technology Class |
|---|---|
| 1 | G01: Measuring; testing (e.g., scales, mass spectrometers, seismometers) |
| 2 | H01: Basic electric elements (e.g., cables, resistors, magnets, capacitors) |
| 3 | H02: Generation; conversion or distribution of electric power |
| 4 | H03: Basic electronic circuitry (e.g., modulators, amplifiers, resonators) |
| 5 | H04: Electric communication technique (e.g., telecommunications) |
| 6 | G06: Computing; calculating; counting |
| 7 | C10: Petroleum, gas, or coke industries |
| 8 | F16: Engineering elements & units (e.g., couplings, springs, insulation, belts, gears) |
| 9 | C12: Biochemistry; microbiology; enzymology |
| 10 | B60: Vehicles in general |
| 11 | F02: Combustion engines |
| 12 | B01: Physical or chemical processes or apparatus (e.g., laboratory tools) |
| 13 | C08: Organic macromolecular compounds |
| 14 | F23: Combustion apparatus or processes |
| 15 | F24: Heating; ranges; ventilation |

NOTE: These are aggregated to the CPC subsection level, indicated by the 3-digit code. Rankings are based on total investments over this period, counting only “strict” matches between the FOA topic of each award and the CPC class.

Table APP-C-4 outlines the costs implied by the Myers and Lanahan model. To show how development costs depend on the match between the objectives of the grant and the ultimate nature of the patent, the first column reports costs from the strict specification, which counts only high-match patents that are very closely aligned with the objectives of the grant. The second and third columns successively introduce other patents into the analysis that are less and less related to the objectives of each SBIR/STTR grant they are connected to.

The rows of the table report cost estimates where patents from concentric sets of firms are included in the analysis:

- “Firm” counts only patents from SBIR/STTR grant recipients;

- “City” counts patents from any firm located in the same city as these firms;

- “State” follows the same logic as “City”; and

- “US” includes patents from all firms in the US.

TABLE APP-C-4 Total Cost of Research per Patent Received

| Patent Group | Strict Match (millions of dollars) | Medium Match (millions of dollars) | Loose Match (millions of dollars) |
|---|---|---|---|
| For Endogenous Investments | | | |
| Firm | 4.01 [2.34 – 17.1] | 1.25 [1.04 – 1.55] | 1.04 [0.905 – 1.23] |
| +City | 5.60 [4.72 – 6.91] | 1.06 [0.972 – 1.17] | 0.685 [0.616 – 0.774] |
| +State | 1.77 [1.25 – 3.14] | 0.356 [0.286 – 0.367] | 0.215 [0.188 – 0.251] |
| +US | 2.64 [1.84 – 4.66] | 0.401 [0.331 – 0.510] | 0.248 [0.210 – 0.301] |
| For State-match Windfall | | | |
| Firm | 3.52 [2.50 – 5.98] | 1.27 [1.08 – 1.55] | 1.05 [0.915 – 1.23] |
| +City | 5.18 [3.99 – 7.40] | 1.11 [1.01 – 1.25] | 0.710 [0.619 – 0.833] |
| +State | 1.91 [1.47 – 2.73] | 0.356 [0.308 – 0.424] | 0.244 [0.203 – 0.308] |
| +US | 2.31 [1.43 – 6.28] | 0.451 [0.365 – 0.590] | 0.298 [0.234 – 0.412] |

NOTE: The main estimate is reported with bounds based on 95 percent confidence intervals of the estimates, reported in brackets. Geographic levels include all concentric regions; e.g., “+City” includes all firms in any city where a SBIR/STTR grant is awarded for a particular class.

First, comparing the estimates based on the raw endogenous federal investment data to those based on the state match windfalls, Myers and Lanahan did not find any major differences. However, the cost estimates based on the instrumental variables approach tend to be lower for the strict specification but higher for the loose specification. This pattern could plausibly be explained by DOE staff directing more funds to firms and/or topics where it may be more difficult for the grant recipient to patent but where the potential for spillovers into new technologies is larger. Still, the magnitudes of these differences are quite small relative to the cost estimates themselves.

Focusing on the variation in costs across each row, there is a clear decline in costs as less relevant patents are included in the analysis. In general, the difference between these costs ranges from 2- to 4-fold. This indicates that the costs of developing specific new technologies (those that DOE directs its funds toward) are much higher than would otherwise be suggested by an agnostic count of the patents produced by SBIR/STTR funds.

Looking down the columns of Table APP-C-4, there is a clear pattern of positive spillovers. In most cases, as Myers and Lanahan include patents from firms in successively larger surrounding areas, the cost per patent declines. This is not entirely surprising given a long line of suggestive evidence from the patent record of geographically mediated spillovers (Jaffe, Trajtenberg, and Henderson, 1993). But quantifying these effects suggests that they may reduce the expected costs of producing new patents by a significant amount.

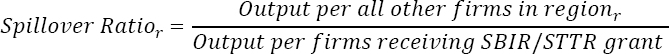

To better understand the nature of these spillovers, the next table presents what Myers and Lanahan termed the “spillover ratio.” This is defined as the additional expected patent output generated within particular regional bands divided by the expected patent output produced by the firms that receive the SBIR/STTR grants:

A spillover ratio of 1 for the geographical band of “state, excluding cities” would indicate that, for each additional patent that the grant recipients produce, firms in that same state, but not in the same city, can be expected to produce one additional patent as well. Thus, the ratio can be interpreted as a multiplier that describes the additional output generated via spillovers. Myers and Lanahan also reported the “net” spillover ratio, which has U.S.-wide output (minus SBIR/STTR recipients) in the numerator.

A negative value for this ratio is possible if firms and inventors in particular regions decrease their R&D in response to the output of other firms, in which case the numerator would be negative (e.g., a value of -0.5 would indicate that for every patent that SBIR/STTR grant recipients produce, other firms in the region produce 0.5 fewer patents). Such behavior could be explained by strategic interactions among competing firms.

TABLE APP-C-5 Spillover Ratios

| Patent Group | Strict Match | Medium Match | Loose Match |
|---|---|---|---|
| City, excluding firms | -0.35 | 0.14 | 0.47 |
| State, excluding cities | 1.21 | 2.43 | 2.82 |
| United States, excluding states | -0.57 | -0.75 | -0.77 |
| Net | 0.51 | 1.82 | 2.53 |

NOTE: The firms or regions excluded refer to the regions where firms receiving SBIR/STTR awards were located. For example, if only a single firm in Durham, NC, received an SBIR/STTR award, then “City, excluding firms” would include outputs for all other firms in Durham; “State, excluding cities” would include all cities in North Carolina except Durham; and “United States, excluding states” would include all states except North Carolina. All estimates are based on the state-match windfall results.

Table APP-C-5 presents these spillover ratios for three non-concentric regional bands and shows how spillovers propagate across geography, as well as the net effect. Since these ratios are based on averages from multiple regression models, the spillover ratios of the geographic subsets need not directly sum to the net spillover ratio. The first two rows indicate that, at least within the cities and states where firms receive SBIR/STTR grants to pursue particular technologies, there is almost always a net positive effect: other firms and inventors in that region increase their efforts on the same technologies. The only exception is the city band under the strict specification.

However, the “United States, excluding states” row indicates that, on net and on average, firms and inventors outside of the states where the SBIR/STTR-awarded firms are located tend to decrease their R&D efforts on the technologies targeted by SBIR/STTR grants. Not accounting for these negative spillovers would have led to an overestimate of the net effects of SBIR/STTR grants.
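As an arithmetic cross-check, the ratios in Table APP-C-5 can be approximated from the cost estimates in Table APP-C-4. The sketch below assumes that expected patent output per dollar is the reciprocal of the cost per patent; that relationship is an inference made here, not a method stated in the appendix, and the function and variable names are hypothetical. It uses the medium-match, state-match windfall column.

```python
# Cost per patent in millions of dollars: state-match windfall rows,
# medium-match column of Table APP-C-4.
cost = {"firm": 1.27, "city": 1.11, "state": 0.356, "us": 0.451}

# Assumption (not from the source): expected patents per million dollars
# at each concentric level is the reciprocal of the cost per patent.
output = {level: 1.0 / value for level, value in cost.items()}


def spillover_ratio(outer, inner):
    """Extra output in the band between two concentric levels,
    expressed per patent produced by grant recipients."""
    return (output[outer] - output[inner]) / output["firm"]


ratios = {
    "City, excluding firms": spillover_ratio("city", "firm"),
    "State, excluding cities": spillover_ratio("state", "city"),
    "United States, excluding states": spillover_ratio("us", "state"),
    "Net": spillover_ratio("us", "firm"),
}

for band, value in ratios.items():
    print(f"{band}: {value:.2f}")
```

Under this assumption the computed values land close to the medium-match column of Table APP-C-5, with small gaps consistent with rounding in the published cost estimates.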

DISCUSSION

This approach to estimating the return to the DOE’s SBIR/STTR investments recognizes that “spillovers” may amplify the effect of these investments (and that strategic interactions among firms might depress them). The regression model estimates suggest that if, as is traditional, only award recipients are evaluated as inventors, outcome analyses will likely miss a significant number of inventions spurred through other indirect means. Still, it does appear that these spillover effects are concentrated within the states of the firms that receive these grants. Furthermore, Myers and Lanahan showed that these spillovers occur not just through geographic space, but also through technological space.

In the loosest specification, Myers and Lanahan estimated that for every $300,000 the government invests in a technology (roughly the size of two Phase I SBIR/STTR grants), one additional patent’s worth of technology will be created. Roughly three-quarters of this new output is due not to the firm that receives these funds but to other firms in the same state. For every patent expected from a grant recipient, another 0.5 to 2.5 additional patents are expected from spillovers.

What is the dollar return on these investments? It is difficult to know or predict the value of any given patent. As a starting point, recent estimates by Kogan et al. (2017) suggest that the median patent granted to most large publicly listed companies could be valued upwards of $7 million to $8 million (in 2017 dollars). So, unless the value of the patents spurred by DOE’s investments is more than 20 times lower than these estimates (that is, less than $300,000 on average), it appears that significant value is being created. Certainly, this increased production is likely to correspond to increased investments from the private firms producing these patents. But these must be profitable investments for the firms. Even when restricted to those patents that match DOE’s objectives the closest (a strict match), where cost estimates are near $2 million, a simple extrapolation of the Kogan et al. (2017) estimates could still find net positive returns.
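The break-even comparison in this paragraph can be written out as a short calculation. It is a back-of-the-envelope sketch only: it uses the U.S.-wide state-match windfall costs from Table APP-C-4 and the patent value range cited above, ignores the private investments mentioned in the text, and the variable names are hypothetical.

```python
# Patent value range cited in the text, in millions of 2017 dollars.
patent_value_range = (7.0, 8.0)

# Public cost per patent in millions of dollars: "+US" rows under the
# state-match windfall in Table APP-C-4.
cost_per_patent = {"loose": 0.298, "strict": 2.31}

# Ratio of cited patent value to public cost per patent. Assumption: the
# cited values apply to these patents, which the text itself flags as
# uncertain.
value_to_cost = {
    spec: tuple(value / cost for value in patent_value_range)
    for spec, cost in cost_per_patent.items()
}

for spec, (low, high) in value_to_cost.items():
    print(f"{spec}: {low:.1f}x to {high:.1f}x")
```

The loose-match ratio exceeds 20, which is the sense in which patents would need to be worth more than 20 times less than the cited values to erase the return, while the strict-match ratio remains above 1.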