This chapter features a presentation by Russell Poldrack, Albert Ray Lang Professor of Psychology at Stanford University, on grand views and potholes on the road to precision neuroscience. He discussed the methodological techniques and standards of reliability needed to measure brain health and resilience, and he described how quality metrics and criteria can improve such measurement in future research.

GRAND VIEWS AND POTHOLES ON THE ROAD TO PRECISION NEUROSCIENCE

Poldrack sketched an optimistic dream for the use of neuroscience in brain health and identified challenges that may hinder achieving that vision. Achieving the dream of precision neuroscience will require avoiding potholes by improving reproducibility, improving predictive modeling (particularly for the development of biomarkers), and better understanding intraindividual variability over time so that it can be placed within the context of a person’s life. He opened by laying out the idea of precision medicine, defined as prevention and treatment strategies that take into account individual variability in genetics, environments, and lifestyles (Goossens et al., 2015). Precision medicine promises targeted treatments that are more effective for each individual than one-size-fits-all treatments designed for the average patient. In recent years, substantial efforts have been devoted to developing this concept, including the 2015 Precision Medicine Initiative launched by the White House.1 This wave is being driven in part by success stories from precision cancer drugs that markedly improve outcomes through genetically targeted treatment. For example, Gleevec is a precision cancer treatment developed for people with a particular genetic mutation that causes chronic myeloid leukemia. It has improved 5-year survival drastically, from 30 percent to almost 90 percent.2 Similar improvements in outcomes are being seen for several other cancers and other diseases as well.

Poldrack reflected on an optimistic dream of how a future precision neuroscience of brain health might process information from neuroscientific and other biological measurements to target the way that individuals are treated. A person who visits a physician with some kind of complaint related to cognitive or neurocognitive function would receive a range of tests that might involve imaging, genomics, gut microbiome analysis, or other new technologies. The test results would be analyzed by a complicated, indecipherable machine-learning system to generate specific recommendations about what the person can do to improve brain health—be it a medication, a particular diet, a certain type of exercise, or transcranial magnetic stimulation, for example. As a counterpoint to this optimistic vision, Poldrack cautioned that there are three “potholes” along the road to achieving the dream of precision neuroscience: (1) irreproducibility of results, (2) use of faulty predictive models, and (3) lack of understanding of intraindividual variability.

The Reproducibility Crisis

Poldrack explained that a focus on reproducibility is emerging in other domains of individualized precision medicine, because the reproducibility crisis undercuts the degree to which results in the current literature can be believed. The reproducibility crisis was described by John Ioannidis in his seminal 2005 paper on why most published research findings are false (Ioannidis, 2005). Ioannidis pointed out three features that drive higher or lower reproducibility in a particular area of study. The first is the size of studies: the smaller the studies conducted in a field (that is, the smaller their samples), the less likely their findings are to be true. The second is the number of tested relationships in a field: the more relationships that are tested, the less likely any given finding is to be true. The third is flexibility in designs, definitions, outcomes, and methods of analysis: greater flexibility decreases the likelihood that findings are true.

___________________

1 See https://obamawhitehouse.archives.gov/precision-medicine (accessed November 18, 2019).

2 See https://www.cancer.gov/research/progress/discovery/gleevec (accessed November 18, 2019).

A study published by Drysdale et al. (2016) in Nature Medicine used resting-state functional magnetic resonance imaging (fMRI) to identify connectivity biomarkers that define neurophysiological subtypes of depression. Clustering participants on these biomarkers suggested that individuals with depression have impaired connectivity in different brain systems, and the resulting subgroups differed substantially in their response to transcranial magnetic stimulation treatment. Although a replication of the full study has not yet been attempted, another group tried and failed to replicate the particular connectivity feature shown in the Drysdale study (Dinga et al., 2019). This reflects two broader concerns about imaging studies: (1) a lack of understanding about what replicates and (2) the inability to replicate results.

Insufficient Scanning Time

Estimating connectivity reliably requires substantial scan time, but most brain imaging studies currently collect far too little data about each individual. Poldrack estimated that reliable measurement of connectivity requires roughly 30–100 minutes of resting-state data per subject, depending on the degree of reliability desired, whereas most studies collect less than 10 minutes (Laumann et al., 2015). For instance, the Drysdale study collected between 4.5 and 10 minutes of data from each subject. This underlines the need to further investigate how the amount of data collected from each individual relates to the reliability of the results.
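One way to see why longer scans help is the Spearman–Brown prophecy formula from classical test theory, which predicts the reliability ρ_k of a measurement lengthened by a factor k from its current reliability ρ_1: ρ_k = kρ_1 / (1 + (k − 1)ρ_1). The Python sketch below treats additional blocks of resting-state data as parallel measurements. That is a simplifying assumption for illustration, not the method used by Laumann et al. (2015), and the starting value (reliability 0.4 from 5 minutes of data) is hypothetical.

```python
def spearman_brown(rho_1: float, k: float) -> float:
    """Predicted reliability when a measurement is lengthened k-fold."""
    return k * rho_1 / (1 + (k - 1) * rho_1)


def length_factor_needed(rho_1: float, target: float) -> float:
    """Inverse of Spearman-Brown: lengthening factor k needed to reach a target reliability."""
    return target * (1 - rho_1) / (rho_1 * (1 - target))


if __name__ == "__main__":
    # Hypothetical assumption: 5 minutes of resting-state data yields reliability 0.4.
    base_minutes, base_rho = 5.0, 0.4
    for target in (0.7, 0.8, 0.9):
        k = length_factor_needed(base_rho, target)
        print(f"target reliability {target:.1f}: about {k * base_minutes:.0f} minutes")
```

Under these hypothetical inputs, reaching a reliability of 0.8 requires lengthening the scan six-fold, to about 30 minutes, and the required time grows steeply as the target approaches 1.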

At the group level, findings across the brain imaging literature are not sufficiently reliable, said Poldrack. A number of meta-analyses drawing on around 100 neuroimaging studies of depression had reported significant differences in brain activity between people diagnosed with depression and healthy individuals (Müller et al., 2017). However, a research group with great expertise in neuroimaging meta-analysis performed a conceptual replication of that work. Across the same 100 studies, the group found no convergent differences in brain activity between healthy and depressed individuals, directly at odds with the findings of the previous meta-analyses (Müller et al., 2017).

Underpowered Studies with Insufficient Sample Sizes

The issues highlighted by Ioannidis are at the forefront of concerns about neuroimaging, said Poldrack. A large proportion of neuroscience research, including both human structural neuroimaging studies and animal studies, is badly underpowered, which undermines its reliability (Button et al., 2013). A study with 10 percent power will detect a true effect, when one exists, only 10 percent of the time. Controlling the type I error rate limits how often true null hypotheses are wrongly rejected, but it does not guarantee that the positive findings that are reported are true.
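The meaning of statistical power can be made concrete with a quick calculation. The sketch below uses a normal approximation to the power of a two-sided, two-sample comparison of a standardized effect size d (Cohen’s d) with n participants per group. The effect size of 0.5 in the usage loop is a hypothetical "medium" effect, and an exact t-test calculation would give slightly lower power for small samples.

```python
from statistics import NormalDist


def two_sample_power(d: float, n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate power of a two-sided, two-sample test of standardized effect d.

    Normal approximation; slightly overstates power relative to an exact t-test
    when samples are small.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    ncp = d * (n_per_group / 2) ** 0.5  # expected z-statistic when the effect is real
    # Probability of landing beyond either critical value.
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)


if __name__ == "__main__":
    for n in (10, 20, 50, 100):
        print(n, round(two_sample_power(0.5, n), 2))
```

With d = 0.5, 20 participants per group yields power of only about 0.35, so roughly two out of three such studies would miss a real effect of that size.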

The primary focus of research should not be the number of positive findings but how many of those positive findings are actually true. Positive predictive value is the probability that a positive result is true (Button et al., 2013). Statistical power substantially affects the ability to believe positive results that are published in the literature, Poldrack emphasized. In addition to being more likely to generate false results, underpowered studies are also more likely to produce results in which the effect sizes are overestimated. The overestimation of effect sizes for significant results is known as the Winner’s Curse.
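The winner’s curse can be reproduced with a small simulation. The sketch below is written under simplifying assumptions (one-sample studies, normally distributed data with a known standard deviation of 1, a one-sided z-test at α = 0.05, and a hypothetical true effect of 0.3) and records the estimated effect in each study that reaches significance.

```python
import random
import statistics


def winners_curse(true_d: float = 0.3, n: int = 20, n_studies: int = 5_000,
                  seed: int = 1) -> tuple[float, float]:
    """Simulate many one-sample studies of the same true effect.

    Each study draws n observations from Normal(true_d, 1) and applies a
    one-sided z-test (standard deviation known to be 1) at alpha = 0.05.
    Returns (fraction of studies that were significant,
             mean estimated effect among the significant studies).
    """
    rng = random.Random(seed)
    z_crit = 1.645  # one-sided critical value for alpha = 0.05
    significant = []
    for _ in range(n_studies):
        estimate = statistics.fmean(rng.gauss(true_d, 1.0) for _ in range(n))
        if estimate * n ** 0.5 > z_crit:
            significant.append(estimate)
    return len(significant) / n_studies, statistics.fmean(significant)


if __name__ == "__main__":
    for n in (20, 200):
        power, mean_sig = winners_curse(n=n)
        print(f"n={n}: power={power:.2f}, mean significant estimate={mean_sig:.2f}")
```

With n = 20, a study can reach significance only if its estimate exceeds 1.645/√20 ≈ 0.37, so every significant estimate overstates the true effect of 0.3; with n = 200, nearly all studies are significant and the average significant estimate sits close to the truth.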

To illustrate the concept of positive predictive value, Poldrack described the following hypothetical scenario:

Imagine you are going to do 100 studies, but your detector is broken so you only have random noise. If you controlled the false-positive rate at 5 percent, then on average, five of those 100 studies will come out with significant results. But the positive predictive value, the proportion of those positive results that are true, is zero, because the detector is broken. If the detector is fixed, it will begin to capture true signals, and the positive predictive value will increase.
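Button et al. (2013) express positive predictive value in terms of power (1 − β), the type I error rate α, and the pre-study odds R that a probed effect is real: PPV = (1 − β)R / ((1 − β)R + α). The Python sketch below implements that formula; the values of R and power in the usage example are hypothetical.

```python
def positive_predictive_value(power: float, prior_odds: float, alpha: float = 0.05) -> float:
    """PPV = power * R / (power * R + alpha), following Button et al. (2013).

    power      -- probability of detecting a real effect (1 - beta)
    prior_odds -- R, the pre-study odds that a tested effect is real
    alpha      -- false-positive rate for tests of null effects
    """
    true_positives = power * prior_odds
    return true_positives / (true_positives + alpha)


if __name__ == "__main__":
    # Broken detector: no real effects are ever probed, so R = 0.
    print(positive_predictive_value(power=0.8, prior_odds=0.0))
    # Hypothetical field where 1 in 5 tested effects is real (odds R = 0.25).
    for power in (0.2, 0.8):
        print(power, positive_predictive_value(power, prior_odds=0.25))
```

In the broken-detector case the PPV is zero no matter how many studies come out significant. In the hypothetical field, raising power from 0.2 to 0.8 lifts the PPV from 0.5 to 0.8.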

Poldrack plotted the sample sizes of the studies in the Müller et al. (2017) depression imaging meta-analysis as a function of year of publication. Current practice for reproducible research holds that 20 observations per cell is a minimum baseline for a study (Simmons et al., 2011); unless there is a compelling cost-of-data-collection justification, it does not make sense to collect fewer than 20 observations. In general, published data with a sample size of around 20 tend to reflect a more flexible sample size determination based on interim data analysis and other types of problematic analyses. A majority of studies in the meta-analysis had grossly insufficient samples. In other words, more than 600 people with depression were invited to volunteer for fMRI studies that were almost certain to generate either null or false results because of insufficient power.

This issue abounds in the brain health literature, said Poldrack. He carried out an informal PubMed search for the terms “brain health” and “fMRI,” yielding 22 studies published in 2011 or later. Based on the published sample sizes for each group in each study, 22 percent of the groups included fewer than 20 subjects. This undercuts the credibility of a substantial share of the results coming out of the brain health literature, he cautioned. Poldrack suggested that mandatory preregistration would contribute substantially to moving toward a neuroscience aimed at translating findings into more effective treatments (see Box 5-1).

Methodological Pluralism

Methodological flexibility is a major challenge in neuroimaging, because researchers have a great deal of latitude in how they analyze neuroimaging data. To highlight the amount of variability across neuroimaging research, one study analyzed a single event-related fMRI experiment using almost 7,000 unique analysis pipelines (Carp, 2012). Poldrack’s group is assessing the effects of this type of methodological pluralism in the Neuroimaging Analysis Replication and Prediction Study. They collected a dataset on a decision-making task at Tel Aviv University and distributed it to 82 research groups, 70 of which returned their decisions on a set of given hypotheses3 using their standard analysis methods, along with thresholded and unthresholded maps. Economists helped to run prediction markets to assess the researchers’ abilities to predict outcomes. The findings of the study are still being prepared for publication, but the team has found that analytic variability leads to inconsistent results (Botvinik-Nezer et al., 2019). Even with exactly the same data from a reasonably well-powered study, differences across groups in how the data are analyzed are substantial enough to produce a high degree of variability in the decisions the groups make about particular hypotheses.

Faulty Predictive Models

Poldrack then turned to the second pothole: faulty predictive models. Predictive models are essential to precision science, and a focus on developing biomarkers has been a rising trend in the neuroimaging literature over the past decade, yet many researchers misrepresent the concept in a way that oversells it. A claim in favor of a biomarker is generally a claim about prediction, and in the literature such claims are often based on an observed correlation or regression effect within a single dataset. He deemed this fundamentally problematic, because the correlation observed within a dataset almost always overestimates how well the model will predict in a new dataset: the same data are being used both to fit the model and to assess how well it fits.

An observed correlation does not equate to predictive accuracy (Copas, 1983). This issue is known as shrinkage in statistics and as overfitting in machine learning. In machine learning, out-of-sample predictive accuracy is generally quantified using cross-validation or, better still, by testing on an independent dataset. Cross-validation involves iteratively training a model on a subset of the data (the training data) and then testing the accuracy of the model’s predictions on the remaining data (the validation data). Poldrack’s group reviewed the recent fMRI literature and found that about half of the publications claiming “prediction” actually demonstrated only an in-sample correlation or regression effect within a single sample (Poldrack et al., 2019). The problem is that in-sample prediction inflates estimates of predictive accuracy. As model complexity increases, in-sample prediction can appear significant even when there is no true signal; cross-validation or new data then reveals that what looks like significant classification accuracy is actually the result of overfitting to the training data.

___________________

3 For example, “Is there activation in area X for contrast Y in this study?”
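The gap between in-sample fit and out-of-sample prediction can be demonstrated with pure noise. The sketch below (Python with NumPy) draws a random outcome that is unrelated to 25 random features for 50 hypothetical participants, fits an ordinary least-squares model, and compares the in-sample R² with the R² obtained from 5-fold cross-validation. The sizes and the choice of linear regression are illustrative assumptions, not a reconstruction of any study discussed here.

```python
import numpy as np


def _fit_predict(X_train, y_train, X_test):
    """Ordinary least squares with an intercept; returns predictions for X_test."""
    A_train = np.column_stack([np.ones(len(X_train)), X_train])
    coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
    A_test = np.column_stack([np.ones(len(X_test)), X_test])
    return A_test @ coef


def _r2(y_true, y_pred):
    """Coefficient of determination relative to the mean of y_true."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def in_sample_vs_cv_r2(n=50, p=25, k=5, seed=0):
    rng = np.random.default_rng(seed)
    X = rng.standard_normal((n, p))  # random "features"
    y = rng.standard_normal(n)       # outcome with no true relation to X

    # In-sample: the same data are used to fit and to evaluate the model.
    in_sample = _r2(y, _fit_predict(X, y, X))

    # k-fold cross-validation: each participant is predicted by a model
    # that never saw that participant during training.
    preds = np.empty(n)
    for test_idx in np.array_split(rng.permutation(n), k):
        train_idx = np.setdiff1d(np.arange(n), test_idx)
        preds[test_idx] = _fit_predict(X[train_idx], y[train_idx], X[test_idx])
    cv = _r2(y, preds)
    return in_sample, cv


if __name__ == "__main__":
    in_sample, cv = in_sample_vs_cv_r2()
    print(f"in-sample R^2: {in_sample:.2f}, cross-validated R^2: {cv:.2f}")
```

Because the outcome is noise, any apparent fit is overfitting. The in-sample R² averages about p/(n − 1), roughly 0.5 here, whereas the cross-validated R² typically falls below zero, meaning the model predicts new participants worse than simply guessing the mean.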

Small samples also inflate estimates of predictive accuracy (Varoquaux, 2018), and the decline of effect sizes over time and with increasing sample size is particularly problematic when machine-learning tools are used. In brain imaging, early studies with small samples tended to claim very high accuracy (even up to 100 percent) in predicting psychiatric diagnoses from imaging data. In almost every case, direct evidence shows that these claims resulted from the use of small samples (Varoquaux, 2018). Poldrack’s group examined the sample sizes of publications claiming to show prediction based on fMRI and found that more than half had samples of fewer than 50 and 18 percent had samples of fewer than 20.4 “Doing machine learning with sample sizes smaller than 20 is almost guaranteed to give you nonsense,” he warned.
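Part of the problem is simple sampling error: an accuracy measured on n test cases is a proportion, so its uncertainty shrinks only with √n. The sketch below computes the half-width of a normal-approximation 95 percent confidence interval for an accuracy estimated from n independent test cases. It likely understates the real uncertainty, because it ignores the additional variability introduced by how data are split in cross-validation; Varoquaux (2018) discusses these error bars in more detail.

```python
import math


def accuracy_ci_halfwidth(n_test: int, accuracy: float = 0.5, z: float = 1.96) -> float:
    """Half-width of a normal-approximation 95% CI for a proportion-correct score."""
    return z * math.sqrt(accuracy * (1.0 - accuracy) / n_test)


if __name__ == "__main__":
    for n in (20, 50, 100, 1000):
        print(n, round(accuracy_ci_halfwidth(n), 3))
```

With 20 test cases, an observed accuracy of 50 percent is compatible with anything from roughly 28 to 72 percent; even with 100 cases the interval is still about ±10 percentage points.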

As the field of neuroscience comes to appreciate the problems involved in developing predictive models and how they may contribute to invalid biomarkers, it may be instructive to look at other fields’ requirements for generating biomarkers. Biomarkers for cancer or other diseases are generally validated in very large samples, on the order of tens of thousands. Damien Fair asked whether studies with small samples should estimate accuracy with cross-validation within the sample, or whether the same result would be obtained by training on a small sample and testing on a completely independent dataset. Poldrack replied that results will be much more variable with a small sample, but training on one small sample and then testing on an independent small sample will help to temper the variability and avoid overfitting.

Poor Understanding of Intraindividual Variability Over Time

Poldrack turned to his third pothole on the road to precision neuroscience: poor understanding of intraindividual variability over time. A growing body of knowledge provides insight into how brain function changes over time at both ends of the timescale, from milliseconds to decades (Sowell et al., 2004). However, much less is known about the middle of that range, that is, how brain function changes across days, weeks, or months. It has become clear that understanding brain disorders requires understanding individual variability in brain function. A person with schizophrenia or bipolar disorder, for example, will tend to fluctuate significantly between high and low levels of functioning in daily life (Bopp et al., 2010). Over the course of a few weeks, an individual can go from completely disabled to reasonably functional (Kupper and Hoffmann, 2000). Labeling somebody as having a particular disorder glosses over the large day-to-day and week-to-week variability in how that disorder is expressed.

___________________

4 Poldrack, R. Workshop presentation—Grand Views and Potholes on the Road to Precision Neuroscience. Available at http://www.nationalacademies.org/hmd/Activities/Aging/BrainHealthAcrossTheLifeSpanWorkshop/2019-JUN-26.aspx (accessed March 12, 2020).

Nearly all of human neuroscience assumes that the functional organization of the brain is stable outside of plasticity, development, and aging, but until very recently, this assumption had not been tested empirically. Understanding the variability and dynamics of human brain function at multiple time scales is critical for the development of precision neuroscience and neuroscientific interventions, he said. In 2013, Poldrack undertook a study called the My Connectome project, in which he collected as much data about himself as possible, including imaging data from more than 100 scans (resting fMRI, task fMRI, diffusion MRI, and structural MRI), behavioral data (mood, lifestyle, and sleep), and other biological measurements (Laumann et al., 2015; Poldrack et al., 2015). The project found that the pattern of variability within an individual is fundamentally different from the pattern of variability between individuals. Across Poldrack’s sessions, variability was highest in his primary sensory and motor networks. In imaging data from 120 individuals, by contrast, the variability in somatomotor and visual networks was dwarfed by variability in the default, frontoparietal, and dorsal attention networks.

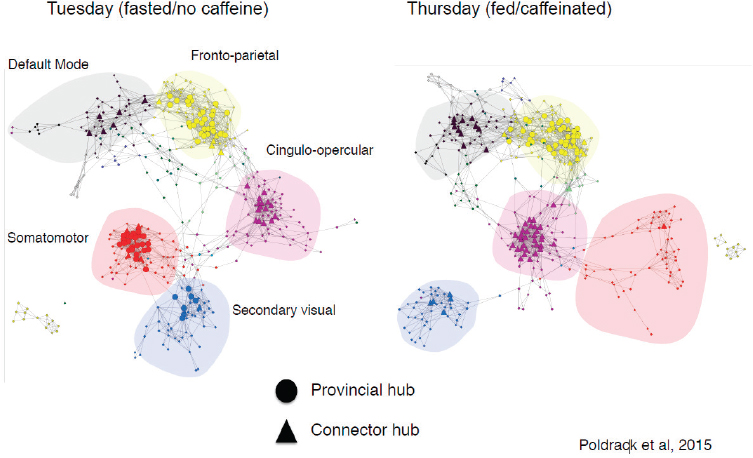

This highlights the need to study individual variability in more depth, in order to identify factors that may drive variance in resting-state connectivity within an individual. The My Connectome project revealed that intake of caffeine and food affects large-scale network structure, for example (Poldrack et al., 2015). Figure 5-1 shows that on days in which he fasted and did not drink caffeine in the morning before the scan, his somatomotor network and the secondary visual network were highly connected; on mornings in which he consumed food and caffeine before the scan, those networks were essentially disconnected. He emphasized that this is not an overall degradation in connectivity—it is a real change in the structure of connectivity. This has serious implications regarding the effect of these types of factors on neuroimaging.

Longer-Scale Dynamics Within Individuals

Evidence suggests that there are also longer-scale dynamics within individuals. An analysis of the data across the entire My Connectome session looked for patterns of connectivity recurring over time, and found two “temporal metastates” that were present throughout (Shine et al., 2016). Both seemed to be related to his being attentive, concentrating,

SOURCES: As presented by Russell Poldrack at the workshop Brain Health Across the Life Span on September 24, 2019; Poldrack et al., 2015.

or lively versus his being drowsy, sleepy, sluggish, or tired. These metastates were not significantly correlated with caffeine or food intake. When he was drowsy or sluggish, there was much greater integration of the visual and somatomotor networks, while the other networks became slightly more concentrated on the days when he was fed and caffeinated. Despite the wealth of potential knowledge to be mined from these types of individual scanning studies, very little of this work has been done—the number of dense longitudinally scanned individuals remains at approximately less than 20. Denser data collection from individuals will need to increase in order to better understand variability at multiple scales with sufficient power. He suggested that the field of imaging should draw from the literature on aging about characterizing the dynamics of behavioral processes at multiple scales over time (Ram, 2015). Different forms of intraindividual variability are situated within the context of much longer scale intraindividual change, as well as interindividual variability.

Discussion

Damien Fair, associate professor of behavioral neuroscience, associate professor of psychiatry, and associate scientist at the Advanced Imaging Research Center at the Oregon Health & Science University, asked about behavioral measurements that assess outcomes such as cognitive ability or clinical status. Poldrack replied that his group has recently published papers on this topic, including one on the nature and quality of behavioral measures used in the domain of self-regulation and self-control. He noted that measures used in experimental psychology are not typically designed with reliability in mind to the same extent as survey measures are. As expected, that study found that survey measures had good reliability. The behavioral measures, on average, had poor reliability, particularly those designed to measure contrasts in task performance across different conditions.
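Classical test theory offers one explanation for why contrast measures fare poorly. For a difference score D = X − Y between two conditions with equal variances, reliability is r_DD = (½(r_XX + r_YY) − r_XY) / (1 − r_XY), where r_XX and r_YY are the reliabilities of the two conditions and r_XY is their correlation. Because performance in two conditions of the same task is usually highly correlated, the difference can be far less reliable than either component. The values in the sketch below are hypothetical and are not taken from the study Poldrack described.

```python
def difference_score_reliability(r_xx: float, r_yy: float, r_xy: float) -> float:
    """Reliability of X - Y under classical test theory, assuming equal variances."""
    return (0.5 * (r_xx + r_yy) - r_xy) / (1.0 - r_xy)


if __name__ == "__main__":
    # Hypothetical: each condition alone is highly reliable (0.9),
    # but the contrast loses reliability as the conditions correlate more.
    for r_xy in (0.5, 0.8, 0.85):
        print(r_xy, round(difference_score_reliability(0.9, 0.9, r_xy), 2))
```

With component reliabilities of 0.9, the reliability of the contrast drops from 0.8 to 0.5 to about 0.33 as the correlation between conditions rises from 0.5 to 0.8 to 0.85.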

Generally, tasks used in cognitive psychology to isolate particular cognitive components are not useful as measures of individual differences. Measures such as the Stroop task can be useful and produce a robust effect at the group level, but their test-retest reliability is low, so they are not reliable enough to be valid measures of individual differences. Huda Akil, codirector and research professor of the Molecular and Behavioral Neuroscience Institute and Quarton Professor of Neurosciences at the University of Michigan, added that a measure that is useful at the group level is not necessarily good as an individual measure; this important distinction should be taught to researchers more prominently.

Akil remarked that brain science has generated a large body of real and actionable knowledge, but it would be helpful to know where the failures lie, for example, whether they stem from the nature of the measurements, the level of analysis, the use of human versus animal models, and so on. Poldrack replied that for imaging, certain findings about the organization of the brain are replicable and reliable. He drew a distinction, however, between group mean activation on the one hand and correlations of individual differences and group differences on the other. The real failures of reproducibility are being seen in differences between diagnostic and control groups and in correlations across individuals, simply because the sample sizes are far too small.

Part of the issue is measurement, but another issue is that insufficient data are being collected at the individual level. “We should not expect DSM-5 diagnoses to really cleanly carve the brain at its joints, because we know that they are not biologically coherent phenotypes,” he said. Overlaid on these issues are the problems of analytic variability, because different methods of analyzing data will naturally lead to different results, and of publication bias, because journals are often unwilling to publish null results. This makes it tempting for researchers to perform many different analyses until one of them generates a non-null result. These issues are compounded in underpowered studies of individual differences or group differences.
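The cost of running many analyses until one succeeds can be estimated directly. If k analyses of data with no true effect were independent, the chance that at least one reaches p < α would be 1 − (1 − α)^k. The sketch below computes that value and checks it by simulation, using the fact that p-values are uniformly distributed under the null hypothesis. Real analysis pipelines applied to the same data are correlated, so the actual inflation is smaller than this independent-tests figure, though it can still be substantial.

```python
import random


def chance_any_significant(k: int, alpha: float = 0.05) -> float:
    """P(at least one of k independent null analyses has p < alpha)."""
    return 1.0 - (1.0 - alpha) ** k


def simulate_best_of_k(k: int, alpha: float = 0.05, n_sims: int = 20_000, seed: int = 7) -> float:
    """Fraction of simulated null datasets where the smallest of k p-values is below alpha."""
    rng = random.Random(seed)
    hits = sum(
        min(rng.random() for _ in range(k)) < alpha  # p-values ~ Uniform(0, 1) under the null
        for _ in range(n_sims)
    )
    return hits / n_sims


if __name__ == "__main__":
    for k in (1, 5, 20):
        print(k, round(chance_any_significant(k), 3), simulate_best_of_k(k))
```

With 20 independent attempts, the chance of at least one false positive is about 64 percent.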

Gagan Wig, associate professor of behavioral and brain sciences at the Center for Vital Longevity at the University of Texas at Dallas, commented that identification of changes in measures of brain and behavior, or even assessment of reliability of measures of brain and behavior, could be confounded by differences in practice effects (unanticipated learning of the testing procedures), although he noted that this learning itself could also potentially provide an informative additional signal about individual variability. Poldrack replied that test-retest reliability could be useful if looking for a stable measure of an individual, but not if the intent is to be sensitive to change. The main idea is that the psychometric features of the task being used need to be appropriate for the construct that the study is attempting to measure. When building measures that are intended to be sensitive to learning, for example, it is important to ensure that the measures are reliably sensitive to learning—not necessarily in the test-retest sense, but perhaps in some other sense.

PANEL DISCUSSION ON THE WAY FORWARD IN MEASUREMENT AND RESEARCH

Akil asked the panelists to reflect on key issues in measuring brain health. With the caveat that much more work is needed to define brain health as a construct that can be measured at all, Poldrack noted that the field has embraced big data in only a limited way: collecting a small amount of data from a relatively large number of people. Ultimately, the ability to carry out large-scale analyses is limited by the quality of the individual measurements. More attention is needed to whether a stable phenotype is actually being measured at the individual level, even if it is stable only within a day. The field should also be looking across phenotypes, he said, by collecting many different measurements from the same individuals. Monica Rosenberg, assistant professor in the Department of Psychology at the University of Chicago, emphasized the importance of sharing and integrating data and models (including feature weights and prediction algorithms) to allow for external validation and help move the field toward the identification of real biomarkers.

Research Domain Criteria Project

Elizabeth Hoge, director of the anxiety disorders research program at the Georgetown University Medical Center, noted that the Research Domain Criteria (RDoC)5 project offers an alternative framework for mental illness to the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5). It focuses more on studying and measuring dimensions such as cognitive function and affective valence, rather than on measuring disease. The aim is for RDoC to yield more connections to biology and to facilitate translation between animal and human models. Fair said that RDoC is helpful in moving away from the discrete DSM categories, but it has its own limitations that will need to be addressed.

___________________

5 See https://www.nimh.nih.gov/research/research-funded-by-nimh/rdoc/index.shtml (accessed November 18, 2019).

Akil expressed concern that like the DSM, the RDoC project was also developed by committee and is not biologically based, so one orthodoxy is essentially being replaced with another. The advantage of RDoC is that it encourages people to think in terms of function rather than disease; moving away from the disease-based approach will require considering dimensionality of different phenotypes. However, the RDoC project would benefit from being more biologically informed and appropriately validated. The reliability of measures will need to be established in order for biology to become the framework to inform treatment and prevention in the field of brain health, in keeping with the shift toward precision medicine.

Colleen McClung, professor of psychiatry and clinical and translational science at the University of Pittsburgh, remarked that the animal research community is struggling somewhat with the advent of RDoC. After much effort building animal models that capture various characteristics and brain–body features of psychiatric diseases, researchers are now being asked to study each characteristic in isolation. RDoC has been helpful in encouraging cognitive neuroscience to adopt a dimensional perspective, said Rosenberg. Symptoms exist on a continuum, so a dimensional approach, such as predictive modeling of continuous measures of behavior or symptoms or data-driven subtyping, can capture symptom variability and complement binary or categorical classifications. For example, models that predict attention-deficit hyperactivity disorder (ADHD) symptoms yield significant predictions even in people without an ADHD diagnosis, reflecting the broader variability in attention function among healthy people.

Lis Nielsen, chief of the Individual Behavioral Processes Branch of the Division of Behavioral and Social Research at the National Institute on Aging, said that measures in psychological domains do not necessarily need to be derived from biology. They can be based on functional or behavioral categories; mental health and subjective states, for instance, can be assessed by self-report or performance-based tasks. Purely behavioral or purely psychological measures can achieve the same standard of quality as a biological measure. Akil clarified that she is calling for a system that is empirically evidence based, rather than committee based.

RDoC also harks back to the issue of trait versus state, said Akil. She believes that coping and affective disorders contain nested concepts that could be disentangled biologically, genetically, and environmentally. Viewed broadly, the tendencies that we might call “temperament” are changeable but fairly stable phenotypes. However, variability in features such as coping style or willingness to explore is adaptive for a species in an intermediate context, such as responding to stress during adolescence, or in moment-to-moment responses. These time-nested ways of thinking, both behavioral and biological, could be helpful in thinking about measurement. Poldrack said that the Midnight Scan Club data have shown that, in general, connectivity patterns are quite stable within an individual. Rosenberg added that stable patterns have been observed consistently across several datasets that used high-frequency sampling of a small number of individuals, which bodes well for building models that predict trait-like behaviors.

McClung said that chronotypes are relatively stable after adolescence and before the age of 65 years or so. In fact, certain polymorphisms in circadian genes have been associated with traits of being a morning person or a nighttime person. Akil suggested that in the context of defining and measuring brain health, these are all examples of elements of the general framework or signature for how an individual is functioning; changes occur when things are either declining or improving.

Defining Resilience

Luke Stoeckel, National Institutes of Health, proposed a two-part working definition of resilience in the context of brain health. A person who is resilient could be defined as (1) having a variety of neurocognitive tools and networks that can be activated in the context of internal and external environmental and psychological challenges, and (2) being able to adaptively activate these tools and networks to optimize function in response to environmental and psychological challenges. Poldrack suggested that resilience could be framed as the ability to bring a wide range of cognitive tools to bear in challenging situations. In a sense, resilience is the opposite of test-retest reliability: people with more flexibility in their cognitive toolkit will look less like themselves from time point to time point. He suggested treating this variability and flexibility as a phenotype. Akil sketched her own definition of resilience. Neuroplasticity is a finite resource, but there may be ways to increase or maintain reserves of neuroplasticity. Similarly, maintaining intellectual, emotional, and physical flexibility is a component of being resilient that speaks directly to having more affective or cognitive resources to draw upon.
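If variability and flexibility are to be treated as a phenotype, as Poldrack suggested, they need to be quantified. One simple operationalization, used here as an illustrative assumption rather than a definition proposed at the workshop, is the intraindividual standard deviation (iSD): each person’s standard deviation across repeated measurement occasions, reported alongside that person’s mean. The data in the sketch below are hypothetical.

```python
import statistics


def person_mean_and_isd(sessions: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Map each person to (mean across sessions, intraindividual SD across sessions)."""
    return {
        person: (statistics.fmean(scores), statistics.stdev(scores))
        for person, scores in sessions.items()
    }


if __name__ == "__main__":
    # Hypothetical repeated scores: same average level, very different variability.
    data = {"person_a": [10.0, 10.0, 10.0, 10.0], "person_b": [5.0, 15.0, 5.0, 15.0]}
    for person, (mean, isd) in person_mean_and_isd(data).items():
        print(person, mean, round(isd, 2))
```

Both hypothetical people have the same mean, so an averaged measure cannot tell them apart; the iSD (0 versus about 5.77) can.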

In early life, the brain has a large degree of flexibility and many available options and tools, so the natural pruning that occurs is necessary. However, this pruning should not be excessive to the point of eliminating too many of those options and coping tools, which would preclude the ability to respond effectively to adversity. In this context, resilience can be defined as maintaining access to a sufficient range of cognitive tools and an adequate degree of neuroplasticity over time. The ability to “roll with the punches” and rebound from adversity, for example, partly depends on having more than one coping strategy available. However, this personalized definition does pose certain challenges for measuring brain health, because it would require individualized measurement of a person’s cognition.