2

Evaluation of the TSCA Systematic Review Approach

INTRODUCTION

As scientific evidence on the risks of chemicals has grown, both in scope and in the contributing disciplines, the need for evidence-based approaches to addressing risks to human and ecosystem health has been increasingly recognized. Methods first developed and used in clinical medicine and other areas for assembling and evaluating bodies of evidence have now been extended to assessing risks to human health and the environment. Evidence-based methods—with their transparency, objectivity, comprehensiveness, and reproducibility—serve as a foundation of modern clinical practice. Evidence-based methods include protocols and comprehensive documentation of assumptions and decisions in compiling evidence, which allows for tracing of every step of the evaluation. This transparency is one primary advantage of using these practices. The principal tool for evaluating the evidence base on a topic is systematic review. Consequently, over the past decade, several approaches to applying this methodology to assessing evidence on risks of environmental agents have been elaborated, such as by the European Food Safety Authority (EFSA), the Office of Health Assessment and Translation (OHAT) of the National Toxicology Program (NTP), the Texas Commission on Environmental Quality, and the Navigation Guide (Morgan et al. 2018; OHAT 2019; Schaefer and Meyers 2017; Woodruff and Sutton 2014). Additionally, the World Health Organization and the International Labour Organization have collaborated to develop a risk-of-bias tool for assessing data on prevalence of exposure. The newly developed methods are based on review of existing methods, influenced by consultation with experts, tested for validity and reliability, and published in the peer-reviewed literature (Pega et al. 2020). Methods have also been proposed for applying systematic review methods to risk evaluations for ecological receptors in a framework integrated with human health risk evaluations (Suter et al. 2020).

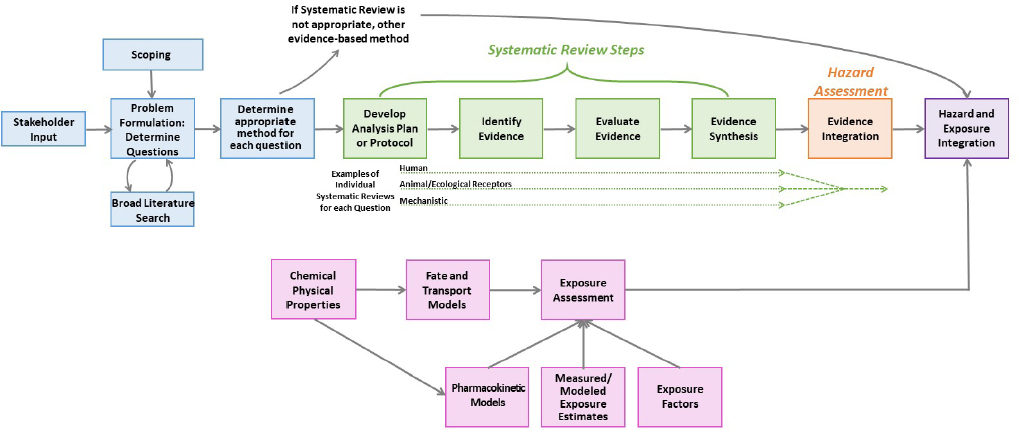

A chemical risk assessment includes an initial problem formulation as well as hazard assessment and dose-response assessment, exposure assessment, and risk characterization, as appropriate (NRC 2009). Figure 2-1 provides a schema for how systematic review is applied within chemical risk assessments. Prior to the conduct of a systematic review, planning and problem formulation should take place (see blue boxes in Figure 2-1). The planning and problem formulation should include stakeholder engagement and broad literature searching to find the evidence on the topic, and end with the identification of the most important questions and the best approach for answering such questions. The research questions and the approach should inform the first step of the systematic review—the development of the protocol. The planning and problem formulation should also determine if another evidence-based method could be applied to answer the research question required for the risk evaluation.

If a systematic review is the appropriate approach, development of the protocol is the first step of the review (see green boxes in Figure 2-1). Evidence identification, which includes searching and screening the literature and finding the reports as prescribed in the protocol, is the next step. Evidence evaluation follows. This step includes evaluation of the internal validity of the individual studies (i.e., Are the study results at risk of bias?), using the appropriate risk-of-bias tool for the type of study being reviewed. Synthesis consists of a qualitative evaluation of the evidence and can be complemented by a quantitative pooling—a meta-analysis.

This synthesis of the various specific streams of evidence is followed by hazard assessment (see orange box in Figure 2-1) with integration of the multiple evidence streams of human, animal and other ecological receptors, and mechanistic findings. Gathering information on human and ecological exposures could also be approached with systematic review, but systematic review tools for those questions are not yet well developed (see pink boxes in Figure 2-1). For this reason, these steps are not shown as including the systematic review steps of protocol development, evidence identification, evaluation, and synthesis. Exposure and hazard data are integrated to characterize risk (see purple box in Figure 2-1).

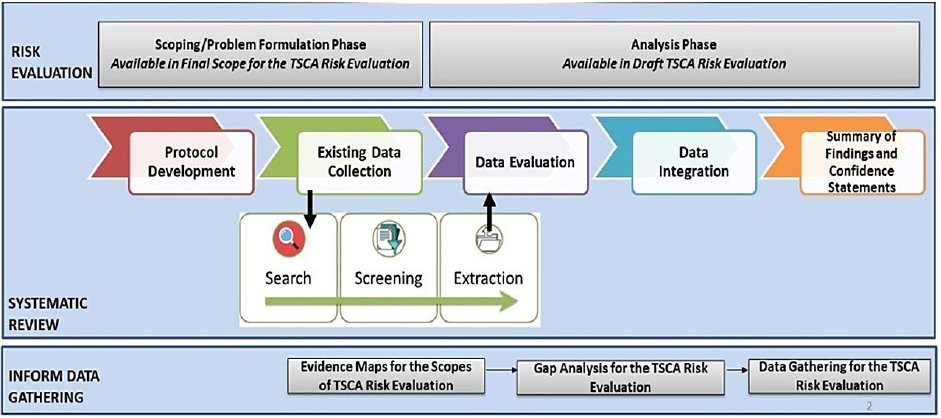

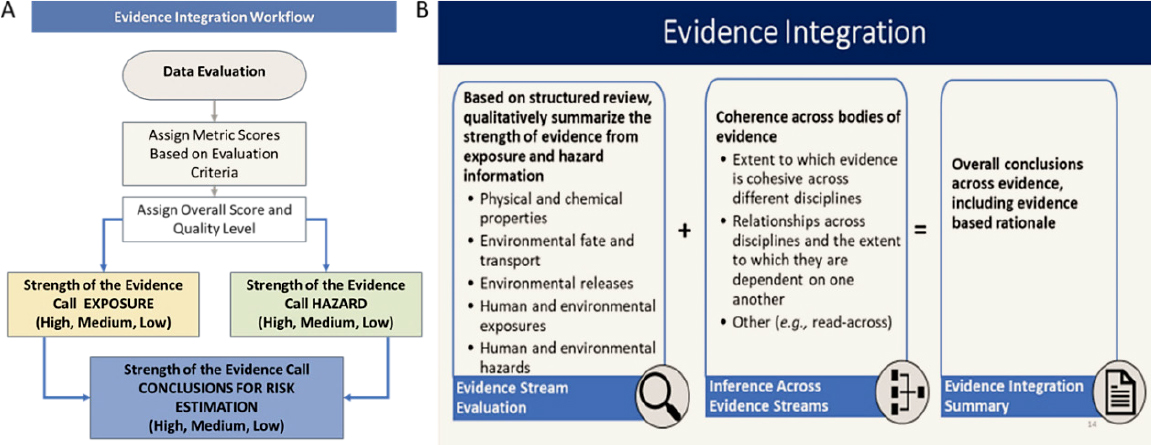

Figure 2-2 illustrates the Office of Pollution Prevention and Toxics’ (OPPT’s) approach to systematic review, which differs to an extent from the description in Figure 2-1 and includes systematic review as part of the broader process of risk evaluation. The committee has organized this chapter by the steps that are integral to systematic review as used generally (i.e., matching the current standards outlined by the Institute of Medicine [IOM] report Finding What Works in Health Care: Standards for Systematic Reviews [Bero et al. 2018; IOM 2011]) rather than following the specific steps outlined by OPPT. These steps, per the IOM report, are protocol development, evidence identification, evidence evaluation, and evidence synthesis. The systematic review protocol is informed by the problem formulation that precedes it. The evidence identified and evaluated for the systematic review is synthesized, and then the evidence from the various streams is brought together in the data integration step to determine if a hazard exists. If the review conducted by OPPT is indeed a systematic review, then the committee expects to find each of the steps of a systematic review (even if not specifically called out as such). Additionally, the committee would expect all systematic reviews to meet the definition and principles from the IOM (2011) report: explicit prespecified methods to identify, select, and synthesize the evidence from studies. When reviewing the methods and assessments used by OPPT, the committee compared all streams of evidence that OPPT specified as subject to the systematic review process against this definition.

To determine the approach to systematic review being used within the Toxic Substances Control Act (TSCA) risk evaluations, the committee reviewed the Draft Risk Evaluation for Trichloroethylene (TCE) and the Risk Evaluation for 1-Bromopropane (n-Propyl Bromide) (1-BP) (EPA 2020a,c) and considered comments on the evaluations from the public and from peer-review evaluations (e.g., the Science Advisory Committee on Chemicals), as well as OPPT’s responses to the comments.

Systematic review approaches have been used widely and increasingly to assemble the evidence needed to assess human health and ecological receptors. The number of such systematic reviews focusing on environmental risks doubled between 2016 and 2019 (Whaley et al. 2020). Yet, the use of systematic review to collect, evaluate, and synthesize the non-hazard evidence streams required for TSCA risk evaluations, such as data on exposure, fate and transport, and chemical and physical properties, is not established, and very little precedent exists for applying systematic review to these streams of evidence. Within the U.S. Environmental Protection Agency (EPA), the Guidelines for Human Exposure Assessment, the Guidelines for Ecological Risk Assessment, and the operating procedures for the use of the ECOTOXicology (ECOTOX) knowledgebase (EPA 1998, 2019b, 2020b) dictate how exposure, fate and transport, and physical chemical property data should be assembled for decisions about risks to human health and ecological receptors.

The agency is beginning to consider how to advance systematic review of these other streams of data. A recent paper from EPA’s Office of Research and Development (ORD) describes considerations required to advance systematic review for exposure science (Cohen Hubal et al. 2020). The authors describe how the tools for searching and organizing the literature can be applied to evaluating exposures. The article discusses Population (including animal or plant species), Exposure, Comparator, and Outcome (PECO) statements but stops short of addressing how PECO could be used to assemble exposure data. A checklist is provided for evaluating exposures, but, as written, it is proposed for evaluating exposures within the context of environmental epidemiology. The paper argues that in scientific literature, exposure information should be presented in a way that facilitates the determination of applicability to a PECO statement. Another paper authored by scientists in ORD proposes that systematic review and weight of evidence (WOE) are integral to ecological and human health assessments and provides an integrated framework, but the paper does not detail a process for how systematic review should be applied to all streams of evidence that are integrated to make a final risk determination (Suter et al. 2020).

The committee evaluated the systematic review of data on exposure, fate and transport, and chemical and physical properties similarly to how it evaluated other streams of evidence, for example by asking whether there were explicit prespecified methods to identify, select, and synthesize the evidence from studies. The committee also considered whether OPPT followed the appropriate agency guidelines for these evidence streams (i.e., Guidelines for Human Exposure Assessment, the Guidelines for Ecological Risk Assessment, and the operating procedures for the use of the ECOTOX knowledgebase [EPA 1998, 2019b, 2020b]). The committee chose to use these agency sources as a reference because the Guidelines for Human Exposure Assessment are cited in the approach described for estimating consumer exposures in TSCA evaluations on the EPA website,1 and the Guidelines for Ecological Risk Assessment state that they are intended to be agency-wide.

___________________

1 See https://www.epa.gov/tsca-screening-tools/approaches-estimate-consumer-exposure-under-tsca, accessed November 13, 2020.

GENERAL FINDINGS

In planning its approach, the committee intended to review all systematic reviews (e.g., those focused on hazards and exposures) conducted within the Draft Risk Evaluation for Trichloroethylene and the Risk Evaluation for 1-Bromopropane (n-Propyl Bromide) with a tool used to assess bias in systematic reviews (AMSTAR-2) (Shea et al. 2017). Given that systematic review has been applied within the human health hazard assessment, the committee piloted this approach by reviewing the hazard evaluation of the TCE evaluation. The committee’s strategy was to test whether the use of the AMSTAR-2 instrument would be helpful and, if so, then the instrument would also be applied to assess the OPPT approach for the other streams of evidence in the TCE and the 1-BP evaluations. In this pilot, the committee found that the TCE evaluation was lacking adequate descriptions of many elements of a systematic review—the details about review methods that are needed to apply the AMSTAR-2 tool were either absent or provided in a disparate and varied set of documents, not all of which were referred to in the risk evaluation.

In this evaluation with the AMSTAR-2, four committee members reviewed the hazard stream in the TCE risk evaluation. In that pilot attempt only the element “Does this review contain the elements of a patient-intervention-comparison-outcome (PICO) statement?” received a positive evaluation by all reviewers. All reviewers gave a partial yes to the elements “Did the review authors use a comprehensive literature search strategy?” and “Did the review authors describe the included studies in adequate detail?” For all other elements of the AMSTAR-2 tool, reviewers gave a “no” response. The overall confidence in the results of the TCE hazard review would be considered “Critically Low,” indicating that the review “should not be relied on to provide an accurate and comprehensive summary of the available studies.” Given that the TCE risk evaluation was released in February 2020, about 1.5 years after the first risk evaluation under TSCA, the committee judged this document to be a reasonable exemplar of the program’s processes for the first 10 risk evaluations. The committee also concluded that the quality of the systematic review was unlikely to be better for the exposure, chemical properties, and fate and transport streams of evidence, as systematic review is not as well developed in those areas. Consequently, the approaches to these evidence streams were given a general review and the AMSTAR-2 tool was not used.
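The step from item-level responses to an overall rating can be sketched in code. The following is a minimal illustration, not the committee's procedure: it assumes the rating scheme and the set of critical items (2, 4, 7, 9, 11, 13, and 15) published with the instrument (Shea et al. 2017), assumes that the three quoted elements correspond to items 1, 4, and 8, and makes the simplifying assumption that only a "no" response counts as a weakness. The function and variable names are hypothetical.

```python
# Illustrative sketch only. The critical items follow Shea et al. (2017);
# counting only "no" as a weakness is a simplifying assumption.
CRITICAL_ITEMS = {2, 4, 7, 9, 11, 13, 15}


def overall_confidence(responses):
    """Rate overall confidence from a dict of item number -> response.

    Responses are "yes", "partial yes", or "no".
    """
    weaknesses = {item for item, answer in responses.items() if answer == "no"}
    critical_flaws = len(weaknesses & CRITICAL_ITEMS)
    noncritical = len(weaknesses - CRITICAL_ITEMS)
    if critical_flaws > 1:
        return "Critically Low"
    if critical_flaws == 1:
        return "Low"
    if noncritical > 1:
        return "Moderate"
    return "High"


# Response pattern described for the pilot: one "yes", two "partial yes",
# and "no" for every other item (item numbering is an assumption).
pilot = {item: "no" for item in range(1, 17)}
pilot[1] = "yes"
pilot[4] = "partial yes"
pilot[8] = "partial yes"

print(overall_confidence(pilot))  # Critically Low
```

Under these assumptions, six critical items receive a "no," so the rating falls in the lowest category regardless of how the partial responses are counted.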

Another crosscutting finding, discussed in detail in the evidence integration section of this chapter, relates to terminology and specifically to the interchangeable use of the terms “weight of evidence” and “systematic review.” The Procedures for Chemical Risk Evaluation under the Amended Toxic Substances Control Act, referred to as the “Risk Evaluation Rule” (40 CFR Part 702), specify that weight of the scientific evidence “means a systematic review method.” However, this definition may not be intended to mean a systematic review as defined by the IOM. Furthermore, the Draft Risk Evaluation for Trichloroethylene refers to a “weight of the evidence analysis” for an individual outcome involving successive determinations: first, considering individual lines of evidence; and second, integrating these disparate lines of evidence. The committee considers these two steps as data synthesis and data integration, respectively, and notes that the integration step is outside of systematic review. It is worth noting that a 2014 National Research Council (NRC) report found that the terms “systematic review” and “weight of evidence analysis” have been used interchangeably, leading to confusion. That report distinguished systematic review as including “protocol development, evidence identification, evidence evaluation, and an analytic summary of the evidence” while WOE analysis is a judgment-based process to infer causation that follows the systematic review (NRC 2014, p. 4). That report found “evidence integration to be more useful and more descriptive of the process that occurs after completion of systematic reviews” (NRC 2014, p. 4).

The remainder of this chapter is organized by systematic review step (problem formulation and protocol development, evidence identification, evidence evaluation, evidence synthesis, and evidence integration). For each step, the committee describes “the state of the practice,” or how the step is generally conducted; describes how the committee thinks the step is being conducted within TSCA risk evaluations; offers a critique of the approach being used in TSCA; and makes recommendations to improve the approach to the step. The committee notes that describing what OPPT does, in order to critique the process, was hampered by the lack of documentation of the systematic review approaches used by OPPT.

PROBLEM FORMULATION AND PROTOCOL DEVELOPMENT

Planning the review and carrying out problem formulation generally precede the systematic review process, typically comprising a scoping review and engagement of stakeholders. Many groups consider planning the review and subsequent problem formulation as the most important steps in a review because a high-level view is taken at this stage that sets the approach and protocol for the review.

The product of problem formulation is a well-defined question, appropriately guiding the selection of the methodology during the planning stage (e.g., systematic scoping review, systematic map, or systematic review). If a systematic review, systematic map, or systematic scoping review is determined to be the appropriate method to address the research question, a protocol is then developed and registered. Systematic review protocols need to report the following: (1) the research question, (2) the sources that will be searched with a reproducible search strategy, (3) the explicit inclusion and exclusion criteria for study selection described in an unambiguous and replicable way, (4) the methods used to select primary studies, (5) tools for critical appraisals of the risk of bias or quality in the included primary studies, and (6) information about the approaches for evidence synthesis with sufficient clarity to support replication of these steps (Krnic Martinic et al. 2019).
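The six reporting elements listed above lend themselves to a simple completeness check. The sketch below is one possible way to represent them; the class name, field names, and example entries are hypothetical and are not taken from Krnic Martinic et al. (2019) or from any OPPT document.

```python
from dataclasses import dataclass, fields


@dataclass
class ReviewProtocol:
    """One field per reporting element; all names are illustrative."""
    research_question: str = ""
    sources_and_search_strategy: str = ""
    eligibility_criteria: str = ""
    study_selection_methods: str = ""
    critical_appraisal_tools: str = ""
    synthesis_approach: str = ""


def missing_elements(protocol):
    """Return the names of reporting elements left blank."""
    return [f.name for f in fields(protocol)
            if not getattr(protocol, f.name).strip()]


# Hypothetical draft that has not yet specified appraisal or synthesis methods.
draft = ReviewProtocol(
    research_question="Is exposure to chemical X associated with liver effects?",
    sources_and_search_strategy="Two bibliographic databases; full strings in an appendix",
    eligibility_criteria="Defined from the PECO statement",
    study_selection_methods="Two independent screeners",
)

print(missing_elements(draft))
# ['critical_appraisal_tools', 'synthesis_approach']
```

A check of this kind only confirms that each element is present; whether each element is described clearly enough to be replicated still requires judgment.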

A protocol makes the methods and the process of the review transparent, provides the opportunity for peer review of the methods, and stands as a record of the review process. Having a protocol minimizes the potential for bias in many steps throughout the systematic review, such as in evidence identification, by ensuring that inclusion of studies in the review does not depend on the findings of the studies (NRC 2014). The risk evaluation process is typically much broader than that of systematic review alone, and generally not every stream of evidence is evaluated using systematic review; other evidence-based approaches may be used instead. This section discusses the state of the practice for development of systematic review research questions and protocols and describes OPPT’s approach.

STATE OF THE PRACTICE

Planning the Review

Because minimizing bias is a guiding principle of systematic reviews, even the initial planning should be conducted as rigorously, objectively, and transparently as possible. This step may involve iterative consideration of sponsor and stakeholder needs, scoping of the topic—including considerations of feasibility—and input and participation from a multidisciplinary team sharing a variety of roles.

Once the plan to conduct a review assumes shape, a decision is made as to which type of review to perform. In some cases, a narrative approach may be chosen for any of a variety of reasons, including limited access to data or to express an expert opinion. However, if the goal is to provide an objective and comprehensive summary of how the evidence on a certain topic answers a specific research question, a systematic review should be conducted. The committee would like to emphasize that only a review conducted following all the steps in the framework is a systematic review.

Various motivations exist to conduct a systematic review in toxicology. In the frameworks that have been created by agencies, including NTP, EPA, and EFSA, the motivation for doing so is driven by the respective public health mandates and needs of the agency conducting the review (Morgan et al. 2018). Whether conducted by an agency or not, a systematic review may seek to clarify the human health or ecological effects of an evidence-rich chemical. In other cases, a systematic review may be undertaken when evidence is scarce, to identify data gaps, or to assess the accuracy of a toxicological test method (NASEM 2017b).

While not necessarily required, scoping the literature on the topic is typically done to assess the need for a systematic review. This approach is particularly useful in fields in which little is known regarding the current state of the literature and when systematic reviews have not been performed previously. Scoping may range from a simple non-systematic search in one or two databases to a more formalized, resource-intensive scoping review (described in Levac et al. [2010] and Peters et al. [2015]). The findings of a scoping exercise may reveal that the question has already been adequately addressed or may confirm that better understanding of the evidence could provide clarity. A scoping search can inform the planning process by revealing important details such as the expertise required, the stakeholders interested in the topic, and the resources needed. Scoping may be conducted before or after a review team is formed, but the approach used should be transparent and objective. By the end of the planning stage, the decision as to whether or not to conduct the review will have been confirmed. The resources and the timeframe will have been established, and the review team and advisory group will be in place.

One major challenge in the planning phase of systematic reviews in toxicology, environmental health, and ecological toxicology and exposure is to compose a skilled review team, as experience among these disciplines in systematic review is still being built. Until sufficient systematic review capacity is built, clinical or preclinical systematic review experts may need to be engaged. Similarly, ecological risk assessment is still evolving. Consequently, expertise from those familiar with applying systematic review to human health should be brought in to develop systematic review protocols for data streams supporting ecological risk assessment. Following the planning stage and once the type of review needed is established, the stakeholder group begins the work on problem formulations.

Developing and Refining a Research Question

The ultimate goal of a systematic review is to address a specific question. The question should be developed a priori as it will shape many of the steps in the remainder of the review, giving form to the literature search and screening strategies.

In many contexts, narrowing the research question to the health or ecological outcomes that are of potential concern may be challenging, as there may be an array of outcomes, extending from markers of injury to specific diseases. In addition, traditional narrative literature reviews supporting risk assessments might include many different outcomes to determine the most sensitive endpoint on which to base the risk characterization (NRC 2009, 2014). Reviews that encompass large topics may benefit from an initially broad PECO statement to identify the body of literature and then multiple narrow and well-defined PECO statements to tease out the individual questions within the review. Scoping reviews can be extremely useful in identifying the reach and breadth of a systematic review prior to developing a research question. Assessing the breadth of the body of literature before defining the research question can allow for selection of specific endpoints, evidence streams, routes of exposure, or developmental stages. The problem formulation exercise, including scoping review results and input from stakeholders, can also be used to prioritize outcomes, study designs, and populations of interest. Moreover, scoping reviews can significantly improve the quality and usefulness of the inclusion and exclusion criteria for the literature search and selection. For example, which type of mechanistic evidence is relevant to the research question being addressed by a systematic review should be determined in advance based on an understanding of the pathogenesis for the outcome of concern. This advance determination will allow the creation of inclusion and exclusion criteria that eliminate mechanistic evidence not relevant to humans. If the reviewers have a baseline knowledge of the available literature database, it is easier to define and refine the criteria needed to exclude studies outside the interest of the review. 
NRC’s Review of EPA’s Integrated Risk Information System (IRIS) Process provides an approach for how to narrow a research question using a scoping review (NRC 2014).

For a health hazard assessment, the research question is typically framed as a PECO statement. This differs slightly from health-based reviews, which use a PICO statement and are primarily focused on interventions (I) rather than exposures (E) (Rooney et al. 2014). The PECO statement is used to define the objective of the review and lay out the framework for the research question to be answered. Groups should enlist experts in various fields relevant to the review (e.g., epidemiologists, information specialists, and data analysts) and/or stakeholders with an interest in the outcome of the review to provide input on the PECO statement (OHAT 2019). Similarly, expertise within fields of importance to ecological risk assessment (e.g., ecotoxicology, ecology, environmental chemistry, and environmental engineering) should be engaged. For example, assessment endpoints, which clearly link to and support ecological protection goals, and associated measures of effect must be identified during problem formulation. Thus, an ecologically focused research question and PECO statement need to encompass these assessment endpoints. Once finalized, the PECO statement becomes the primary source of information used during the literature search and for the inclusion and exclusion criteria during literature screening.

While secondary PECO review question(s) may be necessary for complex risk-based assessments and listed as sub-questions, a clear single primary review question should drive the formulation of the review. Because this question will be the systematic review’s guiding element and principal goal, defining it precisely and appropriately is of crucial importance. A properly framed review question will facilitate all the review’s subsequent steps, including the definition of the eligibility criteria and the literature search, how the evidence will be collected, and how the results will be presented and synthesized. In particular, the question should help define the criteria for the inclusion and exclusion of research studies in a way that ensures that all relevant evidence is included to answer a particular question. For example, the review question could focus on a specific study type, such as chronic toxicity studies in animals, and would thus exclude any other study type, such as acute or sub-acute toxicity studies.
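To make the link between a review question and eligibility criteria concrete, the sketch below encodes a PECO statement and applies it to candidate studies, using the chronic-versus-acute example from the paragraph above. All class names, field names, and study records are hypothetical, and real screening involves far more criteria than the two checked here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PECO:
    population: str
    exposure: str
    comparator: str
    outcome: str
    eligible_study_types: frozenset  # assumption: study type is part of eligibility


@dataclass(frozen=True)
class StudyRecord:
    title: str
    population: str
    study_type: str


def screen(record, peco):
    """Return (include, reason) using only prespecified design features."""
    if record.population != peco.population:
        return False, "population outside PECO"
    if record.study_type not in peco.eligible_study_types:
        return False, f"study type '{record.study_type}' not eligible"
    return True, "meets prespecified criteria"


# Hypothetical review question restricted to chronic animal studies.
peco = PECO(
    population="laboratory rodents",
    exposure="oral exposure to chemical X",
    comparator="vehicle control",
    outcome="liver toxicity",
    eligible_study_types=frozenset({"chronic"}),
)

candidates = [
    StudyRecord("Two-year bioassay", "laboratory rodents", "chronic"),
    StudyRecord("14-day study", "laboratory rodents", "sub-acute"),
    StudyRecord("Worker cohort", "humans", "cohort"),
]

for record in candidates:
    print(record.title, screen(record, peco))
```

The screening function never looks at a study's results, which reflects the principle that inclusion should not depend on study findings.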

Determining the Appropriate Approach

The approach to answer the questions of interest is determined based on the results of the planning, question refinement, and scoping review processes. This decision should be based on the most appropriate methods to address the question as well as issues of transparency and efficiency.

Different approaches can be used for conducting the hazard assessment, including a systematic review, a narrative review with a protocol, an assessment by an authoritative body, or an update to a high-quality narrative or systematic review (NASEM 2019). Use of an existing systematic review may be an efficient and transparent way to address a question, particularly when the scoping review identified potentially relevant reviews. When an existing systematic review is used, a search is first conducted to identify current relevant reviews (i.e., those that match the PECO elements of the question to be addressed); the relevant reviews are then evaluated to identify a trustworthy review (e.g., using ROBIS or AMSTAR-2); and finally a bridge search (with, as needed, screening, risk-of-bias assessment, and synthesis) is conducted to update the search results. This process was recommended and demonstrated in a prior National Academies report, Using 21st Century Science to Improve Risk-Related Evaluations (NASEM 2017a).
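The three-part process for building on an existing review can be outlined as follows. This is a rough sketch under stated assumptions: the record structure, the threshold for an acceptable appraisal rating, and the rule of preferring the most recent search date are all hypothetical choices made for illustration, not requirements of the cited reports.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ExistingReview:
    title: str
    matches_peco: bool   # result of comparing the review to the PECO elements
    appraisal: str       # overall rating from an appraisal tool
    last_search: date    # date of the review's most recent literature search


ACCEPTABLE_RATINGS = {"High", "Moderate"}  # assumed threshold for "trustworthy"


def pick_review(reviews):
    """Choose the most recently searched review that is relevant and trustworthy."""
    usable = [r for r in reviews
              if r.matches_peco and r.appraisal in ACCEPTABLE_RATINGS]
    return max(usable, key=lambda r: r.last_search) if usable else None


def bridge_window(review, today):
    """Date range that a bridge search would need to cover."""
    return review.last_search, today


# Hypothetical candidates identified during scoping.
reviews = [
    ExistingReview("Review A", True, "Critically Low", date(2019, 5, 1)),
    ExistingReview("Review B", True, "Moderate", date(2017, 9, 30)),
    ExistingReview("Review C", False, "High", date(2020, 1, 15)),
]

chosen = pick_review(reviews)
print(chosen.title, bridge_window(chosen, date(2020, 11, 13)))
```

If no candidate is both relevant and trustworthy, the function returns `None`, which would correspond to choosing a different approach such as a de novo review.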

Developing a Systematic Review Protocol

After the approach to answering the question is determined, the next step is to develop the protocol. The protocol is a detailed plan or set of steps that should describe the methods that will be used to conduct all the steps of the systematic review from evidence identification through evidence synthesis. Protocols are critical in de novo systematic reviews and can also be used in other evidence-based literature searching methods, such as scoping reviews and narrative reviews. The PECO elements of the refined questions determine the search strategy and the eligibility criteria. These elements should be as specific as possible, including listing specific outcomes of interest. To further transparency, protocols should be publicly registered, such as on PROSPERO or Open Science Framework (Booth et al. 2012; Foster and Deardorff 2017), or posted on a public website where they can be reviewed. Protocols should be posted for public comment and peer review prior to the start of the systematic review. Any revisions to the protocol should be documented as an amendment to the protocol. All versions of the protocol will remain available upon request, although the evaluation will usually proceed according to the most updated version of the protocol.
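The practice of recording revisions as amendments while retaining every version can be illustrated with a small data structure. The sketch below is hypothetical: the class and method names are invented for illustration and do not describe how PROSPERO, Open Science Framework, or any agency stores protocols.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass(frozen=True)
class ProtocolVersion:
    number: int
    released: date
    text: str
    amendment_note: str  # empty for the originally registered version


@dataclass
class ProtocolHistory:
    versions: list = field(default_factory=list)

    def register(self, text, released):
        """Record the first public version, before the review starts."""
        if self.versions:
            raise ValueError("protocol already registered; use amend()")
        self.versions.append(ProtocolVersion(1, released, text, ""))

    def amend(self, text, released, note):
        """Add a new version; earlier versions are kept, never overwritten."""
        if not self.versions:
            raise ValueError("register the protocol before amending it")
        if not note.strip():
            raise ValueError("an amendment must explain what changed and why")
        number = self.versions[-1].number + 1
        self.versions.append(ProtocolVersion(number, released, text, note))

    def current(self):
        """The version the evaluation would usually follow."""
        return self.versions[-1]


history = ProtocolHistory()
history.register("Search two databases.", date(2020, 1, 10))
history.amend("Search three databases.", date(2020, 4, 2),
              "Added a database after peer review comments.")

print(history.current().number, len(history.versions))  # 2 2
```

Requiring a note with every amendment keeps the rationale for each change attached to the version that introduced it.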

Standards of practice for applying evidence-based methods for developing a research question, planning an approach, and developing a protocol are not well established for questions about exposures and ecological risks. The Guidelines for Human Exposure Assessment suggest that the problem formulation for human exposure assessment should include the identification of the individual, life stage(s), and group(s) or population(s) of concern; a conceptual model presenting the anticipated pathway(s) of the agent from the source(s) to receptor(s) of concern; and an analysis plan that charts the approach for conducting the assessment. Additionally, the Guidelines for Human Exposure Assessment suggest that aggregate exposures resulting from all potential uses of the compound should be calculated unless not needed per the specific research question (EPA 2019b).

The Guidelines for Ecological Risk Assessment state that the problem formulation for ecological risk assessments should depend on high-quality assessment endpoints and include conceptual models and an analysis plan. Assessment endpoints, and specific measures of effect, should be linked directly to the ecological protection or management goals, as framed within the conceptual models, along with exposure characteristics, ecosystems, and species of particular concern and at potential risk (EPA 1998).

Committee Description of the Approach in TSCA Risk Evaluations

OPPT is using a variety of software tools and approaches to conduct broad searching and to map the available evidence. The process has problem formulation and scoping occurring somewhat in parallel with the protocol development and data collection process (see Figure 2-2). Systematic review approaches use problem formulation to determine the protocol (see Step 1 in Figure 2-1). In advance of the risk evaluation, OPPT publishes scope documents that describe what it expects to consider in its risk evaluation pursuant to TSCA section 6(b). The TSCA website states the following:2

The scope of a risk evaluation will include the hazards, exposures, conditions of use, and the potentially exposed or susceptible subpopulations the Administrator expects to consider. The scope will also include:

- A Conceptual Model, which will describe the relationships between the chemical, under the conditions of use, and humans and the environment.

- An Analysis Plan, which will identify the approaches and methods EPA intends to use to assess exposures and hazards.

- “Conditions of use” under TSCA means “the circumstances, as determined by the Administrator, under which a chemical substance is intended, known, or reasonably foreseen to be manufactured, processed, distributed in commerce, used or disposed of.” For purposes of prioritization, the Administrator may determine that certain uses fall outside the definition of “conditions of use.” During the risk evaluation scoping process, EPA may decide to narrow the scope of the risk evaluation further, potentially excluding conditions of use that present low risk.

___________________

2 See https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/risk-evaluations-existing-chemicals-under-tsca#determination, accessed November 13, 2020.

The committee reviewed the scope documents that accompanied the TCE and 1-BP risk evaluations and found that the scoping and problem formulation documents merged the steps of problem formulation, protocol development, and the conduct of systematic review (see EPA 2017a,b). For these two assessments, the scope documents did not include PECO statements, but the problem formulation documents that were released after the evaluation was started did contain PECO statements. The committee notes that some of the more recently released scope documents contain more detailed information, such as the evidence identification methods and PECO statements3 (see Box 2-1). Additionally, the scope documents did not contain protocols as typically defined in systematic review, as the scope documents did not prespecify the approaches for conducting each step of the systematic review process.

Although the 1-BP evaluation states that a “preliminary review of the health effects literature” was conducted, it is difficult to determine how that review affected the PECO statement, other than in the outcomes considered. For the human health hazard assessment, health outcomes were limited to several specific organ systems, although there is a note stating that other outcomes may be assessed as necessary (see Table 2-1). For the population element, specific species could have been limited to those that were either relevant to the outcome or that were available in the literature. For the exposure element in human epidemiology studies, a very broad search was conducted including not only the chemical of interest but also any metabolites or mixtures and studies with only qualitative estimates of exposure, which would have limited use in a dose-response assessment.

In the exposure assessment, the problem formulation includes occupational and non-occupational scenarios, as well as environmental releases. As with the problem formulations for hazard assessment, the problem formulation, scoping, and data collection processes are also merged for these streams. The determination of what exposures are relevant to the risk evaluation is dictated by the “conditions of use.” “For purposes of prioritization, the Administrator may determine that certain activities fall outside the definition of ‘conditions of use.’ During the risk evaluation scoping process, EPA may decide to narrow the scope of the risk evaluation further, potentially excluding conditions of use that present low risk.”4 For example, in the TCE scope documents, all indoor studies of exposure are included and evaluated, but in the final risk evaluation, measurements of indoor home exposures were determined not to be related to a specific condition of use and thus were not included in determining exposures. In the search for data, sources that provide measured concentrations, as well as sources related to models and all potential model inputs, are included. Additionally, in both the 1-BP and TCE evaluations, if the manufacturer provided safety data sheets stipulating that workers will be provided personal protective equipment (PPE), EPA assumes that workers will be given proper PPE by their employer, that they will be trained to use it correctly, and that they will have no medical conditions precluding that use.

___________________

3 See, for example, https://www.epa.gov/sites/production/files/2020-09/documents/casrn_117-81-7_di-ethylhexyl_phthalate_final_scope.pdf, accessed November 13, 2020.

4 See https://www.epa.gov/assessing-and-managing-chemicals-under-tsca/risk-evaluations-existing-chemicals-under-tsca#determination, accessed November 13, 2020.

For the ecological risks, OPPT describes the environmental fate and transport, releases to and occurrence in the environment, and probable environmental hazards (ecotoxicology) that will be considered in the scope documents. In the analysis plan of the scope documents, OPPT identifies its objectives, the conditions of use, and data types associated with physical-chemical properties, environmental fate parameters, and ecotoxicology information. Conceptual models are used to account for exposure pathways.

TABLE 2-1 Health Hazard Assessment PECO Statement from the 1-BP Risk Evaluation

| PECO Element | Evidence Stream | Features Included |
|---|---|---|
| Population | Human | Any population; all life stages; study designs: |
| | Animal | All non-human, whole-organism mammalian species; all life stages |
| Exposure | Human | Exposure based on administered dose or concentration of 1-BP, biomonitoring data (e.g., urine, blood or other specimens), environmental or occupational-setting monitoring data (e.g., air, water levels), job title, or residence; primary metabolites of interest as identified in biomonitoring studies; exposure identified as or presumed to be from oral, dermal, or inhalation routes; any number of exposure groups; quantitative, semi-quantitative or qualitative estimates of exposure; exposures to multiple chemicals/mixtures only if 1-BP or related metabolites were independently measured and analyzed |
| | Animal | A minimum of two quantitative dose or concentration levels of 1-BP plus a negative control group; acute, subchronic, or chronic exposure from oral, dermal, or inhalation routes; exposure to 1-BP only (no chemical mixtures); quantitative and/or qualitative relative/rank-order estimates of exposure |
| Comparator | Human | A comparison population (not exposed, exposed to lower levels, or exposed below detection) for endpoints other than death from acute exposure |
| | Animal | Negative controls that are vehicle-only treatment and/or no treatment |
| Outcome | Human | Endpoints described in the 1-BP scope document; other endpoints |
| | Animal | |

SOURCE: EPA 2020c.
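The animal-stream rows of Table 2-1 read naturally as a set of yes/no eligibility checks. The following is a minimal sketch of that idea, not OPPT's screening procedure. The `AnimalStudy` record, its field names, and the wording of the exclusion reasons are hypothetical; only the criteria themselves (mammalian whole-organism species, at least two quantitative dose levels, a negative control, oral/dermal/inhalation routes, no mixtures) are taken from the table.

```python
from dataclasses import dataclass

# Routes of exposure listed for the animal stream in Table 2-1.
ALLOWED_ROUTES = {"oral", "dermal", "inhalation"}


@dataclass
class AnimalStudy:
    """Hypothetical, simplified record of one animal toxicology study."""
    is_whole_organism_mammal: bool
    dose_levels: int             # number of quantitative dose/concentration levels
    has_negative_control: bool   # vehicle-only and/or no-treatment group
    route: str
    is_mixture: bool             # True if animals were co-exposed to other chemicals


def exclusion_reasons(study: AnimalStudy) -> list[str]:
    """Return every criterion the study fails; an empty list means eligible."""
    reasons = []
    if not study.is_whole_organism_mammal:
        reasons.append("population: not a whole-organism mammalian study")
    if study.dose_levels < 2:
        reasons.append("exposure: fewer than two quantitative dose levels")
    if study.route not in ALLOWED_ROUTES:
        reasons.append("exposure: route is not oral, dermal, or inhalation")
    if study.is_mixture:
        reasons.append("exposure: chemical mixture")
    if not study.has_negative_control:
        reasons.append("comparator: no negative control group")
    return reasons


if __name__ == "__main__":
    candidate = AnimalStudy(True, 1, True, "inhalation", True)
    for reason in exclusion_reasons(candidate):
        print(reason)
```

Returning all failed criteria, rather than stopping at the first, is one way to keep a documented reason for each exclusion.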

OPPT created PECO statements that guided three large literature searches to gather exposure data (under a PECO exposure statement); occupational exposure and information on industrial uses (under a Receptor, Exposure, Scenario/setting, and Outcome [RESO] statement); and information on chemical properties and factors related to environmental fate, transport, and ecological exposures and hazards (under a Pathways and Processes, Exposure, Setting or Scenario, and Outcome [PESO] statement) (see Box 2-1).

The very broad PECO-exposure (see Box 2-2) and RESO statements (see Box 2-3), one for consumer exposures and one for occupational exposures, have the goal of assembling all the potentially relevant literature to identify any measured levels of exposure in consumer or occupational settings. Additionally, the PECO statements include any models and model inputs necessary for modeling estimates of exposures. In addition to the actual PECO-exposure or RESO statements, OPPT also creates a list of all specific uses and scenarios for which there is a need to gather data, based on the knowledge obtained about the use of the chemical in prior steps.

Critique of the TSCA Approach

Looking at the core review elements of the Statement of Task, which address whether the TSCA approach to systematic review is “comprehensive, workable, objective, and transparent,” the committee finds that the approach to problem formulation and protocol development could be improved broadly to better meet these characteristics.

Comprehensive

The approach to problem formulation and protocol development is not comprehensive as it did not result in refined research questions or a documented approach to how the reviews required to support the risk evaluations should be conducted. The ill-defined questions within TSCA risk evaluations hinder the necessary prespecification of systematic review methods, notably the eligibility criteria for studies. Failing to adequately refine the focus of a systematic review leads to overly broad questions, in turn leading to the identification of heterogeneous studies, to more complicated analysis, and to challenges in integrating across evidence streams to draw conclusions.

With respect to the ecological assessments, the conceptual models do not consistently account for all exposure pathways. For example, within the environmental conceptual model in the TCE evaluation, land application of wastewater effluent is not considered, yet this practice from centralized and decentralized (e.g., advanced aerobic systems) wastewater treatment plants introduces chemical contaminants to soils. Similarly, though the range of instream dilution considerations for point source discharges is important for predicting exposure scenarios, the TCE evaluation uses 10- to 15-year-old Exposure and Fate Assessment Screening Tool (E-FAST) models. Consequently, there may be underestimation of surface water exposure levels in regions experiencing decreased flows due to climate change (e.g., prolonged droughts) and increased water extraction (see EPA 2020a, p. 98). Additionally, the documents do not prespecify all cut-off values for environmental fate parameters, such as those used for bioconcentration factors and bioaccumulation. As with the human health hazard assessments, the TCE evaluation does not include sufficient protocols that prespecify how the systematic review for ecological outcomes will be conducted.

For the more typical exposure factors, TSCA assessments do rely on the Exposure Factors Handbook, which is a well-documented and regularly updated source of information. However, there are some activities not covered in the handbook, and searches for those data streams should be included in the data-needs list in the problem formulation. Examples relevant to TCE consumer use include activity pattern information on the frequency with which the average gun owner cleans his or her gun, other product-specific activities not included in the handbook, and air exchange rates associated with horse stables (EPA 2011). Additionally, in the TCE and 1-BP evaluations, searches are needed on the actual use, type, and effectiveness of PPE for the different occupational uses of the products. The assumption that PPE would be used consistently and by all workers is overly optimistic, a criticism that the committee noted in the public comments on the TCE and 1-BP risk evaluations. Breathing rates during occupational activities in which the products of interest are used, which are needed to estimate chemical intake, should also be included in the data-needs list. Inclusion of these additional search terms in the problem formulation would result in an exposure assessment that is more consistent with the Guidelines for Human Exposure Assessment.

Workable

The current approach taken to problem formulation and protocol development is adding to a laborious process for searching, screening, and evaluating the literature. Completing a scoping review prior to the development of the PECO statements could narrow the search to appropriate studies.

Objective

OPPT is using a variety of software tools and approaches to conduct broad searching and to map the available evidence; however, it is not using those approaches in an objective way to determine the research question. This may be because the TSCA process has problem formulation and scoping occurring somewhat in parallel with the protocol development and data collection process (see Figure 2-2).

Transparent

The process for problem formulation is not transparent. It is not well documented in any of the risk evaluations or related scope documents reviewed for this report, and procedures for problem formulation are not included in Application of Systematic Review in TSCA Risk Evaluations (hereafter, the 2018 guidance document). Moreover, the transparency of the entire risk evaluation is compromised because, in addition to not developing clear questions for the systematic reviews, there are no protocols for the reviews or to guide the synthesis step. Consequently, the review process is not documented or prespecified from its start, and clarity is lacking when the review is finished and published.

Specifically, it is unclear how OPPT is determining the list of data needs that will inform the human exposure assessment. The TSCA webpage states that OPPT is utilizing EPA’s Guidelines for Human Exposure Assessment (EPA 2019b) for exposure assessments. If this is the case, the committee notes several inconsistencies with the guidelines. First, the guidelines specify that exposure calculations should be aggregate exposures, resulting from all potential uses of the compound (EPA 2019b). For example, a typical consumer’s exposure includes both day-to-day exposures occurring indoors as well as increased exposure resulting from product use, which may occur on a semi-regular basis. TSCA assessments do include indoor concentrations that result from aggregate exposure in the problem formulation statement for consumer exposure. However, later steps determine that the indoor exposures could not be linked to any individual consumer product, and those exposures are omitted from the final exposure assessment.

The data needs list does include many of the parameters needed to run the existing EPA models but does seem to exclude some necessary model inputs that should be included in the data search based on the guidelines. OPPT could improve these assessments and make them better align with agency guidelines if clear questions on frequency of use for consumer products that may contain the chemical of interest were identified in the problem formulation.

Recommendations

To address these issues with TSCA’s approach to problem formulation and protocol development, the committee recommends the following:

- Scoping and mapping exercises and stakeholder engagement should be used to conduct a full problem formulation prior to the conduct of the systematic review.

- The results from problem formulation should include refined questions and an approach for each research question. A systematic review may not be required for every stream of evidence that is part of a risk evaluation. The full problem formulation and understanding of the literature base for an evaluation should allow OPPT to determine which research questions may be evaluated with a systematic review and which questions should be evaluated with a different evidence-based approach. Ecological research questions should be linked to assessment endpoints, and ecological receptors of concern should be identified (e.g., algae, aquatic macrophytes, invertebrates, fish, amphibians, and threatened and endangered species). When chemicals are identified as bioaccumulative, other receptors (e.g., birds and mammals) should also be included. When there is no adequate literature base to answer questions, OPPT should be transparent about its alternative approaches. Regardless of the approach taken, OPPT should ensure that the reviews are comprehensive, objective, transparent, and consistent. OPPT should also highlight and explain areas in which deviations from a systematic review process occur.

- Evidence streams should be clearly defined to facilitate the determination of which evidence-based methods should be followed for each stream. Such a definition is especially critical for the exposure and non-hazardous streams.

- Potential redundancies should be reduced by explicitly considering appropriate methods to address questions that may include updating an existing and adequate systematic review rather than conducting a de novo review.

- A systematic review protocol that details the prespecified methods, including eligibility and critical appraisal criteria, and that is peer-reviewed and publicly posted before the review commences should be prepared. Ideally, this would be one document or, if multiple documents are needed, there should be clear crosswalks between documents.

- The problem formulation for the exposure assessment should more closely follow the Guidelines for Human Exposure Assessment and include inputs on frequency of product use, exposure factors related to specific uses, breathing rates, and use of PPE.

EVIDENCE IDENTIFICATION

Evidence identification is the next step in the systematic review process (see Figure 2-1). This step includes searching for the evidence related to the particular question and strategies for selecting both the evidence to be considered and the evidence to be excluded from consideration. As noted in Finding What Works in Health Care: Standards for Systematic Reviews (IOM 2011), this step presents the first opportunity for bias to enter the review. Without a comprehensive search to identify evidence informing the PECO statement, the resulting systematic review “will reflect and possibly exacerbate existing distortions in the biomedical literature” (IOM 2011, p. 81). Therefore, it is critical to have pilot search strategies and to have quality assurance (QA) and quality control (QC) measures during the evidence identification step of the systematic review process (IOM 2011).

STATE OF THE PRACTICE

Searching for the Evidence

Searching for the evidence starts with the design of a search plan that is aligned with the PECO question(s). This plan needs to be sensitive enough that it does not inadvertently exclude evidence relevant to the review question, without returning an unmanageably large amount of irrelevant information. The search plan should be specified in the protocol, include databases to be searched and search strategies (for at least one database), discuss gray literature that will be searched, and may also include other methods of search such as snowball searching, scanning references of included studies, and using existing systematic reviews. The dates of the searches and of any planned updates should also be documented. A comprehensive search strategy should be guided by the PECO question in the selection of search terms, be in line with the pertinent inclusion criteria (publication date or language(s) to be considered), strike a balance between sensitivity (the ability to identify relevant evidence) and specificity (the ability to exclude irrelevant information), and be appropriately documented in the protocol and made publicly available.
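The balance between sensitivity and the exclusion of irrelevant records can be checked when a search strategy is piloted. The sketch below assumes a benchmark set of records already known to be relevant (for example, key studies from an earlier assessment); the function name and record identifiers are hypothetical. Because the total number of irrelevant records in a database is rarely known, precision (the share of retrieved records that are relevant) is used here as a practical stand-in for specificity.

```python
def search_performance(retrieved: set[str], benchmark_relevant: set[str]) -> dict[str, float]:
    """Compare a pilot search result against a benchmark set of relevant records.

    sensitivity = benchmark records retrieved / all benchmark records
    precision   = benchmark records retrieved / all records retrieved
    """
    hits = retrieved & benchmark_relevant
    sensitivity = len(hits) / len(benchmark_relevant) if benchmark_relevant else 0.0
    precision = len(hits) / len(retrieved) if retrieved else 0.0
    return {"sensitivity": sensitivity, "precision": precision}


if __name__ == "__main__":
    # Hypothetical record identifiers, for illustration only.
    pilot_results = {"rec1", "rec2", "rec3", "rec4"}
    known_relevant = {"rec1", "rec2", "rec9"}
    print(search_performance(pilot_results, known_relevant))
```

A low sensitivity against the benchmark suggests that terms or databases are missing; a very low precision suggests that the question or the search terms are too broad. Precision computed this way is only a lower bound, since a pilot search may retrieve relevant records that are not in the benchmark.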

Selecting the Evidence

Literature searches can yield thousands of records. In order to prevent subjectivity and reduce bias in the evidence selection, systematic reviews prespecify eligibility criteria based on the PECO question in the study protocol. The protocol should also specify how the quality of the selection is controlled, usually by independent duplicate review (i.e., requiring that two screeners, or one screener and an artificial intelligence [AI] tool, independently carry out the selection, with a procedure to resolve disagreements). In addition, it should provide instruction on how the selection is documented to allow its replication by others.
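Independent duplicate review can be sketched as a comparison of two sets of screening decisions, with disagreements set aside for resolution. This is an illustrative sketch only; the function name and the record identifiers are hypothetical, and the second "screener" could equally be a software tool.

```python
def reconcile(screener_a: dict[str, bool], screener_b: dict[str, bool]):
    """Split records into agreed includes, agreed excludes, and conflicts.

    Each argument maps a record ID to that screener's include (True) or
    exclude (False) decision. Both screeners must have judged the same records.
    """
    if screener_a.keys() != screener_b.keys():
        raise ValueError("both screeners must assess the same set of records")

    include, exclude, conflicts = [], [], []
    for record_id in sorted(screener_a):
        a, b = screener_a[record_id], screener_b[record_id]
        if a and b:
            include.append(record_id)
        elif not a and not b:
            exclude.append(record_id)
        else:
            conflicts.append(record_id)  # resolved by discussion or a third reviewer
    return include, exclude, conflicts


if __name__ == "__main__":
    a = {"r1": True, "r2": False, "r3": True}
    b = {"r1": True, "r2": False, "r3": False}
    print(reconcile(a, b))
```

Keeping the conflict list, together with how each conflict was resolved, is part of what allows the selection to be replicated by others.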

The selection is usually carried out in two stages: (1) title and abstract and (2) full text. At the first stage, all identified records are screened on the basis of the title and abstract in order to exclude the records that are obviously beyond the scope of the review. Studies rejected at this stage of the process will either be completely off-topic or fail to meet one or more eligibility criteria.

The second stage of the selection is a full-text review, during which reasons for the exclusion of each study need to be documented. Detailed documentation of the decision(s) made in the selection process is essential for the transparency of the review. Screeners’ assessments should be captured, as well as the solutions in case of disagreements. All full texts retrieved should be kept in a database for the systematic review.

A widely accepted tool for summarizing the selection process is the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses5) flow diagram presented in Moher et al. (2009). Koustas et al. (2014) provide a practical example of its use for a toxicological systematic review.
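The counts reported in a flow diagram of this kind follow from simple bookkeeping across the two screening stages. The sketch below is a simplified tally, not the PRISMA specification itself; the function name, the stage labels, and all numbers are hypothetical.

```python
def flow_summary(identified: int, duplicates: int,
                 excluded_title_abstract: int,
                 excluded_full_text: dict[str, int]) -> dict[str, int]:
    """Derive stage-by-stage counts for a two-stage selection process.

    excluded_full_text maps each documented exclusion reason to the number
    of studies excluded for that reason at full-text review.
    """
    screened = identified - duplicates
    full_text_assessed = screened - excluded_title_abstract
    included = full_text_assessed - sum(excluded_full_text.values())
    if min(screened, full_text_assessed, included) < 0:
        raise ValueError("counts are inconsistent: a stage has a negative total")
    return {
        "identified": identified,
        "screened": screened,
        "full_text_assessed": full_text_assessed,
        "included": included,
    }


if __name__ == "__main__":
    # Illustrative numbers only.
    reasons = {"wrong population": 40, "no comparator": 20}
    print(flow_summary(1000, 100, 800, reasons))
```

Because full-text exclusions are recorded by reason, the tally only balances if every excluded study has a documented reason.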

In order to handle the vast amount of identified records and appropriately document the selection process, various software applications are now available that allow reviewers to implement an efficient and transparent record management process. Van der Mierden et al. (2019) provided an overview of informatics solutions to support various processes of a systematic review, and the systematic review toolbox contains more than 100 software tools for a broad range of systematic review tasks.6

The committee notes that there are no standards of practice to search for the evidence in the streams that are typically included in the exposure assessment (see Figure 2-1). The Guidelines for Human Exposure Assessment and the Guidelines for Ecological Risk Assessment do provide guidelines as to what should be included in an exposure assessment (EPA 1998, 2019b), and the approach described for searching for evidence for other streams is similar to the hazard assessment.

COMMITTEE DESCRIPTION OF THE APPROACH IN TSCA RISK EVALUATIONS

Searching for the Evidence

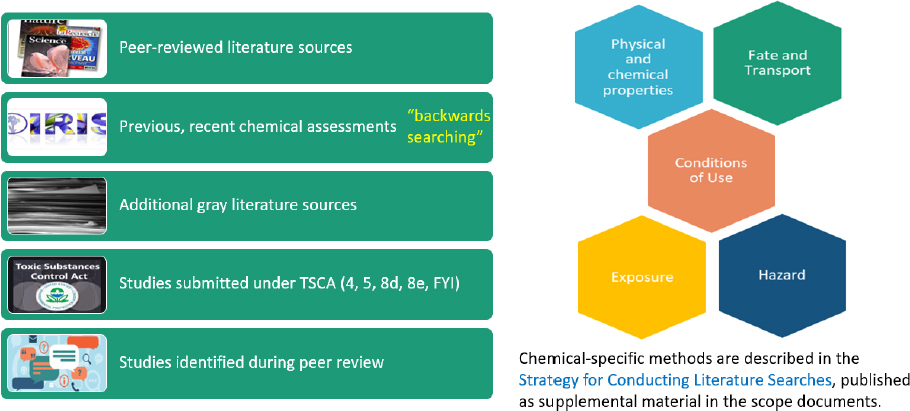

OPPT uses exhaustive search strategies that include major scientific databases, backward searching for studies in previous chemical risk assessments, additional gray literature sources, studies submitted under TSCA, and studies identified in peer review (see Figure 2-3). The terms used and databases searched were found to be exhaustive (see Table 2-2).

___________________

5 See www.prisma-statement.org, accessed November 13, 2020.

6 See http://systematicreviewtools.com/advancedsearch.php, accessed November 13, 2020.

In the TCE evaluation, the searches conducted are wide-ranging as a result of the broadly framed questions. For example, to support the review of the occupational data, OPPT uses a grouped data acquisition strategy. Rather than conducting a separate search for each exposure to be determined, exposures are grouped into three data acquisition strategies. Specifically, one search looks for information that can be used to assess consumer exposures; a second assesses environmental release data for occupational exposures; and a third gathers physical-chemical property data that can be used to run the models to calculate exposure. The search for the needed chemical properties was included in the ecological search, but data were used for all types of models. This approach improves the efficiency of the data search but makes following and evaluating the process difficult. However, it is evident that the process includes areas such as monitoring data, models, and completed exposure assessments. Additionally, the OPPT process is relatively thorough in using a wide range of search terms and considering both the published literature and the gray literature. Gray literature searching included the first 100 sites on Google, web scraping (e.g., Agency for Toxic Substances and Disease Registry and National Institute for Occupational Safety and Health documents), and databases (e.g., ChemView). The process allows for the inclusion of other data that are submitted during peer review. It was noted in the TCE evaluation that additional reports were suggested in the public comments; those reports were screened and in some cases included. These additional materials may be government reports or other gray literature, which can be difficult to search for in a comprehensive fashion.

TABLE 2-2 Search Strategies and Terms in TSCA Risk Evaluations

| | Physical/Chemical Properties | Conditions of Use | Fate, Engineering, Exposure, Human Health | Environmental Health |
|---|---|---|---|---|
| Search terms | CAS RN; chemical name; chemical structure | CAS RN; chemical name; synonyms; trade names; common misspellings | CAS RN, chemical name, synonyms AND use terms OR exposure, engineering and fate terms OR health effect terms | CAS RN; chemical name; synonyms as identified by STN International and Pesticide Action Network |
| Sources | STN; REAXYS; ChemSpider | Information reported to EPA; trade publications; open literature reports; citations in other assessments; safety data sheets; EPA’s Chemical and Product Categories; NIH’s household product database; company websites | Existing assessments; peer-reviewed sources (PubMed, Web of Science, Toxline); gray literature | ECOTOX Knowledgebase approach: |

NOTE: ATSDR, Agency for Toxic Substances and Disease Registry; CAS RN, CAS Registry Number; ECOTOX, ECOTOXicology; EPA, U.S. Environmental Protection Agency; NHANES, National Health and Nutrition Examination Survey; NIH, National Institutes of Health; NIOSH, National Institute for Occupational Safety and Health.

SOURCE: U.S. Environmental Protection Agency, presentation to the committee, June 19, 2020.
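The search-term row of Table 2-2 combines chemical identifiers with topic terms using Boolean operators. The sketch below shows one way such a query string could be assembled. The grouping (identifiers joined by OR, then combined by AND with the union of the topic groups) is an assumed reading of the table, the function names are hypothetical, and the topic terms are invented for illustration; real database syntax varies by vendor.

```python
def or_group(terms: list[str]) -> str:
    """Quote each term and join the terms with OR inside parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(identifiers: list[str], topic_groups: list[list[str]]) -> str:
    """Combine chemical identifiers with any of several topic-term groups."""
    topics = " OR ".join(or_group(group) for group in topic_groups)
    return f"{or_group(identifiers)} AND ({topics})"


if __name__ == "__main__":
    query = build_query(
        ["79-01-6", "trichloroethylene", "TCE"],   # CAS RN, name, synonym
        [
            ["degreasing"],                        # hypothetical use term
            ["inhalation exposure"],               # hypothetical exposure term
            ["hepatotoxicity"],                    # hypothetical health effect term
        ],
    )
    print(query)
```

Recording the exact string generated for each database, along with the search date, is what makes a search reproducible.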

OPPT collects information on physical-chemical properties and environmental fate parameters. These routine physical-chemical properties (e.g., water solubility, vapor pressure, log Kow, and Henry’s Law Constant) provide indications of environmental compartments (e.g., surface water, groundwater, sediment, and air) where exposure may occur and are required to support environmental modeling efforts. OPPT conducts literature searches to populate a database for further review. Similarly, OPPT also reviews the literature and the U.S. Geological Survey, EPA, and the U.S. Department of Agriculture databases for ambient surface water exposure data from the United States and other countries. In addition to identifying empirical datasets, OPPT uses the Estimation Programs Interface (EPI) Suite modeling for physical-chemical property and environmental fate information. Such practices are not surprising. As there are approximately 350,000 chemicals and chemical mixtures registered for commercial use around the world (Wang et al. 2020), empirical data on physical-chemical properties are not consistently available, and environmental fate parameters are relatively limited. Environmental fate modeling is thus necessary, though it remains challenging to cover the range of environmental exposure scenarios and compartments with the existing tools. More recent information is available through the National Hydrography Dataset. Its use would be advantageous to improve dilution expectations and thus aquatic exposure predictions, particularly since stream flow datasets within E-FAST 2014 are reported to be 15 to 30 years old (Card et al. 2017).

OPPT also relies on the Ecological Structure Activity Relationships (ECOSAR) program to estimate ecotoxicity data when empirical data are limited. It is not clear whether or how ECOSAR is consistently being used to predict acute toxicity information for fish, aquatic and terrestrial invertebrates, and algae during each risk evaluation. Similarly, it is not clear whether physical chemical property information is being evaluated a priori to ensure it is captured within ECOSAR applicability domains. When empirical ecotoxicology data are lacking, another ORD tool, the Web-based Interspecies Correlation Estimation model, is available to support cross-species predictions. In addition, EPA ORD has advanced adverse outcome pathway (AOP) conceptual models to support mechanistic ecotoxicology data integration within risk evaluations. It has developed Sequence Alignment to Predict Across Species Susceptibility (SeqAPASS), another innovative tool that presents bioinformatic opportunities to advance toxicity extrapolation efforts across species. However, it does not appear that these models have been identified during systematic reviews.

Many databases support the human exposure assessments, but the process for searching for these data is unclear. For example, in the TCE risk evaluation, OPPT relied on a consumer product use database that is more than 30 years old and may not reflect current usage patterns. However, a process could not be identified for obtaining more relevant and recent data. Information is not readily available on what chemicals are in particular products, as databases with such information are lacking both in quantity and quality.

It is also unclear whether the specific search statements are intended to identify factors that may be important for the exposure calculations for the conditions of use. For example, one pathway considered for TCE was related to hoof polish for horses. It was assumed that the duration of use and the mass of polish used were the same as for shoe polish. It was assumed that the barn where the product was being applied was the same size as a garage but that the air exchange rate was higher, with reference to a single report from Pennsylvania State University supporting that value.7 It is unclear if a systematic search was conducted to obtain data for this parameter or if the search was limited to finding a source of data.
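To see why the source of a single parameter such as the air exchange rate matters, consider a generic well-mixed, single-zone mass balance, in which the steady-state air concentration equals the emission rate divided by the ventilation flow (room volume times air changes per hour). This is a textbook simplification used here only for illustration; it is not the model used in the TCE risk evaluation, and the function name and all input values are hypothetical.

```python
def steady_state_concentration(emission_mg_per_h: float,
                               room_volume_m3: float,
                               air_changes_per_h: float) -> float:
    """Steady-state concentration (mg/m3) in a well-mixed single zone.

    C = G / (V * ACH), where G is the emission rate, V the room volume,
    and ACH the air exchange rate.
    """
    if room_volume_m3 <= 0 or air_changes_per_h <= 0:
        raise ValueError("volume and air exchange rate must be positive")
    ventilation_m3_per_h = room_volume_m3 * air_changes_per_h
    return emission_mg_per_h / ventilation_m3_per_h


if __name__ == "__main__":
    # Hypothetical inputs: the same emission and room size, three air exchange rates.
    for ach in (0.5, 2.0, 8.0):
        c = steady_state_concentration(100.0, 50.0, ach)
        print(f"ACH = {ach:>4} per hour -> {c:.2f} mg/m3")
```

In this simple model the estimated concentration is inversely proportional to the assumed air exchange rate, so a value drawn from a single report carries through directly to the exposure estimate.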

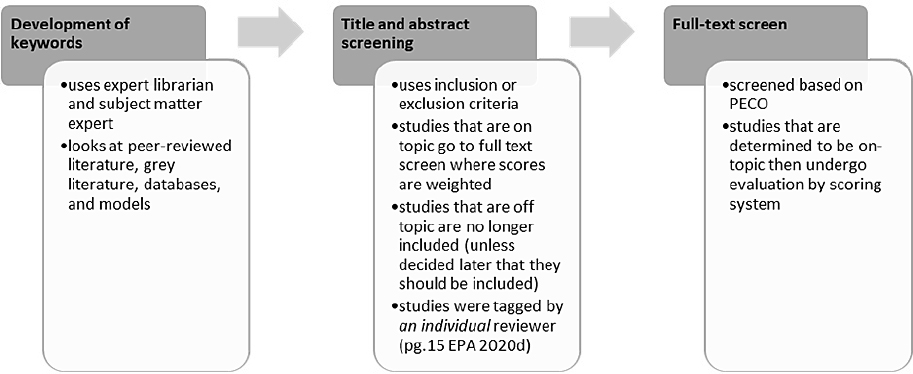

Selecting the Evidence

To select evidence, OPPT then screens the titles and abstracts against a list of needs for the evaluation. However, the committee was provided conflicting information on how this step was conducted. The 2018 guidance document states that OPPT uses PECO at title and abstract screening; the Strategy for Conducting Literature Searches for TCE: Supplemental Document to the TSCA Scope Document states that OPPT uses a list of data needs. Next, a full-text screening of the papers is conducted. As noted in the problem formulation section, OPPT uses PECO or PECO-like statements to compare articles to determine eligibility. For occupational data, there is a RESO statement to gather information on potential occupational exposures, and for the TCE and 1-BP evaluations such statements are used to inform the full-text screening. This process, as carried out for the evaluations of TCE and 1-BP, is illustrated in Figure 2-4. The broad PECO/PECO-like statements lead to unclear and shifting eligibility criteria and to an unclear or questionable selection process (i.e., changes in the process are allowed and reasons are not specified). In more recent evaluations, OPPT is using a number of AI-based tools to help with the large number of references (Kellie Fay, poster presentation to committee, June 19, 2020). These tools aim to make the screening of articles more efficient by using user feedback to prioritize articles automatically, pushing the most relevant articles to the top of the list (Howard et al. 2016).
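The idea of using screener feedback to reorder the remaining records can be illustrated with a deliberately simple scoring rule. The sketch below is not the algorithm used by the tools cited above, which rely on trained statistical models; it only weights words by whether they have appeared in titles already marked relevant or irrelevant. All function names and titles are hypothetical.

```python
from collections import Counter


def tokenize(title: str) -> set[str]:
    """Lowercase a title and split it into a set of words."""
    return set(title.lower().split())


def rank_unscreened(labeled: list[tuple[str, bool]], unscreened: list[str]) -> list[str]:
    """Order unscreened titles so likely relevant ones come first.

    labeled holds (title, relevant) pairs from screening done so far. Each
    word gains a point per relevant title it appears in and loses a point
    per irrelevant one; a title's score is the sum of its word weights.
    """
    weights: Counter = Counter()
    for title, relevant in labeled:
        for token in tokenize(title):
            weights[token] += 1 if relevant else -1

    def score(title: str) -> int:
        return sum(weights[token] for token in tokenize(title))

    return sorted(unscreened, key=score, reverse=True)


if __name__ == "__main__":
    feedback = [
        ("trichloroethylene inhalation toxicity rats", True),
        ("solar panel efficiency study", False),
    ]
    queue = ["solar cell efficiency", "trichloroethylene toxicity in mice"]
    print(rank_unscreened(feedback, queue))
```

Even in this toy version, the ranking depends entirely on the include and exclude decisions fed back to it, which is why inconsistent eligibility criteria among screeners degrade such tools.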

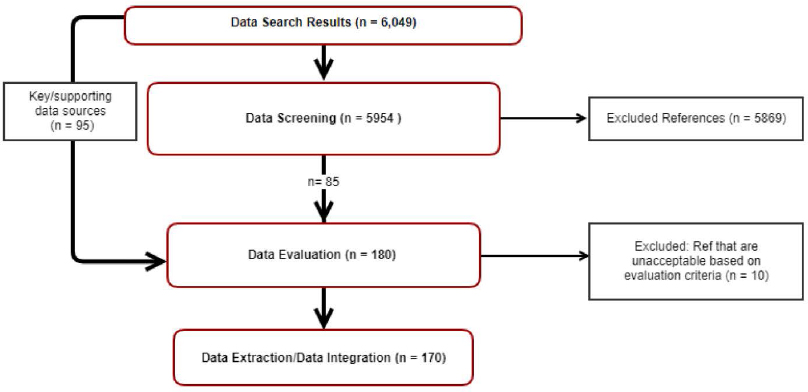

The literature flow diagram for the TCE human health hazard assessment shows that more than 6,000 studies were identified from the initial search using key words, 5,954 studies went through title and abstract screening, and 180 studies were evaluated during the full-text screen (including 95 key studies from previous assessment activities) (see Figure 2-5). Of the 180 studies identified for human health hazard assessment data evaluation, 119 studies were selected for full-text evaluation for animal and mechanistic data (see Risk Evaluation for TCE Systematic Review Supplemental file: Data Quality Evaluation of Human Health Hazard Studies—Animal and Mechanistic Data). No information was supplied in the risk evaluation documents identifying how these studies were selected. While it appears that the studies were selected from the general human health hazard assessment pool, the lack of details on how the agency arrived at the particular subset of animal and mechanistic studies makes it impossible to determine the process by which the studies were identified. Additionally, no information is supplied related to the excluded references (e.g., which studies were excluded and why).

___________________

7 See https://extension.psu.edu/horse-stable-ventilation, accessed November 13, 2020.

Under the inclusion and exclusion criteria based on the PECO statement for TCE (see Table 2-3), the populations include any population and all life stages. Although fetal cardiotoxicity was an important outcome for the TCE evaluation, no justification was given for limiting outcomes of animal and in vitro studies to developmental toxicity.

Critique of the TSCA Approach

Looking at the core review elements of the Statement of Task, which address whether the TSCA approach to systematic review is “comprehensive, workable, objective, and transparent,” the committee finds that the TSCA approach to evidence identification could be improved on all of these characteristics.

Comprehensive

TSCA risk evaluations include searches for evidence in most major scientific databases, backward searching for studies in previous chemical risk assessments, additional gray literature sources, studies submitted under TSCA, and studies identified in peer review (see Figure 2-3). The terms used and databases searched were found to be exhaustive. The ecological assessment and the human health exposure assessment often rely on databases that support the models for those evaluations. The committee found that the process for searching for these types of data may not be comprehensive. For example, the hydrology data used in the TCE evaluation were not the most recent, and the product use information was more than 30 years old.

Workable

The TSCA approach could be more efficient, as the broad PECO statements led to inclusion and exclusion criteria that allowed inclusion of studies that may not be relevant. OPPT is using a number of AI-based tools to help make the process of screening hundreds of references more efficient. Many of these tools have been validated on dozens of systematic reviews, and the committee is supportive of their use (Gartlehner et al. 2019; Howard et al. 2020; Van der Mierden et al. 2019). However, these tools will only work well when precise and explicit inclusion and exclusion criteria are used consistently by all screeners.

TABLE 2-3 Inclusion and Exclusion Criteria for the TCE Risk Evaluation

| PECO Element | Evidence Stream | Papers/Features Included | Papers/Features Excluded |
|---|---|---|---|
| Population | Animal | | |
| Population | Mechanistic/Alternative Methods | | |
| Exposure | Animal | | |
| Exposure | Mechanistic/Alternative Methods | | |
| Comparator | Animal | | |
| Comparator | Mechanistic/Alternative Methods | | |
| Outcome | Animal | | |
| Outcome | Mechanistic/Alternative Methods | | |
| General Considerations | Papers/Features Included | Papers/Features Excluded | |
| | | | |

a Some of the studies that are excluded based on the PECO statement may be considered later during the systematic review process. For TCE, EPA will evaluate studies related to susceptibility and may evaluate toxicokinetics and physiologically based pharmacokinetic models after other data (e.g., human and animal data identifying adverse health outcomes) are reviewed. EPA may also review other data as needed (e.g., animal studies using one concentration, review papers).

b EPA will review key and supporting studies in the IRIS assessment that were considered in the dose-response assessment for non-cancer and cancer endpoints as well as studies published after the IRIS assessment.

c EPA may screen for hazards other than those listed in the scope document if they were identified in the updated literature search that accompanied the scope document.

d EPA may translate studies as needed.

SOURCE: EPA 2018b.

Objective

The process for searching and selecting the evidence lacked objectivity because the inclusion and exclusion criteria were broad and therefore open to interpretation. A benefit of systematic review is that clear, predefined inclusion and exclusion criteria increase the objectivity of the process for selecting the evidence.

Transparent

Overall, the committee found that the lack of information about the specific processes used for the identification of evidence reduced confidence in the findings and was inconsistent with systematic review practices. Information about the search process was scattered across multiple documents within the docket for TCE, making the identification of details laborious and time-consuming. The committee recommends organizing the information in one main document with clear references to supporting documents.

In the TSCA evaluation process, eligibility criteria are not predefined in the protocols and shift during the systematic review process. The outcomes specified are frequently too broad for a true systematic review and could have been narrowed in scoping exercises. These shifts in inclusion and exclusion criteria are particularly problematic when used in building machine learning models, as the shifting exclusion criteria may mislead and confuse the algorithm, resulting in exclusion of relevant studies. The committee also noted that the outcomes to be assessed are not specifically outlined in the protocol. When outcomes are not prespecified, the systematic review and its conclusions are at risk of bias from incomplete reporting.

Recommendations

To address these issues with OPPT’s approach to evidence identification, the committee recommends the following:

- Registering the protocol for each risk evaluation is important: That protocol should include an explicit search strategy, and search strategies for each database should be consistently listed in the appendix to the risk evaluation.

- OPPT could improve the evidence identification process by requesting information from manufacturers, such as ingredients for products, and from organizations that have provided data previously during the peer-review stage. Such requests made earlier in the process could lead to more complete data gathering. TSCA provides OPPT with authority to collect information on chemical manufacturing, processing, and use, which could be used to collect information in advance of the risk evaluation (TSCA section 8(a) Reporting Requirements, 15 U.S.C. 2607).

- Machine learning and AI-based tools should be used for searching and screening, especially if the tools are validated by the developer and users or there are publications available that document this validation.

- Eligibility criteria need to be based on PECO statements that are formulated in a standard way and need to be predefined in the protocol. The eligibility of outcomes needs to be carefully considered a priori to prevent a systematic exclusion of outcomes that could bias the results, such as excluding studies that have findings counter to those anticipated for the included outcomes.

- Documentation of all studies identified in searches should be clearer and should provide a list of included studies, detailed evidence tables of included studies, and documentation of excluded studies with reasons for exclusion.

- OPPT should specify the methods by which the screening will be conducted. Examples include the number of reviewers (e.g., two screeners or one screener and an AI tool) and how disagreements are handled.
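As an illustration of the last two recommendations, the following minimal sketch shows one way dual screening decisions could be reconciled and logged so that every exclusion carries a reason and every disagreement is routed to adjudication. The reference identifiers, the exclusion reasons, and the `Decision` and `reconcile` names are hypothetical and are not drawn from OPPT's process; a second "reviewer" could equally be an AI tool.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Decision:
    """One screener's call on one reference (hypothetical structure)."""
    include: bool
    reason: Optional[str] = None  # exclusion reason; expected when include is False


def reconcile(first: Decision, second: Decision) -> Tuple[str, Optional[str]]:
    """Combine two independent screening decisions into a logged outcome."""
    if first.include and second.include:
        return ("included", None)
    if not first.include and not second.include:
        # Keep every distinct stated reason so the exclusion log stays traceable.
        reasons = sorted({first.reason, second.reason} - {None})
        return ("excluded", "; ".join(reasons))
    # The screeners disagree: flag for adjudication instead of silently picking one.
    return ("conflict", "needs third-reviewer adjudication")


# Hypothetical screening results for three references.
screening = {
    "ref-001": (Decision(True), Decision(True)),
    "ref-002": (Decision(False, "no comparator"), Decision(False, "no comparator")),
    "ref-003": (Decision(True), Decision(False, "outcome not in PECO")),
}

log = {ref: reconcile(a, b) for ref, (a, b) in screening.items()}
for ref, (status, reason) in log.items():
    print(ref, status, reason or "")
```

A log of this shape can be published alongside the risk evaluation as the list of included studies and the list of excluded studies with reasons.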

EVALUATION OF THE EVIDENCE

Following evidence identification, the next step in the systematic review process is evaluation of the evidence (see Figure 2-1). In a systematic review, the individual studies are critically appraised using predefined criteria and then the body of evidence (i.e., all of the included studies for a particular question and outcome) is synthesized (qualitatively and/or quantitatively) and evaluated to draw a conclusion and specify a level of confidence in that conclusion. The systematic review should assess the strengths and limitations of the evidence so that decision makers and stakeholders can judge whether the data and results of the included studies are valid (IOM 2011).

State of the Practice

Individual human, animal and other ecological receptors, and mechanistic studies are assessed for internal validity (commonly referred to as “risk of bias” in systematic review) by considering aspects relevant to the type of study (OHAT 2019). Bias is a systematic error that leads to study results that differ from the truth. Bias can lead to an observed effect when in truth there is not one, or to no observed effect when there is a true effect. Risk of bias is the appropriate term, as a study may be unbiased despite a methodological flaw. The risk-of-bias assessment differs from an assessment of study quality, which is the appraisal of included studies to evaluate the extent to which study authors conducted their research to the highest possible standards (Higgins et al. 2011). Some tools assess risk of bias and study quality separately because the risk of bias addresses how valid the individual studies are; a study can be of high quality and still have a high risk of bias. Many markers of a high-quality study (e.g., whether a study’s investigator has performed a sample size calculation and whether the study is reported adequately or has received appropriate ethical approvals) are unlikely to have any direct implication for the potential for a study to be affected by bias.

There are many tools for assessing risk of bias, such as those used by the Navigation Guide, OHAT, and the IRIS Program, and there is no consensus on the best tool for risk-of-bias analysis. However, there are best practices. For example, tools are preferred that rely on the evaluation of individual domains rather than the creation of overall quality scores (Eick et al. 2020). Such tools provide a structured framework within which to make qualitative decisions on the overall quality of studies and to identify potential sources of bias. Overall quality scores may not adequately distinguish between studies with high and low risk of bias in meta-analyses (Herbison et al. 2006). Importantly, there is also a lack of empirical evidence on the use of quality scores (Jüni et al. 1999).
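The concern with overall quality scores can be made concrete with a small sketch. The domain names, rating labels, and numeric weights below are hypothetical and do not reproduce any published tool; the point is only that a summed score can rank a study with one seriously compromised domain ahead of a study with no serious concerns, whereas a domain-level view keeps that concern visible.

```python
# Hypothetical ordinal scale: a higher number means a greater risk of bias.
RATINGS = {"low": 0, "probably low": 1, "probably high": 2, "high": 3}

# Study A: four clean domains and one seriously compromised domain.
study_a = {
    "selection": "low",
    "confounding": "low",
    "exposure assessment": "high",
    "outcome assessment": "low",
    "selective reporting": "low",
}

# Study B: minor concerns in every domain, none of them serious.
study_b = {domain: "probably low" for domain in study_a}


def summed_score(study):
    """Collapse all domains into a single number (the discouraged practice)."""
    return sum(RATINGS[rating] for rating in study.values())


def flagged_domains(study, threshold="probably high"):
    """Domain-based view: list every domain rated at or above the threshold."""
    cutoff = RATINGS[threshold]
    return [domain for domain, rating in study.items() if RATINGS[rating] >= cutoff]


for name, study in (("A", study_a), ("B", study_b)):
    print(name, "summed score:", summed_score(study), "flagged:", flagged_domains(study))
```

Under these assumed weights, study A receives the lower (apparently better) summed score even though its exposure assessment domain is rated high risk, which is the kind of masking that domain-based tools are meant to avoid.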

While there is inevitably variation in the internal validity and risk of bias across individual studies, it is standard practice to include all studies, even those with a high risk of bias, in the evidence synthesis. The most appropriate method to exclude studies from evidence synthesis is based on predefined exclusion criteria that should preclude an irrelevant study from being evaluated.

Although there is not a specific standard of practice for evaluating exposure data, the agency Guidelines for Human Exposure Assessment discuss the importance of critically reviewing data for use in an exposure assessment. To address the quality of analytical methods, the Guidelines for Human Exposure Assessment suggest a series of questions that should be asked when reviewing data for use in an exposure assessment: Has an authoritative body adopted these (and other considerations about whether the exposure data are useful for the research question being addressed in the exposure assessment)? Were the study objectives and designs suitable for the purpose of the exposure assessment? When evaluating the study data, consideration needs to be given to potential bias in the exposure data, which may be selective for high or low exposures; for example, some occupational monitoring data focus on the most highly exposed workers (EPA 2019b). For data on human exposures that are generated by mathematical models, the Guidelines for Human Exposure Assessment also discuss methods for model evaluation to test whether the analytical results from the model are of sufficient quality to serve as a basis for decisions.

To complete a model evaluation, the model operation and results are verified both qualitatively and quantitatively through calibration, or the process of adjusting selected model parameters within an expected range until the differences between model predictions and field observations meet selected criteria. Then, important sources of uncertainty, including measurement error, statistical sampling error, non-representativeness of data, and structural uncertainties in scenarios and formulations of models, are checked. Sensitivity analysis may also be conducted to determine the extent to which estimates are dependent on variability and uncertainty in the parameters within the model. The guidelines for model evaluation apply to several different types of models included in exposure assessment, such as physiologically based pharmacokinetic (PBPK) modeling and fate and transport models (EPA 2019b).
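The calibration and sensitivity steps described above can be sketched with a deliberately simple example. Everything here is an assumption made for illustration: the first-order decay model, the observations, the expected parameter range, the acceptance criterion, and the 10 percent perturbation are hypothetical and are not taken from the Guidelines for Human Exposure Assessment or from any TSCA risk evaluation.

```python
import math


def model(t, c0, k):
    """Hypothetical first-order decay model: concentration at time t."""
    return c0 * math.exp(-k * t)


def rmse(k, c0, observations):
    """Root-mean-square difference between model predictions and field observations."""
    squared = [(model(t, c0, k) - c) ** 2 for t, c in observations]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical field observations: (time in hours, concentration in mg/L).
observations = [(0, 10.0), (1, 7.5), (2, 5.4), (4, 3.1), (8, 0.9)]
C0 = 10.0            # initial concentration, treated as known
ACCEPT_RMSE = 0.2    # hypothetical acceptance criterion for the calibration

# Calibration: adjust k only within its expected range (here 0.10 to 0.60 per hour).
candidates = [i / 100 for i in range(10, 61)]
best_k = min(candidates, key=lambda k: rmse(k, C0, observations))
best_rmse = rmse(best_k, C0, observations)
calibrated = best_rmse <= ACCEPT_RMSE


def sensitivity(params, t, delta=0.10):
    """One-at-a-time sensitivity: relative change in output when each parameter rises by delta."""
    base = model(t, **params)
    result = {}
    for name in params:
        bumped = dict(params, **{name: params[name] * (1 + delta)})
        result[name] = (model(t, **bumped) - base) / base
    return result


sens = sensitivity({"c0": C0, "k": best_k}, t=4)
print("calibrated k:", best_k, "RMSE:", round(best_rmse, 3), "meets criterion:", calibrated)
print("relative change in prediction at t=4 for +10% in each parameter:", sens)
```

A grid search is used only to keep the sketch dependency-free; a real evaluation would typically use a dedicated optimizer and would also address the other sources of uncertainty listed above, which this example does not attempt.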

As yet, there is not a complementary tool matching the NTP’s OHAT Risk-of-Bias tool for application to ecotoxicology studies. One increasingly used method for assessing the quality of ecotoxicity studies is the Criteria for Reporting and Evaluating Ecotoxicity Data (CRED) (Moermond et al. 2016). CRED, which was built from Klimisch et al. (1997), presents a comprehensive and state-of-the-practice approach for evaluation of ecotoxicological information. CRED includes four reliability categories: reliable without restrictions, reliable with restrictions, not reliable, and not assignable. These are used for 20 reliability criteria falling into the categories of test set-up, test compound, test organisms, exposure conditions, and statistical design and biological response. CRED was developed, in part, to ensure that high-quality ecotoxicology information, including mechanistic evidence, is not excluded a priori from regulatory assessment processes simply because a study was not performed according to a standardized protocol using a standardized model species. The reliability of CRED as an assessment approach was determined from a ring trial that compared it to the Klimisch method (Kase et al. 2016). Results showed that the CRED evaluation method was a more detailed and transparent evaluation of reliability and relevance than the Klimisch method. Ring test participants perceived it to be less dependent on expert judgment, more accurate and consistent, and practical regarding the use of criteria and time needed for performing an evaluation.

Committee Description of the Approach in TSCA Risk Evaluations

OPPT has developed an extensive de novo critical appraisal tool, termed TSCA’s “fit-for-purpose evaluation framework,” which is applied to human, animal, ecological receptors, mechanistic, exposure, fate, and physical chemical property studies. OPPT has stated that the evaluation strategies were developed after review of various qualitative and quantitative scoring systems. OPPT considered items such as NTP’s OHAT Risk-of-Bias tool, CRED, and EPA ORD’s draft IRIS handbook. These tools were not adopted because they do not encompass the entirety of TSCA’s scope and specifically do not include either exposure assessment or fate and transport assessment (Francesca Branch, presentation to the committee, July 23, 2020).