4

Digital Infrastructure Needs for Operational Testing

For decades, the power, speed, and connectivity of digital technologies have been increasing exponentially, with implications for every part of society. Not surprisingly, digital technologies are also dramatically reshaping both military technologies and the ways in which those technologies are developed, tested, and deployed. Over the coming years, this will pose increasingly serious challenges to the military’s operational testing and evaluation (OT&E) while also offering opportunities to make OT&E more responsive, effective, and flexible.

This chapter examines those two complementary aspects of digital technologies in operational testing—the challenges and the opportunities. Many of the challenges arise because of the appearance of novel military technologies whose operational testing requires approaches that are fundamentally different from anything in existence today. Perhaps the best example of this is found in the areas of artificial intelligence, autonomous systems, and machine learning, which are posing new and—so far—unresolved challenges for those who seek to test the operational performance of such technologies.

At the same time, digital technologies are providing new and powerful approaches to OT&E. One example is the rise of digital twins and high-performance modeling and simulation, which are enabling novel ways of testing technologies and systems. David Tremper, Director of Electronic Warfare for the Office of the Secretary of Defense, addressed the committee at the public workshop and shared successes realized in the AEGIS combat system’s use of an onboard digital twin that can operate simultaneously with the operational system. The appearance of digital twins is particularly timely, given that the combination of new domains and operational constraints is increasingly making virtual testing the only practical approach for certain applications.

Finally, as ever more powerful digital technologies enable the collection, processing, and analysis of massive amounts of data from testing, military ranges will be increasingly challenged to collect, process, transmit, store, and analyze these data securely and effectively. An additional challenge will be protecting these data in all of their states (at rest, in transit, and in use) while ensuring that they are accessible and consumable by those who need them, since data generated during operational testing may be at a mix of classification levels. These combined challenges are placing growing demands on the digital infrastructure of the nation’s military ranges.

MODELING AND SIMULATION

Modeling and simulation (M&S) is becoming an increasingly essential part of operational testing. This growing role is driven by factors on both the supply side and the demand side. On the supply side, rapid increases in computing power and memory, combined with improvements in software capability and sophistication, have dramatically expanded what can be done with digital models. M&S now plays a major role in the development of commercial products such as automobiles (Biesinger et al., 2019) and pharmaceuticals (USFDA, 2021), substantially shortening the time it takes to bring a product to market, and it has the potential to greatly improve the testing of military weapons and systems as well. A case study in the effectiveness of this approach is the National Nuclear Security Administration’s Science-Based Stockpile Stewardship program (Reis et al., 2016), in which high-performance computer simulations across multiple physics and materials science disciplines play a leading role in certifying the safety and security of the nation’s nuclear stockpile. In fact, the Department of Defense (DoD) is already building capabilities to use M&S to support future technologies such as advanced aircraft,1 space systems,2 and artificial intelligence.3

On the demand side, a variety of factors are driving the growing role of M&S in OT&E. For example, some test exercises would reveal sensitive information and capabilities. Since it is unrealistic to hide open-air tests or space-based tests from observation, any such testing risks providing information to U.S. adversaries about the capabilities of the systems being tested. If certain details need to remain secret, testing via simulation is often the best, or only, option.4

___________________

1 Panel discussion from John Pearson, Senior Evaluator, 5th/6th Generation Fighter Aircraft to Workshop on Assessing the Suitability of Department of Defense Ranges, January 28, 2021.

2 Panel discussion from COL Eric Felt, Director, AFRL Space Vehicles Directorate to Workshop on Assessing the Suitability of Department of Defense Ranges, January 28, 2021.

3 Panel discussion from Brian Nowotny, DoD Autonomy Test Lead, Test Resource Management Center to Workshop on Assessing the Suitability of Department of Defense Ranges, January 29, 2021.

Another reason to simulate is that some systems simply cannot practically be tested across their entire application space. For example, open-air testing of hypersonic weapon systems requires large geographical areas at various altitudes, and because these systems are expensive single-use devices, it is too costly and time-consuming to explore their full operational envelope through testing.

It would also be infeasible to carry out full-scale, real-world tests of a weapon designed to compromise nearby computers and digital communications. Furthermore, some testing environments cannot be physically replicated on DoD test ranges. For example, artificial intelligence (AI) systems must train and execute on a stream of operational data that reflects their intended operating environment. During development and testing, an autonomous vehicle has access to the same roads as the operational system will. However, an AI system designed to classify threat emitters cannot have constant access to the electromagnetic emissions of anticipated future threats. Instead, simulation models, informed and improved by intelligence over time, can be run to generate sample data.
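As a rough illustration of how simulation can stand in for data that cannot be collected, the sketch below draws labeled samples from parametric emitter models. It is a minimal sketch under stated assumptions: the `EmitterModel` fields (carrier frequency, pulse repetition interval, pulse width), the Gaussian jitter, and all numbers are hypothetical placeholders for whatever intelligence-derived parameters a real threat model would carry, and a real emitter simulation would model far more (scan patterns, frequency agility, propagation).

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class EmitterModel:
    """Hypothetical parametric threat-emitter model (parameters from intelligence estimates)."""
    label: str
    freq_ghz: float  # nominal carrier frequency
    pri_us: float    # nominal pulse repetition interval
    pw_us: float     # nominal pulse width
    jitter: float    # fractional 1-sigma variation applied to each parameter


def synthesize(models, n_per_model, seed=0):
    """Draw labeled (features, label) samples from each emitter model, then shuffle."""
    rng = random.Random(seed)
    samples = []
    for m in models:
        for _ in range(n_per_model):
            features = tuple(
                max(0.0, rng.gauss(nominal, m.jitter * nominal))  # clip unphysical negatives
                for nominal in (m.freq_ghz, m.pri_us, m.pw_us)
            )
            samples.append((features, m.label))
    rng.shuffle(samples)
    return samples
```

For example, `synthesize([EmitterModel("threat_A", 9.4, 1000.0, 1.0, 0.02), EmitterModel("threat_B", 3.1, 250.0, 5.0, 0.05)], 500)` returns 1,000 shuffled, labeled samples; when intelligence estimates change, only the model parameters are updated and the training set is regenerated.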

Finally, running simulations is generally less expensive than running tests with expensive pieces of equipment, and while simulations cannot completely replace physical tests—real-world data will always be necessary for grounding models in reality—simulations can be used in various ways in conjunction with testing, and should be embedded in the planning of test programs.

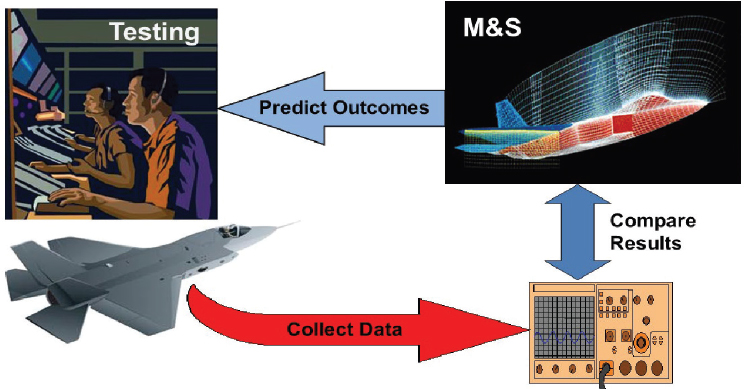

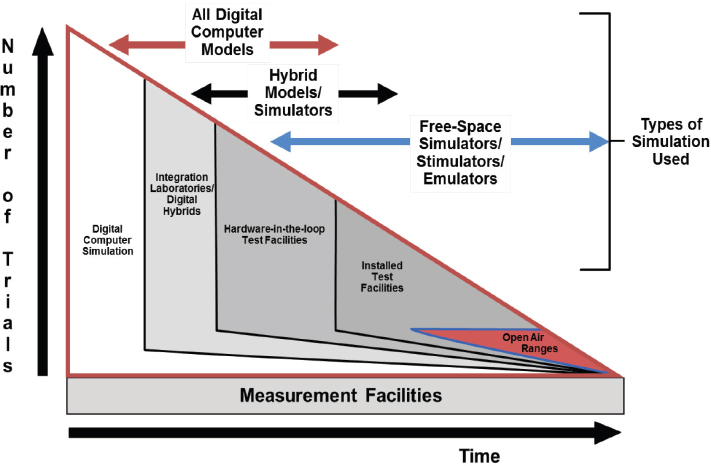

Traditional Use of Modeling and Simulation in Weapons Testing

The classical view of the role of M&S in testing is illustrated in Figures 4.1 and 4.2, taken from a Defense Acquisition University (DAU) training class on the role of M&S in testing (DAU, n.d.). Figure 4.1 illustrates a classical linear process where a test is developed, results are predicted by the M&S tools, the test is executed, and the results are compared to the predictions. This approach has proven successful in accelerating the pace of testing in many programs and has demonstrated some of the promise of M&S in system-level testing. However, this view does not fully exploit the power of modern M&S.
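The compare step of the classical linear process can be pictured with a few lines of Python. This is a minimal, hypothetical sketch (the `TestPoint` record and the tolerance are illustrative, not drawn from the DAU material): each pre-test prediction is checked against the measured outcome, and each test point comes back simply as agreeing or disagreeing, which also shows why this view by itself yields little insight into margin.

```python
from dataclasses import dataclass


@dataclass
class TestPoint:
    name: str
    predicted: float  # M&S prediction made before the test
    measured: float   # result observed when the test was executed


def compare(points, tolerance):
    """Return (name, residual, agrees) for each test point."""
    report = []
    for p in points:
        residual = p.measured - p.predicted
        report.append((p.name, residual, abs(residual) <= tolerance))
    return report
```

For instance, `compare([TestPoint("shot 1", 10.0, 10.5), TestPoint("shot 2", 10.0, 13.0)], tolerance=1.0)` reports that shot 1 (residual 0.5) agrees with the prediction and shot 2 (residual 3.0) does not.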

___________________

4 Panel discussion from Dr. Raymond D. O’Toole, Acting Director, Operational Test and Evaluation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 28, 2021.

This classical view is expanded in Figure 4.2, which highlights the major role of M&S at the program outset in supporting design activities, but with that role diminishing over the program life cycle. Under this approach, the M&S tools developed early in the program are frequently not sustained or evolved to perform an integrated function with developmental testing (DT) and operational testing (OT). Too frequently, once the system enters test, models are redeveloped from scratch, without good linkage to the models that were employed early in the program. Another result of this approach is that the system will frequently be turned over to the warfighter without prior exposure to robust system-level models.

Benefits of Modeling and Simulation in Testing

Simulation should not be viewed only as a replacement for testing or as an alternative that is less expensive or more convenient or that can be carried out in situations where physical testing cannot. Rather, simulation is a fundamentally different approach to testing systems that has its own benefits and advantages that are different from and complement those of physical testing. This means, in particular, that a thoughtful combination of simulation with testing can be much more powerful and effective than either simulation or testing alone. These benefits are presented below:

- A robust simulation environment provides understanding that physical testing cannot. Physical testing will likely be considered the gold standard in operational testing for some time to come. It is important to recognize, though, that testing largely provides a binary result: either the system worked or it failed in that one test. By contrast, the simulation environment can help a user understand the system’s margin in successful tests and identify (through Monte Carlo analysis, for example) those cases in which the system may have been on the edge of failure. Thus, integrating simulation with physical testing will yield a richer characterization of the system and its performance for the warfighter.

- Embracing simulation will drive some testing needs. For a simulation to be useful, the community must have confidence in the validity of its results. Fortunately, a well-formed simulation environment makes it possible to study the sensitivity of the results to individual model parameters and to quantify the uncertainty of the overall result. The discipline of uncertainty quantification has matured to the point that it is possible both to estimate the uncertainty in a simulation result and to identify which parameters drive that uncertainty. Testing can then be focused in a manner that maximizes its value in driving down the overall uncertainty in a simulation and that consequently improves the understanding of the system. Note that the testing required to reduce uncertainty will include both ground testing and flight testing.

- Increased use of simulation at the program level can create opportunities at the campaign level. The major challenges of modern warfare include optimizing and assessing the performance of strike packages that integrate advanced technology systems with legacy systems or multiple advanced technology systems into a combined force. As individual programs establish more sophisticated simulations, the opportunity exists to conduct simulations at the campaign level. Such campaign-level simulations can provide powerful insights: revolutionary technologies can enable revolutionary operational strategies.

- The increased use of simulation is unlikely to reduce the load on test ranges. It is unrealistic to think that simulation will dramatically reduce the need for testing advanced technology systems. The flexibility offered by emerging systems makes it harder for operational testing to demonstrate that the systems function properly across their entire potential application space. A well-formed operational testing program will integrate simulation with live testing to maximize the demonstrated capability of the system. For many advanced technology systems, the threshold testing requirements for developing good confidence in the system will be substantial.
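To make the margin argument concrete, the sketch below runs a Monte Carlo analysis over a toy system model. Everything about the model is hypothetical (the `miss_distance` function, its bias, noise, and wind coefficients, and the threshold); the point is only that many simulated draws yield a distribution of margins, an estimated probability of failure, and the fraction of successes that landed close to the failure threshold, whereas a single physical test returns one pass or fail.

```python
import random
import statistics


def miss_distance(bias_m, noise_m, wind_mps, rng):
    """Hypothetical stand-in for a system model: miss distance in meters."""
    return abs(bias_m + rng.gauss(0.0, noise_m) + 0.8 * wind_mps)


def monte_carlo_margin(threshold_m, n=20_000, seed=1):
    """Return (mean margin, probability of failure, fraction of near-edge successes)."""
    rng = random.Random(seed)
    margins = []
    for _ in range(n):
        wind = rng.uniform(-5.0, 5.0)           # uncertain environment (assumed range)
        d = miss_distance(2.0, 3.0, wind, rng)  # assumed bias and noise
        margins.append(threshold_m - d)         # positive margin means success
    p_fail = sum(m < 0 for m in margins) / n
    near_edge = sum(0 <= m < 1.0 for m in margins) / n  # succeeded by less than 1 m
    return statistics.mean(margins), p_fail, near_edge
```

Because the random draws do not depend on the threshold, rerunning with a tighter requirement (say `threshold_m=5.0`) reuses the same samples and can only raise the estimated failure probability, which keeps such comparisons easy to interpret.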

Taking Full Advantage of the Power of Simulation

The current OT&E enterprise has the opportunity to significantly broaden its use of M&S. The increasing power of computers, combined with the growing sophistication and effectiveness of digital models, is opening up new possibilities in simulation that the nation’s military ranges should take advantage of. To use M&S to greatest effect, it will be necessary to integrate testing and simulation more closely than is currently the case.

New Abilities in Computing Are Opening Up New Possibilities in Modeling and Simulation

Advances in modeling and simulation combined with high-performance computing now provide a powerful capability to employ physics models to understand the performance of advanced-technology weapon systems. Modern M&S tools can provide high-fidelity predictions of the behavior of systems under test with reasonable computing times. Combined with approaches such as Monte Carlo analysis and uncertainty quantification, modern simulation capabilities can provide powerful insights into both the performance margin of the system under test and the sources of uncertainty in its behavior. Cloud computing, virtualization, continuous integration/continuous delivery (CI/CD), and DevSecOps approaches allow simulation developers to provide simulation capabilities as a service (Siegfried, 2021), with ubiquitous access to simulation software and on-demand computational power. Such an M&S capability is valuable not only in the design phases of a new system; it can also continue to evolve to support an integrated role in both DT and OT. In addition, such models have sustained value in supporting both effectiveness assessment and eventual campaign-level simulations.
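One widely used way to identify which inputs drive the uncertainty in a simulation result is variance-based sensitivity analysis. The sketch below estimates first-order Sobol indices with the Saltelli (2010) pick-freeze estimator; it assumes independent inputs, and the model and input samplers passed to it are up to the user (the example that follows uses a made-up linear model). Each index approximates the fraction of output variance attributable to one input acting alone.

```python
import random
import statistics


def first_order_sobol(model, samplers, n=20_000, seed=2):
    """Estimate first-order Sobol indices (Saltelli 2010 pick-freeze estimator).

    model: callable mapping a list of inputs to a float.
    samplers: one callable per input; each draws a value given a random.Random.
    Assumes the inputs are statistically independent.
    """
    rng = random.Random(seed)
    k = len(samplers)
    A = [[s(rng) for s in samplers] for _ in range(n)]
    B = [[s(rng) for s in samplers] for _ in range(n)]
    fA = [model(a) for a in A]
    fB = [model(b) for b in B]
    var_y = statistics.pvariance(fA + fB)  # total output variance
    indices = []
    for i in range(k):
        # Rows of A with column i replaced by the matching column of B.
        fABi = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        v_i = sum(fb * (fab - fa) for fb, fab, fa in zip(fB, fABi, fA)) / n
        indices.append(v_i / var_y)
    return indices
```

For y = 3*x1 + x2 with independent standard normal inputs, the exact indices are 0.9 and 0.1, because x1 contributes 9 of the 10 units of output variance; `first_order_sobol(lambda x: 3.0 * x[0] + x[1], [lambda r: r.gauss(0, 1)] * 2)` recovers them to within a few hundredths. In a test-planning setting, the inputs with the largest indices are natural targets for focused testing.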

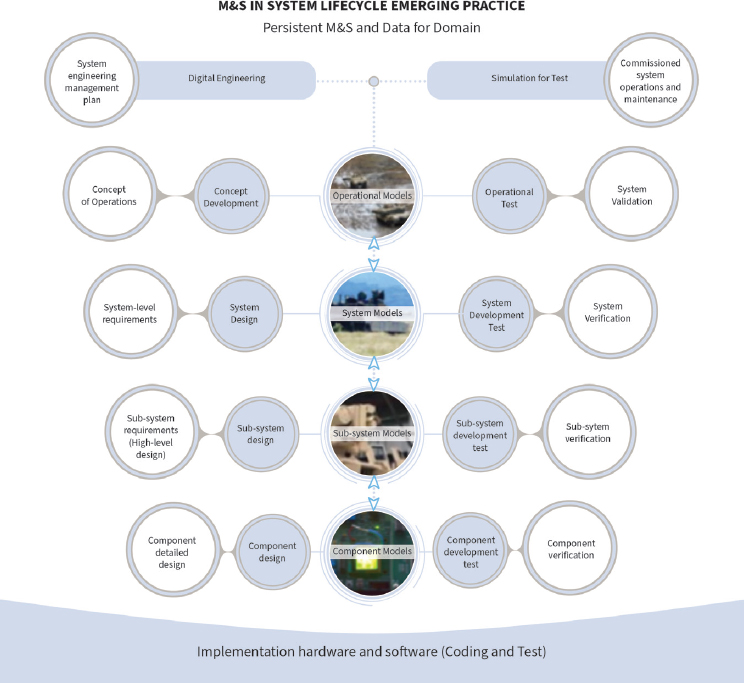

The Importance of Integrating Development and Testing with Simulation

An opportunity exists to further accelerate the pace of testing and to improve understanding of the performance of advanced technology systems by embracing a more integrated approach to development, testing, and simulation. In this approach, illustrated in Figure 4.3, DoD develops and sustains a persistent M&S and data environment for a particular military domain. As shown in the figure, this persistent environment supports all phases of the life cycle. In the early phase, M&S supports concept development and digital engineering to inform requirements and rapidly assess the effectiveness of engineering choices. As system development begins, M&S supports developmental testing to verify the performance of components and subsystems. M&S then supports operational testing to validate the system itself using realistic models of its operating environment, and it continues throughout deployment to assess emerging operational uses as well as the effects of aging on system performance. Furthermore, M&S data and results are shared across different levels of M&S abstraction. For example, component and subsystem models are not necessarily reused in system models, but their data and results enable the development of more abstract models suitable for system validation. Finally, M&S is not simply used to predict test results; it is used to focus testing on particular areas with the goal of driving down uncertainties in system-level understanding. Under this approach, M&S is sustained and evolves through the program life cycle to support the system throughout its deployment.

It is important to note that the traditional approach of saddling single acquisition programs with stand-alone M&S capabilities will not work for mission-driven testing. Mission-driven testing typically spans multiple programs. Furthermore, if DoD waits until program initiation to build a test capability, it will not be available for digital engineering during the early life-cycle phases. Instead, a separate office must develop requirements for, fund, and sustain persistent M&S for critical warfighting domains.

The Joint Simulation Environment (JSE), originally built for testing the F-35 Joint Strike Fighter (JSF) in a simulated environment, is an illustrative example. During his opening comments to the committee, Robert Behler, the former Director of Operational Test and Evaluation, discussed the role of the JSE in evaluating the ability to perform high-level missions against near-peer threats,5 and he recommended a site visit to Patuxent River. The JSE has faced challenges: its development has been delayed by the daunting task of integrating the necessary threat simulators and friendly supporting system simulators in sufficient numbers to represent the theater of interest, and this delay has in turn delayed the F-35 initial operational test and evaluation (IOT&E) and full-rate production decision.6 However, analysis has shown that overcoming these challenges is feasible.7

During their site visit to the Air Combat Environment Test & Evaluation Facility (ACETEF), committee members found the JSE to be an innovative and modern approach to simulating complex DoD mission threads, one that could be replicated for other missions. However, the program also offers some lessons. JSF missions typically involve several other DoD systems, and accurate representations of those external systems are difficult for a separately funded program to develop and manage. The JSE is still in development even though the JSF is well into production, so the JSE was not available to support early life-cycle digital engineering. Additionally, multiple programs could use and benefit from the threat models and the theater representation built for the JSE, but there is no clear mechanism to make the models accessible and consumable by other programs.

In considering the approach illustrated in Figure 4.3, it is important to keep in mind that programs do not need to choose between testing and simulation. The issue is often framed in terms of the question “Can simulation be used to replace testing (and reduce cost)?” when the better framing is to ask “How can simulation be used with testing to maximize the effectiveness of advanced technology systems for the warfighter?” The optimal strategy may involve integrating testing and simulation in a way that produces the greatest value, maximizing understanding and minimizing the cost of testing the program.

___________________

5 Robert Behler, Director of Operational Test and Evaluation, “Study Sponsor Perspective,” to Kickoff Meeting for Assessing the Suitability of Department of Defense Ranges, December 4, 2020.

6 Lieutenant General Eric Fick, Program Executive Officer, “Update on F-35 Program Accomplishments, Issues, and Risks,” F-35 Joint Program Office Testimony to House Armed Services Committee, Subcommittees on Tactical Air and Land Forces and Readiness Joint Hearing, April 22-23, 2021, https://armedservices.house.gov/2021/4/subcommittees-on-tactical-air-and-land-forces-and-readiness-joint-hearing-update-on-f-35-program-accomplishments-issues-and-risks.

7 Defense Daily, “Lack of F-35 Full-Rate Production Decision Provides ‘Launching Point for Criticism of Program,’ PEO Says,” May 13, 2021.

A Vision for the Future of Modeling and Simulation in OT&E

Modeling and simulation have tremendous potential to transform OT&E into a much faster, more powerful, and more cost-effective enterprise, but the overall effectiveness of M&S will depend on the details of its implementation. With this in mind, the committee offers the following recommendations for the use of modeling and simulation in support of the nation’s military ranges.

Sustain Modeling and Simulation Throughout the Program Life Cycle

The role that M&S will play in the development of a system should be a consideration during Technology Maturation and Risk Reduction (TMRR) and be well matured prior to entry into Engineering and Manufacturing Development (EMD), since it is a key driver of the associated activities, schedules, and cost. To effectively apply M&S for design decisions, system integration, and verification, the detailed requirements for specific models and simulations must be provided early to the M&S community and embedded in program milestones. Delivery of defined modeling capability in terms of functionality, fidelity, and maturity frequently paces system development and will have increasing importance in meeting schedules.

A significant challenge with using M&S and data in program development is that the development of the data and the initiation of M&S often come late in the program, so they are available only for system test. If M&S and data resources for a particular set of mission threads were persistent, architected and curated for sharing and joint use, and funded independently of a particular program, they would be available to support many related programs throughout their life cycles. This is why Recommendation 3-1 for a joint program office also includes responsibility to sustain the M&S and data ecosystem necessary for integrated development and test of critical mission threads.

Since M&S has traditionally been used to demonstrate that a preliminary design meets requirements, the associated funding has often been applied well past program inception. However, the most effective use of M&S arises from a well-planned and well-architected infrastructure that provides defined capabilities that are available and scheduled to support early feasibility studies, inform design decisions for multi-disciplinary design optimization, complement integration and test, optimize military operations, support predictable production, guide system sustainment, and realize the benefits of the potential reuse and sharing of models, simulations, analysis tools, and data. This strategy requires a funding profile that is primarily front-loaded and that drives requirements to the M&S community, akin to requirements for prime hardware and software, in order to ensure that well-planned models and simulations are available as scheduled to meet critical program milestones.

The M&S and data ecosystem must be designed to support the exchange of attributes among models running in various native applications, with metadata that defines those attributes as well as the functionality, fidelity, and pedigree of each model. The models will evolve over the course of the program and should be adaptable and extensible for use at different levels within DoD, providing the required capability at each.
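A hypothetical sketch of the kind of metadata record described here: each model declares its functionality, fidelity, and pedigree, plus the named, unit-tagged attributes it consumes and produces, so that tools can check whether two models can exchange data. The class names, the three-level fidelity scale, and the fields are illustrative assumptions, not an existing DoD schema.

```python
from dataclasses import dataclass, field
from enum import Enum


class Fidelity(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class Attribute:
    name: str
    units: str
    description: str


@dataclass
class ModelMetadata:
    model_id: str
    version: str
    functionality: str
    fidelity: Fidelity
    pedigree: list[str]  # provenance: data sources, V&V evidence, accreditation records
    inputs: list[Attribute] = field(default_factory=list)
    outputs: list[Attribute] = field(default_factory=list)


def can_connect(producer: ModelMetadata, consumer: ModelMetadata) -> list[str]:
    """Names of consumer inputs that the producer supplies with identical units."""
    supplied = {(a.name, a.units) for a in producer.outputs}
    return [a.name for a in consumer.inputs if (a.name, a.units) in supplied]
```

Matching on both name and units is deliberately strict; a real ecosystem would likely add unit conversion, coordinate-frame tags, and version constraints.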

Create a Central Modeling and Simulation Resource

Service-level offices currently exist to support modeling and simulation efforts, such as the Air Force Simulation and Analysis Facility (SIMAF),8 the Air Force Agency for Modeling and Simulation (AFAMS),9 and the Army Modeling and Simulation Office (AMSO).10 However, without a DoD infrastructure to provide common, maintained M&S, each program develops or acquires its own models and emulators to support integration and test against various system interfaces and threats, including command-and-control systems, cues from multiple sources, threat radars, targets, etc. This is inefficient and will likely result in system deficiencies from the use of outdated or inaccurate model representations, which are often developed by organizations that may lack expertise in, or full insight into, those systems. There must be a single, managed, trustworthy source for common DoD models that is maintained as part of a core DoD infrastructure. One particular aspect of these centralized M&S resources should be a collection of digital twins that represent adversary equipment or threats against which multiple systems in development will be tested.
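The value of a single managed source can be sketched as a registry that programs query for the current accredited release of a shared model, rather than each program keeping its own copy. This is a minimal illustration under assumed conventions (version tuples, a boolean accreditation flag); `ModelRegistry` and `Release` are hypothetical names, not an existing DoD service.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Release:
    model_id: str
    version: tuple[int, int, int]
    accredited: bool


class ModelRegistry:
    """Hypothetical single authoritative source for common models."""

    def __init__(self):
        self._releases: dict[str, list[Release]] = {}

    def publish(self, release: Release) -> None:
        self._releases.setdefault(release.model_id, []).append(release)

    def current(self, model_id: str) -> Release:
        """Latest accredited release of a model."""
        accredited = [r for r in self._releases.get(model_id, []) if r.accredited]
        if not accredited:
            raise LookupError(f"no accredited release of {model_id}")
        return max(accredited, key=lambda r: r.version)

    def is_stale(self, model_id: str, local_version: tuple[int, int, int]) -> bool:
        """True if a program's local copy is older than the current accredited release."""
        return local_version < self.current(model_id).version
```

A program holding version (1, 0, 0) of a threat model would be told its copy is stale once (1, 2, 0) is published and accredited, while an unaccredited (2, 0, 0) release would not yet displace it.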

M&S also plays an increasingly important role in meeting cybersecurity challenges, in which rapidly evolving threat capabilities drive corresponding changes in requirements for the system under development, as well as for the engineering and test infrastructures. Digital twins that can be subjected to repeated cyberattacks as the threats and their tactics, techniques, and procedures (TTPs) evolve will allow the cyber resiliency of the systems and mission to keep pace.

___________________

8 Modern Technology Solutions, “Simulation and Analysis Facility (SIMAF),” https://www.mtsi-va.com/modeling-simulation/, accessed August 10, 2021.

9 U.S. Air Force, “Air Force Agency for Modeling and Simulation (AFAMS) Mission and Vision,” https://www.mtsi-va.com/modeling-simulation, accessed August 10, 2021.

10 U.S. Army Modeling and Simulation Office (AMSO), “AMSO’s Mission,” https://www.ms.army.mil, accessed August 10, 2021.

Use Uncertainty Quantification

The current DoD instruction on M&S verification, validation, and accreditation recognizes the importance of having and using a process to verify, validate, and accredit M&S tools (DoD, 2018b). Although such a process provides the necessary steps to ensure that a model meets the expectations of the service, the process is insufficient in that it does not take advantage of uncertainty quantification (UQ), a powerful tool for understanding the limitations of models.

A number of sources of uncertainty exist in modeling and simulation; a good discussion is found in Roy and Oberkampf (2011). Some uncertainty is the result of the modeling process itself and is introduced by modeling assumptions and numerical approximations employed in the simulation. Other sources of uncertainty result from the characteristics of the system itself, such as dimensional variations, variability due to manufacturing processes, wear, damage, and uncertainty in the system surroundings. UQ provides a framework to estimate the uncertainty of the result as well as the sources of those uncertainties. This information can be used to identify which tests may be most useful in driving down the uncertainty in the understanding of the system.
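The idea of using UQ to choose the most useful test can be illustrated with the simplest case: uncertain model parameters with a Gaussian prior covariance, a system-level quantity q that is a linear combination of them, and candidate tests that each yield one noisy linear measurement. Under those (strong) assumptions, the posterior variance of q after a test has a closed form, given in the code comment, and candidate tests can be ranked by it. The covariance, test names, and noise levels in the example are invented for illustration.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def posterior_qoi_variance(Sigma, g, h, r):
    """Variance of q = g.theta after observing y = h.theta + noise (noise variance r).

    Linear-Gaussian rank-one update with symmetric prior covariance Sigma:
        Var'(q) = g'Sigma g - (g'Sigma h)^2 / (h'Sigma h + r)
    """
    Sg = matvec(Sigma, g)
    Sh = matvec(Sigma, h)
    return dot(g, Sg) - dot(g, Sh) ** 2 / (dot(h, Sh) + r)


def rank_tests(Sigma, g, candidates):
    """Sort (name, h, r) candidates by the variance they leave in q, best first."""
    scored = [(name, posterior_qoi_variance(Sigma, g, h, r)) for name, h, r in candidates]
    return sorted(scored, key=lambda item: item[1])
```

With `Sigma = [[4.0, 0.0], [0.0, 1.0]]` and `g = [1.0, 1.0]`, the prior variance of q is 5.0. A noisy flight test that observes both parameters (`h = [1.0, 1.0]`, `r = 2.0`) leaves about 1.43; a wind-tunnel test on the dominant parameter (`h = [1.0, 0.0]`, `r = 0.5`) leaves about 1.44; and a precise seeker ground test on the minor parameter (`h = [0.0, 1.0]`, `r = 0.1`) leaves about 4.09. A real planning tool would also weigh cost and handle nonlinear models.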

Recommendation 4-1. A Department of Defense joint program office should establish a shared, accessible, and secure modeling and simulation (M&S) and data ecosystem to drive development and testing across the life cycles of multiple supporting programs. M&S should be planned from early concept development to support the entire life cycle of the system, from requirements generation, through design development, integration and test, and sustainment. Uncertainty quantification should be employed to identify the primary sources of uncertainty in the understanding of the system being developed and to define an integrated testing and simulation activity to reduce those uncertainties to an acceptable level.

The M&S ecosystem should:

- Be shared within a DoD mission space for a set of critical mission threads;

- Contain DoD validated and accredited scenarios, threat models, system models, and common metadata that defines the pedigree, applicability, and limitations;

- Be accessible by concept developers, requirements developers, research and development programs, acquisition programs, and test facilities; and

- Integrate across DoD services and industry partners so that industry models can be used in the ecosystem and DoD models can be used to support digital engineering by industry partners.

INCREASING THE USABILITY AND VALUE OF DATA

The role that digital technologies play in testing and evaluation is not limited to modeling and simulation. These technologies make it possible to record, store, process, and analyze huge amounts of data from testing, data that can provide a much clearer and more complete picture of the performance of a system or system of systems under test. Digital technologies also enable the rapid and secure communication and transfer of data. But taking advantage of these capabilities will require overcoming various challenges. Two of the biggest will be handling massive amounts of data in a way that maximizes their value, and ensuring the interoperability of data among the various segments of the OT&E establishment and across multiple programs in critical warfighting domains.

The Challenge of “Big Data” in Operational Testing

The growing power of digital storage (that is, the ability to hold increasingly large amounts of data in increasingly small spaces and at increasingly low costs), combined with the increasing ability of computers to manipulate and analyze those data quickly and efficiently, has created an era of “big data” in which previously unimaginable amounts of data are collected, stored, analyzed, and communicated. This in turn has revolutionized many fields that rely on large amounts of data, from artificial intelligence and machine learning (AI/ML) to autonomous systems, and it has the potential to have a similar positive effect on OT&E, if the data can be handled effectively.

In the January 2021 workshop, James Amato, the executive test director of the Army Test and Evaluation Command, observed that, as a result of the digital revolution, the military ranges are collecting more and more data from tests. “The amount of data that we push around and that we have to push between ranges, has grown exponentially,” he told the committee. But the ranges are being overwhelmed by that data. “We don’t have the [data] infrastructure today,” he said. “We don’t have the technology and solutions in place today to be able to do that at scale, at speed that will be required to link those.”11 Similarly, another speaker at the workshop, Arun Seraphin, a professional staff member of the Senate Armed Services Committee, identified the lack of an efficient data infrastructure as a major OT&E challenge. The ranges generate large volumes of test data, he said, but they do not manage those data well, and the main reason for that failure is that the ranges’ data infrastructure is inadequate (NASEM, 2021, pp. 9–10).

As Conrad Grant, the chief engineer of the Johns Hopkins University Applied Physics Laboratory, explained at that workshop, there are a variety of data and measurement challenges in large-scale tests, such as those carried out across multiple domains or multiple ranges. “We need instrumentation, telemetry, data collection, data handling, and data analysis that will work at the scale of these large ranges we’re talking about,” he said, “and this is made difficult because of the desired volume of the data we’re trying to collect from the system under test and the desire to make it available for analysis very quickly.”12 Speed is necessary, he noted, because evaluators often must analyze the data that have been collected from range tests on one day in order to determine which tests should be run the next day, but this speed is only possible if the large amounts of data collected from the tests can be quickly transmitted to the centers where the data analysis is done.
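The speed constraint Grant describes comes down to simple arithmetic: transfer time is the data volume in bits divided by the effective link rate. The helper below computes it; the efficiency factor (the fraction of the nominal line rate actually achieved after protocol overhead, encryption, and sharing) and the example volumes and link speeds are assumptions for illustration, not figures from the workshop.

```python
def transfer_hours(volume_tb, link_gbps, efficiency=0.8):
    """Hours to move volume_tb terabytes (10**12 bytes) over a link of link_gbps Gb/s.

    efficiency is an assumed fraction of the nominal line rate actually achieved.
    """
    bits = volume_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600
```

Under these assumptions, moving 50 TB of test data over a 10 Gb/s link takes about 13.9 hours, too long to support next-day test planning, whereas a 100 Gb/s link brings it to about 1.4 hours.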

Also at that workshop, Joshua Marcuse, the head of strategy and innovation in the global public sector at Google, spoke about what will be necessary for the ranges to handle the large amounts of data generated by the tests. In particular, he argued that it is crucial to start planning for how those data will be handled early in the design phase of a project. However, he said, in his work with DoD, he has observed that program officers often design and build systems without a data strategy, with the result being that much of the most meaningful data—the data that can be used to inform operational testing—are not collected (NASEM, 2021, p. 9). “Thinking about the data requirements for a digital engineering approach to this has to begin at the beginning and not be a requirement that comes in at the end when the system is handed over the wall to someone that’s meant to test it and then they realize what’s missing,” he said. This will require program officers to develop a new mindset, he said.13

___________________

11 James Amato, presentation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 29, 2021.

12 Conrad Grant, presentation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 28, 2021.

13 Joshua Marcuse, presentation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 29, 2021.

More generally, Marcuse told the committee at the workshop that military ranges lack the digital resources necessary to handle both the data-intensive and the computation-intensive aspects of OT&E. Carrying out modeling and simulation of the sort required for testing and evaluation requires a tremendous amount of computing capacity, generally more than DoD has available (NASEM, 2021, p. 9). He went on to observe that some military ranges hardly seem to have entered the digital age at all, and he described a visit to an Army testing facility where people “were complaining to us enormously because they had a difficult time keeping track of all the paper copies of the testing results that they needed to get from the range that they were supposed to inspect.”14

Data Communication Issues

One particular challenge posed by the vast amounts of data that future tests will generate is simply moving those data among the relevant platforms, range assets, and participating test ranges. This requires a highly connected, high-capacity, highly secure communications system far beyond anything that exists in the nation's system of military ranges today, and the requirements will only increase as testing becomes more sophisticated and data-intensive and the need for rapid, dependable data communication grows. Addressing these challenges will require improvements in both hardware and software. The specific data communication issues range from basic infrastructure needs (establishing connectivity, increasing data rates, expanding communications frequencies and data formats) to more complex issues such as the lack of standardized data formats and conflicting information security approval authorities.

A recent DoD-supported effort to enable the fast transfer of large volumes of data is the Defense Research and Engineering Network (DREN).15 DREN is a fiber-optic network connecting supercomputing centers for scientific research as well as test and evaluation missions. A secret version of DREN, the SDREN, provides a network for transferring secret-level data. While this could be a promising means of improving intra-range connectivity for complex test events, it is unclear whether DREN or SDREN can accommodate data transfers at multiple classification levels or whether they resolve issues of data interoperability.

___________________

14 Ibid.

15 Defense Research Engineering Network (DREN)/Secret Defense Research Engineering Network (SDREN), "Network Capabilities and Technical Overview," https://www.hpc.mil/program-areas/networking-overview/dren-sdren, accessed August 18, 2021.

Data Security Issues

DoD has unique needs for data security. Test range data are commonly a mix of security classification levels, and they need to support sharing in a multi-level security mode of operation rather than simply defaulting to a "system high" mode of operation, in which all data are handled at the highest classification level present. The data generated at a test range may also be proprietary. Properly facilitating the sharing of required information is therefore both a technical issue and a matter of computer security and bureaucratic approval. The sharing of data, models, and other digital assets among ranges and among services will become increasingly important in coming years, but such sharing raises a number of security issues. As Ed Greer, former Deputy Assistant Secretary of Defense for Developmental Test and Evaluation, said at the public workshop, it is difficult to share data securely among various entities because of the lack of a "robust common IT [information technology] infrastructure that can support multi-level security and the switching of classification levels quickly" (NASEM, 2021, p. 10).
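The difference between the two modes of operation can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a model of any DoD accreditation scheme: classification levels are treated as a single ordered hierarchy (real markings also carry compartments and caveats), the record names are hypothetical, and the multi-level check follows the familiar "no read up" rule of the Bell-LaPadula model. Under system-high handling, an analyst cleared at SECRET receives nothing from a test event's collection once any TOP SECRET item is present, whereas the multi-level check releases every record at or below the analyst's clearance.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    # Assumed, simplified hierarchy of classification levels. Real
    # markings also carry compartments and caveats, ignored here.
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


@dataclass(frozen=True)
class Record:
    name: str      # hypothetical data product name
    level: Level   # classification label attached to this record


def releasable_mls(records, clearance):
    """Multi-level mode: every record keeps its own label, and a user may
    read any record labeled at or below the user's clearance."""
    return [r.name for r in records if r.level <= clearance]


def releasable_system_high(records, clearance):
    """System-high mode: the whole collection is handled at the highest
    level present, so a user cleared below that level gets nothing."""
    if not records:
        return []
    system_high = max(r.level for r in records)
    return [r.name for r in records] if clearance >= system_high else []


# Hypothetical mixed-classification output from a single test event.
test_event = [
    Record("range_weather", Level.UNCLASSIFIED),
    Record("track_data", Level.SECRET),
    Record("emitter_parameters", Level.TOP_SECRET),
]

print(releasable_mls(test_event, Level.SECRET))          # ['range_weather', 'track_data']
print(releasable_system_high(test_event, Level.SECRET))  # []
```

The sketch deliberately leaves out everything that makes real multi-level sharing hard, such as labeling data correctly at the source, downgrade review, and cross-domain transfer approval, which is where the approval-authority issues described above arise.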

The committee's site visits revealed specific examples of security issues related to testing and information sharing. For example, the Air Force Capability and Encroachment Assessment Detail for the Eglin Test and Training Complex indicates that the complex needs T&E infrastructure upgrades to support next-generation testing. The range cannot support the multi-level classification needs of the T&E environment. Furthermore, net-centric warfare requires realistic test environments for systems-of-systems interoperability (Figure 3-36 in DoD, 2018a), which will further exacerbate these security issues.

In speaking with personnel at White Sands Missile Range (WSMR), the committee learned that the Test Resource Management Center (TRMC), the Survivability/Lethality Analysis Directorate at the Army Research Laboratory, and other DoD organizations help WSMR and other test facilities secure their existing cyber systems and create high-fidelity, mission-representative cyberspace environments for testing and evaluation. According to WSMR's strategic plan, maintaining up-to-the-minute awareness of cybersecurity and cyber T&E advances will require WSMR leadership to bring together a multi-directorate group to create a roadmap forward (WSMR, 2016).

Data Interoperability and Security Challenges to Sharing Data

In addition to the basic challenge of moving huge amounts of data from place to place, as described above, military ranges face two further challenges in moving data and other digital resources, such as models, from range to range and system to system quickly and securely: limited data interoperability and the difficulty of ensuring security when data are transferred and shared.

Data Interoperability

The current lack of data interoperability among ranges and systems has several roots. To begin with, legacy systems, which were generally developed with unique data definitions, pose a major challenge to interoperability. People in different places and at different times made choices about their data that were tailored to their own particular requirements, with little if any concern for whether those data could be combined or compared with data generated by others whose choices rested on very different considerations. The result is that the ranges have a mishmash of data systems with varying data definitions and formats. Even today, when the value of data interoperability is more widely recognized than in the past, the designers of individual systems often make locally optimal decisions about data definitions and formats. Limited interoperability between two systems, or among a small number of them, can be overcome with some effort, but the task becomes increasingly complicated as more systems are involved, and the problem is most apparent in complex systems of systems.

DoD has been aware of this issue for quite some time; indeed, in the 1990s the department launched two major efforts to address application interoperability, with the goal of ensuring that data exchanged among applications preserve their meaning and are mutually interpretable (NRC, 1999). The first was the Enterprise Data Model Initiative, which set forth a DoD process through which standard data definitions in functional areas (e.g., command, control, communications, computers, and intelligence [C4I]; logistics; and health care) are developed and then subjected to a cross-functional review before being adopted as DoD standards (DoD, 1994). The second was the Shared Data Environment (SHADE) Program, which enabled different C4I systems to share data segments and to use standardized access methods, relying on middleware to translate data elements from one system to another (DISA, 1996).
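The scaling problem described above can be made concrete with a little arithmetic. The Python sketch below rests on a simplified counting model adopted purely for illustration, not a description of how SHADE or the Enterprise Data Model Initiative were actually built: if every pair of systems exchanges data through its own one-way translator, n systems need n(n - 1) translators, whereas if each system maps its data into and out of one shared set of standard definitions, only 2n mappings are needed. The model ignores the real cost of developing, reviewing, and governing the shared definitions themselves.

```python
def point_to_point_translators(n: int) -> int:
    """One translator per ordered pair of distinct systems (A-to-B and
    B-to-A are counted separately)."""
    return n * (n - 1)


def shared_model_translators(n: int) -> int:
    """One mapping into and one mapping out of a single shared data
    model for each system."""
    return 2 * n


# Compare the two approaches for a few illustrative numbers of systems.
for n in (2, 5, 10, 50):
    print(n, point_to_point_translators(n), shared_model_translators(n))
```

With two systems, point-to-point translation is actually cheaper (2 translators versus 4), which is consistent with the observation that interoperability between a few systems can be achieved with some effort; at 10 systems the counts are 90 versus 20, and at 50 systems, 2,450 versus 100.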

Personnel from the Nevada Test and Training Range told the committee about the instrumentation challenges of providing fourth- and fifth-generation aircraft with encrypted capability, which requires costly instrumentation infrastructure both on the aircraft and in ground support. The 2025 Air Test and Training Range Enhancement Plan (USAF, 2014) noted that the Common Range Integrated Instrumentation System (CRIIS) project will provide most major range test and facility bases with the capability to collect the highly accurate time-space-position information and selected aircraft data bus information needed for testing advanced weapon systems. The enhancements provided by CRIIS are expected to enable interoperability across the major test ranges and support future F-35 testing (DoD, 2018a).

While the elements of DoD’s strategy for achieving interoperability are positive, they are not being fully executed. A 1999 study from the National Research Council found that both the formulation and the implementation of this strategy had gaps and shortfalls (Finding I-1 from NRC, 1999). And according to what committee members heard from staff at TRMC, data interoperability continues to be an issue more than two decades later. Box 4.1 provides a sample of data challenges voiced at the workshop (NASEM, 2021).

To ensure the usability and value of the data collected on the nation's military ranges, the committee makes the following recommendation:

Recommendation 4-2: A Department of Defense joint program office should adopt and promulgate modern approaches for standardization, architectural design, and security efforts to address data interoperability, sharing, and transmission challenges posed by the complexity of next-generation systems. The joint program office should determine how to develop and maintain a protected data analysis tool and model repository for testing, increase the interconnectivity of test ranges, and ensure the development of data protocols for the real-time transfer of data at multiple classification levels.

Few ranges have sufficient bandwidth and clear protocols for the real-time transfer of test data generated at various classification levels. Data that are not prioritized for real-time transfer can take weeks to reach the appropriate analysts, potentially causing significant scheduling delays. A pragmatic, phased adoption approach will need to account for the maturity of data tools and processes, and implementation will require both up-front investment and concerted effort.

Software Is a Challenge in Operational Testing

With the digital revolution, software has become an increasingly important part of military weapons and systems, to the point that today's systems, from the F-35 to artillery, are almost completely dependent on the proper functioning of their software. As a result, the testing and evaluation of military systems includes a major software testing component. However, the committee found that the military's testing ranges have not kept pace with advances in software.

For instance, at the workshop sponsored by the committee as part of this study, Marcuse said that a fundamental challenge facing DoD is that, despite the digital revolution, testing remains optimized for hardware. The implications of that revolution, Marcuse said, have not permeated DoD's rules, processes, institutions, or personnel (NASEM, 2021). Going forward, military testing and evaluation should focus more on the digital elements of systems. The committee heard similar testimony from Raymond O'Toole, the acting director of OT&E at DoD, who said that "dramatically increasing and improving the test and evaluation of software-intensive systems" should be one of DoD's priorities in OT&E (NASEM, 2021, p. 4). And Seraphin told the committee, "We have a real concern over the department's ability to test software, both on the workforce side and on the infrastructure side."16 Seraphin pointed to a number of specific software areas as presenting challenges in testing and evaluation, including software for emerging AI systems, software for command-and-control systems, and software for business systems.

___________________

16 Arun Seraphin, presentation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 29, 2021.

Testing Artificial Intelligence and Autonomous Systems

The greatest software challenge for OT&E—and for T&E in general—is likely to be in the area of AI software and AI-based autonomous systems, as the committee heard from a number of sources. AI and autonomous systems are expected to play a major role in the nation’s defense in coming decades (Ray et al., 2020), but, to date, relatively little has been done to prepare for the testing of such systems. At the workshop, for instance, Jane Pinelis, the chief of testing, evaluation, and assessment at DoD’s Joint Artificial Intelligence Center (JAIC), said that the military’s testing and evaluation capabilities “have not been keeping pace with the speed of AI technology development” (NASEM, 2021). And in site visits to various ranges, committee members heard on multiple occasions that the ranges are completely unprepared to test systems running AI, including autonomous systems.

There are multiple reasons why testing AI and autonomous systems is challenging for the ranges. This is a technological area of rapid progress, which makes it difficult to anticipate the sorts of systems that might employ AI, to predict what their capabilities might be, and to prepare accordingly. To a degree, this is true of any rapidly advancing technology. Because of their nature, however, AI and autonomous systems pose testing challenges unlike any other: they learn and change, and they can exhibit behaviors that no one explicitly programmed into them.

For example, Devin Cate, the director of test and evaluation for the U.S. Air Force, told the committee during the workshop that because AI and autonomous systems are learning systems, they inevitably change and evolve throughout testing, which makes it difficult to characterize their performance in a repeatable manner. Overcoming this issue, he suggested, will require the testing enterprise to work closely with the system developers so that the AI-enabled and autonomous systems are designed from the start with tests in mind; in particular, he suggested, it would be useful to design these systems to collect all the data that will be needed to characterize and judge their performance (NASEM, 2021).

Another testing challenge will be simply setting performance goals for these systems, because it is difficult to connect a system's specific performance parameters to the outcome of operational or mission tests. Things get even more complicated when testing involves humans teaming with AI or autonomous systems. Such integrated tests will be critical for evaluating how the systems will perform in actual missions, but at present there is no well-established approach for carrying them out.

Perhaps the most challenging aspect to testing AI and autonomous systems will be determining how to detect and evaluate emergent behaviors—actions that the systems take that have not been programmed into them but rather that appear as the result of complex interactions among a system’s various components or because of machine learning. A non-military example would be the selection of a chess move by an AI chess system—the machine chooses its moves through its own study of chess, and the machine’s creators have no idea what a move will be until it has been made. The performance of a chess-playing computer can be judged by, for instance, having it play multiple games against human grandmasters (or against other chess-playing computers). It is not clear, however, how to judge the emergent behavior that will appear in AI-enabled military systems. As Pinelis told the workshop, “We need methods for defining, diagnosing, and understanding emergent behavior as well as human training so that the operator can identify emerging behavior as it occurs and do things about it if it is undesirable.”17

A related issue will be how to judge a particular performance—to decide what is “passing”—when an AI-driven system is being evaluated. This was mentioned at the workshop by Marc Bernstein, the chief scientist under the Assistant Secretary of the Air Force for Acquisition, Technology, and Logistics. As an example, he pointed to the Advanced Battle Management System (ABMS) now being developed by the Air Force. Evaluating the ABMS properly will require testing it in complex environments where there is no single “correct” action but rather a collection of options, each with its own advantages and disadvantages, so that the “best” choice is a judgment call. How, he asked, do you set up your operational testing and evaluation in such an ambiguous, gray environment? Furthermore, given that AI systems do their own “thinking” and do not simply behave in ways that have been programmed into them, it is quite possible that the AI-enabled system will come up with an optimal solution that is different from what its evaluators believe is best—and perhaps it would even come up with a solution that its evaluators had never thought of—and in these cases it can be difficult, if not impossible, to judge the system’s performance accurately (NASEM, 2021, p. 3).

Grant pointed out yet another issue at the workshop. When weapon systems on autonomous vehicles are tested and the weapons may be under the control of AI, how can the safety of others on the ranges be assured, given that the AI's decisions are not generally predictable (NASEM, 2021)?

___________________

17 Jane Pinelis, presentation to Workshop on Assessing the Suitability of Department of Defense Ranges, January 29, 2021.

Given all of these considerations, and the fact that the behavior of AI-enabled systems under test can have very severe consequences, Pinelis told the workshop that it is crucial that DoD "push the test and evaluation for AI-enabled systems to where it needs to be with respect to science, data, knowledge, skills, workforce, and infrastructure" (NASEM, 2021, p. 3). At the same workshop, Missy Cummings, professor in the Department of Electrical and Computer Engineering at Duke University, offered a sobering warning about the limits of simulation for autonomous systems: "Simulation can maybe help you do some baby testing early in the phases of autonomous systems, but it simply cannot represent the uncertainty of the real world" (NASEM, 2021, p. 9). Based on its review of the literature and its discussions during site visits, the committee found that the ranges are not adequately prepared for the testing and evaluation of AI and autonomous systems.

Finding 4-1: DoD test ranges are unprepared for the operational testing and evaluation of the increasing integration of AI and autonomous systems in military systems.

In an effort to develop a collaboration platform to support autonomy and AI projects and programs for DoD, the Office of the Under Secretary of Defense for Research & Engineering established the Assured Development and Operation of Autonomous Systems (ADAS) Project, which is overseen by TRMC.18 ADAS was initiated in 2020 to solicit proposals for making data, DevSecOps, software, and infrastructure resources accessible for collaborative settings to support autonomy and AI projects. To enable seamless collaboration across the services and domains for AI and autonomous systems testing, the committee makes the following recommendation:

Recommendation 4-3: The Test Resource Management Center should continue monitoring and supporting the Assured Development and Operation of Autonomous Systems Project, and prioritize efforts to develop a common set of standards, measurement approaches, and operational scenarios from which to evaluate the performance of artificial intelligence (AI) and autonomous systems, while recognizing that testing approaches may differ between AI and autonomous systems.

___________________

18 Arcnet Consortium Press Release, June 5, 2020, https://www.arcnetconsortium.com/trmc-coeus-white-paper-request/.

It is critical that program managers, TRMC, and DOT&E recognize that next-generation systems that continuously evolve as a result of changing data, AI integration, and similar technological advances will require new testing methods. For example, ABMS processes large volumes of data to inform decision making on the joint-domain battlefield, and because changes in data will produce different outputs, testing the evolving ABMS may require continuous operational testing exercises year after year to ensure its suitability and survivability. Autonomous systems, for their part, require more work on the integration of human-machine teams. Further research is needed to advance the technologies and strategies for testing the integration of AI and autonomous systems.

REFERENCES

Biesinger, F., B. Kraß, and M. Weyrich. 2019. "A Survey on the Necessity for a Digital Twin of Production in the Automotive Industry." 23rd International Conference on Mechatronics Technology (ICMT). doi: 10.1109/ICMECT.2019.8932144.

DAU (Defense Acquisition University). n.d. Modeling and Simulation (M&S) and Distributed Testing. Fort Belvoir, VA. https://myclass.dau.edu/bbcswebdav/institution/Courses/Deployed/TST/TST303/Student_Materials/Student%20Lessons%20%28PDF%29/L05S-Model%20%26%20Sim/L05-M%26S. Accessed May 5, 2021.

DISA (Defense Information Systems Agency). 1996. "Defense Information Infrastructure (DII) Shared Data Environment (SHADE) Capstone Document." Fort Meade, MD.

DoD (Department of Defense). 1994. The DoD Enterprise Model. Volume 1: Strategic Activity and Data Models. Office of the Secretary of Defense. January. https://www.hsdl.org/?view&did=469009.

DoD. 2018a. Report to Congress on Sustainable Ranges. Washington, DC.

DoD. 2018b. “Department of Defense Instruction Number 5000.61.” https://www.esd.whs.mil/Portals/54/Documents/DD/issuances/dodi/500061p.pdf.

NASEM (National Academies of Sciences, Engineering, and Medicine). 2021. Key Challenges for Effective Testing and Evaluation Across Department of Defense Ranges: Proceedings of a Workshop—In Brief. Washington, DC: The National Academies Press.

NRC (National Research Council). 1999. Realizing the Potential of C4I: Fundamental Challenges. Washington, DC: The National Academies Press.

Ray, B.D., J.F. Forgey, and B.N. Mathias. 2020. “Harnessing Artificial Intelligence and Autonomous Systems Across the Seven Joint Functions.” Joint Force Quarterly 96:115–128.

Reis, V., R. Hanrahan, and K. Levedahl. 2016. “The Big Science of Stockpile Stewardship.” Physics Today 69(8):46. https://physicstoday.scitation.org/doi/10.1063/PT.3.3268.

Roy, C.J., and W.L. Oberkampf. 2011. "A Comprehensive Framework for Verification, Validation, and Uncertainty Quantification in Scientific Computing." Computer Methods in Applied Mechanics and Engineering 200(25-28):2131–2144. https://www.sciencedirect.com/science/article/abs/pii/S0045782511001290.

Siegfried, R. 2021. “Special Issue: Modeling and Simulation as a Service.” Journal of Defense Modeling and Simulation: Applications, Methodology, Technology 18(1).

USAF (U.S. Air Force). 2014. 2025 Air Test and Training Range Enhancement Plan. Report to Congressional Committees. http://www.nttrleis.com/documents/review/2025%20Air%20Test%20and%20Training%20Range%20Enhancement%20Plan_Jan2014.pdf.

USFDA (U.S. Food and Drug Administration). 2021. “Model Informed Drug Development Pilot Program.” https://www.fda.gov/drugs/development-resources/model-informed-drug-development-pilot-program. Accessed August 5, 2021.

WSMR (White Sands Missile Range). 2016. White Sands Missile Range 2046 Strategic Plan. https://www.wsmr.army.mil.