4

Situation Awareness in Human-AI Teams

Situation awareness (SA) is defined as “the perception of the elements in the environment within a volume of time and space [level 1 SA], the comprehension of their meaning [level 2 SA], and the projection of their status in the near future [level 3 SA]” (Endsley, 1988, p. 97). SA is critical to effective performance. For example, in a recent meta-analysis, Endsley (2021a) found 47 studies in a variety of domains in which SA was shown to be predictive of performance, including military operations (Cummings and Guerlain, 2007; Salmon et al., 2009; Stanners and French, 2005) and military aviation (Endsley, 1990; Sulistyawati, Wickens, and Chui, 2011). It is widely recognized that human SA of AI systems (including current and projected performance, status, and the information known by the system) is critical for effective human interaction with and oversight of AI systems (Boardman and Butcher, 2019; USAF, 2015).

Over the past 30 years, extensive research on human-automation interaction has generated a large database on the importance of the display interface, the automation-interaction paradigm, the mental model, and trust for developing high levels of SA in demanding and dynamic environments (Endsley, 2017). Each of these components will be important for the successful performance of human-AI teams in the future.

SITUATION AWARENESS IN MULTI-DOMAIN OPERATIONS

Based on extensive empirical research on SA, cognitive models of SA have been established (Adams, Tenney, and Pew, 1995; Endsley, 1995a, 1995b, 2015; Wickens, 2008, 2015), and user-centered design principles have improved system design to allow for high levels of SA, including in the design of automation and AI (Amershi et al., 2019; Endsley and Jones, 2012; McDermott et al., 2018). In the committee’s opinion, these principles are foundational for the design of effective system interfaces for human operators in multi-domain operations (MDO) and also for human interactions with the automation and AI that could be used in new systems developed for MDO.

In the committee’s judgment, MDO poses significant challenges to SA due to the high volumes of information involved and the need to integrate data across multiple stove-piped systems. The high data load affects not only the SA of individuals, but also the formation of accurate SA across the team of human operators, who may come from very different operational backgrounds and specializations and may be performing different operational roles. Team SA is defined as “the degree to which every team member has the SA required for his or her responsibilities” (Endsley, 1995b, p. 39). This means that it is not sufficient for some members of the team to have information if the team member who needs it does not know it. This also means that people involved in MDO will have very different SA needs, in terms of information inputs and the transformations of information necessary to generate the appropriate comprehension and projections required by their roles (Bolstad et al., 2002). Related to team SA, shared SA is “the degree to which team members possess the same SA on shared SA requirements” (Endsley and Jones, 2001, p. 48). Systematic methods for determining the specific SA requirements at each level of SA (perception, comprehension, and projection) for any given operational role have been established and used extensively in many domains, including military aviation (Endsley, 1993) and command and control (Bolstad et al., 2002). Overall team SA has been shown to be predictive of team performance in a number of settings (Cooke, Kiekel, and Helm, 2001; Crozier et al., 2015; Gardner, Kosemund, and Martinez, 2017; Parush, Hazan, and Shtekelmacher, 2017; Prince et al., 2007).

Shared SA has also been shown to predict team performance (Bonney, Davis-Sramek, and Cadotte, 2016; Cooke, Kiekel, and Helm, 2001; Coolen, Draaisma, and Loeffen, 2019; Rosenman et al., 2018). As a key advantage, while it can be quite difficult to objectively measure concepts such as shared mental models, there is a well-developed research base on objective measures of SA that have been applied to assess team and shared SA (Endsley, 2021b). That is, for the subset of information common across shared goals, a consistent picture is required to support effective, coordinated actions.
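The distinction between team SA and shared SA can be illustrated with a small sketch. The Python below is not a published SA measure; it is a minimal illustration that assumes each role's SA requirements can be listed as discrete information items and that each role's current assessment of an item can be compared with a known ground truth. The role names, item names, and the functions `individual_sa`, `team_sa`, and `shared_sa` are hypothetical.

```python
from typing import Dict, Set

# All names and data here are hypothetical and chosen only for illustration.
Beliefs = Dict[str, str]  # information item -> a role's current assessment


def individual_sa(required: Set[str], beliefs: Beliefs, truth: Beliefs) -> float:
    """Fraction of a role's required items that the role has assessed correctly."""
    if not required:
        return 1.0
    correct = sum(
        1 for item in required
        if item in beliefs and beliefs[item] == truth.get(item)
    )
    return correct / len(required)


def team_sa(requirements: Dict[str, Set[str]],
            beliefs: Dict[str, Beliefs],
            truth: Beliefs) -> Dict[str, float]:
    """Team SA sketch: each role is scored against its OWN requirements."""
    return {
        role: individual_sa(required, beliefs.get(role, {}), truth)
        for role, required in requirements.items()
    }


def shared_sa(requirements: Dict[str, Set[str]],
              beliefs: Dict[str, Beliefs],
              role_a: str, role_b: str) -> float:
    """Shared SA sketch: agreement on the items BOTH roles require.

    This measures agreement only; two roles can agree and both be wrong.
    """
    shared = requirements[role_a] & requirements[role_b]
    if not shared:
        return 1.0
    a, b = beliefs.get(role_a, {}), beliefs.get(role_b, {})
    agree = sum(1 for item in shared if item in a and a[item] == b.get(item))
    return agree / len(shared)


TRUTH = {"weather": "storm", "enemy_position": "north",
         "fuel_status": "low", "supply_route": "open"}
REQUIREMENTS = {
    "air_ops": {"weather", "enemy_position", "fuel_status"},
    "army": {"weather", "enemy_position", "supply_route"},
}
BELIEFS = {
    "air_ops": {"weather": "storm", "enemy_position": "north", "fuel_status": "low"},
    "army": {"weather": "clear", "enemy_position": "north", "supply_route": "open"},
}

if __name__ == "__main__":
    print(team_sa(REQUIREMENTS, BELIEFS, TRUTH))
    print(shared_sa(REQUIREMENTS, BELIEFS, "air_ops", "army"))
```

In this toy case the two roles disagree about the weather, one of the two items they both require, so shared SA is lower than either role's view of its own picture might suggest. Because agreement is not the same as accuracy, a sketch of this kind would report the per-role scores alongside the agreement score rather than either alone.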

It is critical that information displays for MDO be tailored to the individual SA requirements of each role, to reduce overload (Bolstad and Endsley, 1999, 2000). In addition, to support team coordination and interaction, the displays need to explicitly support team SA by providing a window into the relevant SA of other team members (Endsley, 2008). For example, displays that allow one MDO position to quickly see not only what another position is looking at, but also how information is translated into specific comprehension and projections for other roles, can be useful. This might include understanding the impact of weather on flight patterns or operational delays for an air operations role, and the impact of weather on troop positions and supply vehicles for an army role. While a given individual may not want to see information relevant to other roles constantly, effective shared displays can be designed to provide the ability to turn filters on and off to show such information. Such displays are very useful for supporting integrated operations in which the performance and actions of one teammate affect those of other interrelated operations across the joint battlespace. System displays that support the rapid transformation of information, in terms of physical vantage points, terminology, and mission perspectives of other team members, are needed (Endsley and Jones, 2012).

In many cases, MDO teams may form rapidly and uniquely, in an ad hoc manner, for short-term tasks and missions. Ad hoc teams create many challenges for SA that can negatively affect team cohesion, trust, and effectiveness. These challenges stem from the fact that team members (1) are often not co-located and are heterogeneous with respect to knowledge bases, terminology, training, and information needs; (2) participate during different shifts and along different timelines, joining and leaving the team at different times, and often have multiple responsibilities, such that they require frequent and efficient updating; (3) may have goals that are not well defined, including unclear hierarchies and lines of communication; (4) may have different security clearances; and (5) frequently have not worked with the team enough to form a good understanding of the capabilities and perspectives of their teammates, and thus lack good team mental models (Strater et al., 2008). In the committee’s judgment, these SA challenges necessitate that information displays for MDO explicitly support both individual SA and SA of other team members, so that people can rapidly understand the implications of new information for both their own plans and actions and the activities supporting the mission of the entire team. Methods for supporting this goal have been developed and applied to army command and control operations under the Future Combat Systems/Brigade Combat Team program that would apply to MDO (Endsley and Jones, 2012; Endsley et al., 2008).

Another significant challenge in future operations will be the actions of adversaries to attack the information network through cyber attacks or manipulation of information flows. These attacks may be obvious, such as denial of service or shutdown of trusted sensors and assets, or more subtle, such as an attack on the integrity of data flowing into the system (Stein, 1996). Such information attacks can have a significant negative impact on the accuracy of human SA and decision making (Endsley, 2018a; Paul and Matthews, 2016), or could introduce difficult-to-detect AI biases, for example through data poisoning.

Key Challenges and Research Gaps

The committee finds three key gaps in the research around SA in MDO.

- Work is needed to establish displays and information systems for managing overload and providing team and shared SA across joint and distributed MDO.

- Methods to support information integration, prioritization, and routing across MDO need to be investigated.

- Methodologies for detecting and overcoming adversarial attacks on SA need to be developed.

Research Needs

The committee recommends addressing two major research objectives to improve SA in multi-domain operations.

Research Objective 4-1: Team Situation Awareness in Multi-Domain Operations.

Methodologies for supporting individual and team situation awareness (SA) in command and control operations need to be extended to multi-domain operations (MDO) (Endsley and Jones, 2012). Research is needed to determine effective methods for managing information overload in MDO and for supporting SA across joint operations, to include high levels of situation understanding and projection of current and potential courses of action. Interface designs to support the unique needs of ad hoc teams in MDO are needed. Methods for using AI to support information integration, prioritization, and routing across the joint battle space are needed, as are methods for improving information visualization to support SA. Human-AI teaming methodologies are needed to achieve high levels of SA when operating on-the-loop, allowing effective oversight of AI operations that occur on fast timescales or contain high volumes of data that cannot be managed manually. In on-the-loop situations, there is no expectation that people will be able to monitor or intervene in operations prior to automation errors occurring; however, it may be possible to take actions to turn off the automation or change automation behaviors in an outer control loop.

Research Objective 4-2: Resilience of Situation Awareness to Information Attack.

Methodologies are needed to improve the ability of humans to detect and deflect adversarial attacks on information integrity, accuracy, and confidence, which can affect the situation awareness of both humans and AI. These methods need to take human decision biases and potential AI biases into account (see Chapter 8).

SHARED SA IN HUMAN-AI TEAMS

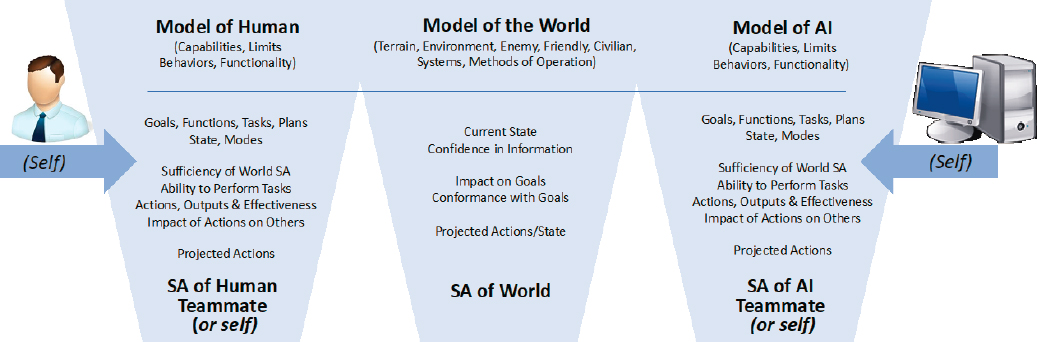

With the move toward the expectation that AI will function as a teammate as opposed to simply a tool, a new emphasis on the importance of creating effective team SA and shared SA within the human-AI team arises (Shively et al., 2017; USAF, 2015). As AI becomes more capable as a teammate it will, in many cases, be expected to collaborate actively to support task achievement (including anticipating human needs and providing back-up when needed), ensure goal alignment, and share status on functional assignments and task progress. These expectations create new requirements for the development of shared SA between humans and AI systems (USAF, 2015). Both humans and AI systems will need to develop internal SA of the world, themselves, and others, which will need dynamic updating within the context of more static and general mental models (Figure 4-1).

The situational models required for the development of shared SA between humans and AI systems include the following:

- Situation: Just as the humans involved in MDO command and control need high levels of SA to support their decision making, AI will need to form and maintain an accurate situational model of the world for its own decision making (Burdick and Shively, 2000; Endsley, English, and Sundararajan, 1997; Jones et al., 2011; Kokar and Endsley, 2012; SAE International, 2013; Salerno, Hinman, and Boulware, 2005; USAF, 2015; Zacharias et al., 1996).

- Task environment: As future human-AI teaming is envisioned to involve dynamic function allocation, in which responsibility for tasks may shift between human and AI teammates dynamically over time based on current capabilities and needs (see Chapter 6), an up-to-date model of the work to be performed, including current goals, functional assignments, plans, task status, and the states and modes of human and automation involved in the work, is needed (Endsley, 2017; USAF, 2015). Whereas this type of information may have been more static in the past (and therefore part of a relatively stable mental model), systems in which roles and responsibilities may shift dynamically between human and AI teammates necessitate active maintenance of this information as a part of SA.

- Teammate awareness: Just as humans need to accurately understand the reliability of AI for a given situation, AI may also need to maintain a model of the state of its human teammates to perform its tasks (Barnes and Van Dyne, 2009; Carroll et al., 2019; Chakraborti et al., 2017a).

- Self-awareness: People need to maintain meta-awareness of their own capabilities for performing their assigned tasks. For example, awareness of the effects of fatigue, excessive workload, or insufficient training could trigger team members to shift tasks to optimize team performance (Dierdorff, Fisher, and Rubin, 2019; Dorneich et al., 2017; NRC, 1993). Similarly, AI may need to formulate a model of its own performance and limitations (i.e., AI self-awareness) (Chella et al., 2020; Lewis et al., 2011; Reggia, 2013), to alert humans to step in when needed or to assign accurate confidence levels to its outputs.¹

In addition to the development of an accurate understanding of the situation, tasks, and teammates by both the humans and the AI system, it is important that these situation models be aligned to facilitate smooth team functioning. That is, to facilitate team performance, teammate dyads need to maintain consistent shared SA on the situational goals and responsibilities that are in common with those of each teammate (Endsley, 1995b), along with maintaining shared SA on the state of the task environment (Endsley and Jones, 2001; USAF, 2015). Similarly, to create effective teamwork, models of self and teammate may need to be aligned. Shared SA has also been referred to as “common ground” (Klein, Feltovich, and Woods, 2005), a term borrowed from verbal discourse literature (Clark and Schaefer, 1989); however, some researchers have found common ground methodologies difficult to apply in practice and lacking in measures (Koschmann and LeBaron, 2003). The shared SA literature contributes well-developed measures and models that can be applied in the context of human-AI teams (Endsley, 2021b).
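One way to picture the alignment described above is as a comparison between the models held by each teammate. The following Python sketch is purely illustrative and is not drawn from the cited literature; it assumes that each of the four models can be reduced to simple key-value assessments. The class `TeammateSA`, the functions `task_mismatches` and `teammate_model_gaps`, and the example values are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TeammateSA:
    """Hypothetical container for the four models held by one teammate."""
    name: str
    situation: Dict[str, str] = field(default_factory=dict)         # model of the world
    task_environment: Dict[str, str] = field(default_factory=dict)  # goals, assignments, task status
    teammate: Dict[str, str] = field(default_factory=dict)          # model of the other teammate
    self_model: Dict[str, str] = field(default_factory=dict)        # own capabilities and limits


def task_mismatches(a: TeammateSA, b: TeammateSA, shared_keys: List[str]) -> List[str]:
    """Shared task-environment items on which the two teammates differ."""
    return [
        key for key in shared_keys
        if a.task_environment.get(key) != b.task_environment.get(key)
    ]


def teammate_model_gaps(observer: TeammateSA, observed: TeammateSA) -> List[str]:
    """Items where the observer's model of a teammate differs from that teammate's self-model."""
    return [
        key for key in sorted(observed.self_model)
        if observer.teammate.get(key) != observed.self_model[key]
    ]


# Illustrative data only: both teammates agree on the goal, but each believes
# the other is responsible for route planning.
human = TeammateSA(
    name="operator",
    task_environment={"goal": "surveil sector 4", "route_planning": "ai", "target_id": "human"},
    teammate={"confidence": "high"},
    self_model={"workload": "high"},
)
ai = TeammateSA(
    name="ai_agent",
    task_environment={"goal": "surveil sector 4", "route_planning": "human", "target_id": "human"},
    teammate={"workload": "normal"},
    self_model={"confidence": "low"},
)

if __name__ == "__main__":
    print(task_mismatches(human, ai, ["goal", "route_planning", "target_id"]))
    print(teammate_model_gaps(human, ai))
    print(teammate_model_gaps(ai, human))
```

In this example the mismatch on functional assignment is exactly the kind of gap that dynamic function allocation could introduce, and the two teammate-model gaps show the human overestimating AI confidence while the AI underestimates human workload. Real situation models would be far richer than flat dictionaries, so this is best read as a way of naming the comparisons involved, not as a proposed design.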

The committee finds that research to date has developed models of human-human team SA that may be leveraged to understand the factors underlying effective human-AI team SA, which include a focus on (1) team SA requirements, including methodologies for determining both individual and shared SA needs; (2) team devices, such as shared displays, shared environments, and communications; (3) team mechanisms, including shared mental models; and (4) team processes (Endsley, 2021b; Endsley and Jones, 2001). Other models have focused on team processes involved in establishing team SA (Gorman, Cooke, and Winner, 2006) (see Chapter 3).

___________________

1 AI self-awareness in this sense means only its ability to track its own performance and capabilities and does not imply any form of consciousness.

The opportunities for SA mismatches within human-AI teams are significant. People and AI systems have very different sensors and input sources for gathering information and will likely have quite different mental models for interpreting that information. Thus, in the committee’s judgment, significant emphasis is needed on the development of effective displays for aligning SA in human-AI teams (see Chapter 5), and on the creation of effective communications and team processes (see Chapter 3). Further, the development of human-AI interaction methods that reduce workload while maintaining engagement is important (see Chapter 6), as well as the creation of training and other processes for building team mental models (see Chapter 9). Establishing appropriate levels of trust within the human-AI team also has a direct effect on how people allocate their attention, and directly affects SA (see Chapter 7).

Key Challenges and Research Gaps

The committee finds five key research gaps that exist for SA in human-AI teams. These gaps exist in

- Methods for improving human SA of AI systems;

- Methods for improving shared SA of relevant information between human and AI teammates, across diverse types of teams, tasks, and timescales;

- The ability of an AI system’s awareness of the human teammate to improve coordination and collaboration, and best methods for implementation;

- The effectiveness of an AI system’s self-awareness for improving human-AI coordination; and

- The development of AI situation models to support robust decision making and human-AI coordination.

Research Needs

The committee recommends addressing five outstanding research objectives for developing effective SA and shared SA in human-AI teams.

Research Objective 4-3: Human Situation Awareness of AI Systems.

Methods for improving human situation awareness of AI systems are needed. This research would be well served to consider AI systems developed for distinct types of applications (e.g., imagery analysis, course-of-action recommendations, etc.), learning-enabled systems versus static AI systems, and operations at various timescales. It would be advantageous for research on situation awareness to include a consideration of AI status, assignments, goals, task status, underlying data validity, effective communication of system confidence levels, ability to perform tasking, and projected actions.

Research Objective 4-4: Shared Situation Awareness in Human-AI Teams.

Research to determine the amount of shared situation awareness (SA) needed when working with AI systems needs to consider various aspects of SA (e.g., SA of the environment, broader system and context, teammates’ tasks, teammates’ performance or state, etc.), and the effects of various types of tasks and concepts of operation on SA needs (e.g., flexible function allocation versus rigid functional assignments). It would also be beneficial for this research to consider the challenges of differing timescales of operation for humans and AI systems that may occur in various settings (e.g., cyber operations or imagery analysis), and the effects of team composition (e.g., multiple humans or multiple AI systems). Methods for improving shared SA between human and AI teammates need to be identified. Furthermore, it would be advantageous to study the evolution of beliefs about how much and what type of SA and shared SA is needed, as these beliefs will govern information-seeking behaviors in operational environments.

Research Objective 4-5: AI Awareness of Human Teammates.

Does an AI system’s awareness of the state of the human operator improve its performance? What factors of human state, processes, or performance can be leveraged? How can an AI system’s awareness of the human teammate be best utilized to improve coordination and collaborative behaviors for a human-AI team? If used, what methods are best for helping the human to understand any changes that occur in AI system performance or actions, and for maintaining two-way communication regarding state assessments? Tradeoffs need to be considered as to whether to support adaptation to the individual, the role, or the notion of any human collaborator, as well as whether to support co-adaptation of human to AI system and vice versa (Gallina, Bellotto, and Di Luca, 2015).

Research Objective 4-6: AI Self-Awareness.

Can AI self-awareness be employed to improve human-AI coordination? Can an AI system develop a self-awareness of its own limitations that can be actively employed to improve hand-offs to human teammates in certain environments and tasks? What types of AI self-awareness are needed?

Research Objective 4-7: AI Situation and Task Models.

To perform effectively in complex situations, AI systems need to form situation models that account for a variety of contextual information, so that these systems can appropriately understand the current situation and project future situations for decision making. Although current machine learning-based AI generally performs only simple subsets of tasks (e.g., categorization of images or datasets), more complex and capable AI systems will need combined situation models across multiple objects, environmental features, and states to create more robust situation understanding. These approaches to AI will require causal models to support situation projections, and will need to incorporate methods for handling uncertainty, prioritizing information, dealing with missing data, and switching goals dynamically. Further methods are needed to create AI models of the dynamic task environment that can work with humans to align or deconflict goals and to synchronize situation models, decisions, function allocations, task prioritizations, and plans, to achieve appropriate, coordinated actions.

SUMMARY

A considerable amount of research has been conducted on supporting human SA in complex systems, including military operations. This research is directly applicable to MDO, and detailed design guidance on improving SA using automated systems and AI has been established. In addition, models and methods for supporting team and shared SA in human-human teams have been developed, which can be applied to human-AI teams. More research is needed to better understand the role of shared SA in human-AI teams for complex MDO settings, and to develop and validate effective methods for supporting shared SA.