3

Information Content

INTRODUCTION

The previous chapter discussed the information infrastructure to support information superiority. This chapter examines issues related to the content that will be carried by that infrastructure. In particular, this chapter focuses on three aspects of information that affect the value and utility of information content: (1) sources, (2) applications, and (3) processing. Sources of information are proliferating, and many new publicly accessible sources will be utilized by the naval forces of the future. Applications that manipulate information content are highly varied but can be classified according to requirements for timeliness of the application outputs. Certain applications require rapid decision-making based on deliberately acquired information, whereas other applications will use intelligent software agents and other means to probe large preexisting databases. Finally, improved processing methods enable applications to be executed more efficiently, thus enhancing the value of information content. Automatic target recognition (ATR) requires advanced algorithmic techniques and is an example of a class of applications where current limitations on processing capability represent a significant impediment to implementation. ATR can be viewed as a special case of information understanding that uses algorithms to recognize patterns in databases of information.

From the user's point of view, representation is an essential aspect of information content. How information is presented, whether to a human operator or to an automatic analysis system, is often a principal determinant of its utility. Information can also take on new significance when organized in useful ways. The Department of the Navy has a particular need for compact representations of information because of the constraints imposed by the relatively limited bandwidth available for ship-to-ship and ship-to-shore communications.

Information content conveyed to the warfighter at any level of command is the end product of a system that is integral to the security of the nation. The information system, consisting of the infrastructure, information content, sensors, and security systems to safeguard the information, forms an essential asset in the repertoire of defensive systems, on a par with platforms and weapons.

INFORMATION SOURCES

The continuing development of inexpensive, powerful processing capabilities ensures that the coming decades will be marked by ongoing advances in information technology. Increasing access to advanced information processing and information management capabilities will lead to a proliferation of activities that generate, maintain, manage, and exploit information, and it is certain that the military will be one of the many important players in the new world of information-centered activities.

The DOD and the Department of the Navy need to be in a position to exploit a wide variety of available information sources. Certain of the military's information needs are unique and highly specialized, and will require focused investment to develop the requisite technology. This is particularly true in the area of mapping, charting, and geodesy. Here, continued R&D and infrastructure upgrades will be required to produce geospatial data for the warfighter in a timely fashion. Other needs, which may be less unique and less specialized, will be met by appropriately exploiting sources of information that will be available in the public domain.

New sensor systems and the increasing use of indigenous sensors are emerging from the dramatic growth of the commercial communications infrastructure, and the data they generate represent a new class of public-domain information. These systems can be classified into two categories: commercial systems that will be developed in order to sell information for profit, and sensors used in conjunction with information systems for the benefit of the user. The first category includes commercial satellite imagery, databases and mailing lists available for purchase, and commercially operated data mining sources. The second category includes automobile sensors communicating with a "smart" highway; smart homes providing communication links between appliances and manufacturers for maintenance and monitoring; remote camera systems operated by organizations for the benefit of the public, such as town-square imaging systems accessible over the World Wide Web; and water measurement sensors that transmit reservoir fill levels to public water works. Together, these two categories constitute an enormous body of information that, typically, will reside within the public domain, and from which it may be possible to extract, for example, data regarding the location of an individual or vehicle, or the state of a particular system at any given time. This type of information will become increasingly available to friend and foe alike. The Navy and Marine Corps can either attempt to exploit the information in a way that is more timely and deeper than all potential adversaries, or can attempt to deny the information (or utility of the information) to adversaries. While a balanced approach is advisable, it would be prudent to assume that all information is persistently available to all parties, and that information superiority will be derived from greater awareness, planning, and exploitation capabilities.

Accordingly, it is incumbent on the Department of the Navy to position itself such that it is capable of exploiting this rich new class of sensors and information. In some cases, directly relevant information can be purchased or procured. More often, however, the required information must be inferred from public sources and those inferences then transformed into a form relevant to Navy Department needs. For example, publicly accessible town hall sensors and reservoir data can be used to infer local conditions. Traffic analysis can indicate levels of activity, and movements of individuals can indicate deployments. Information on local conditions that can be inferred from the direct data could be extremely useful when appropriately presented to a commander or operator. Data mining technologies and collaborative filtering techniques can be used to deduce information and compact it succinctly for analysis and presentation.

Indeed, the body of information that will be available can, if properly exploited, lead to a revolution in the intelligence field, and provide the data sources for intelligent software agents and automated inferencing engines that can be crucial to Navy and Marine Corps missions.

APPLICATIONS

The utility of information depends, in large measure, on applications that take raw data as an input, analyze them, and transform them into a representation that is meaningful to operators and commanders. This task is so demanding that, ultimately, a new class of applications technology will be required, which could be called information understanding, and which will include a suite of advanced methods for processing, analyzing, and representing information. Information understanding could greatly extend the capability of ATR systems, for example, as well as other technical means of gathering intelligence. Such enhanced capability could be important not only for target recognition using sensors designed to acquire battlefield information, but also for reasoning about disparate information sources on a longer time scale, to provide deep understanding and facilitate planning for potential military operations. Traditionally, sensor information is fed to a processor that performs pattern recognition functions in order to detect targets. This methodology assumes, however, that sensor data is a rare and precious commodity that must be processed immediately. It also assumes that the relevant information is localized in a sensor stream. In a sensor-rich environment, the timing of the processing can be matched to the requirements of the application. New applications for the exploitation of sensor information are afforded by the ability to consider processing outputs from multiple disparate sensor sources over longer periods of time.

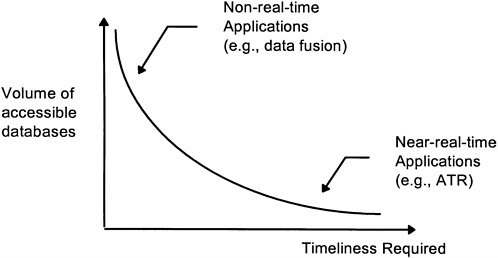

As mentioned above, certain applications require immediate decisions and therefore need rapid access to information with minimal latency. Other applications base their decisions on information that has a long time constant, and their processing may take hours, days, or weeks. Such applications can afford the luxury of accessing massive databases. If the processing must be completed in time t and the available bandwidth is B, then the amount of information available to the application for making a decision is at most tB. An intelligent decision always requires a certain minimum amount of information, and thus bandwidth requirements are necessarily high for applications that require timely decisions. However, applications that can be executed at a more leisurely pace have the opportunity to make more intelligent decisions by massively increasing the total amount of information available to the processor, either by virtue of the additional time, or through large bandwidth capabilities, or both. Accordingly, requirements for timely decisions impose constraints on the amount of data that can be accessed, whereas longer-term applications can access large, distributed, disparate databases and make use of more intensive intelligent processing. This relationship is illustrated schematically in Figure 3.1.
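The bound tB can be made concrete with a short calculation. The sketch below is illustrative only: the function name, link rates, and decision times are hypothetical values chosen for the example and do not come from this report.

```python
def max_information_bits(decision_time_s: float, bandwidth_bps: float) -> float:
    """Upper bound t * B on the bits an application can receive before it must decide."""
    if decision_time_s < 0 or bandwidth_bps < 0:
        raise ValueError("time and bandwidth must be non-negative")
    return decision_time_s * bandwidth_bps


# Hypothetical cases (assumed values, for illustration only).
cases = {
    "fast decision, 10 ms at 1 Mbit/s": (0.010, 1e6),
    "surveillance, 1 hour at 1 Mbit/s": (3600.0, 1e6),
    "planning, 1 week at 64 kbit/s": (7 * 24 * 3600.0, 64e3),
}

for name, (t, b) in cases.items():
    print(f"{name}: at most {max_information_bits(t, b):.3g} bits")
```

Even over the much slower assumed link, the week-long application can draw on far more data than the fast one, which is the tradeoff the paragraph describes.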

Not only is there a tradeoff between timeliness and the amount of information accessible to the process, but the kinds of information sources that are useful will also be affected by the type of application. The value of some information decays over time, and applications with long processing times will, in general, only be utilized for processing information whose value persists over a reasonable time scale. On the other hand, applications that make relatively fast decisions will need immediate access to timely information, and thus will likely be tightly coupled to sensor systems.

Indeed, there is an overriding need for awareness of what information is available and where it can be located, as well as for timeliness and assurance of the information sources. With such awareness, information can be matched to the application, and action can be taken in advance to ensure that the information will be available when needed. Finally, it is important to be able to perform inferencing, to adapt information to representations that are useful for military needs, and to fuse information from multiple sources. Naval forces have a particular need for automated inferencing for many applications due to the comparatively limited bandwidth that will connect naval platforms to the information sources. That is, while shore-based human analysts will be able to make deductions based on presentation and visualization of massive databases connected by ever-increasing fiber channels, analysts resident on naval platforms will have to work with data streams that are constrained by satellite and radio connectivity. Processing that performs inferencing and transformation of information is thus required not only to aid the interpretation, but also for compression.

Figure 3.1 Categorization of information applications.

Considering the proliferation of information sources, and the need to match sources to the classes of applications, it is incumbent on the defense establishment to develop an awareness of the available information. In order to perform these functions and to ensure timely and convenient access to those sources by naval assets, responsibility should be designated within the Department of the Navy for the identification, organization, and classification of all relevant information sources. Assembling links to information sources will include awareness of novel information providers, creation of maritime-specific databases, mirroring of certain databases for rapid accessibility, and vigilance in the maintenance of the quality of the databases.

PROCESSING OF INFORMATION

The growth of the information infrastructure and the proliferation of new information sources will enable new ways of exploiting information for military purposes. As discussed above, some applications require rapid decisions and are thus tightly coupled to local sensor streams, whereas other applications can make use of massive databases and can perform automated intelligence operations through processing occupying longer periods of time. More advanced algorithmic methods will be required to facilitate extraction of useful content from massive information databases. For example, there is a clear need for more effective means of using sensor data to locate targets, which is a special case of the recognition capabilities that typify the military uses of information processing.

The civilian financial sector has a similar need for advanced recognition capabilities, and commercial interests are currently helping to drive the development of database mining and information fusion techniques. Because of the critical importance of information to military systems, however, the Defense Department should strive to remain in the forefront of ongoing developments in this area. Yet, before recognition capabilities can be developed that extend target recognition in localized sensor data, generalized capabilities for automatic target recognition need to be successfully demonstrated and refined.

The Department of the Navy should develop capabilities in information understanding by identifying database mining methods that are applicable to defense needs, and by funding research in the broad area of recognition theory. However, as a base, ATR technology should be assessed and understood in a broad context by the Navy and the Marine Corps. A summary assessment is presented below, together with some hints at a unifying theory. The intention of the following discussion is to develop a basis for the more general discussion of information understanding that makes up the balance of this chapter.

AUTOMATIC TARGET RECOGNITION

Introduction

With naval forces continuing to take the leading role in power projection and management of the early stages of regional conflicts, a central focus of technology for naval forces will be the determination and tracking of targets. The environment is necessarily target-rich and will likely pose difficult problems for target recognition algorithms, with targets and non-targets in close proximity. The ability to distinguish between targets and non-targets automatically and non-cooperatively is generally considered to be the province of automatic target recognition (ATR). Despite the fact that ATR technology is not yet mature, it is already clear that this technology will be an essential component of naval activity in the future. The Navy has a particularly high stake in the ability of ATR to effectively distinguish among target types, in order to provide accurate on-site intelligence in advance of conflicts and to properly, efficiently, and safely effect strike missions during conflicts.

One cannot expect a single black box that will work for all scenarios and all applications. In general, ATR is not a single technology per se, but rather a suite of technologies that incorporates a variety of approaches tailored to varied target acquisition scenarios. Indeed, the variety of ATR algorithmic approaches will likely continue to mature for several decades to come, and the ability to rapidly train a system on new target types, and to adjust to new environments, including concealment and introduction of decoys, will remain challenges. On the other hand, there already exists ATR technology that shows military significance for automatic cueing of potential targets.

Classification of ATR

Automatic target recognition refers to the ability to recognize instances from a collection of models given sensor data and other information, and to be able to effect recognition despite viewpoint variations, occlusion, obscuration, camouflage, deception, model variability, and other confounding factors. The panel identified the following three application domains for ATR:

- Surveillance. The goal is to locate and track targets from a distance, and the emphasis is on detection; there is typically a less urgent need for identification. Often, surveillance involves cueing a human operator.

- Battlefield awareness. The emphasis is on identification, particularly of friend, foe, or neutral, and integration of multiple sensor modalities and multiple data sources is highly desirable.

- Precision guidance. The emphasis is on accurate orientation and pose estimation in order to perform course correction and other functions related to positioning.

Note that these application domains are distinguished by a timeliness requirement, although even in the case of surveillance, ATR typically connotes a relatively short time scale, involving perhaps a maximum of a few hours. In the case of precision guidance, decisions must be made on the millisecond scale.

Naval forces in the future will make use of advanced technology in all three areas to support missions. Despite slow progress in fielding working ATR systems, it is likely that both continued progress and technology breakthroughs will lead to performance capabilities in all these areas that exceed human recognition capabilities and that can be executed at speeds that would have been unimaginable to image analysts a few decades ago. Progress in processors, memory, and sensors, as well as improved algorithmic techniques for processing signal data, image formation, and coherent combination of information (such as from moving targets), all point to major advances in a very few years.

Sensors and Automatic Target Recognition Everywhere

Automatic target recognition can be thought of as a bandwidth enhancer. Given the need to transmit information about targets and threats to the commanders in as succinct and timely a manner as possible, ATR allows the naval forces to concentrate on the information that is explicitly required, dropping (preferably early in the transmission chain) what is irrelevant or redundant, such as distracting background and clutter.
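The bandwidth argument above can be illustrated by comparing the cost of sending a raw image with the cost of sending only short reports about detected targets. This is a minimal sketch under assumed numbers: the image size, report size, and function names are hypothetical and are not taken from this report.

```python
def full_image_bits(width_px: int, height_px: int, bits_per_pixel: int) -> int:
    """Bits needed to send the raw image, background and clutter included."""
    return width_px * height_px * bits_per_pixel


def detection_report_bits(num_detections: int, bits_per_report: int) -> int:
    """Bits needed to send one fixed-size report per detected target."""
    return num_detections * bits_per_report


# Hypothetical numbers, for illustration only.
raw = full_image_bits(4096, 4096, 16)
reports = detection_report_bits(5, 512)
print(f"raw image: {raw} bits, reports: {reports} bits, reduction: {raw / reports:.0f}x")
```

The earlier in the transmission chain the reduction is applied, the fewer links have to carry the raw data.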

Once transmission requirements are reduced, then the ATR products can be shipped everywhere, and knowledge of the battlefield, and indeed targets in the world, can be made accessible on demand everywhere. In much the same way that Internet capacity is providing wide access to information, increasing transmission capacity, provided by the global information infrastructure, will provide increased information access to warfighters. The panel envisions a world replete with sensors, in UAVs and satellites, the potential battlefield area, third-party sources, traffic lights, and even people's hats. These data will be widely accessible, but, in their totality, overwhelming.

ATR systems will likely be placed as close to the sensors as possible in order to minimize bandwidth needs, although in some cases sensor data will need to be acquired through a network, perhaps at great distances from the actual sensor. Ultimately, there will be a long list of target types that can be located and tracked from any position in the world.

One could imagine an Internet-like system where, given the appropriate permissions, a user could call up a view from any location of any other location, in order to be cued to targets and threats of interest. When the synthetic view corresponds to the viewer's current position, then the system gives the capability of looking around corners, through walls, and over hills and mountains.

Technology to Achieve this Vision

Considerable technological advances are required before the vision outlined above can be realized. Military needs are in some cases special and will require focused development. Some of the required advances are formidable, but others are straightforward, given sufficient attention and resources. The panel anticipates that these advances can occur in many different nations but are most likely to occur first in the United States, provided that sufficient attention is focused on ATR development.

Synthetic aperture radar (SAR) is currently the principal means for acquiring sensor data for target recognition. SAR's advantages include all-weather capability, high resolution, and imaging at a distance. Infrared sensing, on the other hand, demands proximity, but can provide extremely useful information at high resolution by passive means. It must be assumed that the suite of available sensor modalities will expand, and that ATR methods will be able to provide generic, multisensor recognition capabilities.

Technology advances are anticipated in the following three areas:

- Better sensing methods,

- Better algorithmic methods for performing recognition, and

- Faster and better computer processing.

In the area of improved sensing, SAR image formation methods can be considerably improved. New methods for improving the resolution, for coherently adjusting and improving the combination of raw signal data, and for adaptively forming the best image promise to dramatically improve SAR capabilities for ATR applications. While the capability does not exist today, it may be possible in the future to form SAR images of moving objects. Some progress has been made in this area, but the algorithms are more delicate. It is reasonable to expect that developments will occur to permit high-accuracy radar imaging of moving objects at long distances. Much of the Navy Department's investment in ATR development has focused on the inverse SAR (ISAR) modality, such as imaging a moving ship from a fixed radar platform. These algorithmic methods for image formation, applied to other targets such as moving ground targets, may prove useful for achieving high-resolution imaging of moving targets at a distance, although at this point, the use of ISAR techniques for general ATR applications is only in the earliest stages of development.

Inexpensive infrared (IR) sensors, especially ultraminiaturized sensors, are likely to be available in the near future. Depth sensing, by light detection and ranging (LIDAR), and chemical analyses from a distance might also enable a wealth of discrimination capabilities. ATR is normally associated with image processing, but other signal data such as hyperspectral and multispectral techniques can be used as well, as long as the information assists in discriminating among targets and non-targets. Since the image formation process can involve discarding information, there may be improved methods that deal directly with raw sensor data.

Better algorithmic methods are also likely, but there is a pressing need for a theory that provides a basis for comparing ATR approaches. The components of such a foundational theory are sketched in the next section. Currently, algorithm development in the ATR field is mostly a matter of art and parameter tuning, and the resulting software codifies methods as opposed to real algorithms. The academic fields of computer science and information systems are still organizing into subdisciplines, and computer science is rapidly bifurcating into theory and systems areas. Within the systems area, experimental computer science is slowly emerging as that area that concentrates on the development of computer applications. The broad nature of ATR development requires that a certain amount of theory guide the experiments and construction of systems. At this time, there is insufficient guidance in algorithm development, and an insufficient appreciation of the desirability of foundations.

Finally, improved computer processing will aid ATR development. It is a truism that computer processor power is increasing geometrically. More important to ATR development is the increasing capacity for dense memory storage, and high-bandwidth data transfer within a processor. Since ATR involves comparing relatively small amounts of sensor data with large databases of model data, the ability to index into that model data and to rapidly access the relevant objects largely dominates the processing time. Although researchers have long considered simulations that operate in minutes or hours to be acceptable approximations of potential real-time methods (when specialized processors are applied, or when computer processor power catches up, in order to provide several orders of magnitude in improvement), the inability to test and process large databases of training data has hampered evaluation and subsequent development. Throughput is improving, however, and current workstations are able to run ATR algorithms intended for real-time implementation on large images in under an hour. These speeds provide some capability for large-scale test and evaluation, and several more doublings in throughput will make near-real-time testing practical.
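One way to picture the indexing step mentioned above is a lookup table keyed by coarsely quantized measurements, so that only a few candidate models are compared in detail. The sketch below is an assumption made for illustration, not a method described in this report: the model names, the two measurements (length and width in meters), and the bin size are all hypothetical.

```python
from collections import defaultdict


def quantize(features, bin_size=1.0):
    """Map a tuple of measurements to a coarse integer key."""
    return tuple(int(f // bin_size) for f in features)


def build_index(models, bin_size=1.0):
    """Group model names by the quantized key of their stored measurements."""
    index = defaultdict(list)
    for name, features in models.items():
        index[quantize(features, bin_size)].append(name)
    return index


def lookup(index, observed, bin_size=1.0):
    """Return candidate models that share the observation's bin (exact bin only)."""
    return list(index.get(quantize(observed, bin_size), []))


# Hypothetical model database: name -> (length_m, width_m).
models = {"model_a": (6.3, 3.4), "model_b": (9.5, 3.6), "model_c": (6.8, 3.1)}
index = build_index(models)
print(lookup(index, (6.5, 3.2)))
```

A practical index would also probe neighboring bins to tolerate measurement error; this sketch checks only the exact bin to stay short.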

Toward a Foundation of ATR Theory

Approaches to ATR have typically involved variations on basic methods that can be categorized into the following three classes:

- Matched filtering,

- Pattern recognition, and

- Model-based vision.

Matched filtering is the most common approach, and the most successful at this time. Matched filtering is based on the equation $\|f - g\|^2 = \|f\|^2 - 2\langle f, g\rangle + \|g\|^2$, so that an image f can be compared to a template g by computing the inner product $\langle f, g\rangle$ and normalizing by bias terms. By computing many inner products and finding a best fit among a large class of possible targets, a best-target hypothesis is determined. Of course, different templates are needed for different views and operating conditions. Translation invariance can be obtained by computing convolutions in place of the inner product (effectively computing the inner product at all possible translation positions), whereas rotation invariance has to be built in using multiple templates.
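The template comparison described above can be sketched in a few lines. This is a minimal illustration rather than a fielded algorithm: a one-dimensional signal stands in for the image to keep the example short, the normalization by the two vector norms is one common choice of bias terms, and the template names and data are hypothetical.

```python
import math


def inner(a, b):
    """Inner product <a, b> of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def normalized_score(patch, template):
    """Inner product normalized by the norms of both inputs (0.0 if either is all zero)."""
    denom = math.sqrt(inner(patch, patch) * inner(template, template))
    return inner(patch, template) / denom if denom > 0 else 0.0


def best_match(signal, templates):
    """Return (score, template_name, offset) for the best fit over all translations."""
    best = (float("-inf"), None, -1)
    for name, g in templates.items():
        for offset in range(len(signal) - len(g) + 1):
            score = normalized_score(signal[offset:offset + len(g)], g)
            if score > best[0]:
                best = (score, name, offset)
    return best


# Hypothetical data, for illustration only.
signal = [0, 0, 1, 3, 1, 0, 0, 1, 0, 0]
templates = {"peak": [1, 3, 1], "plateau": [2, 2]}
score, name, offset = best_match(signal, templates)
print(name, offset, round(score, 3))
```

Sliding the template over every offset plays the role of the convolution mentioned in the text. Handling rotation would require adding further templates to the dictionary.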

Pattern recognition is based on segmenting the signal data, to extract the target region, and then making measurements of that segment. Typical measurements involve area and shape features. The vector of measurements describing the segment is then compared to prestored vectors defining target classes. A difficulty with pattern recognition is the dependence on the segmentation.
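The segment, measure, and compare pipeline described above can be sketched as follows. The threshold segmentation, the two features (area and bounding-box aspect ratio), the class names, and the stored vectors are all assumptions made for the example. A practical system would use a far more careful segmentation, which is exactly the dependence the text warns about.

```python
import math


def segment(image, threshold):
    """Crude segmentation: coordinates (row, col) of pixels brighter than threshold."""
    return [(r, c) for r, row in enumerate(image) for c, v in enumerate(row) if v > threshold]


def measure(pixels):
    """Feature vector for a segment: (area in pixels, bounding-box width / height)."""
    if not pixels:
        raise ValueError("empty segment")
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    height = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1
    return (float(len(pixels)), width / height)


def classify(features, class_vectors):
    """Name of the stored class vector nearest to the measured features."""
    return min(class_vectors, key=lambda name: math.dist(features, class_vectors[name]))


# Hypothetical image and class prototypes, for illustration only.
image = [
    [0, 0, 0, 0, 0, 0],
    [0, 9, 9, 9, 9, 0],
    [0, 9, 9, 9, 9, 0],
    [0, 0, 0, 0, 0, 0],
]
class_vectors = {"elongated": (8.0, 2.0), "compact": (9.0, 1.0)}
features = measure(segment(image, threshold=5))
print(features, classify(features, class_vectors))
```

The features are compared without rescaling here; with real measurements of very different magnitudes, some normalization would be needed before taking distances.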

Model-based vision uses a geometric description of the target classes, in such a way that a description can be quickly generated based on any hypothesis or the position and orientation of a given target. The description, rather than being a vector of measurements of a single segment, is more typically a collection of unordered features representing significant events in the data that are likely to be extracted in a view of the target.

These three methods have more in common than is at first apparent. It is possible to unify the approaches in a single theory, which provides for increasing sophistication of each of the three methods. Indeed, one formulation of model-based vision provides a matched filtering interpretation, where the filtering takes place in a feature space. At the simplest level, matched filtering makes a comparison between an observed scene and a stored model of a target. By repeating the comparison at all positions, with all possible poses of the target, over all targets of interest, a best fit can be found. The comparison can be made based on the relationship of intensity levels in the image to predicted intensity levels, but it is more common and more robust to use image features that are less sensitive to natural variations. For example, extracting edge maps, and comparing observed edges against predicted model edges, can provide recognition that is less sensitive to the overall intensity of the image. With SAR imagery, it is usual to extract peaks, target, shadow, and background pixels, and to compare iconic images at the symbolic level.

Improvements are possible by extracting and grouping features from the sensor data that provide more localized, independent information. For example, whereas edge information provides useful discrimination power, groups of edges can be clustered to form line segments, or curves, that can be described by a few succinct parameters per grouped edge. Apart from providing more compact representations, the grouped information provides opportunities for more accurate reasoning about the components of the image, and the likelihood that the independent groups form an instance of a target. Methods for grouping raw sensor data into clusters of independent meaningful localized features will be dependent on the sensor type, the kinds of target models, and the ingenuity of the researchers.
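As an illustration of describing a group of edges by a few succinct parameters, the sketch below summarizes a cluster of edge pixels as a single line segment given by its center, orientation, and length. It assumes the clustering has already been done, and uses a principal-axis fit chosen for this example. The function name and sample points are hypothetical.

```python
import math


def fit_segment(points):
    """Summarize a cluster of edge pixels (x, y) as (center_x, center_y, angle_rad, length)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    syy = sum((y - my) ** 2 for _, y in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    # Orientation of the principal axis of the point scatter.
    angle = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    dx, dy = math.cos(angle), math.sin(angle)
    # Extent of the points along that axis gives the segment length.
    proj = [(x - mx) * dx + (y - my) * dy for x, y in points]
    return (mx, my, angle, max(proj) - min(proj))


# Hypothetical cluster of collinear edge pixels.
cx, cy, angle, length = fit_segment([(0, 0), (1, 1), (2, 2), (3, 3)])
print(cx, cy, round(math.degrees(angle), 1), round(length, 3))
```

Four numbers replace the full list of pixels, which is the kind of compact, localized description the paragraph has in mind.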

The benefit of a foundational theory is that the needs and requirements of the system can then dictate design considerations, rather than the other way around. Indeed, there is a pressing need to be able to predict and assess likely performance according to operational conditions, sensor resolution, and target variability. Performance prediction then allows the development of sensor resolution requirements. The goal is to graduate ATR development from tinkering with demonstrations to development of advanced systems.

Domains of Applicability

Current ATR algorithms, such as the feature comparison algorithms mentioned above, are able to perform reasonably under the following assumptions:

- Targets must be unobscured and in the open;

- There are no more than a dozen or so target types, and the targets demonstrate relatively little intraclass variability between instances at the same orientation;

- The platform cues the system with certain imaging parameters, such as depression and squint angles; and

- The adversary does not employ significant cover, concealment, and deception (CC&D) techniques.

These assumptions represent necessary constraints because current ATR systems can only deal with a limited number of potential hypotheses at each location. Even with these undesirable limitations to their capability, ATR systems are still potentially significant in a variety of military applications. Although there are no fielded systems, the feasibility of deploying ATR systems has been demonstrated through the use of multistage methods of comparison of system features with prestored models.

In order to extend the applicability of ATR systems, further performance improvements are needed for operations in more challenging environments. For example, future ATR systems will be required to make accurate determinations regarding possible targets that are articulated, or that are partially obscured. This capability is more profound than it may at first seem, because it is not possible to enumerate all possible articulations and all possible partial obscurations as individual and separate models. Not only does the number of models grow exponentially, but the cross-talk between models also becomes large, and the ability of the system to discriminate among competing hypotheses becomes difficult. Instead, it becomes necessary to reason about subparts of a model, each of which might be recognized only indistinctly, but which, in conjunction, might form strong evidence for the presence of a potentially deformed or modified target. In order for ATR systems to operate effectively in the complex combat environments that are likely to characterize future naval engagements, they must be capable of analysis and recognition in the face of uncertainty and partial evidence. They must be capable of creating and utilizing combinations of data and must be able to take into account dependent information. Such capabilities may be within reach and, if so, will lead to ATR systems capable of operating in complex and variable combat environments.
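The idea that several indistinct part detections can together form strong evidence can be illustrated with a simple odds calculation. The sketch below makes a strong simplifying assumption that the text itself cautions against: it treats the part-level observations as independent. The prior and the likelihood ratios are hypothetical values.

```python
import math


def posterior_probability(prior, likelihood_ratios):
    """Posterior P(target) from a prior and per-part likelihood ratios.

    Each ratio is P(observation | target) / P(observation | no target).
    Assumes the part observations are independent, which is a simplification.
    """
    if not 0.0 < prior < 1.0:
        raise ValueError("prior must be strictly between 0 and 1")
    if any(lr <= 0.0 for lr in likelihood_ratios):
        raise ValueError("likelihood ratios must be positive")
    log_odds = math.log(prior / (1.0 - prior))
    log_odds += sum(math.log(lr) for lr in likelihood_ratios)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical values: three weak part detections against a 10 percent prior.
single = posterior_probability(0.1, [2.0])
combined = posterior_probability(0.1, [2.0, 3.0, 1.5])
print(round(single, 3), round(combined, 3))
```

Accounting for dependence between parts, as the paragraph requires of future systems, would need a joint model rather than this product of independent ratios.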

In many parts of the world, military vehicles and other targets of interest will operate in wooded regions under foliage, or be otherwise hidden from the view of normal optical and radar sensors. Foliage-penetrating radar can help image targets under these conditions, usually at the expense of resolution because the bandwidth of the radar signal becomes limited. Preliminary studies indicate that considerable progress is possible at penetrating a layer of canopy. Further, foliage cover, as well as netting, often hides a target from many aspect angles but leaves open the potential for recognition at particular viewing angles. Accordingly, it becomes necessary to collect and integrate information from multiple viewpoint directions, as well as from multiple sensor platforms. Platoons of unmanned surveillance platforms, such as UAVs, will need to cooperate and coordinate in examining regions of interest in difficult terrain. Once again, technology that reasons about multiple pieces of uncertain evidence becomes critical to a fully functioning system. Such semicognitive capabilities are certainly realizable but require advances over current customary computational practices.

The ability to image and recognize moving targets is another key capability that will be required of future ATR systems. Certain targets, such as mobile missile launchers, are visible while in transit. Current SAR techniques are only effective in imaging fixed targets. A nonmoving target is required to coherently deconvolve and sum the return signals from probing radar beams emitted from a range of aspect angles relative to the target. If the target moves with an unknown motion at the same time that the platform is moving, the disambiguation process becomes seemingly impossible. It is, of course, possible to obtain a measure of the motion of a potential target by observing and tracking the Doppler shift, but currently fielded systems provide no information about the potential target other than an indication of the motion. Alternatively, ISAR techniques allow for the imaging of moving objects (with uniform motion) by a fixed sensor.

Algorithmic techniques that re-register the information based on methods related to auto-focusing show promise for enabling radar-based ATR systems to obtain resolution on moving targets. Such highly specialized signal processing methods require nurturing and development, and considerable experimental validation, and tend to require nontraditional thinking about the image formation and signal processing theory. It is expected, however, that imaging of moving targets will become a viable technology. Such a capability could be extremely powerful, since the speed, direction, and pattern of movement of a target provide considerable evidence of the mission of the object, as well as constraints as to the orientation and variability of the target. Together with a few distinguishing features on the oriented target, full identification becomes much more likely due to the motion analysis.

Temporal integration of information also offers powerful potential but has been largely untapped for ATR processing. For example, in automatic mine detection, land mines that are placed sufficiently far underground can fall into the "too hard to detect" category soon after placement. Suppose, however, that a small collection of vehicles is observed positioned on a field in a particular pattern, and that a half year later, an explosion yields evidence that there are buried mines in the field. Correlation of the information of the single explosion with the position of the suspicious vehicles at the earlier time can lead to determination of the location of the remaining mines. Such simple and obvious techniques nonetheless require large storage capacity, and the ability to retrieve seemingly trivial data. This ability to bring into correspondence multiple pieces of information, often separated temporally, with terabytes of information juxtaposed in between, offers formidable challenges that nonetheless promise great advances in our ability to recognize and reason about targets. Such information understanding, which extends beyond that enabled by simple ATR capabilities, is the topic of the section below titled "Information Understanding."

Performance Estimation

There are two ways to estimate the performance of a proposed target recognition system. One is to build it and test it on a large database of acquired images, and to evaluate the performance. Another method is to analyze the algorithms, using simulations, with an emphasis on the discriminability of the models, to determine, before actually building the system, if the method has any chance of working. Current practice is closer to the former methodology than to the latter.

It is possible to predict the performance of ATR systems by measuring performance statistics associated with the underlying features used for the recognition process. Even if a system makes use of complex combinations of partial evidence from competing models and operates in a hierarchical indexing fashion, it is nonetheless possible to determine the likely degree of overlap between configurations of targets, which can vary due to sampling and extraction uncertainty as well as in-class variability, and competing configurations, which can occur from random clutter and background or from competing models. Models of the variability of the features are needed in order to perform this analysis, and it is often necessary to assume that the features are independent. However, it can be quite useful in development to know how much information is required for an ATR system to perform at an acceptable response operating level, before actually building the complete system.

Consider as an example a two-class problem, where there is a single target type such that all locations in the image domain are to be identified as either target or background. A target is indicated by the presence of a collection of features and a specified percentage of the designated features will actually be present and extracted when the target is actually present. There is a statistical variability to the number and quality of these features, and so the total score indicating the presence of a target has a certain density function when the target is actually present. On the other hand, when the target is not present, random noise and other potentially confusing elements will cause patterns of features to be present with certain probabilities, and so there is also a density distribution for the total score for a target when the target is not present. By adjusting the threshold on the score, different operating points can be obtained so as to vary the expected detection rate versus the false alarm rate. The entire operating curve can be predicted if the density distributions of the scores can be computed. Computation of these curves requires a statistical analysis of the properties of the features, with and without a target being present, and requires either independence of the features in the two cases or an understanding of the joint statistics.
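The two-class analysis above can be sketched numerically. The example below is a simplified illustration under stated assumptions, not the panel's analysis: the score is taken to be a simple count of designated features that are present, each feature is assumed to appear independently with one probability when the target is present and a lower probability in background, and the values 30, 0.7, and 0.2 are hypothetical. Under those assumptions both score densities are binomial, and sweeping the threshold traces out the operating curve.

```python
from math import comb

def binom_tail(n, p, k):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))

def operating_curve(n_features, p_target, p_background):
    """List of (threshold, detection_rate, false_alarm_rate), one per threshold.

    Assumes independent features, so the score (number of features present)
    is binomial both with and without a target.
    """
    return [
        (k,
         binom_tail(n_features, p_target, k),
         binom_tail(n_features, p_background, k))
        for k in range(n_features + 1)
    ]

def pick_threshold(curve, min_detection):
    """Highest threshold that still meets the required detection rate.

    Detection rate falls as the threshold rises, so the highest feasible
    threshold also gives the lowest false alarm rate.
    """
    feasible = [row for row in curve if row[1] >= min_detection]
    return max(feasible, key=lambda row: row[0])

# Hypothetical values: 30 features, 70% present on target, 20% in clutter.
curve = operating_curve(n_features=30, p_target=0.7, p_background=0.2)
k, pd, pfa = pick_threshold(curve, min_detection=0.9)
print(f"threshold={k}, detection={pd:.3f}, false alarm per location={pfa:.2e}")
```

If the features are not independent, the binomial model no longer applies and the joint statistics are needed, as the text notes.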

Generally, a certain number of independent features are required to enable discrimination between targets and background. In one kind of sample analysis applied to a simple image processing example, a 90 percent detection rate with a reasonable false alarm rate (one per kilometer) is possible only when there are features defining the target with an aggregate of 35 coordinate values. Different assumptions lead to different requirements, but these results are typical. Since features are rarely completely independent, the estimate provides a lower bound for the requisite number of features. A feature can be a component or measured attribute, generally associated with a location in the image, based on extraction and grouping of particular patterns of intensities or edges. For example, the opening angle of a corner is a feature associated with detection of the corner and can be used to assist in discriminating between the particular corner in question and a corner to be matched in a model. If it is determined that 30 to 50 features are required in order to perform reasonable discrimination (i.e., keeping the detection rate high without incurring an unreasonable number of false alarms), then the estimate implies certain resolution requirements for the image acquired by the system. A critical quality measure is the number of pixels on target that are extracted from the sensor data. Whereas typical imaging systems provide sampling rates of 1 pixel per 2 feet, or per single foot, resulting in 100 or so pixels lying on a typical target, it might well be the case that in order to obtain 50 features about a target, 500 pixels are necessary. The extra pixels might come from multiple views, or from multiple sensors, or from improved image formation and ultrahigh-resolution images. The important point is that a well-developed theory of discriminability can provide resolution and sampling requirements, which can greatly assist in the development process.
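The link between feature count and resolution can be made concrete with a rough calculation. This is a back-of-the-envelope sketch only. The 20-by-10-foot target footprint is a hypothetical value, and the ratio of 10 pixels per feature is simply the 500 pixels and 50 features quoted above, treated here as an assumed constant.

```python
import math

def pixels_on_target(length_ft, width_ft, sample_spacing_ft):
    """Approximate pixel count on a rectangular target footprint."""
    return (length_ft * width_ft) / sample_spacing_ft**2

def required_spacing(length_ft, width_ft, n_features, pixels_per_feature):
    """Sample spacing (feet per pixel) needed to support n_features."""
    pixels_needed = n_features * pixels_per_feature
    return math.sqrt(length_ft * width_ft / pixels_needed)

# Hypothetical 20 ft x 10 ft target footprint.
coarse = pixels_on_target(20, 10, 2.0)   # 1 pixel per 2 feet
fine = pixels_on_target(20, 10, 1.0)     # 1 pixel per foot

# Assumed ratio: 500 pixels for 50 features, i.e. 10 pixels per feature.
spacing = required_spacing(20, 10, n_features=50, pixels_per_feature=10)
print(coarse, fine, round(spacing, 2))
```

Under these assumptions, a single view would need a sample spacing of roughly 0.6 feet per pixel to reach 500 pixels on target; the alternative routes named in the text (multiple views or multiple sensors) supply the extra pixels without finer sampling.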

Indeed, although ATR is an extremely challenging problem that often involves identifying targets in a complex and highly variable environment with multiple levels of CC&D, it is the panel's expectation that significant evolutionary progress will be made. The advances in sensors, resolution, processing capability, and algorithms that conduct reasoning in the face of uncertainty will likely enable discrimination among dozens of target types, under conditions of partial obscuration and other challenging conditions.

INFORMATION UNDERSTANDING

Information understanding involves the fusion of data that may be spatially and temporally distributed in order to form a coherent picture of a situation of interest. Information understanding depends on the ability to recognize and extract relevant data from large and disparate data collections; extracting useful information from large sets of redundant, unstructured, and largely irrelevant data will often be the first step in developing information understanding. In the commercial world, current extraction techniques utilize data mining.

Data mining, which has potential DOD applications, currently focuses on the need of credit card companies to automatically recognize spending patterns that indicate probable fraud, based not only on current purchases, but also on the extent to which the current pattern is unusual for the card in question. Other business uses of data mining and collaborative filtering include profiling of potential customers based on their spending patterns, so as to target marketing efforts to the most likely consumers of products and services. Since the marketplace rewards businesses that can exploit a comparative advantage, data mining tools for business applications will inevitably become an important part of mainstream commerce. In medical data processing, there is the possibility of developing automated diagnostic procedures that identify conditions or pathology from multiple test results. Defense needs are conceptually similar, but broader and different in scope: information relevant to national security can be extracted from nearly all information sources. Further, rather than focusing on securing a competitive advantage in sales and marketing of goods and services, defense needs include more general intelligence, indications, and warnings, and other information that can facilitate combat planning and execution.

Information understanding technologies to meet defense needs may draw upon the same underlying theory that supports commercial information extraction techniques, but generally will require a different set of applications. Currently, ATR can be viewed as a primitive form of information understanding technology, which should ultimately lead to battle-force analysis and automated situation awareness, and all-source automated multisensor analysis. The development of these capabilities will be driven by user needs and will be facilitated by advances in sensors, communications, and computation.

Recognition Theory

Recognition theory refers to the body of knowledge underlying the development of tools for extracting information from large and varied data sets and is the underlying foundation of those technologies that are referred to in this report as information understanding. The theory of pattern recognition, which involves the identification of distinctive patterns in signal data, is a special case of recognition theory. Typically, pattern recognition uses a single image or a single return signal and attempts to distinguish among a fixed collection of possibilities in order to characterize the given data. More broadly, recognition theory encompasses systems with greater cognitive processing capability that are flexible enough to effect recognition in the context of situations and scenarios that have not been explicitly programmed into the recognition system. Further, recognition theory should enable the development of systems that can discover associations among disparate pieces of information.

Methods developed in the field of artificial intelligence (AI), including commonsense reasoning, nonmonotonic logic, circumscription, algorithms used in neural networks, and extensions to Bayesian calculi, have largely failed to provide the understanding required to develop a coherent theory of generalized recognition. Accordingly, recognition does not yet exist as a differentiated discipline. However, given the ongoing progress in AI research, the panel anticipates that a coherent theory of recognition will emerge. Further research and development is needed to develop the capacity to reason in the face of uncertainty and to fuse information from disparate sources.

Automatic target recognition uses recognition theory in limited ways. Most ATR development is currently limited to the pattern recognition subset of recognition theory, being based on analysis of single image frames and segmented target regions. However, more generalized ATR processing would take advantage of multiple geo-registered information sources and temporally displaced data in order to dynamically reason about situations.

One of the main differences between the theory of pattern recognition and more general recognition theory is summed up in the standard distinction between bottom-up and top-down processing. Recognition theory seeks a solution to the problem of identifying and extracting information that is relevant to a particular working hypothesis from large and highly varied sets of data. Since extraction and analysis are driven by a hypothesis, recognition theory can be viewed as largely top-down processing. Currently, most recognition systems work in a bottom-up fashion, first extracting features from the given sensor data, and then looking for patterns among the features that support a model hypothesis. Although hypotheses are formed in the course of executing pattern recognition, it is the sensory data that largely dictates the flow of processing, and bottom-up processing is the more appropriate description for the information flow. When data sets become too large to carry out bottom-up processing, and when information must be extracted from multiple and highly varied sources, processing methods necessarily must use analogs of inverse indices and top-down processing.
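The role of an inverse index in top-down processing can be sketched as follows. This is an illustrative toy, not a proposed system design: the record identifiers and feature labels are hypothetical, and features are assumed to have been extracted and stored in advance. Rather than scanning every record bottom-up, a hypothesis names the features it requires and the index returns only the records that contain all of them.

```python
from collections import defaultdict

def build_inverted_index(records):
    """Map each feature to the set of record ids in which it appears."""
    index = defaultdict(set)
    for rec_id, features in records.items():
        for feature in features:
            index[feature].add(rec_id)
    return index

def query(index, hypothesis_features):
    """Ids of records that contain every feature the hypothesis requires."""
    postings = [index.get(f, set()) for f in hypothesis_features]
    if not postings:
        return set()
    return set.intersection(*postings)

# Hypothetical archive of previously extracted features.
records = {
    "r1": {"tracked", "convoy", "night"},
    "r2": {"wheeled", "convoy"},
    "r3": {"tracked", "convoy", "bridge"},
    "r4": {"tracked", "stationary"},
}
index = build_inverted_index(records)

# Top-down: the working hypothesis drives which records are retrieved.
print(sorted(query(index, {"tracked", "convoy"})))
```

The cost of a query depends on the sizes of the posting sets for the requested features, not on the total size of the archive, which is what makes the approach attractive when data sets are too large for exhaustive bottom-up processing.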

The Future Information Environment

The panel assumes a future in which sensors and information will be ubiquitous. Encryption will be used to protect certain vital information, such as bank transactions, but massive amounts of other information will be available for analysis. Not only will personal and official messages be passed digitally, but every appliance will also be communicating by networks with remote controllers, and every individual can be expected to be in constant contact with a vast interconnected digital network. Highway tolls will be paid electronically, and packets containing information as to the whereabouts of any moving private vehicle will likely be available. This sea of information will include data about individuals from government and commercial sources. It is reasonable to assume that the whereabouts, movement, purpose, and plans of most individuals will be discernible from an analysis of specialized information, and that most businesses and companies will have massive incentives to perform such analyses in order to target their marketing to the appropriate potential customers. Although encryption of the information may afford some privacy to individuals, analysis of data traffic patterns may provide nearly equivalent information, at least in a statistical sense. To the extent that information can be captured, it can also be archived, and it is anticipated that a massive, distributed, dynamic database of archived information will be developed specifically for Navy and Marine Corps needs. The technology that will be developed to analyze and exploit the sea of information that will be available in the future will pose both challenges and opportunities for the DOD and the Department of the Navy.

In the same way that the needs and plans of an individual potential customer can be analyzed, so also can the plans and movements of potential adversaries be observed. Armies are made up of individuals, and commanders begin operations by setting plans and positioning people and materiel. National security will dictate that all appropriate sources of information be acquired, monitored, and assessed. The key to these capabilities is the ability to understand the information in ways that are relevant to particular needs. Understanding and managing information will be critical to defense needs.

ADVANCES NEEDED TO SUPPORT INFORMATION UNDERSTANDING

While much of the research that is required for the development of technologies to support information understanding is currently ongoing, in the view of the panel, it is not sufficiently focused on developing information understanding applications for Navy and Marine Corps needs.

The panel has identified the following six technology areas as meriting special attention in order to realize the information understanding capabilities that will be required to analyze and exploit the sea of information that will characterize the future information environment:

- Information representation. Information representation involves extracting and representing features from data streams in such a way that the relevant information can be accessed efficiently from automated queries. Methods of information retrieval likely will include inverse indices and distributed processing using intelligent memory. It will be necessary to develop the means to appropriately represent information without prior knowledge of the likely hypotheses that might later be used to extract the representation or to associate other data with it.

- Information reasoning. Information reasoning involves the capacity to reason in the face of uncertainty, and may include the use of models to predict degrees of dependence and independence between data sets and other strategies in order to effectively hypothesize and test premises for the purpose of extracting relevant information content. Further advances in recognition theory are needed, including methods for combining data and forming inferences. Recent developments in neural network theory suggest that it may be possible to create adaptive reasoning systems, but further advances are required before such systems can be realized.

- Information search. Since information understanding will most likely work with a top-down structure, methods are needed to organize hypotheses hierarchically, in order to structure the search for content logically and efficiently. In the same way that model-based systems generate hypotheses that are verified and refined in a tree-search structure, analogs are needed to organize the search for information content. Further, the search cannot be hand-crafted for each recognition system application. Instead, methods are needed to automatically generate the search trees and hypothesis organization strategies.

- Information integrity. Because data might be corrupted, faked, or inaccurate, not all information sources should be trusted equally. While technologies exist for authenticating information and securing its transfer, means of assessing confidence in information sources, and the ability to discard untrustworthy information, are topics that need further development.

- Information presentation. Information presentation, as opposed to representation, is the manner in which processed data is supplied to the human operator or commander. This involves the human-machine interface as well as the specific manner in which the data is displayed and its context established. Capabilities for data visualization and multimedia presentation of information will be important for the best performance of an information understanding system that necessarily includes a human operator as an integral subcomponent of the system.

- Human-performance prediction. An information understanding system that includes the human operator as the final arbitrator and decision-maker can be effective only if the human-machine interface is optimized with respect to human performance in the context of the task at hand. Accordingly, it will be necessary to acquire greater understanding of human cognition and decision-making behavior.
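The hierarchical organization of hypotheses described under information search can be illustrated with a small tree search. This is a sketch under stated assumptions: the hypothesis names, tests, and observation fields are hypothetical, and the tree here is written by hand, which is exactly what the panel says will not scale; automatic generation of such trees remains the open problem.

```python
from dataclasses import dataclass, field
from typing import Callable, List

@dataclass
class Hypothesis:
    name: str
    test: Callable[[dict], bool]              # does the observation support it?
    children: List["Hypothesis"] = field(default_factory=list)

def search(node, observation):
    """Return the most specific hypotheses supported by the observation.

    A subtree is explored only if its parent hypothesis is supported, so
    most of the hypothesis space is pruned without being tested.
    """
    if not node.test(observation):
        return []
    refined = []
    for child in node.children:
        refined.extend(search(child, observation))
    # If no refinement is supported, fall back to this node itself.
    return refined or [node.name]

# Hand-built, hypothetical hierarchy for illustration only.
tree = Hypothesis("vehicle", lambda o: o["length_m"] > 2, [
    Hypothesis("tracked", lambda o: o["tracks"], [
        Hypothesis("tank", lambda o: o["turret"]),
    ]),
    Hypothesis("wheeled", lambda o: not o["tracks"]),
])

print(search(tree, {"length_m": 7, "tracks": True, "turret": True}))
print(search(tree, {"length_m": 7, "tracks": True, "turret": False}))
```

When the most specific test fails, the search reports the deepest hypothesis that is still supported rather than nothing, which mirrors the verify-and-refine behavior of model-based systems mentioned in the text.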

Because information understanding is a cross-cutting endeavor, other technology enablers in addition to those listed above will play a role in its realization. For example, networking technology, including data transfer and connectivity standards, will be an important factor.

SUMMARY

In the future, sources of information will include DOD organic systems, systems from other government agencies, emerging space-based and airborne commercial imaging systems, other commercial information providers, and public-domain sources that will emerge from the burgeoning information infrastructure.

As information becomes increasingly central to commerce, society will move from an environment of relative information scarcity to one of information abundance, in which applications must locate and correlate information from massive sets of seemingly unrelated data. Technology development is required to create the applications that will effect the transformation of raw data to higher levels of information understanding, which will include not only the extraction of fixed patterns from sensor data, but also analysis of and reasoning about correlations and co-occurrence of relevant observations. Current applications typically perform pattern recognition on real-time data collected by dedicated sensors; future information understanding systems will need to perform higher-order reasoning about information from the full range of available information sources.