4

SUPERVISORY CONTROL SYSTEMS

In the past 15 years the introduction of automation into working environments has created more and more jobs in which operators are given very high levels of responsibility and very little to do. The degree of responsibility and the amount of work vary from position to position, but the defining properties of such jobs are: (1) The operator has overall responsibility for control of a system that, under normal operating conditions, requires only occasional fine tuning of system parameters in order to maintain satisfactory performance. (2) The major tasks are to program changes in inputs or control routines and to serve as a backup in the case of a failure or malfunction in a system component. (3) Important participation in system operation occurs infrequently and at unpredictable times. (4) The time constraints associated with participation, when it occurs, can be very short, of the order of a few seconds or minutes. (5) The values and costs associated with operator decisions can be very large. (6) Good performance requires rapid assimilation of large quantities of information and the exercise of relatively complex inference processes.

These kinds of jobs are found in the process control industries, such as chemical plants and nuclear power plants. They are involved in the control of aircraft, ships, and urban rapid transit systems, robotic remote control systems for inspection and manipulation in the deep ocean, and computer-aided manufacturing. They are involved in medical patient-monitoring systems and law enforcement information and control systems. As computer aids are introduced into military command and control systems, such jobs become involved in that area. For example, the Army alone currently has 70 automated or computer-aided systems at the concept development stage (U.S. Army Research Institute, 1979). The other services have similar projects under development.

The principal authors of this chapter are Thomas B. Sheridan, Baruch Fischhoff, Michael Posner, and Richard W. Pew.

The human factors problems involved in supervisory control systems can be classified into five categories.

- Display. In the past these systems have used large arrays of meters and gauges or large situation boards and control panels to display information, with the general goal of displaying everything, because one never knows exactly what will be needed. Little attention has been paid to the need to assimilate diverse information sources into coherent patterns for making inferences simply and directly. Today computers are being used more and more in the control of these operations; large display panels are being collapsed into computer-generated displays that can call up the needed information on demand. These developments in physical technology are pushing human factors engineers to devise better ways of coding and formatting large collections of information to facilitate interpretation and reliable decisions by operators. Also needed are better means of accessing information, means that are not opaque and do not leave operators confused in urgent and stressful situations.

- Command. The emergence of powerful computers and robotic devices has necessitated the development of better “command languages,” by which operators can convey instructions to a lower-level intelligence, perhaps giving examples or hints and providing criteria or preferences, and doing it in a communication mode that is natural and adaptable to different people and linguistic styles.

- Operator’s Model. We also lack well-developed methodologies for identifying the internal conceptual model on the basis of which an operator attempts to solve a problem. (This has also been called the operator’s system image, picture, or problem space.) Incorrect operator’s models can lead to disastrous results (e.g., Three Mile Island); it is obviously a matter of utmost importance for operators of military command and control systems to acquire proper conceptual models and keep them updated on a moment-by-moment basis in times of crisis.

- Workload. We have no good principles of job design for operations in supervisory control systems, in part because it has proved extremely difficult to measure or estimate the mental workloads involved. They tend to be highly transient, varying from light and boring when the work is routine to extremely demanding when action is critical. At present there is no consensus on what mental workload is or how to measure it, especially in the context of supervisory control.

- Proficiency and Error. Issues of training and proficiency maintenance are critical in this kind of operation because each event is in some sense unique and is drawn from an extremely large set of possibilities, most of which will never occur during the operating life of the system. It is not easy to anticipate what types of errors will occur or how to train to prevent them.

SUPERVISORY CONTROL IN DIFFERENT APPLICATIONS

This section, adapted from Sheridan (1982), provides brief comparisons and contrasts among different applications of supervisory control systems: process control, vehicle control, and manipulators.

Process Control

The term process usually refers to a dynamic system, such as a fossil fuel or nuclear power generating plant or a chemical or oil production facility, that is fixed in space and operates more or less continuously in time. Typically time constants are slow—many minutes or hours may elapse after a control action is taken before most of the system response is complete.

Most such processes involve large structures with fluids flowing from one place to another and involve the use of heat energy to affect the fluid or vice versa. Typically such systems involve multiple personnel and multiple machines, and at least some of the people move from one location of the process to another. Usually there is a central control room where many measured signals are displayed and where valves, pumps, and other devices are controlled.

Supervisory control has been emerging as an element in process control for several decades. Starting with electromechanical controllers or control stations that could be adjusted by the operator to maintain certain variables within limits (a home thermostat is an example), special electronic circuits gradually replaced the electromechanical function. In such systems the operator can become part of the control loop by switching to manual control. Usually each control station displays both the variable being controlled (e.g., room temperature for the thermostat) and the control signal (e.g., the flow of heat from the furnace). Many such manual control devices may be lined up in the control room, together with manual switches and valves, status lights, dials and recording displays, and as many as 1,500 alarms or annunciators, which are windows that light up to indicate what plant variable has just gone above or below limits. From the pattern of these alarms (e.g., 500 in the first minute of a loss-of-coolant accident and 800 in the second minute, by recent count, in a large new nuclear plant) the operator is supposed to divine what is happening.

The large, general-purpose computer has found its way into process control. Instead of multiple, independent, conventional proportional-integral-derivative controllers for each variable, the computer can treat the set of variables as a vector and compute the control trajectory that would be optimal (in the sense of quickest, most efficient, or whatever criterion is important). Because there are many more interactions than the number of variables, the variety of displayed signals and the number of possible adjustments or programs the human operator may input to the computer-controller are potentially much greater than before. Thus there is now a great need, accelerated since the events at Three Mile Island, to develop displays that integrate complex patterns of information and allow the operator to issue commands in a natural, efficient, and reliable manner. The term system state vector is a fashionable way to describe the display of minimal chunks of information (using G. A. Miller’s well-known terminology) to convey more meaning about the current state vector of variables, where it has been in the past, and where it is likely to go in the near future.
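For readers unfamiliar with the conventional loop mentioned above, the following is a minimal sketch of a single proportional-integral-derivative controller regulating one variable. The gains, the time step, and the first-order plant `dx/dt = -x + u` are illustrative assumptions and are not taken from any actual process.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Single-variable PID controller (gains are illustrative assumptions)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, setpoint: float, measured: float, dt: float) -> float:
        """Return the control signal for one sampling interval."""
        error = setpoint - measured
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(setpoint: float = 1.0, steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a hypothetical first-order plant dx/dt = -x + u toward the setpoint."""
    pid = PID(kp=2.0, ki=1.0, kd=0.0)
    x = 0.0
    for _ in range(steps):
        u = pid.step(setpoint, x, dt)
        x += (-x + u) * dt  # forward-Euler integration of the assumed plant
    return x
```

Each such controller sees only its own variable. The vector approach described in the text would instead compute all control signals jointly from the full set of measurements.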

Vehicle Control

Unlike the processes described above, vehicles move through space and carry their operators with them or are controlled remotely. Various types of vehicles have come under a significant degree of supervisory control in the last 30 years.

We might start with spacecraft because, in a sense, their function is the simplest. They are launched to perform well-defined missions, and their interaction with their environment (other than gravity) is nil. In other words, there are no obstacles and no unpredictable traffic to worry about. It was in spacecraft, especially Apollo, that human operators who were highly skilled at continuous manual control (test pilots or “joy stick jockeys”) had to adapt to a completely new way of getting information from the vehicle and giving it commands—this new way was to program the computer. The astronauts had to learn to use a simple keyboard with programs (different functions appropriate to different mission phases), nouns (operands, or data to be addressed or processed) and verbs (operations, or actions to be performed on the nouns).

Of course, the astronauts still performed a certain number of continuous control functions. They controlled the orientation of the vehicle and maneuvered it to accomplish star sighting, thrust, rendezvous, and lunar landing. But, as is not generally appreciated by the public, control in each of these modes was heavily aided. Not only were the manual control loops themselves stabilized by electronics, but also nonmanual, automatic control functions were being simultaneously executed and coordinated with what the astronauts did.

In commercial and military aircraft there has been more and more supervisory control in the last decade or two. Commercial pilots are called flight managers, indicative of the fact that they must allocate their attention among a large number of separate but complex computer-based systems. Military aircraft are called flying computers, and indeed the cost of the electronics in them now far exceeds the cost of the basic airframe. By means of inertial measurement, a feature of the new jumbo jets as well as of military aircraft, the computer can take a vehicle to any latitude, longitude, and altitude within a fraction of a kilometer. In addition there are many other supervisory command modes intermediate between such high-level commands and the lowest level of pure continuous control of ailerons, elevators, and thrust. A pilot can set the autopilot to provide a display of a smooth command course at fixed turn or climb rates to follow manually or can have the vehicle slaved to this course. The autopilot can be set to achieve a new altitude on a new heading. The pilot can lock onto radio beams or radar signals for automatic landing. In the Lockheed L-1011, for example, there are at least 10 separate identifiable levels of control. It is important for the pilot to have reliable means of breaking out of these automatic control modes and reverting to manual control or some intermediate mode. For example, when in an automatic landing mode the pilot can either push a yellow button on the control yoke or jerk the yoke back to manually get the aircraft back under direct control.

Air traffic control poses interesting supervisory control problems, for the headways (spacing) between aircraft in the vicinity of major commercial airports are getting tighter and tighter, and efforts both to save fuel and to avoid noise over densely populated urban areas require more radical takeoff and landing trajectories. New computer-based communication aids will supplement purely verbal communication between pilots and ground controllers, and new display technology will help the already overloaded ground controllers monitor what is happening in three-dimensional space over larger areas, providing predictions of collision and related vital information. The CDTI (cockpit display of traffic information) is a new computer-based picture of weather, terrain hazards such as mountains and tall structures, course information such as way points, radio beacons and markers, and runways and command flight patterns as well as the position, altitude, heading (and even predicted position) of other aircraft. It makes the pilot less dependent on ground control, especially when out-the-window visibility is poor.

More recently ships and submarines have been converting to supervisory control. Direct manual control by experienced helmsmen, which sufficed for many years, has been replaced both by the installation of inertial navigation, which calls for computer control and provides capability never before available, and by the trends toward higher speed and long time lags produced by larger size (e.g., the new supertankers). New autopilots and computer-based display aids, similar to those in aircraft, are now being used in ships.

Manipulators and Discrete Parts Handling

In a sense, manipulators combine the functions of process control and vehicle control. The manipulator base may be carried on a spacecraft, a ground vehicle, or a submarine, or its base may be fixed. The hand (gripper, end effector) is moved relative to the base in up to three degrees of translation and three degrees of rotation. It may have one degree of freedom for gripping, but some hands have differentially movable fingers or otherwise have more degrees of freedom to perform special cutting, drilling, finishing, cleaning, welding, paint spraying, sensing, or other functions.

Manipulators are being used in many different applications, including lunar moving vehicles, undersea operations, and hazardous operations in industry. The type of supervisory control and its justification differs according to the application.

The fact of a three-second time delay in the earth-lunar control loop resulting from round-trip radio transmission from earth leads to instabilities, unless an operator waits three seconds after each of a series of incremental movements. This makes direct manual control time-consuming and impractical. Sheridan and Ferrell (1967) proposed having a computer on the moon receive commands to complete segments of a movement task locally using local sensors and local computer program control. They proposed calling this mode supervisory control. Delays in sending the task segments from earth to moon would be unimportant, so long as rapid local control could introduce actions to deal with obstacles or other self-protection rapidly.
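The cost of the move-and-wait strategy can be illustrated with simple arithmetic. The sketch below assumes, purely for illustration, that a task consists of equal incremental moves, that under direct control the operator waits one full round-trip delay after each move, and that under supervisory control each commanded segment costs one round-trip delay before its moves are executed locally without further waiting. The function names and the example numbers other than the three-second delay are hypothetical.

```python
def move_and_wait_seconds(moves: int, move_s: float, delay_s: float) -> float:
    """Direct manual control: every incremental move is followed by a full delay."""
    return moves * (move_s + delay_s)


def supervisory_seconds(segments: int, moves_per_segment: int,
                        move_s: float, delay_s: float) -> float:
    """Supervisory control (assumed model): one delay per commanded segment,
    after which the local computer executes the segment's moves back to back."""
    return segments * (delay_s + moves_per_segment * move_s)
```

For example, 20 one-second moves with a three-second delay take 80 seconds under move-and-wait, whereas the same 20 moves grouped into 4 segments of 5 moves take 32 seconds under these assumptions.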

The importance of supervisory control to the undersea vehicle manipulator is also compelling. There are things the operator cannot sense or can sense only with great difficulty and time delay (e.g., the mud may easily be stirred up, producing turbid opaque water that prevents the video camera from seeing), so that local sensing and quick response may be more reliable. For monotonous tasks (e.g., inspecting pipelines, structures, or ship hulls or surveying the ocean bottom to find some object) the operator cannot remain alert for long; if adequate artificial sensors could be provided for the key variables, supervisory control should be much more reliable. The human operator may have other things to do, so that supervisory control would facilitate periodic checks to update the computer program or help the remote device get out of trouble. A final reason for supervisory control, and often the most acceptable, is that, if communications, power, or other systems fail, there are fail-safe control modes into which the remote system reverts to get the vehicle back to the surface or otherwise render it recoverable.

Many of these same reasons for supervisory control apply to other uses of manipulators. Probably the greatest current interest in manipulators is for manufacturing (so-called industrial robots), including machining, welding, paint spraying, heat treatment, surface cleaning, bin picking, parts feeding for punch presses, handling between transfer lines, assembly, inspection, loading and unloading finished units, and warehousing. Today repetitive tasks such as welding and paint spraying can be programmed by the supervisor, then implemented with the control loops that report position and velocity. If the parts conveyor is sufficiently reliable, welding or painting nonexistent objects seldom occurs, so that more sophisticated feedback, involving touch or vision, is usually not required. Manufacturing assembly, however, has proven to be a far more difficult task.

In contrast to assembly line operations, in which, even if there is a mix of products, every task is prespecified, in many new applications of manipulators with supervisory control, each new task is unpredictable to a considerable extent. Some examples are mining, earth moving, building construction, building and street cleaning and maintenance, trash collection, logging, and crop harvesting, in which large forces and power must be applied to external objects. The human operator is necessary to program or otherwise guide the manipulator in some degrees of freedom, to accommodate each new situation; in other respects certain characteristic motions are preprogrammed and need only to be initiated at the correct time. In some medical applications, such as microsurgery, the goal is to minify rather than enlarge motions and forces, to extend the surgeon’s hand tools through tiny body cavities to cut, to obtain tissue samples, to remove unhealthy tissue, or to stitch. Again, the surgeon controls some degrees of freedom (e.g., of an optical probe or a cauterizing snare), while automation controls other variables (e.g., air or water pressure).

THEORY AND METHOD

There are a number of limited theories and methods in the human factors literature that should be brought to bear on the use of supervisory control systems. A great deal remains to be done, however, to apply them in this context. The discussion that follows deals with five aspects of the problem. The first considers current formal models of supervisory control. The second discusses display and command problems. The third takes up computer knowledge-based systems and their relation to the internal cognitive model of the operator for on-line decision making in supervisory control. The fourth deals with mental workload, stress, and research on attention and resource allocation as they relate to supervisory control. The fifth is concerned with issues of human error, system reliability, trust, and ultimate authority.

Modeling Supervisory Control

In the area of real-time monitoring and control of continuous dynamic processes, the optimal control model (Baron and Kleinman, 1969) describes the perceptual motor behavior of closed-loop systems having relatively short time constants. Experimentation on this topic has been limited, suggesting that this class of model may be broadened to represent monitoring and discrete decision behavior in dynamic systems in which control is infrequent (Levison and Tanner, 1971). There are also attempts to extend this work to explore its applicability to more complex systems (Baron et al., 1981; Kok and Stassen, 1980).

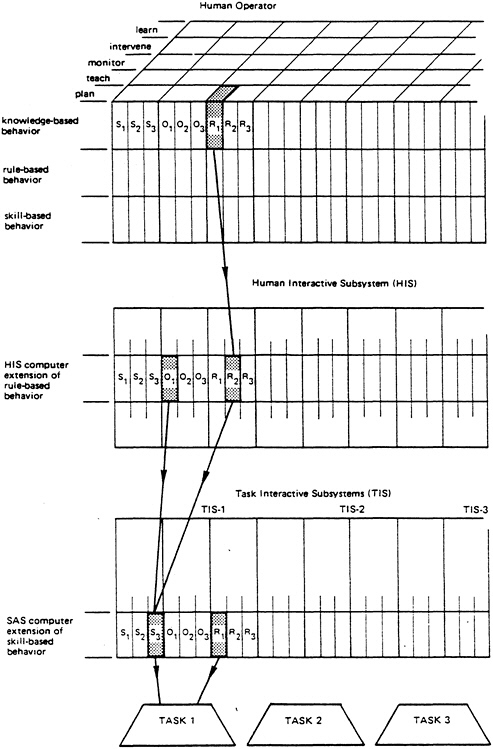

An increasing number of supervisory control systems can be represented by a hierarchy of three kinds of interaction (Sheridan, 1982): (1) a human operator interacting with a high-level computer, (2) low-level computers interacting with physical entities in the environment, and (3) the resulting multilevel and multi-loop interaction, having interesting symmetrical properties (Figure 4–1). Since there are three levels of intelligence (one human, two artificial), the allocation of cognitive and computational tasks among the three becomes central. Using Rasmussen’s (1979) categorization of behavior into knowledge-based, rule-based, and skill-based behavior, the operator may assign rule-based tasks (e.g., pattern recognition, running planning and predictive models, organizing) to the high-level computer (Figure 4–2). Similarly, skill-based tasks (filtering, display generation, servo-control) may be assigned to various low-level computers. The operator must concentrate on the environmental tasks that compete for his attention, allocating his attention among five roles: (1) planning what to do next, (2) teaching or on-line programming of the computer(s), (3) monitoring the (semi)

FIGURE 4–1 Multiloop Interaction in a Supervisory Control System

automatic behavior of the system for abnormalities, (4) intervening when necessary to make adjustments, maintaining, repairing, or assuming direct control, and (5) learning from experience.

Display and Command

Design of integrated computer-generated displays is not a new problem, and the military services and space agencies have pioneered developments in this area for aircraft and various command and control systems. But the technology continues to create more possibilities. Operators of supervisory control systems need to have fewer displays, not more, telling them what they want or need to know when they want or need to know it. An additional design problem is that what operators think they need and what they really need may differ.

As computer collaborators become more and more sophisticated, a useful type of display would tell the operator what the computer knows and assumes, both about the system and about the operator, and what it intends to do.

An important source of guidance regarding the design of displays has been and will continue to be the intuitive beliefs of experienced operators. The designer needs to know how much credence to give to these intuitions. Too little attention may mean forfeiting a valuable source of information; too much may result in inappropriate designs that fit untested folk wisdom (a pilot’s belief in the value of verisimilitude in displays is an example of the latter problem). Ericsson and Simon’s taxonomy (1980) of situations in which introspection is more and less valid is one point of departure for research. Studies of meta-cognition, people’s understanding of their own cognitive processes (as contrasted with current psychological understanding), are a second (Cavanaugh and Borkowski, 1980). The studies of clinical judgment conducted in the 1950s and 1960s (Goldberg, 1968) are a third. These studies found that in the course of their diagnoses expert clinicians imagine that they rely on more variables and use them in a more complex manner than appears to be the case from attempts to model their diagnostic processes.

Although good-quality computer-generated speech is both available and cheap, and although it can give operators warnings and other information without their prior attention being directed to it, little imaginative use of such a capability has been made as yet in supervisory control.

The use of command language has arisen more recently in conjunction with teaching or programming robot systems. A more primitive form of it is found in the new autopilot command systems in aircraft. Giving commands to a control system by means of strings of symbols in syntax is a new game for most operators. Progress in this area depends on careful technology transfer from data processing that is self-paced to dynamic control in which the pace is determined by many factors. Naturalness in use of such language is also an important goal.

Command, in many circumstances, is not a solitary task. The operator must interact with many individuals in order to get a job done. This may be particularly the case when the nature of the emergency means that the technical system cannot be trusted to report and respond reliably—that is, an interacting human system may assume (and perhaps interface with) some of the functions of the interacting technical system. The kinds of human interaction possible include requesting information, monitoring the response of the system, notifying outsiders (e.g., for evacuation, to provide special skills), and terminating unnecessary communications. When are these interactions initiated? How valid are the cues? What features of technical systems make such intervention more and less feasible? How does having others around affect operators’ thoughts and actions (e.g., are they more creative, more risk-averse, more careful)?

Another question that arises with multiperson systems is whether one individual (or group) should both monitor for and cope with crises. In medicine it is not always assumed that the same individual has expertise in both diagnosis and treatment. Perhaps in supervisory control systems the equivalent functions should be separated, and different training and temperament called for in monitoring and in intervention.

Computer Knowledge-Based Systems and the Operator’s Internal Cognitive Model

It is not a new idea that, in performing a task, people somehow represent the task in their heads and calculate whether, given certain constraints, doing this will result in that. Such ideas derive from antiquity.

Human-Machine Control

In the 1950s the development of the “observer” in control systems theory formalized this idea. That is, a differential equation model of the external controlled process is included in the automatic controller and is driven by the same input that drives the actual process. Any discrepancy between the output of this computerized model of the environmental process and the actual process is fed back as a correction to the internal model to force its variables to be continuously the same as the actual process. Then any and all state variables as represented (observed) in the internal model may be used to directly control the process, if direct measurement of those same variables in the actual environment may be costly, difficult, or impossible. This physical realization of the traditional idea of the internal model probably provoked much of the current research in cognitive science.
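The observer idea can be illustrated with a minimal sketch. Here a hypothetical two-state process (position and velocity) is driven by a known input, only position is measured, and an internal model driven by the same input is corrected by the discrepancy between measured and modeled position. The process dynamics, the damping value, the input signal, and the correction gains `l1` and `l2` are all assumptions chosen for illustration.

```python
import math


def run_observer(steps: int = 1000, dt: float = 0.01) -> tuple[float, float]:
    """Return (actual velocity, observed velocity) after the simulation."""
    a = 1.0              # damping of the assumed process
    l1, l2 = 5.0, 6.0    # assumed correction gains for the internal model
    x1, x2 = 0.0, 2.0    # actual process: position, velocity (velocity not measured)
    h1, h2 = 0.0, 0.0    # internal model starts with a wrong velocity
    for k in range(steps):
        u = math.sin(0.5 * k * dt)   # the same input drives process and model
        discrepancy = x1 - h1        # measured output minus model output
        # Actual process: d(x1)/dt = x2, d(x2)/dt = -a*x2 + u
        x1, x2 = x1 + x2 * dt, x2 + (-a * x2 + u) * dt
        # Internal model: same equations plus the fed-back correction
        h1, h2 = (h1 + (h2 + l1 * discrepancy) * dt,
                  h2 + (-a * h2 + u + l2 * discrepancy) * dt)
    return x2, h2
```

With these gains the estimation error decays, so the observed velocity `h2` converges to the unmeasured actual velocity `x2` and could be used for control in its place.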

Running in fast-time, updating initial conditions at each of a succession of such calculations, the model becomes a “predictor display” that provides the operator with a projection of what will happen under given assumptions of input (Kelly, 1968). Further comparisons can be made between outputs of such real-time models run in the computer and those of the operator’s own internal model, not only for control but also for failure detection and isolation (Sheridan, 1981). Tsach has developed a realization of this as an operator aid for application to process control (Tsach et al., 1982).
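A minimal sketch of the predictor computation follows. At each display update the current state is taken as the initial condition, and a process model is run ahead of real time under an assumed input to produce the projected path. The second-order model, its damping value, and the function name `predict` are illustrative assumptions.

```python
def predict(state: tuple[float, float], assumed_input: float,
            horizon_s: float, dt: float = 0.1, damping: float = 1.0) -> list[float]:
    """Project future positions from the current (position, velocity) state,
    assuming the input stays constant over the prediction horizon."""
    position, velocity = state
    path = []
    for _ in range(round(horizon_s / dt)):
        # Assumed model: d(position)/dt = velocity,
        #                d(velocity)/dt = -damping*velocity + input
        position, velocity = (position + velocity * dt,
                              velocity + (-damping * velocity + assumed_input) * dt)
        path.append(position)
    return path
```

Calling `predict` again with the latest measured state at each update corresponds to "updating initial conditions at each of a succession of such calculations," and calling it with different values of `assumed_input` shows the operator the consequences of alternative actions.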

Ideally the computer should keep the operator informed of what it is assuming and computing, and the operator should keep the computer informed of what he or she is thinking.

Cognitive Science

In the last several years cognitive psychology has contributed some theories about human inference that make the application of knowledge-based systems particularly relevant to supervisory control. The idea is that reasoning and decision making consist of the developing and searching of complex problem spaces (Newell and Simon, 1972) and of applying one or more inference procedures about information in a knowledge base that represents the decision maker’s understanding of the situation (Collins and Loftus, 1975). This is similar to but more inclusive and less well developed than the internal process model used by control theorists. Rasmussen’s (1979) qualitative model of human decision making about process control is entirely compatible with this view. And, the contribution of specialists in artificial intelligence concerning knowledge-based systems provides one way to implement the computer portion of such human-computer interaction.

A number of human factors problems relate to people’s ability to hold in mind the basic workings of a complex system and to update that view depending on the current state of the system. Recent studies of cognitive processes in skilled operators such as taxi drivers (Chi et al., 1980) or chess players (Chase and Simon, 1973) begin to provide the kind of information that will be needed by human factors designers evaluating these issues. For example, how can people best be trained to develop effective problem spaces? What is the optimal mix of analog and digital representation? How can the computer’s data base system be used to aid the individual in developing and updating of such an internal model? What means can be used to ensure that the current state of the model fits with the current state of the system? With what frequency should a person be interrogated about his or her current view of the model to make sure that he or she is still “with it” in control of the system? For human supervision to be really effective, a detailed understanding of how the human controller grasps a complex system at any moment in time and updates it over time is necessary.

How can we determine a given operator’s internal cognitive model of a given task at a given time? One method is to ask the operator to express it in natural language, but the obvious difficulty is that each operator’s expression is unique, making it very difficult either to measure discrepancy from reality or to compare across operators. Verbal protocol techniques (Bainbridge, 1974) make use of key words and relations. More formal psychometric techniques (multiattribute utility assessment, conjoint or multidimensional scaling, interpretive structural modeling, policy capturing, and fuzzy set theory) offer some promising ways of telling a computer one’s knowledge and values in structural form.

A likely (and perhaps common) source of difficulty is a mismatch in the mental models of a system of those who design it and those who operate it. Operators who fail to recognize this disparity are subject to unpleasant surprises when the system behaves in unexpected ways. Operators who do recognize it may fail to exploit the full potential of the system for fear of surprises if they push it into unfamiliar territory (Young, 1981). On a descriptive level, it would be useful to understand the correspondence between the mental models of designers and operators as well as to know which experiences signal operators that there is a mismatch and how they cope with that information. On a practical level, it would be useful to know more about the possibility of improving the match of these two models by steps such as involving operators more in the design process or showing them how the design evolved (rather than giving them a reconstruction of its final state). The magnitude of these problems is likely to grow to the extent that designers and operators have different training, experience, and intensity of involvement with systems.

Mental Workload

The concept of mental workload as discussed in this section is not unique to supervisory control, but it is sufficiently important in this context to be included here as a special consideration.

Human-Machine Control

This section is adapted from Sheridan and Young (1982).

During the last decade “mental workload” has become a concept of great controversy, not because of disagreement over whether it is important, but because of disagreement over how to define and measure it. Military specifications for mental workload are nevertheless being prepared by the Air Force, based on the assumption that mental workload measures will predict—either at the design stage or during a flight or other operation—whether an operation can succeed. In other words, it is believed that measurements of mental workload are more sensitive in anticipating when pilot or operator performance will break down than are conventional performance measures of the human-machine system.

At the present time “mental workload” is a construct. It must be inferred; it cannot be observed directly like human control response or system performance, although it might be defined operationally in terms of one or several or a battery of tests. There is a clear distinction between mental and physical workload: The latter is the rate of doing mechanical work and expending calories. There is consensus on measurements based on respiratory gases and other techniques for measuring physical workload.

Of particular concern are situations having sustained mental workloads of long duration. Many aircraft missions continue to require such effort by the crew. But the introduction of computers and automation in many systems has come to mean that for long periods of time operators have nothing to do; the workload may be so low as to result in boredom and serious decrement in alertness. The operator may then suddenly be expected to observe events on a display and make critical judgments, and indeed even to detect an abnormality, diagnose what failed, and take over control from the automatic system. One concern is that the operator, not being “in the loop,” will not have kept up with what is going on, and will need time to reacquire that knowledge and orientation to make the proper diagnoses or take over control. Also of concern is that at the beginning of the transient the computer-based information will be opaque to the operator, and it will take some time even to figure out how to access and retrieve from the system the needed information.

There have been four approaches to measuring mental workload. One approach, used by the aircraft manufacturers, avoids coping directly with measurements of the operator per se and bases workload on a task time-line analysis: the more tasks the operator has to do per unit of time, the greater the workload. This provides a relative index of workload that characterizes task demand, other factors being equal. It says nothing about the mental workload of any actual person and indeed could apply to a task performed by a robot.
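The time-line idea can be illustrated with a minimal sketch. It assumes a hypothetical list of tasks, each given as a start time and a duration, and takes the index for each time window to be the time required divided by the time available. The function name, the window length, and the example data are illustrative assumptions, not the manufacturers' actual procedure.

```python
def timeline_workload(tasks, window, horizon):
    """Return a relative workload index for each time window.

    tasks   -- list of (start, duration) pairs in seconds (hypothetical data)
    window  -- window length in seconds
    horizon -- total time covered in seconds
    The index is time required / time available. Concurrent tasks add,
    so a value above 1.0 means more work is demanded than time allows.
    """
    n_windows = int(horizon // window)
    index = []
    for k in range(n_windows):
        w_start, w_end = k * window, (k + 1) * window
        required = 0.0
        for start, duration in tasks:
            # Portion of this task that falls inside the current window.
            overlap = min(start + duration, w_end) - max(start, w_start)
            if overlap > 0:
                required += overlap
        index.append(required / window)
    return index


# Hypothetical mission segment: four tasks over 30 seconds.
example_tasks = [(0, 5), (3, 4), (12, 2), (20, 10)]
print(timeline_workload(example_tasks, window=10, horizon=30))
```

Note that the index is computed from the task list alone, which is exactly why it characterizes task demand rather than the state of any particular operator.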

The second approach is perhaps the simplest—to use the operator’s subjective ratings of his or her perceived mental workload. This may be done during or after the events judged. One form of this is a single-category scale similar to the Cooper-Harper scale for rating aircraft handling quality. Perhaps more interesting is a three-attribute scale, there being some consensus that “fraction of total time busy,” “cognitive complexity,” and “emotional stress” are rather different characteristics of mental workload and that one or two of these can be large when the other(s) are small. These scales have been used by the military services as well as aircraft manufacturers. A criticism of them is that people are not always good judges of their own ability to perform in the future. Some pilots may judge themselves to be quite capable of further sustained effort at a higher level when in fact they are not.

The third approach is the so-called secondary task or reserve capacity technique. In it a pilot or operator is asked to allocate whatever attention is left over from the primary task to some secondary task, such as verbally generating random numbers, tracking a dot on a screen with a small joystick, etc. Theoretically, the better the performance on the secondary task, the less the time required and therefore the less the mental workload of the primary task. A criticism of this technique is that it is intrusive; it may itself reduce the attention allocated to the primary task and therefore be a self-contaminating measure. And in real flight operations the crew may not be so cooperative in performing secondary tasks.

The fourth and final technique is really a whole category of partially explored possibilities—the use of physiological measures. Many such measures have been proposed, including changes in the electroencephalogram (ongoing or steady-state), evoked response potentials (the best candidate is the attenuation and latency of the so-called P300, occurring 300 milliseconds after the onset of a challenging stimulus), heart rate variability, galvanic skin response, pupillary diameter, and frequency spectrum of the voice. All of these have proved to be noisy and unreliable.

Both the Air Force and the Federal Aviation Administration currently have major programs to develop workload measurement techniques for aircraft piloting and traffic control.

If an operator’s mental workload appears to be excessive, there are several avenues for reducing it or compensating for it. First, one should examine the situation for causal factors that could be redesigned to be quicker, easier, or less anxiety-producing. Or perhaps parts of the task could be reassigned to others who are less loaded, or the procedure could be altered so as to stretch out in time the succession of events loading the particular operator. Finally, it may be possible to give all or part of the task to a computer or automatic system.

Cognitive Science

It is important, for purposes of evaluating both mental workload and cognitive models as discussed in the previous section, to note that there has been an enormous change in models of mental processing in both psychology and computer science. In their recent paper, Feldman and Ballard (in press) argue that:

Contemporary computer science has sharpened our notions of what is “computable” to include bounds on time, storage and other resources. It does not seem unreasonable to require that computational models and cognitive science be at least as plausible in their postulated resource requirement.

The critical resource that is most obvious is time. Neurons, whose basic computational speed is a few milliseconds must be made to account for complex behaviors which are carried out in a few hundred milliseconds…(Posner, 1978). This means that higher complex behaviors are carried out in less than a hundred time steps. It may appear that the problem posed here is inherently unsolvable and that we have made an error in our formulation, but recent results in computational complexity theory suggest that networks of active computing elements can carry out at least simple computations in the required time range—these solutions involve using massive numbers of units and connections and we also address the question of limitations on these resources.

There is also evidence from experimental psychology (Posner, 1978) that the human mind is, at least in part, a parallel system. From neuropsychological considerations there is reason to suppose that a parallelism is represented in regional areas of the brain responsible for different sorts of cognitive functions. For example, we know that different visual maps (Cowey, 1979) underlie object recognition and that separate portions of the cortex are involved in the comprehension and production of language. We also know more about the role of subcortical and cortical structures in motor control.

The study of mental workload has simply not kept up with these advances in the conceptualization of the human mind as a complex of subsystems. The majority of researchers of human workload have studied the interference of one complex task with another. There is abundant evidence in the literature that such interference does occur. However, this general interference may account for only a small part of the variance in total workload. More important may be the effects of the specific cognitive systems shared by two tasks. Indeed, Kinsbourne and Hicks (1978) have recently formulated a theory of attention in which the degree of facilitation or interference between tasks depends on the distance between their cortical representation. The notion of distance may be merely metaphorical, since we do not know whether it represents the actual physical distance on the cortex or whether it involves a relative interconnectivity of cortical area; the latter idea seems more reasonable.

Viewing humans in terms of cognitive subsystems changes the perspective on mental workload (see Navon and Gopher, 1979). It is unusual for any human task to involve only a single cognitive system or to occur at any fixed location in the brain. Most tasks differ in sensory modality, in central analysis systems, and in motor output systems. There is need for basic research to understand more about the separability and coordination of such cognitive systems. We also need a task analysis that takes advantage of the new cognitive systems approach to ask how tasks distribute themselves among different cognitive systems and when performance of different tasks may draw on the same cognitive system. There is also an obvious connection between a cognitive systems approach and analysis of individual differences based on psychometric or information processing concepts, and much needs to be done to link analysis of individual abilities to the ability to time-share activity within the same cognitive system or across different systems (Landman and Hunt, 1982).

An emphasis on separable cognitive systems does not necessarily mean that a more unified central controlling system is unnecessary. Indeed, widespread interference between tasks of very different types (Posner, 1980) suggests that such a central controller is a necessary aspect of human performance. There are a number of theoretical views addressing the problem of self-regulation of behavior, particularly in stressful situations. Two principles have been applied by human factors engineers: The first is that attention narrows under stress. Thus, more attention is allocated to central aspects of the task while less attention is allocated to more peripheral or secondary aspects. Sometimes this principle has been applied to positions in visual space, arguing that peripheral vision is sacrificed more than central vision under stress. The degree to which the general principle applies automatically to positions in visual space or to allocation of function within tasks is simply not very well understood, but it should be. A second principle of the relationship between stress and attention suggests that under stress habitual behaviors take precedence over new or novel behaviors. The idea is that behaviors originally learned under stressful conditions tend to return when conditions are again stressful. This view is particularly important with respect to the process of changing people from one task layout to another. If the original learning takes place under high stress conditions while transition occurs under relatively low stress conditions, a stressful situation may tend to reinstate the responses learned in the original configuration.

Recently cognitive psychologists have begun to take into account emotional responses produced under conditions of stress (Bower, 1981). One development emphasizes links between individual differences in emotional responding and attention (see Posner and Rothbart, 1980, for a review). Although it is a highly speculative hypothesis at this time, this work suggests that attention may be viewed as a method for controlling the degree of emotional responding that occurs during stressful conditions. In particular, differences in personality and temperament may affect the degree to which attention and other mechanisms are successful in managing stress. These new models relate emotional responding to more cognitive processes. They have the potential of helping us understand more about the effects of emotion and how it may guide cognition and behavior under stressful conditions. Since this work has just begun, there are as yet few general principles to link the emotional responses to cognition. Developments along this line could be useful for human factors engineers, particularly those involved in training and retraining and those involved in management of stress under battlefield conditions.

For the most part, this discussion has been from the viewpoint of the overloaded operator. For much of the time, however, the operator may be underloaded. In the field of vigilance research, which is concerned with human behavior in systems in which signal detection is required but the signals are infrequent and difficult to detect, a great deal is known about exactly what parameters of signal presentation affect performance. The signal detection model (Green and Swets, 1966) has been shown to be useful in analyzing such behavior. Again, its applicability has not been evaluated in more complex tasks in which signals are represented by more complex patterns of activity as would be the case in supervisory control systems of the types described above.
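For a simple vigilance task, the signal detection analysis can be sketched as follows. This is a minimal illustration that assumes the standard equal-variance Gaussian form of the model, in which sensitivity (d') is separated from the observer's response criterion. The function name, the correction applied to the rates, and the counts used in the example are assumptions made for the sketch, not values from any study cited here.

```python
from statistics import NormalDist


def sdt_indices(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) under the equal-variance Gaussian model."""
    # Log-linear correction (an assumption of this sketch): keeps both
    # rates strictly between 0 and 1, where the inverse normal is defined.
    hit_rate = (hits + 0.5) / (hits + misses + 1.0)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1.0)

    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    d_prime = z(hit_rate) - z(fa_rate)            # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(fa_rate))  # response bias
    return d_prime, criterion


# Hypothetical watch period: 50 signal trials and 50 noise trials.
d_prime, criterion = sdt_indices(hits=40, misses=10,
                                 false_alarms=10, correct_rejections=40)
print(round(d_prime, 2), round(criterion, 2))
```

The value of the separation is that a drop in detections over a long watch can be attributed either to reduced sensitivity or to a more conservative criterion. Whether the same two-parameter description holds when the "signal" is a complex pattern of system activity is the open question raised above.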

Human Proficiency and Error: Culpability, Trust, and Ultimate Authority

Designers of the large, complex, capital-intensive, high-risk-of-failure systems we have been discussing would like to automate human operators out of their systems. But they know they must depend on them to plan, program, monitor, step in when failures occur with the automation, and generalize on system experience. They are also terrified of human error.

Both the commercial aviation and the nuclear power industries are actively collecting data on human error and trying to use it analytically in conjunction with data on failures in physical components and subsystems to predict the reliability of overall systems. The public and the Congress, in a sense, are demanding it, on the assumption that it is clear what human error is, how to measure it, and even how to stop it.
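The simplest form of such a combined calculation can be sketched as below. It assumes, purely for illustration, that the system succeeds only if every hardware component works and every required human action is performed correctly, and that all failures are independent. The function name and the probabilities are hypothetical, and real reliability analyses are considerably more elaborate.

```python
import math


def series_reliability(failure_probs):
    """Probability that every element succeeds, assuming independence."""
    return math.prod(1.0 - p for p in failure_probs)


# Hypothetical per-demand failure probabilities.
hardware = [0.001, 0.002]   # e.g., a valve and a sensor
human = [0.01, 0.003]       # e.g., a misread display and a skipped step

print(series_reliability(hardware))          # hardware alone
print(series_reliability(hardware + human))  # hardware plus human actions
```

The independence assumption is the weak point of this sketch: to the extent that one human failure makes another more likely, multiplying separate probabilities will overstate system reliability.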

Human error is commonly thought of as a mistake of action or judgment that could have been avoided had the individual been more alert, attentive, or conscientious. That is, the source of error is considered to be internal and therefore within the control of the individual and not induced by external factors such as the design of the equipment, the task requirements, or lack of adequate training.

Some behavioral scientists may claim that people err because they are operating “open loop”—without adequate feedback to tell them when they are in error. They would have supervisory control systems designers provide feedback at every potential misstep. Product liability litigants sometimes take a more extreme stance—that equipment should be designed so that it is error proof, without the opportunity for people to (begin to) err, get feedback, then correct themselves.

The concept of human error needs to be examined. The assertion that an error has been committed implies a sharp and agreed-upon dividing line between right and wrong, a simple binary classification that is obviously an oversimplification. Human decision and action involve a multidimensional continuum of perceiving, remembering, planning, even socially interacting. Clearly the fraction of errors in any set of human response data is a function of where the boundary is drawn. How does one decide where to draw the line dividing right from wrong across the many dimensions of behavior? In addition, is an error of commission (e.g., actuating a switch when it is not expected) equivalent to an error of omission (e.g., failing to actuate a switch when it is expected)? Is it useful to say that, in both these instances, an error has been committed? What then exactly do we mean by human error?
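The dependence of the error fraction on the boundary can be made concrete with a minimal sketch. It assumes a single hypothetical dimension of behavior, the deviation of each response from a target value, and counts a response as an error when the deviation exceeds a chosen tolerance. The data and the tolerances are invented for illustration.

```python
def error_fraction(deviations, tolerance):
    """Fraction of responses counted as errors for a given tolerance."""
    if not deviations:
        raise ValueError("no responses to classify")
    errors = sum(1 for d in deviations if abs(d) > tolerance)
    return errors / len(deviations)


# Hypothetical deviations of eight responses from the intended setting.
deviations = [0.1, -0.4, 0.9, 1.6, -2.2, 0.3, 1.1, -0.7]

# The same behavior yields very different "error rates" as the line moves.
for tolerance in (0.5, 1.0, 2.0):
    print(tolerance, error_fraction(deviations, tolerance))
```

With real behavior the problem is harder, since a boundary would have to be chosen on each of many dimensions at once.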

People tend to differ from machines in that people are more inclined to make “common-mode errors,” in which one failure leads to another, presumably because of concurrency of stimuli or responses in space or time. Furthermore, as suggested earlier, if a person is well practiced in a procedure ABC, and must occasionally do DBE, he or she is quite likely in the latter case to find himself or herself doing DBC. This type of error is well documented in process control, in which many and varied procedures are followed. In addition, when people are under stress of emergency they tend more often to err (sometimes, however, analysts may assume that operators are aware of an emergency when they are not). People are also able to discover and correct their own errors, which they surely do in many large-scale systems to avert costly accidents.

Presumably the rationale for defining human error is to develop means for predicting when errors are likely to occur and for reducing their frequency (Swain and Guttman, 1980). Various taxonomies of human error have been devised. There are errors of omission and errors of commission. Errors may be associated with sensing, memory, decision making, or motor skill. Norman (1981) distinguishes mistakes (wrong intention) from slips (correct intention but wrong action). But at present there is no accepted taxonomy on which to base the definition of human error, nor is there agreement on the dimensions of behavior that should be invoked in such a taxonomy.

Both a case study approach to human error and the accumulation of statistics on errors that lead to accidents are useful. Both approaches, however, require that the investigator have a theory or model of human error or accident causation and a framework from which to approach the analysis. In addition, there is a need to understand the causal chain between human error and accident.

One has only to examine a sampling of currently used accident reporting forms to realize the importance of the need for a framework for analyzing human error. They range from medical history forms to equipment failure reports. None that we have examined deals satisfactorily with the role of human behavior in contributing to the accident circumstances.

Furthermore, for accident reports to be useful, their aim needs to be specified. There is an inherent conflict between the goals of understanding what happened and attempting to fix blame for it. The former requires candor, whereas the latter discourages it. Other potential biases in these reports include: (a) exaggerating in hindsight what could have been anticipated in foresight; (b) being unable to reconstruct or retrieve hypotheses about what was happening that no longer make sense in retrospect; (c) telescoping the sequence of events (making their temporal course seem shorter and more direct); (d) exaggerating one’s own role in events; (e) failing to see the internal logic of others’ actions (from their own perspective). Variants of these reporting biases have been observed elsewhere (Nisbett and Ross, 1980). Their presence and virulence in accident reports on supervisory control systems merit attention.

In addition to these fundamental research needs, there is a variety of related issues particularly relevant to supervisory control systems that should be addressed.

In supervisory control systems it is becoming more and more difficult to establish blame, for the information exchange between operators and computers is complex, and the “error,” if there ever was any, could be in hardware or software design, maintenance, or management.

Most of us think we observe that people are better at some kinds of tasks than computers, and computers are better at some others. Therefore, it seems that it would be quite clear how roles should be allocated between people and computers. But the interactions are often so subtle as to elude understanding. It is also conventional wisdom to say that people should have the ultimate authority over machines. But again, in actual operating systems we usually find ourselves ill prepared to assert which should have authority under what circumstances and for how long.

Operators in such systems usually receive fairly elaborate training in both theory and operating skills. The latter is or should be done on simulators, since in actual systems the most important (critical) events for which the operator needs training seldom occur. Unfortunately there has been a tendency to standardize the emergencies (classic stall or engine fire in aircraft, large-break loss-of-cooling accident in nuclear plants) and repeat them on the simulator until they become fixed patterns of response. There seldom is emphasis on responding to new, unusual emergencies, failures in combination, etc., which the rule book never anticipated. Simulators would be especially good for such training.

A frustrating, and perhaps paradoxical, feature of “emergency” intervention is that supervisors must still rely on and work with systems that they do not entirely trust. The nature and success of their intervention is likely to depend on their appraisal of which aspects of the system are still reliable. Research might help predict what doubts about related malfunctions are and are not aroused by a particular malfunction. Does the spread of suspicion follow the operator’s mental model (e.g., lead to other mechanically connected subsystems) or proceed along a more associative line (e.g., mistrust all dials)? A related problem is how experience with one malfunction of a complex system cues the interpretation of subsequent malfunctions. Is the threshold of mistrust lowered? Is there an unjustified assumption that the same problem is repeating itself, or that the same information-searching procedures are needed? How is the expectation of successful coping affected? Do operators assume that they will have the same amount of time to diagnose and act? Finally, how does that experience generalize to other technical systems? Do bad experiences lead to a general resistance to innovation?

A key to answering these questions is understanding the operators’ own attribution processes. Do they subscribe to the same definition of human error as do those who evaluate their performance? What gives them a feeling of control? How do they assign responsibility for successful and unsuccessful experiences? Although their mental models should provide some answers to these questions, others may be sought in general principles of causal attribution and misattribution (Harvey, et al., 1976).

CONCLUSIONS AND RECOMMENDATIONS

Supervisory control of large, complex, capital-intensive, high-risk systems is a general trend, driven both by new technology and by the belief that this mode of control will provide greater efficiency and reliability. The human factors aspects of supervisory control have been neglected. Without further research they may well become the bottleneck and most vulnerable or most sensitive aspect of these systems. Research is needed on:

- How to display integrated dynamic system relationships in a way that is understandable and accessible. This includes how best to allow the computer to tell the operator what it knows, assumes, and intends.

- How best to allow the operator to tell the computer what he or she wants and why, in a flexible and natural way.

- How to discover the internal cognitive model of the environmental process that the operator is controlling and improve that cognitive representation if it is inappropriate.

- How to aid the cognitive process by computer-based knowledge structures and planning models.

- Why people make errors in system operation, how to minimize these errors, and how to factor human errors into analyses of system reliability.

- How mental workload affects human error making in systems operation, and how to refine and standardize definitions and measures of mental workload.

- Whether human operator or computer should have authority under what circumstances.

- How to coordinate the efforts of the different humans involved in supervisory control of the same system.

- How best to learn from experience with such large, complex, interactive systems.

- How to improve communication between the designers and operators of technical systems.

Research is needed to improve our understanding of human-computer collaboration in such systems and on how to characterize it in models. The validation of such models is also a key problem, not unlike the problem of validating socioeconomic or other large-scale system models.

In view of the scale of supervisory control systems, closer collaboration between researchers and systems designers in the development of such systems may be the best way for such research, modeling, and validation to occur. And perhaps data collection should be built in to the normal—and abnormal—operation of such systems.

REFERENCES

Bainbridge, L. 1974 Analysis of verbal protocols from a process control task. In E.Edwards and F.Lees, eds., The Human Operator in Process Control. London: Taylor and Francis.

Baron, S., Zacharias, G., Muralidharan, R., and Lancraft, R. 1981 PROCRU: A model for analyzing flight crew procedures in approach to landing. In Proceedings of the Eighth IFAC World Congress, Tokyo.

Baron, S., and Kleinman, D. 1969 The human as an optimal controller and information processor. IEEE Trans. Man-Machine Systems MSS-10(11):9–17.

Bower, G. 1981 Mood and memory. American Psychologist 36:129–148.

Cavanaugh, J.C., and Borkowski, J.G. 1980 Searching for meta-memory-memory connections. Developmental Psychology 16:441–453.

Chase, W.G., and Simon, H.A. 1973 The mind’s eye in chess. In W.G.Chase, ed., Visual Information Processing. New York: Academic Press.

Chi, M.T.H., Chase, W.G., and Eastman, R. 1980 Spatial Representation of Taxi Drivers. Paper presented to the Psychonomics Society, St. Louis, November.

Collins, A.M., and Loftus, E.F. 1975 A spreading activation theory of semantic processing. Psychological Review 82:407–428.

Cowey, A. 1979 Cortical maps and visual perception. Quarterly Journal of Experimental Psychology 31:1–17.

Ericsson, A., and Simon, H. 1980 Verbal reports as data. Psychological Review 87:215–251.

Feldman, J.A., and Ballard, D.H. in press Connectionist models and their properties. In J.Beck and A.Rosenfeld, eds., Human and Computer Vision. New York: Academic Press.

Goldberg, L.R. 1968 Simple models or simple processes? Some research on clinical judgments. American Psychologist 23:483–496.

Green, D.M., and Swets, J.A. 1966 Signal Detection Theory and Psychophysics. New York: John Wiley.

Harvey, J.H., Ickes, W.J., and Kidd, R.F., eds. 1976 New Directions in Attribution Research. Hillsdale, N.J.: Lawrence Erlbaum.

Kelly, C. 1968 Manual and Automatic Control. New York: John Wiley.

Kinsbourne, M., and Hicks, R.L. 1978 Functional cerebral space: a model for overflow transfer and interference effects in human performance. In J.Requin, ed., Attention and Performance VIII. Hillsdale, N.J.: Lawrence Erlbaum.

Kok, J.J., and Stassen, H.G. 1980 Human operator control of slowly responding systems: supervisory control. Journal of Cybernetics and Information Sciences 3:124–174.

Landman, M., and Hunt, E.B. 1982 Individual differences in secondary task performance. Memory and Cognition 10:10–25.

Levison, W.H., and Tanner, R.B. 1971 A Control-Theory Model for Human Decision Making. National Aeronautics and Space Administration CR-1953, December.

Navon, D., and Gopher, D. 1979 On the economy of the human-processing system. Psychological Review 86:214–230.

Newell, A., and Simon, H.A. 1972 Human Problem Solving. Englewood Cliffs, N. J.: Prentice-Hall.

Nisbett, R., and Ross, L. 1980 Human Inference: Strategies and Shortcomings of Social Judgment. Englewood Cliffs, N. J.: Prentice-Hall.

Norman, D.A. 1981 Categorization of action slips. Psychological Review 88:1–15.

Posner, M.I. 1978 Chronometric Explorations of Mind. Hillsdale, N. J.: Lawrence Erlbaum.

1980 Orienting of attention. Quarterly Journal of Experimental Psychology 32:3–25.

Posner, M.I., and Rothbart, M.K. 1980 Development of attentional mechanisms. In J. Flowers, ed., Nebraska Symposium. Lincoln: University of Nebraska Press.

Rasmussen, J. 1979 On the Structure of Knowledge—A Morphology of Mental Models in a Man-Machine System Context. RISO National Laboratory Report M-2192. Roskilde, Denmark.

Sheridan, T. 1981 Understanding human error and aiding human diagnostic behavior in nuclear power plants. In J.Rasmussen and W.Rouse, eds., Human Detection and Diagnosis of System Failures. New York: Plenum Press.

1982 Supervisory Control: Problems, Theory and Experiment in Application to Undersea Remote Control Systems. MIT Man-Machine Systems Laboratory Report. February.

Sheridan, T., and Ferrell, W.R. 1967 Supervisory control of manipulation. Pp. 315–323 in Proceedings of the 3rd Annual Conference on Manual Control. NASA SP-144.

Sheridan, T.B., and Young, L.R. 1982 Human factors in aerospace. In R.Dehart, ed., Fundamentals of Aerospace Medicine. Philadelphia: Lea and Febiger.

Swain, A.D., and Guttman, H.E. 1980 Handbook of Human Reliability Analysis with Emphasis on Nuclear Power Plant Applications NUREG/CR 1278. Washington, D.C.: Nuclear Regulatory Commission.

Tsach, U., Sheridan, T.B., and Tzelgov, J. in press A New Method for Failure Detection and Location in Complex Systems. Proceedings of the 1982 American Control Conference. New York: Institute of Electrical and Electronics Engineers.

U.S. Army Research Institute 1979 Annual Report on Research, 1979. Alexandria, Va.: Army Research Institute for the Behavioral and Social Sciences.

Young, R.M. 1981 The machine inside the machine: users’ models of pocket calculators. International Journal of Man-Machine Studies 15:51–85.

Zajonc, R.B. 1980 Feeling and knowledge: preferences need no inferences. American Psychologist 35:151–175.