WORKSHOP IN BRIEF

Board on Life Sciences

Division on Earth and Life Studies

March 2016

A Workshop in Brief for the Standing Committee on Emerging Science for Environmental Health Decisions

September 30–October 1, 2015

Interindividual Variability: New Ways to Study and Implications for Decision Making

Intrinsic variability across the human population is associated with variable responses to environmental stressors. Understanding both the sources and the magnitude of that variability is a key challenge for scientists and has long been a key consideration for those tasked with risk-based decisions. The National Academies of Sciences, Engineering, and Medicine’s (the Academies) Standing Committee on Emerging Science for Environmental Health Decisions (ESEH), sponsored by the National Institute of Environmental Health Sciences (NIEHS), held its second workshop on interindividual variability on September 30 and October 1, 2015, in Washington, DC. The workshop highlighted state-of-the-art tools for studying this variability, including in vitro toxicology methods using highly diverse human cell lines, in vivo methods using highly diverse animal populations, and epidemiologic analytical approaches. The workshop focused on interindividual variability due to intrinsic differences in responses to chemical exposures rather than on variability due to differences in exposure.

Understanding interindividual variability in response to chemical exposures is extremely important, said Linda Birnbaum, director of the NIEHS. Traditional epidemiology has been limited in its ability to examine factors contributing to differential susceptibility and often must generalize from the experiences of occupational or otherwise limited cohorts to the overall population. Animal methods for evaluating toxicity have historically used genetically homogeneous populations, and most in vitro systems are also genetically homogeneous. Similarly, there are few examples of physiologically based pharmacokinetic (PBPK) models that describe how people with varying characteristics differ in the way their bodies handle a chemical exposure.

Birnbaum emphasized both that unaddressed variability, including variability from epigenetic changes, can lead to health disparities and that there has been a large increase in the knowledge that can be applied to understanding human interindividual variability. She and Lauren Zeise,1 acting director of the California Environmental Protection Agency’s Office of Environmental Health Hazard Assessment, mentioned a number of recent studies highlighting important differences in how people respond to stressors. These include studies of how a person’s health status (e.g., asthma) and life stage affect susceptibility. An example of a genetic influence on susceptibility is the effect of copper on the 1% of people who carry genes linked to Wilson’s disease.2

In the past decade, the National Research Council published four reports that included suggestions for addressing individual variability in risk assessments:

- 2009: Science and Decisions: Advancing Risk Assessment (known as the “Silver Book”)3

- 2011: Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde4

- 2014: Critical Aspects of EPA’s IRIS Assessment of Inorganic Arsenic: Interim Report5

- 2014: Review of EPA’s Integrated Risk Information System (IRIS) Process6

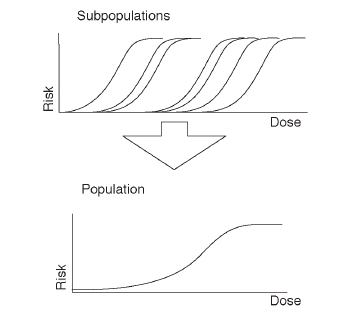

Zeise described how some individuals are substantially more sensitive than others to a given chemical exposure, making them more susceptible to disease. Heterogeneity in response at the individual level tends to “stretch out” the population-level dose response curve (see Figure 1).
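The “stretching” Zeise described can be illustrated with a short calculation. The sketch below assumes, purely for illustration, that individual response thresholds are log-normally distributed around the same median; the function names, the median of 10 dose units, and the two spread values are hypothetical. A wider spread leaves the median response unchanged but flattens the population curve, so a small fraction of people respond at much lower doses.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution (mean 0, sd 1)


def fraction_responding(dose: float, median_threshold: float, sigma_log: float) -> float:
    """Fraction of a population whose (assumed log-normal) threshold is at or below `dose`."""
    if dose <= 0:
        return 0.0
    z = (math.log(dose) - math.log(median_threshold)) / sigma_log
    return STD_NORMAL.cdf(z)


def dose_for_fraction(fraction: float, median_threshold: float, sigma_log: float) -> float:
    """Dose at which the given fraction of the population is expected to respond."""
    return median_threshold * math.exp(STD_NORMAL.inv_cdf(fraction) * sigma_log)


MEDIAN = 10.0        # hypothetical median threshold (arbitrary dose units)
HOMOGENEOUS = 0.3    # narrow spread, e.g., an inbred animal strain (hypothetical)
HETEROGENEOUS = 1.5  # wide spread, e.g., a diverse human population (hypothetical)

for dose in (1, 3, 10, 30, 100):
    print(f"dose {dose:>3}: "
          f"homogeneous {fraction_responding(dose, MEDIAN, HOMOGENEOUS):.3f}, "
          f"heterogeneous {fraction_responding(dose, MEDIAN, HETEROGENEOUS):.3f}")

# Dose at which 1% of each population responds
print(dose_for_fraction(0.01, MEDIAN, HOMOGENEOUS),
      dose_for_fraction(0.01, MEDIAN, HETEROGENEOUS))
```

In this toy example both curves cross 50% at the same dose, but the dose that affects 1% of the heterogeneous population is more than ten times lower than the corresponding dose for the homogeneous one.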

Risk assessment decisions based on 20th-century tools often rely on occupational exposure studies or on studies of homogeneous animal models, populations that are not as varied as the human population. Uncertainty factors of 10 are generally used to account for population variability in response. If new tools, such as those discussed in this Workshop in Brief, can help tease out more information about variability, it may become possible to use more data-driven variability factors and models instead of default values (e.g., a factor of 10) to account for the range of differences among humans.

FIGURE 1: A conceptual model to describe subpopulation and population dose-response relationships. See the NRC report, Science and Decisions.

Zeise described recent California determinations to illustrate the concept. When few toxicology data on individual variability are available, a risk-specific dose is divided by a default uncertainty factor to account for the variability expected across humans. Zeise discussed California’s recent determination that the default uncertainty factor of 3 to account for how the body responds to a substance (pharmacodynamics) and the uncertainty factor of 3 to account for how a substance moves into, through, and out of the body (pharmacokinetics) may both be too small. Based on an analysis of data, particularly in the young, the pharmacokinetic default was increased to 10. For pharmacodynamics, the uncertainty factor may be increased to 10 if specific information suggests sensitive subpopulations.
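The arithmetic behind these determinations is simple: the overall intraspecies factor is the product of a pharmacokinetic and a pharmacodynamic component, and the dose being adjusted (the risk-specific dose in the text; the value below is hypothetical) is divided by that product. The sketch assumes the conventional reading of “3” as a rounded half-log (√10 ≈ 3.16), which is how a 3 × 3 split recovers the overall default of 10.

```python
import math

HALF_LOG = math.sqrt(10)  # the conventional "3" is a rounded half-log, about 3.16


def adjusted_dose(starting_dose: float, uf_pk: float, uf_pd: float) -> float:
    """Divide a starting dose by pharmacokinetic x pharmacodynamic uncertainty factors."""
    return starting_dose / (uf_pk * uf_pd)


starting_dose = 1.0  # hypothetical dose, mg/kg-day

scenarios = {
    "former defaults (3 x 3, about 10)": (HALF_LOG, HALF_LOG),
    "PK default raised to 10 (10 x 3, about 30)": (10.0, HALF_LOG),
    "PD also raised for sensitive subpopulations (10 x 10)": (10.0, 10.0),
}
for label, (uf_pk, uf_pd) in scenarios.items():
    print(f"{label}: {adjusted_dose(starting_dose, uf_pk, uf_pd):.4f} mg/kg-day")
```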

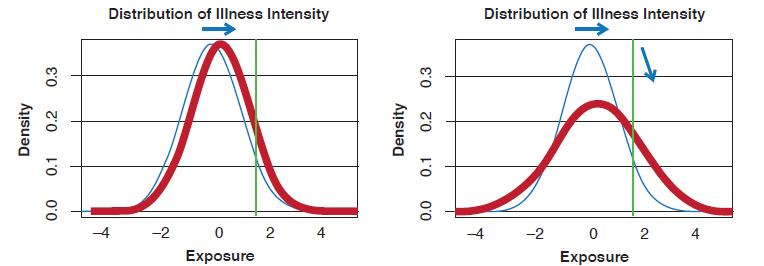

One way to conceptually illustrate a population’s baseline distribution of disease and how it shifts after a change in exposure is to construct population distribution curves. Important differences in individuals’ susceptibility can be found in the “tails” at both ends, where a small number of people within the general population exhibit unusually small or large responses for a given dose. Sensitive individuals can also belong to distinctly different subpopulations. The curves illustrating the impact of changes in exposure typically assume that the resulting shifts in response occur without a change in the shape of the distribution, explained Joel Schwartz7 of the Harvard School of Public Health. But when there is interindividual variability in the response, he said, the shape of the distribution itself can change (see Figure 2).

FIGURE 2: Schwartz showed how an exposure change can result in a shift in response or a change in the shape of the response distribution.

_________________

1 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

2 NRC (National Research Council). 2000. Copper in Drinking Water. Washington, DC: National Academy Press.

3 NRC. 2009. Science and Decisions: Advancing Risk Assessment. Washington, DC: The National Academies Press.

4 NRC. 2011. Review of the Environmental Protection Agency’s Draft IRIS Assessment of Formaldehyde. Washington, DC: The National Academies Press.

5 NRC. 2014. Critical Aspects of EPA’s IRIS Assessment of Inorganic Arsenic: Interim Report. Washington, DC: The National Academies Press.

6 NRC. 2014. Review of EPA’s Integrated Risk Information System (IRIS) Process. Washington, DC: The National Academies Press.
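Schwartz’s distinction between a shift and a change in shape can be sketched with a small simulation. The code below is a hypothetical illustration, not Schwartz’s model: each simulated person has a baseline value of some health measure, and an exposure change adds `delta` multiplied by a person-specific sensitivity. With identical sensitivities the whole distribution simply shifts; with variable sensitivities its spread widens, and the upper tail grows faster than the mean shift alone would suggest. All parameter values and the cut point are invented.

```python
import random
import statistics


def simulate(n, baseline_mean, baseline_sd, delta, sensitivity_sd, seed=1):
    """Return (before, after) responses for n simulated individuals.

    Each individual's change is delta * beta, where beta ~ Normal(1, sensitivity_sd).
    sensitivity_sd = 0 means everyone responds identically (a pure shift).
    """
    rng = random.Random(seed)
    before, after = [], []
    for _ in range(n):
        baseline = rng.gauss(baseline_mean, baseline_sd)
        beta = rng.gauss(1.0, sensitivity_sd)  # person-specific sensitivity
        before.append(baseline)
        after.append(baseline + beta * delta)
    return before, after


def fraction_above(values, threshold):
    """Share of values exceeding a cut point."""
    return sum(v > threshold for v in values) / len(values)


# Hypothetical parameters: baseline measure 100 +/- 10, exposure change adds 5 on average
THRESHOLD = 125  # hypothetical cut point for an adverse outcome
for label, sens_sd in (("pure shift", 0.0), ("variable sensitivity", 1.0)):
    before, after = simulate(20_000, 100, 10, 5, sens_sd)
    print(f"{label}: sd {statistics.stdev(before):.2f} -> {statistics.stdev(after):.2f}, "
          f"fraction above {THRESHOLD}: {fraction_above(before, THRESHOLD):.4f} -> "
          f"{fraction_above(after, THRESHOLD):.4f}")
```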

INCORPORATING INTERINDIVIDUAL VARIABILITY INTO RISK DECISIONS

In making decisions about chemical regulations, including how to protect sensitive populations, the legal statutes that guide decision makers are very important, stressed John Vandenberg, National Program Director for the U.S. Environmental Protection Agency (EPA) Human Health Risk Assessment Program. Some statutes are more specific than others about identifying populations at increased risk, and economic factors may play a role in how standards are implemented. As an example, Vandenberg explained how these factors play out in the National Ambient Air Quality Standards (NAAQS) for ozone, for which more than 2,800 epidemiology, toxicology, and controlled human exposure studies are available. The statutory language requires that the NAAQS provide an adequate margin of safety to protect the health of the public, including both the population as a whole and groups potentially at increased risk. To fulfill this mandate, since 2009 EPA has focused on determining which individual- and population-level differences result in increased risk of air pollutant–related health effects.

The most severe effects of ozone exposure, hospital visits and mortality, occur in a small percentage of the population. A somewhat larger group experiences reduced physical performance and increased medication use, and many more people experience low-level subclinical effects. In recent years, Vandenberg said, data have become available showing that some individuals respond differently to ozone. Approximately 15% of the “normal” population is more susceptible within the range of environmental exposures to ozone, and another 15% has what he called “cast iron lungs.” Vandenberg pointed out that, coincidentally, EPA was announcing a lower NAAQS for ground-level ozone (70 parts per billion) on the second day of the workshop, based on new studies, including those that considered individual variability.

Decision makers outside EPA were also asked to describe how interindividual variability affects decision making. Interindividual variability is important to the pharmaceutical industry because of the high variability in how people respond to drugs, in terms of both safety and efficacy, said John Cook, senior director of investigative toxicology at Pfizer. Cook described the industry’s precision medicine approach, which focuses on genetic and non-genetic biomarkers to enhance clinical trials by identifying subpopulations that respond differently to drugs, whether positively or negatively.

Terry Gordon is with the American Conference of Governmental Industrial Hygienists (ACGIH) Threshold Limit Values Committee, which generates threshold limit values (TLVs), guidelines used to assist in the control of health hazards. The ACGIH’s goal is to protect “nearly all” workers, Gordon said. ACGIH protocols provide little guidance for using uncertainty factors to account for interindividual variability in developing occupational exposure limits. The factors that are used are based on “common sense scientific judgment” and are intended to offset the “healthy worker effect,” which recognizes that the least healthy individuals in a population are generally not employed in the workplace and that people with sensitivities to materials used in a workplace are likely to leave. While reproductive effects are considered, factors related to age and gender are not.

_________________

7 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

TLVs are preferably based on human data (e.g., studies of occupational exposures), but animal models are sometimes used when occupational data are lacking. Although richer data from more genetically diverse animals may provide additional information for limit setting, these newer animal models have not yet been used in setting TLVs, and in vitro data are not used for that purpose.

EPIGENETICS RESEARCH RESOURCES

One source of variation emphasized by Birnbaum is differences in epigenetics; epigenetic changes can lead to heritable variability. Epigenetic changes have been implicated in a wide variety of human diseases and have been associated with environmental toxicants. John Satterlee of the National Institute on Drug Abuse described a resource for studying epigenetic variation: the data portal for the International Human Epigenome Consortium, which includes data from the National Institutes of Health’s (NIH’s) Roadmap Epigenomics Program and other international programs. The Roadmap Epigenomics Program, which aims to map the epigenome, provides data on “normal” human cells and tissues from both adults and fetuses and on how they vary epigenetically. The database may eventually be a resource for studying epigenetic variation among individuals, but Satterlee stressed that a great deal of work remains to be done on how epigenetic modifications vary from person to person.

IN VITRO METHODS

Several new developments for using in vitro tools to characterize interindividual variability were discussed at the workshop, including approaches involving human cell lines and methods for integrating in vitro and in silico data.

Fred Wright of North Carolina State University told the audience about the 1000 Genomes High-Throughput Screening Study conducted in collaboration with Ivan Rusyn8 of Texas A&M University (TAMU). Their work involves in vitro toxicity studies, but instead of using genetically identical cells, they use a set of 1,100 immortalized human lymphoblast cell lines, each of which is genetically distinct. The lines represent nine populations from the Americas, Asia, and Europe, with around 2 million single nucleotide polymorphisms (SNPs). Each cell line is exposed to a range of chemical doses to generate dose–response relationships. Combined with the genetics of each cell line, the approach allows the identification of genes and pathways that contribute to variations in sensitivity among the different cells.

For example, Wright and colleagues described a cytotoxicity study that exposed the 1,100+ cell lines to 180 compounds at 8 concentrations, producing approximately 2.4 million data points. Their analysis showed that the ancestral origin of the cells did not have a large impact on response. There was some clustering by ancestry, but the results showed much more variation within ancestral subpopulations than between them.9
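The “within versus between” comparison can be made concrete with a standard one-way variance partition. The sketch below splits the total sum of squares of a sensitivity measure (for example, log10 potency per cell line) into between-group and within-group parts; the group labels and values are invented for illustration and are not data from the 1000 Genomes study.

```python
from statistics import fmean


def partition_variance(groups):
    """Split the total sum of squares into between-group and within-group components.

    groups: mapping of group label -> list of values (e.g., log10 potency per cell line).
    """
    all_values = [v for values in groups.values() for v in values]
    grand_mean = fmean(all_values)
    means = {label: fmean(values) for label, values in groups.items()}
    ss_between = sum(len(groups[label]) * (m - grand_mean) ** 2 for label, m in means.items())
    ss_within = sum((v - means[label]) ** 2
                    for label, values in groups.items() for v in values)
    return ss_between, ss_within


# Hypothetical log10 potencies for cell lines from three ancestral groups
groups = {
    "group_A": [-1.2, -0.4, 0.3, 0.9, 1.5],
    "group_B": [-1.0, -0.1, 0.5, 1.1, 1.8],
    "group_C": [-1.4, -0.6, 0.0, 0.7, 1.2],
}
between, within = partition_variance(groups)
print(f"share of variation between groups: {between / (between + within):.1%}")
```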

The researchers went on to identify what Wright termed toxicodynamic variability factors (TVFs) for the tested chemicals. They found that the median TVF was very close to the frequently used toxicodynamic uncertainty factor of 3. However, many chemicals exhibited much greater variability, which suggests that for those chemicals a default 3-fold factor may not adequately protect sensitive individuals. Importantly, this type of study enables forward-looking analyses for deriving a chemical-specific TVF in such cases. Work by Weihsueh Chiu at TAMU suggests that, for most chemicals, the TVF for a new chemical can be estimated by testing it on about 50 different cell lines.
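A TVF can be expressed as the ratio of the median potency across individuals to the potency in a sensitive individual at a chosen low percentile. The sketch below assumes the 1st percentile and log-normally distributed potencies; both choices, the function name, and the simulated values are illustrative assumptions, not the exact definitions or statistical methods used by Wright, Chiu, and colleagues. It also mimics the idea of estimating a chemical-specific TVF from a sample of about 50 cell lines.

```python
import math
import random
from statistics import NormalDist, stdev


def tvf_from_potencies(potencies, percentile=0.01):
    """Estimate a toxicodynamic variability factor from per-cell-line potencies.

    Assumes potencies (e.g., EC10 values) are log-normally distributed, so that
    median / (lower-percentile potency) = 10 ** (z * sigma), where sigma is the
    standard deviation of log10 potency and z the standard normal quantile.
    """
    log_potency = [math.log10(p) for p in potencies]
    sigma = stdev(log_potency)
    z = NormalDist().inv_cdf(1 - percentile)  # about 2.33 for the 1st percentile
    return 10 ** (z * sigma)


# Hypothetical chemical whose log10 potency varies with sd 0.25 across the population
rng = random.Random(42)
TRUE_SIGMA = 0.25
true_tvf = 10 ** (NormalDist().inv_cdf(0.99) * TRUE_SIGMA)

# "Test" the chemical on 50 randomly drawn cell lines (hypothetical median EC10 of 30 uM)
sample = [10 ** rng.gauss(math.log10(30.0), TRUE_SIGMA) for _ in range(50)]
print(f"true TVF {true_tvf:.2f}, estimate from 50 lines {tvf_from_potencies(sample):.2f}")
```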

Wright continued by explaining how heritability and mapping analyses can be conducted with the cell lines to look for genetic associations that may underlie sensitivity. More than half of the studied chemicals had evidence of high heritability of sensitivity. Overall, there was a general enrichment for polymorphisms in or near cellular membrane transport genes.
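At its simplest, a mapping analysis asks whether cell lines carrying more copies of a particular allele tend to be more or less sensitive. The sketch below runs a single-marker scan that correlates allele dosage (0, 1, or 2 copies) with a sensitivity phenotype; the SNP names and values are hypothetical. Real analyses of this kind typically use models that account for relatedness and population structure and correct for testing millions of SNPs, none of which is attempted here.

```python
from statistics import fmean


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length, non-constant sequences."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def association_scan(genotypes, phenotype):
    """Return (SNP, r^2) pairs ranked by r^2 between allele dosage and phenotype."""
    results = {snp: pearson_r(dosage, phenotype) ** 2 for snp, dosage in genotypes.items()}
    return sorted(results.items(), key=lambda item: item[1], reverse=True)


# Hypothetical data for 8 cell lines: log10 EC10 (lower = more sensitive)
phenotype = [-0.9, -0.7, -0.8, -0.1, 0.0, 0.1, 0.6, 0.5]
genotypes = {
    "snp_transporter": [2, 2, 2, 1, 1, 1, 0, 0],  # hypothetical SNP near a transporter gene
    "snp_other": [0, 1, 2, 0, 1, 2, 0, 1],        # hypothetical unrelated SNP
}
for snp, r2 in association_scan(genotypes, phenotype):
    print(f"{snp}: r^2 = {r2:.2f}")
```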

Although the results Wright’s group achieved are encouraging, Anna Lowit of EPA’s Office of Pesticide Programs pointed out that the in vitro testing assessed cytotoxicity, an endpoint that applies to a relatively narrow chemical space. Wright acknowledged other limitations of the in vitro environment, such as the focus on lymphoblasts, which are not involved in activities such as metabolism, and the use of immortalized cell lines. There are also many sources of technical variation, he acknowledged. The groups working with these cell lines are now exploring exposure to mixtures. Wright also pointed out that the new tools have tremendous potential to aid in hypothesis generation and potentially to inform risk assessment, particularly for highly exposed populations.

_________________

8 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

9 Abdo, N, et al. 2015. Population-based in vitro hazard and concentration–response assessment of chemicals: The 1000 Genomes High-Throughput Screening Study. Environmental Health Perspectives 123(5):458–466.

Barbara Wetmore of the Hamner Institutes for Health Sciences described her group’s efforts to relate in vitro testing concentrations to in vivo doses and real-world exposures. Her group uses reverse dosimetry with in vitro/in vivo extrapolation (IVIVE) modeling to study the impact of key differences among people, such as genetic or functional differences in genes important in chemical or drug metabolism. Reverse dosimetry is the estimation of the dose levels required to achieve particular plasma concentrations of a chemical. Wetmore’s team creates what she called “virtual populations,” which are used to estimate population-based oral equivalent doses: the daily oral doses required to achieve those plasma concentrations.
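Under the common simplifying assumption of linear (dose-proportional) kinetics, reverse dosimetry reduces to a ratio: if a dose of 1 mg/kg/day produces a steady-state plasma concentration Css, the oral equivalent dose (OED) for an in vitro active concentration is that concentration divided by Css. The sketch below applies this to a hypothetical virtual population; all values are invented, and the choice of percentile varies among published approaches.

```python
from statistics import median, quantiles


def oral_equivalent_dose(active_conc_uM, css_uM_at_1_mg_per_kg_day):
    """Daily oral dose (mg/kg/day) expected to yield a steady-state plasma
    concentration equal to active_conc_uM, assuming linear kinetics."""
    return active_conc_uM / css_uM_at_1_mg_per_kg_day


# Hypothetical virtual population: steady-state plasma concentration (uM)
# reached by each simulated person at a dose of 1 mg/kg/day
css_population = [0.8, 1.1, 1.3, 1.6, 2.0, 2.4, 3.1, 4.0, 5.5, 7.9]
ac50_uM = 12.0  # hypothetical in vitro active concentration

# People who clear the chemical slowly reach higher Css, so they reach the
# active concentration at a lower dose: the 95th percentile of Css gives a
# lower, more protective OED than the median.
css_median = median(css_population)
css_p95 = quantiles(css_population, n=20, method="inclusive")[-1]

print(f"OED for a median person:    {oral_equivalent_dose(ac50_uM, css_median):.2f} mg/kg/day")
print(f"OED for 95th-percentile Css: {oral_equivalent_dose(ac50_uM, css_p95):.2f} mg/kg/day")
```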

Wetmore noted that her group’s earlier work demonstrated the approach’s ability to incorporate pharmacokinetic parameters such as bioavailability, hepatic and renal clearance, and plasma protein binding, all of which affect how much of a chemical ends up circulating in the blood. More recently, Wetmore and her colleagues began to investigate interindividual variability in a way designed to include neonates, the elderly, the sick, and different ethnic groups. This is important because clearance differs across life stages, and individuals with diseases and members of different ethnic groups can also have different clearances. Wetmore said the Hamner group is currently using what she termed “a slightly more complex” IVIVE model, built with the SimCyp computational simulation and modeling tool (http://www.certara.com/software/pbpk-modeling/simcyppbpk), that includes variation in metabolizing enzymes: cytochrome P450s (CYPs) and uridine 5’-diphospho-glucuronosyltransferases (UGTs).
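The way clearance and protein binding enter these calculations can be sketched with a simple steady-state model often used in high-throughput IVIVE: renal clearance as glomerular filtration of the unbound fraction, and hepatic clearance from the well-stirred liver model. The sketch below is a deliberately simplified stand-in for a full population PBPK tool such as SimCyp, not a description of it; the physiological values are rough adult approximations, the chemical parameters are hypothetical, and enzyme variability is represented crudely by a log-normal multiplier on intrinsic clearance.

```python
import random
from statistics import median

Q_LIVER = 1.3  # hepatic blood flow, L/h per kg body weight (approximate adult value)
GFR = 0.107    # glomerular filtration rate, L/h per kg (approximate adult value)


def css_uM(dose_mg_per_kg_day, fub, clint_L_per_h_per_kg, mw_g_per_mol,
           q_liver=Q_LIVER, gfr=GFR):
    """Steady-state plasma concentration (uM) for a constant daily oral dose.

    Assumes complete absorption, renal clearance = GFR * fub, and hepatic
    clearance from the well-stirred model (blood:plasma ratio taken as 1).
    """
    dose_rate = dose_mg_per_kg_day / 24.0  # mg/kg/h
    cl_renal = gfr * fub
    cl_hepatic = (q_liver * fub * clint_L_per_h_per_kg
                  / (q_liver + fub * clint_L_per_h_per_kg))
    css_mg_per_L = dose_rate / (cl_renal + cl_hepatic)
    return css_mg_per_L / mw_g_per_mol * 1000.0  # mg/L -> umol/L


def virtual_population_css(n, fub, clint_typical, mw, enzyme_sd_log=0.5, seed=0):
    """Css at 1 mg/kg/day for n simulated people whose intrinsic clearance varies
    log-normally around a typical value (a hypothetical stand-in for
    differences in CYP/UGT abundance)."""
    rng = random.Random(seed)
    return [css_uM(1.0, fub, clint_typical * rng.lognormvariate(0.0, enzyme_sd_log), mw)
            for _ in range(n)]


# Hypothetical chemical: 10% unbound in plasma, typical CLint 5 L/h/kg, MW 300 g/mol
population = virtual_population_css(1000, fub=0.1, clint_typical=5.0, mw=300.0)
print(f"median Css {median(population):.3f} uM, range "
      f"{min(population):.3f}-{max(population):.3f} uM")
```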

The Hamner researchers recently combined high-throughput pharmacokinetic data generated using the IVIVE model with data from hundreds of in vitro assays from EPA’s ToxCast program and the larger interagency Tox21 program. They were able to translate in vitro bioactive concentrations into predicted external doses. These values can be compared against anticipated exposures to derive a safety margin. The current approach also allows the researchers to incorporate differences in the genetic forms and abundances of the CYP and UGT enzymes to explore how different groups may metabolize the chemicals differently.
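The comparison described here is often summarized as the ratio of the oral equivalent dose to the estimated exposure (sometimes called a bioactivity-to-exposure ratio); the smaller the ratio, the smaller the margin. The sketch below ranks hypothetical chemicals this way; all names and values are invented, and pairing a sensitive-percentile OED with an upper-bound exposure estimate is one conservative choice among several.

```python
def margin(oed_mg_per_kg_day, exposure_mg_per_kg_day):
    """Ratio of the oral equivalent dose to the anticipated exposure."""
    return oed_mg_per_kg_day / exposure_mg_per_kg_day


# Hypothetical chemicals: (OED for a sensitive-percentile person, upper-bound exposure),
# both in mg/kg/day
chemicals = {
    "chemical_A": (1.8, 0.0004),
    "chemical_B": (0.05, 0.002),
    "chemical_C": (12.0, 0.00001),
}

# Smallest margins first: these would be the highest priority for further study
ranked = sorted(chemicals, key=lambda name: margin(*chemicals[name]))
for name in ranked:
    print(f"{name}: margin = {margin(*chemicals[name]):,.0f}")
```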

Because few in vivo data are available to validate IVIVE results for the chemicals she focuses on, Wetmore said her group uses information about in vivo concentrations of pharmaceuticals to evaluate the models’ predictions. Although only a small amount of in vivo data was available for comparison, the Hamner researchers found that their in vitro data–based predictions for carbaryl, haloperidol, and lovastatin were within 2- to 5-fold of the in vivo data. Wetmore said the researchers were able to calculate chemical-specific adjustment factors (ranging from 3.5 to 11.5) for nine chemicals for individuals of different ages and life stages and for at-risk groups.
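Two simple calculations underlie statements like these: a fold difference between predicted and observed concentrations, and an adjustment factor comparing a potentially sensitive group with a reference group. The sketch below uses one common definition of a pharmacokinetic chemical-specific adjustment factor, following World Health Organization (IPCS) guidance: the ratio of an upper percentile of the internal dose in the group of interest to the median in the reference population. Whether the Hamner group used exactly this definition is an assumption, and the Css values are hypothetical.

```python
from statistics import median, quantiles


def fold_difference(predicted, observed):
    """Symmetric fold difference (always >= 1) between prediction and observation."""
    return max(predicted, observed) / min(predicted, observed)


def adjustment_factor(css_group, css_reference, percentile=95):
    """Upper-percentile Css in a group divided by median Css in a reference population."""
    upper = quantiles(css_group, n=100, method="inclusive")[percentile - 1]
    return upper / median(css_reference)


# Hypothetical Css values (uM at 1 mg/kg/day)
healthy_adults = [1.0, 1.2, 1.3, 1.5, 1.6, 1.8, 2.0, 2.3]
neonates = [2.5, 3.0, 3.8, 4.4, 5.1, 6.0, 7.2, 9.0]

print(f"fold difference: {fold_difference(2.0, 7.5):.2f}")
print(f"adjustment factor (neonates vs healthy adults): "
      f"{adjustment_factor(neonates, healthy_adults):.1f}")
```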

DECISION MAKERS’ THINKING ON USING THESE IN VITRO APPROACHES

Decision makers from several different contexts were asked to comment on the in vitro and modeling approaches described. Lowit observed that Wetmore’s presentation on how to use in vitro assays with other evidence to assess interindividual human variability was “very exciting.” This approach may help regulators understand the information in the tails of disease incidence curves. EPA’s Office of Pesticide Programs and Office of Research and Development, she said, are considering strategies similar to the PBPK models Wetmore described to incorporate more data related to interindividual variability. The assumptions that go into the model are important to consider, Lowit pointed out. For example, IVIVE algorithms assume a steady-state chemical distribution, a state that is not reached with a chemical like carbaryl. Wetmore said that Cmax, the maximum concentration achieved in blood, can be incorporated into the kind of testing she is doing to work with chemicals like carbaryl.

Other decision makers who reacted to the in vitro approaches included Gordon and Vandenberg. Both indicated that efforts to connect cell-culture concentrations to human doses are not yet advanced enough to inform decisions about dose. However, Vandenberg acknowledged a role for in vitro data in understanding variability beyond default uncertainty factors. Cook noted that while Pfizer looks at variations (i.e., SNPs) in the genes for proteins with which drugs interact, the company’s use of the in vitro tools described at the workshop is very limited.

Gary Ginsberg, a toxicologist at the Connecticut Department of Public Health, questioned whether it is possible to perturb the system to collect more information that might be indicative of variability and of how it may be affected in real-life situations. He asked whether variability is being quenched by growing lymphoblasts under tightly controlled conditions. He also called for understanding variability by working upstream from key adverse events. Some workshop attendees mentioned other areas where in vitro systems add value or could be improved to provide more realistic data. Helmut Zarbl10 of the Robert Wood Johnson Medical School asked to what extent we are underestimating genetic variability because the epigenome is reprogrammed when cells are cultured.

IN VIVO METHODS

Researchers discussed using laboratory mouse populations bred to include interindividual variability. Human variability is a result of our outbred nature, pointed out David Threadgill, director of TAMU’s Institute for Genome Sciences and Society. Historically, work with traditional animal models such as the B6C3F1 mouse eliminated a great deal of variability in order to gain statistical power to detect small effects.

The Collaborative Cross (CC) project that Threadgill discussed grew out of an effort begun 15 years ago “to reinvent the mouse as an experimental population,” prompted by the limitations that the common ancestry of laboratory mice imposes on genetic studies.

The CC project began with eight strains of mice chosen to capture much of the variability present in laboratory strains. The population now includes about 150 lines, and Threadgill says it is as diverse as humans. It includes about 40 million polymorphisms, which he estimates is about twice the number in humans. Approximately 95% of all polymorphisms present in common laboratory strains of mice are captured in the CC mice. Because of the way the CC population was generated, the genetic variation it contains is balanced: all founder strains are roughly equally represented, and no allele is rare, which makes it easier for researchers to detect allele functions. Threadgill described the low frequency of many alleles in other populations as a major limiting factor that the CC project attempts to overcome.

Threadgill also presented results from diet studies. There are a lot of data on the role of human diet in diseases and disease processes, but little is known about what is actually happening in experimental animals. Some of the group’s more recent work involved feeding mice a variety of diets. They were surprised to find that every strain responded uniquely to these diets. “When you look at the population-based response to the different diets, you really don’t get the full picture,” Threadgill said. “We’ve seen this for almost every phenotype we’ve looked at. If you average the four strains, it looks like what you would expect to see from human studies. But when you break it up into individual strains, you get very different results.”

Threadgill also used the CC project mice to look at the influence of the microbiome on interindividual variability. Research has documented that environmental changes, such as changes in caging, can also alter the microbiome.11 More recent studies identified how the microbiome affects mice’s response to dietary fiber.12 “Using the mouse system, we found that fiber [only] has a role when it is matched with a specific type of bacteria that can metabolize the type of fiber that the animals are consuming,” he said, adding that every strain is likely to have its own unique microbiome.

Threadgill and Rusyn said they are currently investigating ways to deploy the CC project mouse populations in standard toxicology bioassays (e.g., 14- or 90-day studies) so that these include a more genetically diverse set of mice than has traditionally been studied. Another study is under way to compare human cell–based studies with mouse cell–based studies derived from the CC project, Threadgill said. The goal is to compare the variability seen in vitro with the variability seen in vivo in the same populations.

The Diversity Outbred (DO) mice described by Michael DeVito, acting chief of the National Toxicology Program’s (NTP’s) Laboratory Division, are based on the CC project mouse populations. The DO mice were developed as an outbred population to complement the inbred CC project population.

_________________

10 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

11 Deloris, AA, et al. 2006. Quantitative PCR assays for mouse enteric flora reveal strain-dependent differences in composition that are influenced by the microenvironment. Mammalian Genome 17(11):1093–104.

12 Donohoe, RD, et al. 2014. A Gnotobiotic Mouse Model Demonstrates that Dietary Fiber Protects Against Colorectal Tumorigenesis in a Microbiota- and Butyrate-Dependent Manner. Cancer Discovery 4:1387–1397.

When the CC project mouse was designed as a resource for high-resolution mapping, its developers were not thinking about toxicology, DeVito said. “The advantage of the DO is that genetic variation is uniformly distributed with multiple allelic variants present,” he said. Each DO animal is genetically unique (as is each CC line), and both populations include approximately 40 million SNPs.

The DO model has been used at the NTP as part of an effort to better reflect population variability following exposure to chemicals in commerce. NTP researchers believe that the DO mice will help scientists better characterize or perhaps even identify some toxicities in humans that were not predicted by traditional rodent models, DeVito said. They also anticipate that the DO mice will be useful in mode of action assessment by identifying the genetic basis for a response.

The DO model has been available only since 2012, and few studies have yet been published. Two published toxicology studies using the DO model focus on benzene13 and green tea.14 The benzene study was conducted by a group including NTP researchers, led by John E. French, who used the DO mice to replicate a study originally conducted with B6C3F1 mice. DeVito said that the overall results were similar, but the DO mice proved to be 10 times more sensitive than the B6C3F1 mice. The researchers were unable to clearly identify any polymorphisms that would explain the increased sensitivity, but they did find a polymorphism that resulted in decreased sensitivity to benzene-induced chromosomal damage.

The second DO mouse model study focused on epigallocatechin gallate, a compound in green tea that can cause liver injury.15 The research team, led by Alison Harrill of the University of Arkansas for Medical Sciences, determined that about one-third of the studied mice were resistant, while another third were very sensitive to ingestion of the compound. Her analysis of the sensitive animals identified more than 40 candidate genes. By looking through human data available from the Drug Induced Liver Injury Network (http://www.dilin.org), the researchers were able to pinpoint three polymorphisms consistent between people and rodents. One of these, in the mitofusin 2 gene (Mfn2), which encodes an essential component of the mitochondrial fusion machinery, may be involved in the liver injury induced by epigallocatechin gallate.

Another study exploring the value of the DO mice was prompted by the recognition that B6C3F1 mice are poorly suited to detecting metabolic syndrome or diabetes. NTP investigated the effect of a high-fat diet on the DO mice and documented significant variability in weight gain.

DeVito also delved into practical questions about the DO mice’s use and associated costs. How many animals are needed for genetic mapping studies using the DO mice? Recent work at The Jackson Laboratory determined that genetic mapping studies with as few as 200 DO mice can detect genetic loci with large effects, but loci that account for less than 5% of trait variance may require up to 1,000 animals.16 Because such studies are potentially very expensive, DeVito investigated what study size would provide more information than is available from traditional approaches. He asked how much variability 75 DO mice would exhibit in comparison with B6C3F1 mice. Whereas endpoints in B6C3F1 mice vary by less than 5% across animals, those in DO mice vary by 10% to 50–60%, DeVito observed. His calculation suggested that 20–30 DO mice per treatment group would be needed to produce a study with the same power to estimate population variability in response as 10 mice per treatment group in a typical 90-day subchronic study. Although DO mouse studies may cost more than conventional studies because they require roughly three times as many animals, the DO or CC project mice can provide needed insights into population variability. Without models like the DO and CC mice, we are left with using the default value of 10 for human population variability, which has a cost in and of itself, he stressed.
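As a rough, generic illustration of why loci with small effects require many more animals, the sketch below uses a standard single-marker approximation: a locus explaining a fraction r² of trait variance gives a squared test statistic of about n·r²/(1 − r²). It ignores the eight-founder haplotype models and kinship corrections actually used for DO mice, and the significance threshold is a hypothetical placeholder, so its numbers are not those of the Jackson Laboratory work.

```python
import math
from statistics import NormalDist


def animals_needed(variance_explained, alpha=1e-5, power=0.8):
    """Approximate number of animals for a single-marker (1 df) test to detect a
    locus explaining `variance_explained` of trait variance.

    Uses the approximation that the squared test statistic is about
    n * r^2 / (1 - r^2); alpha is a hypothetical genome-wide threshold.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    r2 = variance_explained
    return math.ceil((z_alpha + z_power) ** 2 * (1 - r2) / r2)


for r2 in (0.20, 0.10, 0.05, 0.02):
    print(f"locus explaining {r2:.0%} of variance: about {animals_needed(r2)} animals")
```

Whatever threshold is chosen, the required sample size in this approximation scales with (1 − r²)/r², so a locus explaining 5% of variance needs about 4.75 times as many animals as one explaining 20%.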

In light of the large group sizes required for DO mouse studies, the agents tested should ideally have a known or anticipated effect of concern and some expectation of population variability, DeVito said. Ideal endpoints for testing with the DO mice would involve continuous variables, and the toxicodynamic time course should be fairly stable, because with a highly dynamic response, small differences in timing may produce false-negative “nonresponders.”

A model is only as good as our understanding of it, DeVito stressed. NTP researchers have a good understanding of the DO mice’s genetics, but their phenotypic variability is not as well known. The DO mice’s sperm counts vary widely, which limits their utility for male reproductive studies. As more research is conducted with females, other areas less suitable for study with the DO mice may come to light. Another challenge is deciding how well variability needs to be characterized. Is it necessary for researchers to “prove” they found an SNP, DeVito asked, or is there a more pragmatic approach to characterizing variability?

_________________

13 French, JE, et al. 2015. Diversity Outbred Mice Identify Population-Based Exposure Thresholds and Genetic Factors that Influence Benzene-Induced Genotoxicity. Environmental Health Perspectives 123(3):237–245.

14 Church, RJ, et al. 2015. Sensitivity to hepatotoxicity due to epigallocatechin gallate is affected by genetic background in diversity outbred mice. Food and Chemical Toxicology 76:19–26.

15 Ibid.

16 Gatti, DM, et al. 2014. Quantitative Trait Locus Mapping Methods for Diversity Outbred Mice. G3 4(9):1623–1633.

Other participants commented on the value of having diversity and variation in animal studies. Lowit commended the new mouse models’ potential to reveal effects previously unseen in animals. Risk assessors struggle with epidemiology studies that show effects in humans that are not seen in traditional animal models, including Organisation for Economic Co-operation and Development guideline studies, she said. Gordon observed that “it looks like the animal data is telling us that there might be a lot more inter-strain or interspecies variability than we thought.” Cook agreed that the new tools can help researchers home in on sensitive populations and evaluate the dose–response relationship in those subpopulations.

Ginsberg saw potential in observing variability with the more genetically diverse animal systems without necessarily conducting detailed research to uncover the mechanisms. However, such data may not reveal much about human variability unless the responsible SNP is identified and examined epidemiologically.

Birnbaum of NIEHS, Vandenberg of EPA, and several others at the workshop said that the DO mouse appears promising at a cost that makes its use conceivable. Will recognizing that some portions of the population are hypersensitive ever lead to a regulatory paradigm that goes beyond dividing animal study results by safety factors? According to several workshop participants, the existence of the new tools is inspiring talk of changing how risk is assessed. Vandenberg said the new types of data already have application in some contexts. Lowit pointed out that such a change would be a fundamental shift in the science that underlies risk assessment decisions; she stressed that she was not speaking for her agency and that each part of EPA operates under a different federal statute with differing requirements.

Several participants noted the potential value of “ground-truthing” the DO and CC project models by comparing their findings with the results of human studies. Lowit said she would like to see better characterization of the DO mouse model, suggesting that this could help efforts to link the variability seen in in vivo models to in vitro outcomes. Threadgill described a study under way that compares human cell–based studies with studies using cells derived from CC project mice. The goal is to compare the variability seen in vitro with the variability seen in vivo in the same populations.

In addition, Rusyn’s group is using genetically diverse mouse strains to provide what he termed “useful quantitative estimates of toxicokinetic population variability.”17 They used mouse panel data on toxicokinetics of trichloroethylene (TCE) for population PBPK modeling. The mouse population–derived variability estimates for TCE metabolism closely matched population variability estimates previously derived from human toxicokinetic studies with TCE. “Comparing it to the parameters you can get in humans, they are right on top of each other,” Rusyn said.
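Rusyn’s comparison rests on summarizing how much a metabolic parameter varies across a population. One simple way to express such variability, assumed here rather than taken from the TCE study, is to treat per-strain parameter estimates as lognormally distributed and report the geometric standard deviation (GSD) and the ratio of the 95th percentile to the median. The sketch below is not a population PBPK model; the function name and the clearance values are hypothetical.

```python
import math
import statistics


def lognormal_variability(values, upper_z=1.645):
    """Summarize variability in a positive parameter, assuming it is lognormal.

    Returns the geometric mean, the geometric standard deviation (GSD), and the
    ratio of an upper percentile (default z = 1.645, i.e., the 95th) to the median.
    """
    logs = [math.log(v) for v in values]
    mu = statistics.mean(logs)
    sigma = statistics.stdev(logs)  # sample SD on the log scale
    geometric_mean = math.exp(mu)   # also the median of a lognormal distribution
    gsd = math.exp(sigma)
    upper_to_median = math.exp(upper_z * sigma)
    return geometric_mean, gsd, upper_to_median


# Hypothetical per-strain estimates of a metabolic clearance parameter (arbitrary units).
strain_clearance = [12.0, 18.5, 25.1, 9.7, 31.2, 15.4]
gm, gsd, ratio = lognormal_variability(strain_clearance)
print(f"geometric mean={gm:.2f}, GSD={gsd:.2f}, P95/median={ratio:.2f}")
```

The upper-percentile-to-median ratio is the kind of number that could be set beside the default factor of 10 for human variability discussed at the workshop.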

Models such as the DO and CC may prove particularly helpful for “big ticket” chemicals where regulators and stakeholders worry about missing something and not having enough information, Rusyn said. Population-based experimental model systems are available now to address the uncertainty about the potential range of interindividual variability in adverse health effects on a chemical-by-chemical basis. Instead of using defaults, both human in vitro and mouse in vivo tools can be used, depending on the decision context and the regulatory needs, he said.

EPIDEMIOLOGY

The growing interest in characterizing interindividual variability has brought to light the value of some newer techniques in epidemiology, the study of human populations to understand the causes of disease. Schwartz surveyed a number of methods that can be used to address interindividual variability.

Quantile Regression as a Tool to Look for Heterogeneity in Response

Epidemiologists typically assess environmental risks by looking at mean changes in populations, such as the number of cases of cancer or the average reduction in lung function. This makes sense if researchers can assume that a large population is exposed and each individual’s risk is small, Schwartz said. However, “if there is a lot of heterogeneity in the population that’s exposed, then that may not be sufficient information to provide to decision makers in a risk assessment,” Schwartz cautioned. Some subpopulations may face risks high enough to warrant attention even when the overall risk is low.

_________________

17 Chiu, WA, et al. 2014. Physiologically based pharmacokinetic (PBPK) modeling of interstrain variability in trichloroethylene metabolism in the mouse. Environmental Health Perspectives 122(5):456–463.

Schwartz described how quantile regression can be used to estimate effects in situations where exposures change the shape of an outcome’s distribution. Instead of minimizing the sum of the squared errors, as ordinary regression does, the quantile approach minimizes a weighted sum of the absolute values of the errors: errors above the fitted line are weighted by the quantile of interest (for example, 0.9) and errors below it by one minus that quantile, so for the median the weights are equal. Rather than estimating the effect on the mean, it estimates the effect at each chosen quantile of the distribution.
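To make the contrast with mean-based regression concrete, here is a minimal sketch of quantile regression with a single 0/1 exposure indicator, using the standard check (pinball) loss. The data and function names are hypothetical. The brute-force search works only because a model with one binary covariate splits into one problem per group; real analyses would use a linear-programming solver from a statistics package.

```python
def check_loss(residuals, tau):
    """Summed check (pinball) loss: tau * r for r >= 0 and (tau - 1) * r for r < 0."""
    return sum(r * (tau - (1 if r < 0 else 0)) for r in residuals)


def fit_quantile(values, tau):
    """Constant that minimizes the check loss. The loss is convex and piecewise
    linear in the constant, so a minimizer is always one of the data points."""
    return min(values, key=lambda c: check_loss([v - c for v in values], tau))


def quantile_regression_binary(y_unexposed, y_exposed, tau):
    """Fit y = b0 + b1 * exposed at quantile tau.

    With one 0/1 covariate the model is saturated, so minimizing the total check
    loss splits into one problem per group: b0 is the unexposed tau-quantile and
    b1 is the exposed-minus-unexposed difference in tau-quantiles.
    """
    b0 = fit_quantile(y_unexposed, tau)
    b1 = fit_quantile(y_exposed, tau) - b0
    return b0, b1


# Hypothetical outcomes: exposure leaves the middle of the distribution alone
# but stretches its upper tail.
unexposed = list(range(10, 21))  # 10, 11, ..., 20
exposed = [10, 11, 12, 13, 14, 15, 16, 17, 18, 26, 30]

mean_shift = sum(exposed) / len(exposed) - sum(unexposed) / len(unexposed)
print(f"difference in means: {mean_shift:.2f}")
for tau in (0.5, 0.9):
    b0, b1 = quantile_regression_binary(unexposed, exposed, tau)
    print(f"tau={tau}: intercept={b0}, exposure effect={b1}")
```

With these numbers the exposure effect is 0 at the median but 7 units at the 90th percentile, while the difference in means is only about 1.5: the kind of tail shift a mean-based analysis would understate.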

The quantile approach was used in a study of exposure to ultrafine particles in a cohort of elderly men who may be more susceptible to air pollution because of their age.18 The analysis suggested shifts in methylation distributions associated with air pollution and identified two candidate genes related to coagulation. The results from Schwartz’s group linked interindividual variability in these genes with increased vulnerability to ultrafine particle exposure.

Quantile regression provides evidence of heterogeneity in response irrespective of what is producing it, Schwartz said. The quantile approach can identify susceptibility linked to factors that have not been measured or hypothesized.

Does This Factor Lead to Greater Susceptibility? Taking Advantage of Emerging Large Datasets to Identify Potential Factors That Lead to Greater Susceptibility

To determine whether a factor makes people more susceptible to a given exposure, statisticians test the significance of an “interaction term,” the product of two variables. For example, if one variable is pollution and the other is race, including their product in the model yields a separate slope relating pollution to the outcome for each racial group. Large datasets can provide sufficient statistical power to show whether or not there is an interaction. As the cost of genome-wide association studies and epigenome-wide association studies decreases, opportunities for replication and for causal modeling approaches will increase, Schwartz predicted.

If researchers have some idea of which pathways may be involved, using interaction terms to analyze those pathways can be statistically more powerful than genome-wide association/interaction studies, Schwartz said. Testing a limited number of pathways, instead of testing each gene individually for interaction, reduces the multiple-comparisons penalty. An example is a recent study that found that people with high expression of TLR2 (toll-like receptor 2) are more susceptible to the cardiovascular effects of exposure to fine particles.
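The interaction-term idea can be shown in a few lines. In a linear model y = b0 + b1*x + b2*g + b3*(x*g), with x a pollution measure and g a 0/1 indicator for a hypothetical modifier group, the pollution slope is b1 when g = 0 and b1 + b3 when g = 1, so b3 is the difference in slopes that a test of the interaction term evaluates. Because this model gives each group its own intercept and slope, its least-squares estimates equal those from separate per-group regressions, which the sketch exploits. It uses noise-free toy data and omits the standard errors and p-values that an actual significance test would require; all names are illustrative.

```python
def simple_ols(xs, ys):
    """Least-squares intercept and slope for y = a + s * x."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sxy / sxx
    return my - slope * mx, slope


def fit_interaction_model(x, g, y):
    """Least-squares fit of y = b0 + b1*x + b2*g + b3*x*g for a 0/1 modifier g.

    The model is equivalent to fitting each group separately, so the group-0 fit
    gives (b0, b1) and the group-1 fit gives (b0 + b2, b1 + b3).
    """
    x0 = [xi for xi, gi in zip(x, g) if gi == 0]
    y0 = [yi for yi, gi in zip(y, g) if gi == 0]
    x1 = [xi for xi, gi in zip(x, g) if gi == 1]
    y1 = [yi for yi, gi in zip(y, g) if gi == 1]
    a0, s0 = simple_ols(x0, y0)
    a1, s1 = simple_ols(x1, y1)
    return {"b0": a0, "b1": s0, "b2": a1 - a0, "b3": s1 - s0}


# Hypothetical, noise-free data: the outcome rises 0.5 units per unit of pollution
# in group 0 and 1.5 units per unit in group 1.
pollution = [0, 1, 2, 3, 4] * 2
group = [0] * 5 + [1] * 5
outcome = [1 + (0.5 if gi == 0 else 1.5) * xi for xi, gi in zip(pollution, group)]

coefs = fit_interaction_model(pollution, group, outcome)
print(coefs)  # b3 is the extra pollution slope in group 1 relative to group 0
```

Here b3 comes out as 1.0, the difference between the group slopes of 1.5 and 0.5.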

Other approaches Schwartz mentioned for shedding light on interindividual variability include:

- Mixed models, to determine whether differences among people’s dose–response slopes reflect chance or true variability.

- Case-crossover studies, to identify susceptibility factors and estimate acute effects while controlling, by design, for slowly varying covariates.

- Case-only studies, in which the hypothesized effect modifier, rather than death, is modeled as the outcome. For example, among people who died, is the presence of the hypothesized source of variability associated with air pollution on the day of death?

- Quantile survival analyses, to examine whether exposure primarily affects people who already have a high probability of an event, affects people with a low probability and raises their risk, or shifts the entire distribution for everyone. This allows researchers to investigate whether the distribution changes without testing a hypothesis about what caused the change.

- Causal modeling, a formal approach to make observational studies look more like randomized clinical trials. Researchers can use this approach to validate findings from quantile regression tools and to assess whether those findings are applicable to decisions about susceptibility.
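For the case-crossover design, one widely used way to choose comparison (“referent”) days is the time-stratified scheme, assumed here because the summary does not specify one: each case’s exposure on the event day is compared with exposure on the other days in the same calendar month that fall on the same day of the week. Because each person serves as his or her own control over a short window, slowly varying characteristics such as age or genotype drop out of the comparison. The sketch shows only referent selection; a full analysis would then fit a conditional logistic regression, which is not shown. The function name is hypothetical.

```python
import calendar
from datetime import date


def time_stratified_referents(event_day):
    """Referent days for a time-stratified case-crossover design: all other days in
    the same month and year that fall on the same weekday as the event day."""
    _, days_in_month = calendar.monthrange(event_day.year, event_day.month)
    referents = []
    for d in range(1, days_in_month + 1):
        candidate = date(event_day.year, event_day.month, d)
        if d != event_day.day and candidate.weekday() == event_day.weekday():
            referents.append(candidate)
    return referents


# Hypothetical event: a death on Wednesday, September 30, 2015.
event = date(2015, 9, 30)
for day in time_stratified_referents(event):
    print(day.isoformat(), calendar.day_name[day.weekday()])
```

For this event date the referents are the other four Wednesdays of September 2015 (the 2nd, 9th, 16th, and 23rd); exposure on those days supplies the within-person comparison.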

Schwartz also mentioned that kernel machine regression, a form of machine learning, enables analyses with large numbers of variables, such as SNPs, methylation probes, or exposures (as in exposome studies). The basic idea is to ignore small errors in fitting the model, so that each variable can be evaluated on its power to identify what is important.
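As a rough illustration of how a kernel machine can handle many variables at once, the sketch below fits kernel ridge regression with a Gaussian kernel, one common form of kernel machine regression. Each person is described by a vector of variables (here, two hypothetical exposures); the kernel turns those vectors into pairwise similarities, and the fitted exposure-response function is a weighted sum of similarities to the observed people, so no parametric form has to be specified for each variable. The function names, the Gaussian kernel, and the tuning values rho and lam are assumptions for illustration; the sketch does not reproduce the error-tolerance idea described above or any published software.

```python
import math


def gaussian_kernel(u, v, rho):
    """Similarity between two people's variable vectors (1 when identical)."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(u, v)) / rho)


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_kernel_ridge(X, y, rho=1.0, lam=0.1):
    """Return h(z) = sum_i alpha_i * K(z, x_i), where alpha solves (K + lam*I) alpha = y."""
    n = len(X)
    K = [[gaussian_kernel(X[i], X[j], rho) + (lam if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    alpha = solve(K, list(y))
    return lambda z: sum(a * gaussian_kernel(z, xi, rho) for a, xi in zip(alpha, X))


# Hypothetical data: two exposure measures per person and a continuous outcome.
exposures = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, 1.0]]
outcome = [0.0, 1.0, 1.0, 2.5, 3.0]
h = fit_kernel_ridge(exposures, outcome, rho=1.0, lam=0.1)
print(round(h([0.5, 0.5]), 3))  # estimated response at an unobserved exposure mix
```

A larger lam shrinks the fitted function toward zero and a smaller rho makes it more local; in practice both would be chosen by cross-validation or estimated from the data.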

_________________

18 Bind, MC, et al. 2015. Beyond the Mean: Quantile Regression to Explore the Association of Air Pollution with Gene-Specific Methylation in the Normative Aging Study. Environmental Health Perspectives 123(8):759–765.

Identifying Molecular Mechanisms That Drive Interindividual Variability with Mediation Analysis

Joshua Millstein of the University of Southern California talked about the potential value of an epidemiological approach known as mediation analysis, which uses statistical methods to disentangle the causal pathways that link an exposure to an outcome and determine whether anything mediates the effect. The approach can aid in identifying molecular mechanisms that drive interindividual variability.

Millstein is one of the developers of the Causal Inference Test (CIT), a hypothesis-testing approach for causal mediation that was created with genomic applications in mind.19 The CIT is based on a parametric approach that assumes normally distributed data, an assumption that may not be appropriate for genomic studies. An alternative, novel nonparametric approach based on the false discovery rate (FDR) allows weak signals to be detected and computes a confidence interval that provides a quantitative measure of uncertainty in the results. This approach can be important where effects are weak, as is often the case in studies of complex diseases.
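The CIT combines several conditional-independence tests and is not reproduced here. As a simpler illustration of what mediation analysis estimates, the sketch below uses the product-of-coefficients decomposition for linear models: regress the mediator M (for example, gene expression or methylation) on the exposure or genotype X to get a; regress the outcome Y on both X and M to get the direct effect c' and the mediator effect b; and take a*b as the indirect (mediated) effect. For ordinary least squares on the same data, the total effect c from regressing Y on X alone equals c' + a*b exactly. The data and function names are hypothetical.

```python
def centered_sums(u, v):
    """Sum of cross-products of deviations from the mean."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def mediation_decomposition(x, m, y):
    """Product-of-coefficients mediation for linear models fitted by OLS.

    a  : slope of M on X
    c' : coefficient of X in Y ~ X + M (direct effect)
    b  : coefficient of M in Y ~ X + M
    c  : slope of Y on X alone (total effect)
    """
    sxx, smm, sxm = centered_sums(x, x), centered_sums(m, m), centered_sums(x, m)
    sxy, smy = centered_sums(x, y), centered_sums(m, y)
    a = sxm / sxx
    det = sxx * smm - sxm ** 2  # must be nonzero: X and M cannot be collinear
    c_direct = (sxy * smm - sxm * smy) / det
    b = (sxx * smy - sxm * sxy) / det
    c_total = sxy / sxx
    return {"total": c_total, "direct": c_direct, "indirect": a * b, "a": a, "b": b}


# Hypothetical data: X raises the mediator, and both X and M raise the outcome.
x = [0, 1, 2, 3, 4]
m = [0.1, 1.8, 4.0, 6.2, 7.9]  # roughly 2 * x
y = [1 + 0.5 * xi + 3 * mi for xi, mi in zip(x, m)]

res = mediation_decomposition(x, m, y)
print({k: round(v, 3) for k, v in res.items()})
```

With these hypothetical data the total effect of about 6.5 splits into a direct effect of about 0.5 and an indirect effect of about 6.0 through the mediator.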

Millstein provided several examples of the CIT’s utility. One involved SNPs hypothesized to affect expression of human leukocyte antigen genes, which in turn affects the risk of peanut allergy.20 The CIT also helped show that variation in genes involved in membrane trafficking and antigen processing significantly influences the human response to influenza vaccination.21

In the epidemiology discussion session, Ginsberg asked how large a population is needed to analyze the dose–response and variability in the tail of an effect distribution. Schwartz agreed that examining effects in the tail is harder because fewer people fall in the tail. He acknowledged that his examples involved “intermediary biomarkers.” Cohort studies provide more power to detect the effects of exposure on such intermediaries at the tails of the distribution than on the outcomes that risk assessors normally use, such as deaths. “If I want to know which of the million SNPs is modifying the effect of lead on having a heart attack in the Framingham cohort, that’s going to be pretty hard,” Schwartz said. However, quantile regression can tell researchers whether some kind of association exists, he said.

Standing Committee member Chirag Patel22 of Harvard University commented on an emerging tension in epidemiology: larger sample sizes are needed to increase the statistical power of analyses, but some important datasets are “siloed.” He suggested that combining some datasets could add power to detect changes in variability. Schwartz agreed, but pointed out that many existing cohorts do not have the same environmental exposure measurements. As a result, analyses of exposure interactions or effects are limited to much smaller subsets of the studies, and meta-analysis is not as powerful a technique as analyzing all of the data together, he said.

Schwartz expressed optimism that this will become easier in the future. He is part of a consortium based at the London School of Hygiene & Tropical Medicine that studies temperature and mortality, in which all participating parties have agreed to share all of their data. He believes such agreements will produce results that are easier to combine.

John Balbus of NIEHS concluded the session by noting that all three sessions pointed to the value of encouraging a two-way flow of information between laboratory toxicologists and epidemiologists, including testing hypotheses generated by epidemiology in the laboratory, and vice versa.

IMPLICATIONS OF UNDERSTANDING INTERINDIVIDUAL VARIABILITY

The experts who spoke during the workshop’s final panel raised questions and concerns about the overall value of capturing information about interindividual variability. Michael Yudell, an associate professor at Drexel University’s Dornsife School of Public Health, drew attention to an editorial recently published in the New England Journal of Medicine in which two prominent public health scholars raised concerns about medicine’s increasing focus on precision medicine.23 They suggested that such a focus may distract from taking the steps needed to produce a healthier population, arguing that “the challenge we face to improve population health does not involve the frontiers of science and molecular biology. It entails development of the vision and willingness to address certain persistent social realities, and it requires an unstinting focus on the factors that matter most to the production of population health…. Unfortunately, all the evidence suggests that we, as a country, are far from recognizing that our collective health is shaped by factors well beyond clinical care or our genes.” Along the same lines, while acknowledging that it was not the focus of the workshop, Balbus described the role of exposure, relative to all of the other factors (genetic, epigenetic, etc.), as the gorilla in the room. Standing Committee member Ana Navas-Acien,24 an associate professor at the Johns Hopkins Bloomberg School of Public Health, also stressed the need to keep recognizing the importance of prevention as the field moves toward precision medicine. Might an environmental chemical be considered “okay,” she asked, because it would affect only a certain percentage of the population?

_________________

19 Millstein, J, et al. 2009. Disentangling molecular relationships with a causal inference test. BMC Genetics 10:23.

20 Hong, X, et al. 2015. Genome-wide association study identifies peanut allergy-specific loci and evidence of epigenetic mediation in US children. Nature Communications 6:6304.

21 Franco, LM, et al. 2013. Integrative genomic analysis of the human immune response to influenza vaccination. eLife 2:e00299.

22 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

23 Bayer, R, and S Galea. 2015. Public Health in the Precision-Medicine Era. New England Journal of Medicine 373(6):499–501. PMID: 26244305.

A major subject of workshop discussion was the use of race in efforts to identify interindividual variability, a topic of much debate in the medical and epidemiological literature. Attendees were divided on whether race correlates with variability and on the value of collecting information about variability that is tied to race. In a Science paper25 written with sociologists and evolutionary biologists, Yudell and his co-authors called for the Academies to consider weighing in on how race should be used in biological research specifically, and in scientific research more generally. Yudell argued that because human racial groups tend to be highly heterogeneous, race may not make sense as a basis for classifying genetic differences. Gina Solomon of the California Environmental Protection Agency responded that the exposure side of the equation makes a case for why considering race may still make sense. Recent analyses of California’s population using the CalEnviroScreen tool showed that race is a more powerful predictor than low socioeconomic status of living in a “bad” environment with high exposure to air pollution, lead, and other environmental stressors, she said. “Whether or not we include race as a variable, it is predicting a lot of people’s risks and exposures,” she said. “If you think about exposures as affecting the epigenome, it all kind of blurs.”

Yudell pointed out that knowing that some people in a population have extreme reactions can help with planning and protections, but it can also create challenges for those individuals. For example, when a child’s whole genome is sequenced, the information is shared with the parents and might reveal something of immediate relevance to them, such as a breast cancer susceptibility (BRCA) mutation. James C. O’Leary of the Genetic Alliance, a nonprofit organization that helped pass the Genetic Information Nondiscrimination Act (GINA) of 2008, emphasized the importance of context when considering the value of interindividual variability information to individuals. Having this information in a child’s medical record could affect his or her parents’ ability to obtain life insurance and long-term disability insurance. That said, Birnbaum and others noted that study participants want to be able to decide whether or not they have access to data captured about them.

Kimberly White of the American Chemical Society expressed enthusiasm for finding ways to incorporate interindividual variability into chemical risk assessments, but she cautioned that it is important to try to do so in a way that makes comparison with existing study results possible.

Although many complex issues remain to be addressed, it is important to start where we are and focus on the questions that we can answer now with the tools we have, stressed Kristi Pullen, a staff scientist at the Natural Resources Defense Council. Several other workshop attendees agreed, including Birnbaum, who noted that calls for more information often prevent action from being taken and that identifying “when you know enough to make some decisions” is very important. The ultimate goal of increasing understanding of interindividual variability is to drive public health protections, Solomon said. “It’s easy to get caught up in who’s resistant and who’s sensitive, but fundamentally we need to be thinking about how to protect everybody insofar as we can.”

THE UPSHOT

The workshop’s presentations and discussions made clear that decision contexts are very important and that we have come a long way since the Standing Committee last discussed approaches to interindividual variability, in 2012 (see http://nas-sites.org/emergingscience/meetings/individual-variability), Zeise said in her summation. A substantial amount of new knowledge has emerged, and a number of new databases and analytic tools have become available or been refined. Moreover, some of the information presented suggests that traditional approaches to evaluating toxicity may need to be modified to incorporate interindividual variability.

_________________

24 Member of the Standing Committee on Emerging Science for Environmental Health Decisions.

25 Yudell, M, et al. 2016. Taking race out of human genetics. Science 351(6273):564–565.

Discussions at the workshop suggest that the field will see increasing use of in vitro tools to better understand interindividual variability, which will help in setting research priorities, Zeise said. The in vivo sessions highlighted the promise of the DO and CC project mice for overcoming some of the limits that toxicology’s historical focus on genetically homogeneous mouse strains has placed on showing the extent of interindividual variability. Although much work remains to be done, Zeise said, the initial data suggest that the standard uncertainty factor of 10 used to account for variability may be too small.

The new epidemiology tools discussed at the meeting provide new ways to incorporate interindividual variability and to show how chemical exposures can change the shape of the distribution of effects across individuals. Zeise pointed out that the tools are important for revealing shifts in population distributions, even if they do not shed light on the underlying causes.

The tools are likely to support and reinforce each other. Important insights may arise from using some of the new tools, such as the outbred mouse strains and the human cell–based in vitro systems, to explore hypotheses related to new epidemiological findings. Similarly, the epidemiological tools can be used to explore, in humans, factors discovered in the laboratory that may affect sensitivity. “These tools can be especially powerful,” Zeise concluded.

DISCLAIMER: This Workshop in Brief was prepared by Kellyn Betts and Marilee Shelton-Davenport, PhD, as a factual summary of what occurred at the workshop. The planning committee’s role was limited to planning the workshop. The statements made are those of the authors or individual meeting participants and do not necessarily represent the views of all meeting participants, the planning committee, the Standing Committee on Emerging Science for Environmental Health Decisions, or the National Academies of Sciences, Engineering, and Medicine. The summary was reviewed in draft form by Ila Cote, U.S. Environmental Protection Agency; Gina Solomon, California Environmental Protection Agency; Duncan Thomas, University of Southern California; and David Threadgill, Texas A&M University, to ensure that it meets institutional standards for quality and objectivity. The review comments and draft manuscript remain confidential to protect the integrity of the process.

Planning Committee for Interindividual Variability: New Ways to Study and Implications for Decision Making: John Balbus (Chair), NIEHS; Ivan Rusyn, Texas A&M University; Joel Schwartz, Harvard School of Public Health; Lauren Zeise, California Environmental Protection Agency.

Sponsor: This workshop was supported by the National Institute of Environmental Health Sciences.

About the Standing Committee on Emerging Science for Environmental Health Decisions

The Standing Committee on Emerging Science for Environmental Health Decisions is sponsored by the National Institute of Environmental Health Sciences to examine, explore, and consider issues on the use of emerging science for environmental health decisions. The Standing Committee’s workshops provide a public venue for communication among government, industry, environmental groups, and the academic community about scientific advances in methods and approaches that can be used in the identification, quantification and control of environmental impacts on human health. Presentations and proceedings such as this one are made broadly available, including at http://nas-sites.org/emergingscience.

Copyright 2016 by the National Academy of Sciences. All rights reserved.