6

DEPLOYING ARTIFICIAL INTELLIGENCE IN CLINICAL SETTINGS

Stephan Fihn, University of Washington; Suchi Saria, Johns Hopkins University; Eneida Mendonça, Regenstrief Institute; Seth Hain, Epic; Michael Matheny, Vanderbilt University Medical Center; Nigam Shah, Stanford University; Hongfang Liu, Mayo Clinic; and Andrew Auerbach, University of California, San Francisco

INTRODUCTION

The effective use of artificial intelligence (AI) in clinical settings currently presents an opportunity for thoughtful engagement. There is steady and transformative progress in methods and tools needed to manipulate and transform clinical data, and increasingly mature data resources have supported novel development of accurate and sophisticated AI in some health care domains (although the risk of not being sufficiently representative is real). However, few examples of AI deployment and use within the health care delivery system exist, and there is sparse evidence for improved processes or outcomes when AI tools are deployed (He et al., 2019). For example, within machine learning risk prediction models—a subset of the larger AI domain—the sizable literature on model development and validation is in stark contrast to the scant data describing successful clinical deployment of those models in health care settings (Shortliffe and Sepúlveda, 2018).

This discrepancy between development efforts and successful use of AI reflects the hurdles in deploying decision support systems and tools more broadly (Tcheng et al., 2017). While some impediments are technical, more relate to the complexity of tailoring applications for integration with existing capabilities in electronic health records (EHRs), poor understanding of users’ needs and expectations for information, poorly defined clinical processes and objectives, and even concerns about legal liability (Bates, 2012; Miller et al., 2018; Unertl et al., 2007, 2009). These impediments may be balanced by the potential for gain, as one cross-sectional review of closed malpractice claims found that more than one-half of malpractice claims could have been potentially prevented by well-designed clinical decision support (CDS) in the form of alerts (e.g., regarding potential drug–drug interactions and abnormal test results), reminders, or electronic checklists (Zuccotti et al., 2014). Although, in many instances, the deployment of AI tools in health care may be conducted on a relatively small scale, it is important to recognize that an estimated 50 percent of information technology (IT) projects fail in the commercial sector (Florentine, 2016).

Setting aside the challenges of physician-targeted, point-of-care decision support, there is great opportunity for AI to improve domains outside of encounter-based care delivery, such as in the management of patient populations or in administrative tasks for which data and work standards may be more readily defined, as is discussed in more detail in Chapter 3. These future priority areas will likely be accompanied by their own difficulties, related to translating AI applications into effective tools that improve the quality and efficiency of health care.

AI tools will also produce challenges that are entirely related to the novelty of the technology. Even at this early stage of AI implementation in health care, the use of AI tools has raised questions about the expectations of clinicians and health systems regarding transparency of the data models, the clinical plausibility of the underlying data assumptions, whether AI tools are suitable for discovery of new causal links, and the ethics of how, where, when, and under what circumstances AI should be deployed (He et al., 2019). At this time in the development cycle, methods to estimate the requirements, care, and maintenance of these tools and their underlying data needs remain a rudimentary management science.

There are also proposed regulatory rules that will influence the use of AI in health care. On July 27, 2018, the Centers for Medicare & Medicaid Services (CMS) published a proposed rule that aims to increase Medicare beneficiaries’ access to physicians’ services routinely furnished via “communication technology.” The rule defines such clinician services as those that are defined by and inherently involve the use of computers and communication technology; these services will be associated with a set of Virtual Care payment codes (CMS, 2019). These services would not be subject to the limitations on Medicare telehealth services and would instead be paid under the Physician Fee Schedule, as other physicians’ services are. CMS’s evidentiary standard of clinical benefit for determining coverage under the proposed rule does not include minor or incidental benefits.

This proposed rule is relevant to all clinical AI applications, because they all involve computer and communication technology and aim to deliver substantive clinical benefit consistent with the examples set forth by CMS. If clinical AI applications are deemed by CMS to be instances of reimbursable Virtual Care services, then it is likely that they would be prescribed like other physicians’ services and medical products, and it is likely that U.S.-based commercial payors would establish National Coverage Determinations mimicking CMS’s policy. If these health finance measures are enacted as proposed, they will greatly accelerate the uptake of clinical AI tools and provide significant financial incentives for health care systems to adopt them.

In this chapter, we describe the key issues, considerations, and best practices relating to the implementation and maintenance of AI within the health care system. This chapter complements the preceding discussion in Chapter 5 that is focused on considerations at the model creation level within the broader scope of AI development. The information presented will likely be of greatest interest to individuals within health care systems that are deploying AI or considering doing so, and we have limited the scope of this chapter to this audience. National policy, regulatory, and legislative considerations for AI are addressed in Chapter 7.

SETTINGS FOR APPLICATION OF AI IN HEALTH CARE

The venues in which health care is delivered, physically or virtually, are expanding rapidly, and AI applications are beginning to surface in many if not all of these venues. Because there is tremendous diversity in individual settings, each of which presents unique requirements, we outline broad categories that are germane to most settings and provide a basic framework for implementing AI.

Traditional Point of Care

Decision support generally refers to the provision of recommendations or explicit guidance relating to diagnosis or prognosis at the point of care, addressing an acknowledged need for assistance in selecting optimal treatments, tests, and plans of care along with facilitating processes to ensure that interventions are safely, efficiently, and effectively applied.

At present, most clinicians regularly encounter point-of-care tools integrated into the EHR. Recent changes in the 21st Century Cures Act have removed restrictions on sharing information between users of a specific EHR vendor or across vendors’ users and may (in part) overcome restrictions that limited innovation in this space. Point-of-care tools, when hosted outside the EHR, are termed software as a medical device (SaMD) and may be regulated under the U.S. Food and Drug Administration’s (FDA’s) Digital Health Software Precertification Program, as is discussed in more detail in Chapter 7 (FDA, 2018; Lee and Kesselheim, 2018). SaMD provided by EHR vendors will not be regulated under the Precertification Program, but those supported outside those environments and either added to them or provided to patients via separate routes (e.g., apps on phones, services provided as part of pharmacy benefit managers) will be. The nature of regulation is evolving but will need to account for changes in clinical practice, data systems, populations, etc. However, regulated or not, SaMD that incorporates AI methods will require careful testing and retesting, recalibration, and revalidation at the time of implementation as well as periodically afterward. All point-of-care AI applications will need to adhere to best practices for the form, function, and workflow placement of CDS and incorporate best practices in human–computer interaction and human factors design (Phansalkar et al., 2010). This will need to occur in an environment where widely adopted EHRs continue to evolve and where there will likely be opportunities for disruptive technologies.

Clinical Information Processing and Management

Face-to-face interactions with patients are, in a sense, only the tip of the iceberg of health care delivery (Blane et al., 2002; Network for Excellence in Health Innovation, 2015; Tarlov, 2002). A complex array of people and services are necessary to support direct care and they tend to consume and generate massive amounts of data. Diagnostic services such as laboratory, pathology, and radiology procedures are prime examples and are distinguished by the generation of clinical data, including dense imaging, as well as interpretations and care recommendations that must be faithfully transmitted to the provider (and sometimes the patient) in a timely manner.

AI will certainly play a major role with tasks such as automated image (e.g., radiology, ophthalmology, dermatology, and pathology) and signal processing (e.g., electrocardiogram, audiology, and electroencephalography). In addition to interpretation of tests and images, AI will be used to integrate and array results with other clinical data to facilitate clinical workflow (Topol, 2019).

Enterprise Operations

The administrative systems necessary to maintain the clinical enterprise are substantial and are likely to grow as AI tools grow in number and complexity. In the near term, health care investors and innovators are wagering that AI will assume increasing importance in conducting back-office activities in a wide variety of areas (Parma, 2018). Because these tasks tend to be less nuanced than clinical decisions, and usually pose lower risk, they are likely to be more tractable targets for AI systems to support in the immediate future. In addition, the data necessary to train models in these settings are often more easily available than in clinical settings. For example, in a hospital, these tasks might include the management of billing, pharmacy, supply chain, staffing, and patient flow. In an outpatient setting, AI-driven applications could assume some of the administrative tasks such as gathering information to assist with decisions about insurance coverage, scheduling, and obtaining preapprovals. Some of these topics are discussed in greater detail in Chapter 3.

Nontraditional Health Care Settings

Although we tend to think of applying AI in health care locations, such as hospitals or clinics, there may be greater opportunities in settings where novel care delivery models are emerging. These might include freestanding urgent care facilities or pharmacies, or our homes, schools, and workplaces. It is readily possible to envision AI deployed in these venues, such as walk-in services at retail vendors, or pharmacies with embedded urgent and primary care clinics. Although some of these may be considered traditional point-of-care environments, the availability of information may be substantially different in these environments. Likewise, information synthesis, decision support, and knowledge search support are systematically different in these nontraditional health care settings, and thus they warrant consideration as a distinct type of environment for AI implementation purposes.

Additionally, it is worth noting that AI applications are already in use in many nonmedical settings for purposes such as anticipating customers’ purchases and managing inventory accordingly. These types of tools could also be used to link health metrics to purchasing recommendations (e.g., suggesting healthier food options for patients with hypertension or diabetes) once the ethical consent and privacy issues related to use of patient data are addressed (Storm, 2015).

Population Health Management

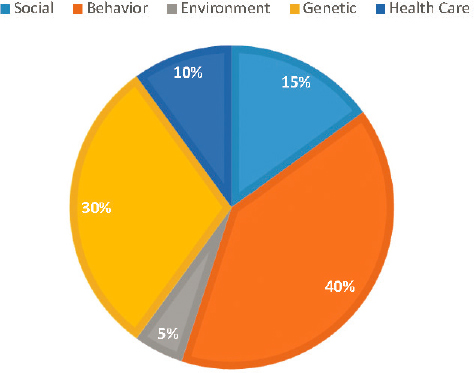

An increasingly important component of high-quality care falls under the rubric of population health management. This poses a challenge for traditional health systems because some research suggests that only a small fraction of overall health can be attributed to health care (McGinnis et al., 2002) (see Figure 6-1).

Nevertheless, other research suggests that health systems have a major role to play in improving population health that can be distinguished from those of

SOURCE: Figure created with data from McGinnis et al., 2002.

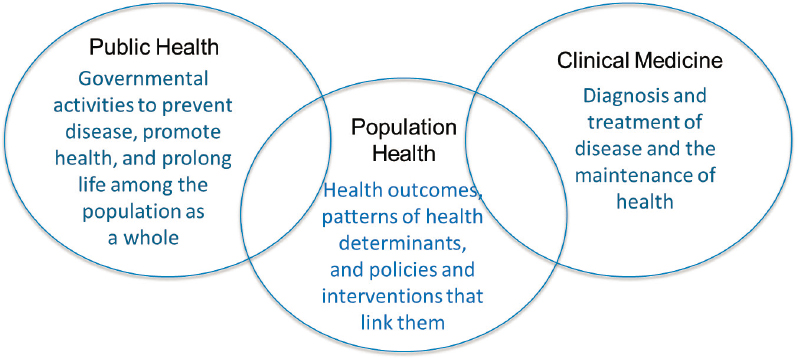

traditional medical care and public health systems (see Figure 6-2). Although there is no broadly accepted definition of this function within health care delivery systems, one goal is to standardize routine aspects of care, typically in an effort to improve clinical performance metrics across large populations and systems of care with the goal of improving quality and reducing costs.

SOURCE: Definition of population health from Kindig, 2007.

Promoting healthy behaviors and self-care are major focuses of population health management efforts (Kindig and Stoddart, 2003). Much of the work of health management in this context is conducted outside of regular visits and often involves more than one patient at a time. AI has the potential to assist with prioritization of clinical resources and management of volume and intensity of patient contacts, as well as targeting services to patients most likely to benefit. In addition, an essential component of these initiatives involves contacting large numbers of patients, which can occur through a variety of automated, readily scalable methods, such as text messaging and patient portals (Reed et al., 2019). One such example in a weight loss program among prediabetic patients is the use of Internet-enabled devices with an application to provide educational materials, a communication mechanism to their peer group and health coach, and progress tracking, which showed that completion of lessons and rate of utilization of the tools were strongly correlated with weight loss over multiple years (Sepah et al., 2017). Such communication may be as basic as asynchronous messaging of reminders to obtain a flu shot (Herrett et al., 2016) or to attend a scheduled appointment using secure messaging, automated telephone calls, or postal mail (Schwebel and Larimer, 2018). Higher order activities, such as for psychosocial support or chronic disease management, might entail use of a dedicated app or voice or video modalities, which could also be delivered through encounters using telemedicine, reflecting the fluidity and overlap of technological solutions (Xing et al., 2019) (see Chapter 3 for additional examples).

Because virtual interactions are typically less complex than face-to-face visits, they are likely targets for AI enhancement. Led by efforts in large integrated health delivery systems, there are a variety of examples where statistical models derived from large datasets have been developed and deployed to predict individuals’ intermediate and longer term risk of experiencing adverse consequences of chronic conditions including death or hospitalization (Steele et al., 2018). These predictions are starting to be used to prioritize provision of care management within these populations (Rumsfeld et al., 2016). Massive information synthesis is also a key need for this setting, because at-a-glance review of hundreds or thousands of patients at a time is typical, which is a task in which AI excels (Wahl et al., 2018).

Patient- and Caregiver-Facing Applications

The patient- and caregiver-facing application domain merges health care delivery with publicly available consumer hardware and software. It is defined as the space in which applications and tools are directly accessible to patients and their caregivers. Tools and software in this domain enable patients to manage much of their own health care and facilitate interactions between patients and the health care delivery system. In particular, smartphone and mobile applications have transformed the potential for patient contact, active participation in health care behavior modification, and reminders. These applications also hold the potential for health care delivery to access new and important patient data streams to help stratify risk, provide care recommendations, and help prevent complications of chronic diseases. This trend is likely to blur the traditional boundaries of tasks now performed during face-to-face appointments.

Patient- and caregiver-facing tools represent an area of strong potential growth for AI deployment and are expected to empower users to assume greater control over their health and health care (Topol, 2015). Moreover, there is the potential for creating a positive feedback loop where patients’ needs and preferences, expressed through their use of AI-support applications, can then be incorporated into other applications throughout the health care delivery system. As growth of online purchasing continues, the role of AI in direct patient interaction—providing wellness, treatment, or diagnostic recommendations via mobile platforms—will grow in parallel to that of brick-and-mortar settings. Proliferation of these applications will continue to amplify and enhance data collected through traditional medical activities (e.g., lab results, pharmacy fill data). Mobile applications are increasingly able to cross-link various sources of data and potentially enhance health care (e.g., through linking grocery purchase data to health metrics to physical steps taken or usage statistics from phones). The collection and presentation of AI recommendations using mobile- or desktop-based platforms is critical because patients are increasingly engaging in self-care activities supported by applications available on multiple platforms. In addition to being used by patients, the technology will likely be heavily used by their family and caregivers.

Unfortunately, the use of technologies intended to support self-management of health by individuals has been lagging as has evaluation of their effectiveness (Abdi et al., 2018). Although there are more than 320,000 health apps currently available, and these apps have been downloaded nearly 4 billion times, little research has been conducted to determine whether they improve health (Liquid State, 2018). In a recent overview of systematic reviews of studies evaluating stand-alone, mobile health apps, only 6 meta-analyses including a total of 23 randomized trials could be identified. In all, 11 of the 23 trials showed a meaningful effect on health or surrogate outcomes attributable to apps, but the overall evidence of effectiveness was deemed to be of very low quality (Byambasuren et al., 2018). In addition, there is a growing concern that many of these apps share personal health data in ways that are opaque and potentially worrisome to users (Loria, 2019).

APPLICATIONS OF AI IN CLINICAL CARE DELIVERY

Both information generated by medical science and clinical data related to patient care have burgeoned to a level at which clinicians are overwhelmed. This is a critically important problem because information overload not only leads to disaffection among providers but also to medical errors (Tawfik et al., 2018).

Although clinical cognitive science has made advances toward understanding how providers routinely access medical knowledge during care delivery and the ways in which this understanding could be transmitted to facilitate workflow, this has occurred in only very limited ways in practice (Elstein et al., 1978; Kilsdonk et al., 2016). However, AI is apt to be integral to platforms that incorporate these advances, transforming not only health care delivery but also clinical training and education through the identification and delivery of relevant clinical knowledge at the point of decision making. Key support tasks would include the intelligent search and retrieval of relevant sources of information and customizable display of data; these hypothetical AI tools would relieve clinicians of doing this manually as is now usually the case (Li et al., 2015). Furthermore, these features would enhance the quality and safety of care because important information would be less likely to be overlooked. Additionally, AI holds promise for providing point-of-care decision support powered by the synthesis of existing published evidence, with the reported experiences of similarly diagnosed or treated patients (Li et al., 2015).

Health care is increasingly delivered by teams that include specialists, nurses, physician assistants, pharmacists, social workers, case managers, and other health care professionals. Each of them brings specialized skills and viewpoints that augment and complement the care a patient receives from individual health care providers. As the volume of data and information available for patient care grows exponentially, innovative solutions empowered by AI techniques will naturally become foundational to the care team, providing task-specific expertise in the data and information space for advancing knowledge at the point of care.

Risk Prediction

Risk prediction is defined as any algorithm that forecasts a future outcome from a set of characteristics existing at a particular time point. It typically entails applying sophisticated statistical processes and/or machine learning to large datasets to generate probabilities for a wide array of outcomes ranging from death or adverse events to hospitalization. These large datasets may include dozens, if not hundreds, of variables gathered from thousands, or even millions, of patients. Overall, the risk prediction class of applications focuses on assessing the likelihood of an outcome for individuals by applying thresholds of risk. These individuals may then be targeted to receive additional or fewer resources in terms of surveillance, review, intervention, or follow-up based on some balance of expected risk, benefit, and cost. Predictions may be generated for individual patients, at a specific point in time (e.g., during a clinical encounter or at hospital admission or discharge), or for populations of patients, which identifies a group of patients at high risk of an adverse clinical event.
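The idea of applying thresholds of risk to decide who receives more or fewer resources can be sketched in a few lines of Python. This is only an illustration: the `Patient` class, the `stratify` function, and the cutoffs of 0.30 and 0.05 are hypothetical and are not drawn from any tool described in this chapter. In practice, thresholds would be chosen by weighing expected risk, benefit, and cost.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    patient_id: str
    predicted_risk: float  # model output, a probability between 0 and 1


def stratify(patients, high=0.30, low=0.05):
    """Assign each patient to a tier by comparing predicted risk to thresholds.

    The default thresholds are hypothetical placeholders, not recommendations.
    """
    tiers = {"high": [], "medium": [], "low": []}
    for p in patients:
        if p.predicted_risk >= high:
            tiers["high"].append(p.patient_id)    # candidates for added resources
        elif p.predicted_risk < low:
            tiers["low"].append(p.patient_id)     # candidates for fewer resources
        else:
            tiers["medium"].append(p.patient_id)  # usual care
    return tiers


panel = [Patient("A", 0.42), Patient("B", 0.02), Patient("C", 0.11)]
tiers = stratify(panel)
```

The same function serves both uses described above: called for one patient during an encounter, or for a whole panel to identify a high-risk group.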

Tools to predict an individual’s risk of a given event have been available for decades, but due largely to limitations of available data (e.g., small samples or claims data without clinical information), the accuracy of the predictions has generally been too low for routine use in clinical practice. The advent of large repositories of data extracted from clinical records, administrative databases, and other sources, coupled with high-performance computing, has enabled relatively accurate predictions for individual patients. Reports of predictive tools that have C-statistics (areas under the curve) of 0.85 or higher are now common (Islam et al., 2019). Examples that are currently in use include identifying outpatients, including those with certain conditions, who are at high risk of hospital admission or emergency department visits and who might benefit from some type of care coordination, or hospitalized patients who are at risk of clinical deterioration and for whom more intense observation and management are warranted (Kansagara et al., 2011; Smith et al., 2014, 2018; Wang et al., 2013).
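For readers less familiar with the C-statistic, it can be read as the probability that a randomly chosen patient who experienced the event was assigned a higher predicted risk than a randomly chosen patient who did not. The following is a minimal sketch of that pairwise definition, written in plain Python for clarity rather than speed; the function name and the sample data are illustrative assumptions.

```python
def c_statistic(risks, outcomes):
    """Concordance (area under the ROC curve) from its pairwise definition.

    risks:    predicted probabilities, one per patient
    outcomes: 1 if the event occurred, 0 otherwise
    Tied risks count as half-concordant.
    """
    events = [r for r, y in zip(risks, outcomes) if y == 1]
    non_events = [r for r, y in zip(risks, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")
    concordant = 0.0
    for e in events:
        for n in non_events:
            if e > n:
                concordant += 1.0
            elif e == n:
                concordant += 0.5
    return concordant / (len(events) * len(non_events))


# Made-up data: 2 events and 3 non-events give 6 pairs, 5 of them concordant.
example_risks = [0.9, 0.8, 0.3, 0.2, 0.1]
example_outcomes = [1, 0, 1, 0, 0]
auc = c_statistic(example_risks, example_outcomes)
```

A value of 0.5 corresponds to chance-level discrimination and 1.0 to perfect ranking. The statistic says nothing about whether the predicted probabilities themselves are accurate, which is a question of calibration.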

Given the rapidly increasing availability of sophisticated modeling tools and very large clinical datasets, the number of models and prediction targets is growing rapidly. Machine learning procedures can sometimes produce greater accuracy than standard methods such as logistic regression; however, the improvements may be marginal, especially when the number of data elements is limited (Christodoulou et al., 2019). These increments may not necessarily compensate for the expense of the computing infrastructure required to support machine learning, particularly when the goal is to use techniques in real time. Another issue is that, depending on the methods employed to generate a machine learning model, assessing the model’s predictive accuracy may not be straightforward. When a model is trained simply to provide binary classifications, probabilities are not generated and it may be impossible to examine the accuracy of predictions across a range of levels of risk. In such instances, it is difficult to produce calibration curves or stratification tables, which are fundamental to assessing predictive accuracy, although techniques are evolving (Bandos et al., 2009; Kull et al., 2017). Calibration performance has been shown to decline quickly, sometimes within 1 year of model development, on both derivation and external datasets (Davis et al., 2017), and this will affect CDS performance because the sensitivity and specificity of a model at a given threshold change as calibration changes.
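A stratification table of the kind mentioned above can be sketched as follows, assuming the model does output probabilities (the case in which such a table is possible). Patients are grouped into equal-width risk bins, and the mean predicted risk in each bin is compared with the observed event rate. The function name, the binning scheme, and the sample data are illustrative assumptions; published analyses often use quantile bins or smoothed curves instead.

```python
def calibration_table(risks, outcomes, n_bins=4):
    """Compare mean predicted risk with observed event rate within risk bins."""
    bins = [[] for _ in range(n_bins)]
    for r, y in zip(risks, outcomes):
        idx = min(int(r * n_bins), n_bins - 1)  # a risk of exactly 1.0 goes in the top bin
        bins[idx].append((r, y))
    rows = []
    for i, members in enumerate(bins):
        if not members:
            continue  # skip empty bins rather than report undefined rates
        n = len(members)
        rows.append({
            "bin": i,
            "n": n,
            "mean_predicted": sum(r for r, _ in members) / n,
            "observed_rate": sum(y for _, y in members) / n,
        })
    return rows


# Made-up data for illustration only.
sample_risks = [0.1, 0.2, 0.6, 0.7, 0.8, 0.9]
sample_outcomes = [0, 0, 1, 0, 1, 1]
table = calibration_table(sample_risks, sample_outcomes, n_bins=2)
```

Rerunning such a table on recent data at regular intervals is one simple way to notice the calibration decline described above: a widening gap between `mean_predicted` and `observed_rate` in any bin suggests that recalibration may be needed.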

As indicated earlier, it is unlikely that simply making predictive information available to clinicians will be an effective strategy. In one example, estimates for risk of death and/or hospitalization were generated for more than 5 million patients in a very large health care system, using models with C-statistics of 0.85 or higher. These predictions were provided to more than 7,000 primary care clinicians weekly for their entire patient panels in a way that was readily accessible through the EHR. Based on usage statistics, however, only about 15 percent of the clinicians regularly accessed these reports, even though, when surveyed, those who used the reports said that they generally found them accurate (Nelson et al., 2019). Accordingly, even when they are highly accurate, predictive models are unlikely to improve clinical outcomes unless they are tightly linked to effective interventions and the recommendations or actions are integrated into clinical workflow. Thus, it is useful to think in terms of prediction–action pairs.
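One way to make the notion of a prediction–action pair concrete is to store each prediction together with the threshold and the intervention it is meant to trigger, so that a risk score is never surfaced without a linked action. The sketch below is hypothetical throughout: the class, the prediction names, the thresholds, and the action descriptions are invented for illustration and do not describe the system in the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PredictionActionPair:
    prediction: str   # what the model estimates
    threshold: float  # risk at or above which the action is recommended
    action: str       # intervention linked to the prediction


# Hypothetical pairs; names and thresholds are placeholders.
PAIRS = [
    PredictionActionPair("hospitalization_90d", 0.25,
                         "offer care coordination outreach"),
    PredictionActionPair("clinical_deterioration_24h", 0.10,
                         "increase observation frequency"),
]


def actions_for(risks, pairs=PAIRS):
    """Return the actions whose paired prediction meets its threshold.

    risks maps prediction names to predicted probabilities for one patient;
    a prediction missing from the mapping triggers nothing.
    """
    return [p.action for p in pairs
            if risks.get(p.prediction, 0.0) >= p.threshold]


recommended = actions_for({"hospitalization_90d": 0.40,
                           "clinical_deterioration_24h": 0.05})
```

Keeping the pairing explicit also gives a natural place to record who receives the recommendation and where in the workflow it appears, which the usage figures above suggest matters at least as much as model accuracy.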

In summary, while there is great potential for AI tools to improve on existing methods for risk prediction, there are still large challenges in how these tools are implemented, how they are integrated with clinical needs and workflows, and how they are maintained. Moreover, as discussed in Chapter 1, health care professionals will need to understand the clinical, personal, and ethical implications of communicating and addressing information about an individual’s risk that may extend far into the future, such as predisposition to cancer, cardiovascular disease, or dementia.

Clinical Decision Support

CDS spans a gamut of applications intended to alert clinicians to important information and provide assistance with various clinical tasks, which in some cases may include prediction. Over the past two decades, CDS has largely been applied as rule-driven alerts (e.g., reminders for vaccinations) or alerts employing relatively simple Boolean logic based on published risk indices that change infrequently over time (e.g., Framingham risk index). More elaborate systems have been based upon extensive, knowledge-based applications that assist with management of chronic conditions such as hypertension (Goldstein et al., 2000). Again, with advances in computer science, including natural language processing, machine learning, and programming tools such as Business Process Model and Notation (BPMN), Case Management Model and Notation (CMMN), and related specifications, it is becoming possible to model and monitor more complex clinical processes (Object Management Group, 2019). Coupled with information about providers and patients, systems will be able to tailor relevant advice to specific decisions and treatment recommendations. Other applications may include advanced search and analytical capabilities that could provide information such as the outcomes of past patients who are similar to those currently receiving various treatments. For example, EHR data from nearly 250 million patients were analyzed using machine learning to determine the most effective second-line hypoglycemic agents (Vashisht et al., 2018).
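To show how simple the rule-driven alerts described above typically are, here is a sketch of a vaccination reminder expressed as Boolean logic. Every criterion in it is a hypothetical placeholder chosen for illustration (the age cutoff, the September season start, the function and parameter names); it does not encode any actual clinical guideline or any vendor's alert.

```python
from datetime import date


def flu_shot_reminder_due(age, last_flu_shot, today, season_start_month=9):
    """Boolean rule: remind when an adult has no flu shot recorded this season.

    age:            patient age in years
    last_flu_shot:  date of the most recent recorded shot, or None
    today:          date on which the rule is evaluated
    The adult cutoff (18) and season start month are hypothetical.
    """
    # The current season began in the most recent occurrence of the start month.
    season_year = today.year if today.month >= season_start_month else today.year - 1
    season_start = date(season_year, season_start_month, 1)
    vaccinated_this_season = (last_flu_shot is not None
                              and last_flu_shot >= season_start)
    return age >= 18 and not vaccinated_this_season


due = flu_shot_reminder_due(70, None, date(2019, 10, 15))
```

Rules of this kind are transparent and easy to audit, but they cannot weigh many interacting factors, which is the gap that the machine learning and process-modeling approaches discussed above aim to fill.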

Image Processing

One of the clinical areas in which AI is beginning to have an important early impact is image processing. There were about 100 publications on AI in radiology in 2005, but that increased exponentially to more than 800 in 2016 and 2017, related largely to computed tomography, magnetic resonance imaging, and, in particular, neuroradiology and mammography (Pesapane et al., 2018). Tasks for which current AI technology seems well suited include prioritizing and tracking findings that mandate early attention, comparing current and prior images, and high-throughput screenings that enable radiologists to concentrate on images most likely to be abnormal. Over time, however, it is likely that interpretation of routine imaging will be increasingly performed using AI applications.

Interpretation of other types of images by AI is rapidly emerging in other fields as well, including dermatology, pathology, and ophthalmology, as noted earlier. FDA recently approved the first device for screening for diabetic retinopathy, and at least one academic medical center is currently using it. The device is intended for use in primary care settings to identify patients who should be referred to an ophthalmologist (Lee, 2018). The availability of such devices will certainly increase markedly in the near future.

Diagnostic Support and Phenotyping

For nearly 50 years, there have been efforts to develop computer-aided diagnosis exemplified by systems such as Iliad, QMR, Internist, and DXplain, but none of these programs has been widely adopted. More recent efforts such as those of IBM Watson have not been more successful (Palmer, 2018). In part, this is a result of a relative lack of major investment as compared to AI applications for imaging technology. It also reflects the greater challenges in patient diagnosis compared to imaging interpretation. Data necessary for diagnosis arise from many sources including clinical notes, laboratory tests, pharmacy data, imaging, genomic information, etc. These data sources are often not stored in digital formats and generally lack standardized terminology. Also, unlike imaging studies, there is often a wide range of diagnostic possibilities, making the problem space exponentially larger. Nonetheless, computer-aided diagnosis is likely to evolve rapidly in the future.

Another emerging AI application is the development of phenotyping algorithms using data extracted from the EHR and other relevant sources to identify individuals with certain diseases or conditions and to classify them according to stage, severity, and other characteristics. At present, no common standardized, structured, computable format exists for storing phenotyping algorithms, but semantic approaches are under development and hold the promise of far more accurate characterization of individuals or groups than simply using diagnostic codes, as is often done today (Marx, 2015). When linked to genotypes, accurate phenotypes will greatly enhance AI tools’ capability to diagnose and understand the genetic and molecular basis of disease. Over time, these advances may also support the development of novel therapies (Papež et al., 2017).

FRAMEWORK AND CRITERIA FOR AI SELECTION AND IMPLEMENTATION IN CLINICAL CARE

As clinical AI applications become increasingly available, health care delivery systems and hospitals will need to develop expertise in evaluation, selection, and assessment of liability. Marketing of these tools is apt to intensify and be accompanied by claims regarding improved clinical outcomes or improved efficiency of care, which may or may not be well founded. While the technical requirements of algorithm development and validation are covered in Chapter 5, this section describes a framework for evaluation, decision making, and adoption that incorporates considerations regarding organizational governance and postdevelopment technical issues (i.e., maintenance of systems) as well as clinical considerations that are all essential to successful implementation.

AI Implementation and Deployment as a Feature of Learning Health Systems

More than a decade ago, the National Academy of Medicine (NAM) recognized the necessity for health systems to respond effectively to the host of challenges posed by rising expectations for quality and safety in an environment of rapidly evolving technology and accumulating massive amounts of data (IOM, 2011). The health system was reimagined as a dynamic system that does not merely deliver health care in the traditional manner based on clinical guidelines and professional norms, but is continually assessing and improving by harnessing the power of IT systems; that is, a system with ongoing learning hardwired into its operating model. In this conception, the wealth of data generated in the process of providing health care becomes readily available in a secure manner for incorporation into continuous improvement activities within the system and for research to advance health care delivery in general (Friedman et al., 2017). In this manner, the value of the care that is delivered to any individual patient imparts benefit to the larger population of similar patients. To show why the learning health system (LHS) is a critical context for considering AI in health care, we have taken 10 recommendations from a prior NAM report in this domain and aligned each of them with how AI could be considered within the LHS (see Table 6-1).

Clearly listed as 1 of the 10 priorities is involvement of patients and families, which should occur in at least two critically important ways. First, they need to be informed, both generally and specifically, about how AI applications are being integrated into the care they receive. Second, AI applications provide an opportunity to enhance engagement of patients in shared decision making. Although interventions to enhance shared decision making have not yet shown consistently beneficial effects, these applications are very early in development and have great potential (Légaré et al., 2018).

The adoption of AI technology provides a sentinel opportunity to advance the notion of LHSs. AI requires the digital infrastructure that enables the LHS to operate and, as described in Table 6-1, AI applications can be fundamentally designed to facilitate evaluation and assessment. One large health system in the United States has proclaimed ambitious plans to incorporate AI into “every patient interaction, workflow challenge and administrative need” to “drive improvements in quality, cost and access,” and many other health care delivery organizations and IT companies share this vision, although it is clearly many years off (Monegain, 2017). As AI is increasingly embedded into the infrastructure of health care delivery, it is mandatory that the data generated be available not only to evaluate the performance of AI applications themselves, but also to advance understanding about how our health care systems are functioning and how patients are faring.

Institutional Readiness and Governance

For AI deployment in health care practice to be successful, it is critical that the life cycle of AI use be overseen through effective governance. IT governance is the set of processes that ensure the effective and efficient use of IT in enabling an organization to achieve its goals. At its core, governance defines how an organization manages its IT portfolio (e.g., financial and personnel) by overseeing the effective evaluation, selection, prioritization, and funding of competing IT projects, ensuring their successful implementation, and tracking their performance. In addition, governance is responsible for ensuring that IT systems operate in an effective, efficient, and compliant fashion.

TABLE 6-1 | Leveraging Artificial Intelligence Tools into a Learning Health System

| Topic | Institute of Medicine Learning Health System Recommendation | Mapping to Artificial Intelligence in Health Care |
|---|---|---|
| Foundational Elements | | |
| Digital infrastructure | Improve the capacity to capture clinical, care delivery process, and financial data for better care, system improvement, and the generation of new knowledge. | Improve the capacity for unbiased, representative data capture with broad coverage for data elements needed to train artificial intelligence (AI). |
| Data utility | Streamline and revise research regulations to improve care, promote and capture clinical data, and generate knowledge. | Leverage continuous quality improvement (QI) and implement scientific methods to help select when AI tools are the most appropriate choice to optimize clinical operations and harness AI tools to support continuous improvement. |
| Care Improvement Targets | | |
| Clinical decision support | Accelerate integration of the best clinical knowledge into care decisions. | Accelerate integration of AI tools into clinical decision support applications. |
| Patient-centered care | Involve patients and families in decisions regarding health and health care, tailored to fit their preferences. | Involve patients and families in how, when, and where AI tools are used to support care in alignment with preferences. |
| Community links | Promote community–clinical partnerships and services aimed at managing and improving health at the community level. | Promote use of AI tools in community and patient health consumer applications in a responsible, safe manner. |
| Care continuity | Improve coordination and communication within and across organizations. | Improve AI data inputs and outputs through improved care coordination and data interchange. |
| Optimized operations | Continuously improve health care operations to reduce waste, streamline care delivery, and focus on activities that improve patient health. | Leverage continuous QI and Implementation Science methods to help select when AI tools are the most appropriate choice to optimize clinical operations. |
| Policy Environment | | |
| Financial incentives | Structure payment to reward continuous learning and improvement in the provision of best care at lower cost. | Use AI tools in business practices to optimize reimbursement, reduce cost, and (it is hoped) do so at a neutral or positive balance on quality of care. |
| Performance transparency | Increase transparency on health care system performance. | Make robust performance characteristics for AI tools transparent and assess them in the populations within which they are deployed. |
| Broad leadership | Expand commitment to the goals of a continuously learning health care system. | Promote broad stakeholder engagement and ownership in governance of AI systems in health care. |

NOTE: Recommendations from Best Care at Lower Cost (IOM, 2013) for a Learning Health System (LHS) in the first two columns are aligned with how AI tools can be leveraged into the LHS in the third column.

SOURCE: Adapted with permission from IOM, 2013.

Another facet of IT governance that is relevant to AI is data governance, the methodical process that an organization adopts to manage its data and ensure that the data meet specific standards and business rules before they are entered into a data management system. Given the intense data requirements of many AI applications, data governance is crucial and may also expand to data curation and privacy-related issues. Capabilities such as SMART on FHIR (Substitutable Medical Apps, Reusable Technology on Fast Healthcare Interoperability Resources) are emerging boons for the field of AI but may exacerbate problems related to the need for data to be exchanged from EHRs to external systems, which in turn creates issues related to the privacy and security of data. Relatedly, organizations will also need to consider other ethical issues associated with data use and data stewardship (Faden, 2013). In recent years, much has been written about patient rights and preferences as well as how the LHS is a moral imperative (Morain et al., 2018). Multiple publications and patient-led organizations have argued that the public is willing to share data for purposes that improve patient health and facilitate collaboration with data and medical expertise (Wicks et al., 2018). In other words, these publications suggest that medical data should be understood as a “public good” (Kraft et al., 2018). It is critical for organizations to build on these publications and develop appropriate data stewardship models that ensure that health data are used in ways that align with patients’ interests and preferences. Of particular note is the fact that not all patients and families have the same level of literacy, privilege, and understanding of how their data might be used or monetized, or of the potential unintended consequences for privacy and confidentiality.

A health care enterprise that seeks to leverage AI should consider, characterize, and adequately resolve a number of key considerations prior to moving forward with the decision to develop and implement an AI solution. These key considerations are listed in Table 6-2 and are expanded further in the following sections.

TABLE 6-2 | Key Considerations for Institutional Infrastructure and Governance

| Consideration | Relevant Governance Questions |
|---|---|
| Organizational capabilities | Does the organization possess the necessary technological (e.g., information technology [IT] infrastructure, IT personnel) and organizational (knowledgeable and engaged workforce, education, and training) capabilities to adopt, assess, and maintain artificial intelligence (AI)-driven tools? |
| Data environment | What data are available for AI development? Do current systems possess the adequate capacity for storage, retrieval, and transmission to support AI tools? |
| Interoperability | Does the organization support and maintain data at rest and in motion according to national and local standards for interoperability (e.g., SMART on FHIR [Substitutable Medical Apps, Reusable Technology on Fast Healthcare Interoperability Resources])? |
| Personnel capacity | What expertise exists in the health care system to develop and maintain the AI algorithms? |
| Cost, revenue, and value | What will be the initial and ongoing costs to purchase and install AI algorithms, to train users, to maintain underlying data models, and to monitor for variance in model performance? Is there an anticipated return on investment from the AI deployment? What is the perceived value for the institution related to AI deployment? |
| Safety and efficacy surveillance | Are there governance and processes in place to provide regular assessments of the safety and efficacy of AI tools? |
| Patient, family, consumer engagement | Does the institution have in place formal mechanisms for patient, family, or consumer engagement, such as a council or advisory board, that can voice concerns on relevant issues related to implementation, evaluation, etc.? |
| Cybersecurity and privacy | Does the digital infrastructure for health care data in the enterprise have sufficient protections in place to minimize the risk of breaches of privacy if AI is deployed? |
| Ethics and fairness | Is there an infrastructure in place at the institution to provide oversight and review of AI tools to ensure that the known issues related to ethics and fairness are addressed and that vigilance for unknown issues is in place? |
| Regulatory issues (see Chapter 7) | Are there specific regulatory issues that must be addressed and, if so, what type of monitoring and compliance programs will be necessary? |

Organizational Approach to Implementation

After the considerations delineated in the previous section have been resolved and a decision has been made to proceed with the adoption of an AI application, the organization requires a systematic approach to implementation. Frameworks for conceptualizing, designing, and evaluating this process are discussed below, but all implicitly incorporate the most basic health care improvement model, often referred to as a plan-do-study-act (PDSA) cycle. This approach was introduced more than two decades ago by W. E. Deming, the father of modern quality improvement (Deming, 2000). The PDSA cycle relies on the intimate participation of employees involved in the work, detailed understanding of workflows, and careful ongoing assessment of implementation that informs iterative adjustments. Newer methods of quality improvement introduced since Deming represent variations or elaborations of this approach. All too often, however, quality improvement efforts fail because they focus narrowly on a given task or set of tasks and use inadequate metrics, without due consideration of the larger environment in which change is expected to occur (Muller, 2018).

Such concerns are certainly relevant to AI implementation. New technology promises to substantially alter how medical professionals currently deliver health care at a time when morale in the workforce is generally poor (Shanafelt et al., 2012). One of the challenges of the use of AI in health care is that integrating it within the EHR and improving existing decision and workflow support tools may be viewed as an extension of an already unpopular technology (Sinsky et al., 2016). Moreover, there are a host of concerns that are unique to AI, some well and others poorly founded, which might add to the difficulty of implementing AI applications.

In recognition that basic quality improvement approaches are generally inadequate to produce large-scale change, the field of implementation science has arisen to characterize how organizations can undertake change in a systematic fashion that acknowledges their complexity. Some frameworks are specifically designed for evaluating the effectiveness of implementation, such as the Consolidated Framework for Implementation Research or the Promoting Action on Research Implementation in Health Services (PARiHS). In general, these governance and implementation frameworks emphasize sound change management and methods derived from implementation science that undoubtedly apply to implementation of AI tools (Damschroder et al., 2009; Rycroft-Malone, 2004).

Nearly all approaches integrate concepts of change management and incorporate the basic elements that should be familiar because they are routinely applied in health care improvement activities. These concepts, and how they are adapted to the specific task of AI implementation, are included in Table 6-3.

It must be recognized that even when these steps are taken by competent leadership, the process may not proceed as planned or expected. Health care delivery organizations are typically large and complex. These concepts of how to achieve desired changes successfully continue to evolve and increasingly acknowledge the powerful organizational factors that inhibit or facilitate change (Braithwaite, 2018).

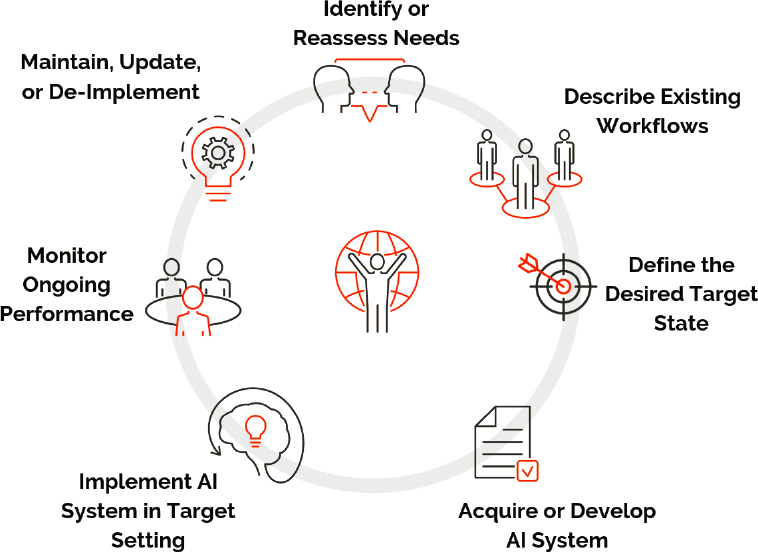

Developmental Life Cycle of AI Applications

As is the case with any health care improvement activity, the nature of the effort is cyclical and iterative, as is summarized in Figure 6-3. As discussed earlier, the process begins with clear identification of the clinical problem or need to be addressed. Often the problem will be one identified by clinicians or administrators as a current barrier or frustration or as an opportunity to improve clinical or operational processes. Even so, it is critical for the governance process to delineate the extent and magnitude of the issue and ensure that it is not idiosyncratic and that there are not simpler approaches to addressing the problem, rather than undertaking a major IT project. It is essential to delineate existing workflows, and this usually entails in-depth interviews with staff and direct observation that assist with producing detailed flowcharts (Nelson et al., 2011). It is also important to define the desired outcome state, and all feasible options for achieving that outcome should be considered and compared. In addition, to the greatest extent feasible, at each relevant step in the development process, input should be sought from other stakeholders such as patients, end users, and members of the public.

Although AI applications are currently a popular topic and new products are being touted, it is important to recall that the field is near the peak of the Gartner Hype Cycle (see Chapter 4), and some solutions are at risk for overpromising the achievable benefit. Thus, it is likely that early adopters will spend more and realize less value than organizations that are more strategic and, perhaps, willing to defer investments until products are more mature and have been proven. Ultimately, it will be necessary for organizations to assess the utility of an AI application in terms of the value proposition. For example, in considering adoption of an AI application for prediction, it is possible that in certain situations, given the cost, logistical complexity, and efficacy of the action, there may not be feasible operating zones in which a prediction–action pair, as described below, has clinical utility. Therefore, assessing the value proposition of deploying AI in clinical settings has to include the utility of downstream actions triggered by the system along with the frequency, cost, and logistics of those actions.

| Implementation Task or Concept | Artificial Intelligence Relevant Aspects |
|---|---|
| Identifying the clinical or administrative problem to be addressed. | Consideration of the problem to be addressed should precede and be distinct from the selection and implementation of specific technologies, such as AI systems. |
| Assessing organizational readiness for change, which may entail surveying individuals who are likely to be affected. An example would be the Organizational Readiness to Change Assessment tool based on the Promoting Action on Research Implementation in Health Services framework (Helfrich et al., 2009). | It is important to include clinicians, information technology (IT) professionals, data scientists, and health care system leadership. These stakeholders are essential to effective planning for organizational preparation for implementing an AI solution. |
| Achieving consensus among stakeholders that the problem is important and relevant and providing persuasive information that the proposed solution is likely to be effective if adopted. | It is important to include clinicians, IT professionals, data scientists, and health care system leadership. These stakeholders are essential to effective planning for organizational preparation for implementing an AI solution. |
| When possible, applying standard organizational approaches that will be familiar to staff and patients without undue rigidity and determining what degree of customization will be permitted. | For AI technologies, this includes developing and adopting standards for approaches for how data are prepared, models are developed, and performance characteristics are reported. In addition, using standard user interfaces and education surrounding these technologies should be considered. |
| When possible, defining how adoption will improve workflow, patient outcomes, or organizational efficiency. | When possible, explicitly state and evaluate a value proposition, and, as important, assess the likelihood and magnitude of improvements with and without implementation of AI technologies. |
| Securing strong commitment from senior organizational leadership. | Typically, this includes organizational, clinical, IT and financial leaders for establishing governance and organizational prioritization strategies and directives. |
| Identifying strong local leadership, typically in the form of clinical champions and thought leaders. | Each AI system placed into practice needs a clinical owner(s) who will be the superusers of the tools, champion them, and provide early warning when these tools are not performing as expected. |
| Engaging stakeholders in developing a plan for implementation that is feasible and acceptable to users and working to identify offsets if the solution is likely to require more work on the part of users. | It is critical that AI tools be implemented in an environment incorporating user-centered design principles, and with a goal of decreasing user workload, either time or cognition. This requires detailed implementation plans that address changes in workflow, data streams, adoption or elimination of equipment if necessary, etc. |
| Providing adequate education and technical support during implementation. | Implementation of AI tools in health care settings should be done in concert with educational initiatives and both clinical champion and informatics/IT support that ideally is available immediately and capable of evaluating and remedying problems that arise. |
| Managing the unnecessary complexity that arises from the “choices” in current IT systems. | Identify and implement intuitive interfaces and optimal workflows through a user-centered design process. |
| Defining clear milestones, metrics, and outcomes to determine whether an implementation is successful. | The desirable target state for an AI application should be clearly stated, defined in a measurable way, and processes, such as automation or analytics, put into place to collect, analyze, and report this information in a timely manner. |
| Conducting after-action assessments that will inform further implementation efforts. | It is important to leverage the human–computer interaction literature to assess user perceptions, barriers, and lessons learned during implementation of AI tools and systems. |

Components of a Clinical Validation and Monitoring Program for AI Tools

The clinical validation of AI tools should be viewed as distinct from the technical validation described in Chapter 5. For AI, clinical validation has two key axes:

- Application of traditional medical hierarchy of evidence to support adoption and continued use of the AI tool. The hierarchy categorizes pilot data as the lowest level of evidence, followed by observational, risk-adjusted assessment results, and places results of clinical trials at the top of the classification scheme.

- Alignment of the AI target with the desired clinical state. For example, simply demonstrating a high level of predictive accuracy may not ensure improved clinical outcomes if effective interventions are lacking, or if the algorithm is predicting a change in the requirements of a process or workflow that may not have a direct link to downstream outcome achievements. Thus, it is critically important to define prediction–action pairs. Actions should generally not be merely improvements in information knowledge but should be defined by specific interventions that have been shown to improve outcomes.
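The idea of a prediction–action pair can be made concrete with a simple expected-value calculation. The following is a minimal sketch under stated assumptions, not a method prescribed by this chapter: the function name `net_value_per_patient` and every numeric input (prevalence, sensitivity, specificity, benefit, cost) are hypothetical values chosen only for illustration.

```python
def net_value_per_patient(prevalence, sensitivity, specificity,
                          benefit_true_positive, cost_per_action):
    """Expected net value, per screened patient, of acting on every alert.

    All inputs are illustrative assumptions:
    - benefit_true_positive: value gained when the action reaches a patient
      who truly has the condition (already scaled by how well the
      intervention works)
    - cost_per_action: cost incurred for every alerted patient
    """
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * (1 - specificity)
    alert_rate = true_pos + false_pos
    return true_pos * benefit_true_positive - alert_rate * cost_per_action


# The same hypothetical model paired with two hypothetical actions:
# one with a large benefit per true positive, one with a small benefit.
effective = net_value_per_patient(0.05, 0.80, 0.90,
                                  benefit_true_positive=1000,
                                  cost_per_action=100)
ineffective = net_value_per_patient(0.05, 0.80, 0.90,
                                    benefit_true_positive=100,
                                    cost_per_action=100)
```

With identical predictive accuracy, the first pairing yields a positive expected value and the second a negative one, which is the sense in which accuracy alone may not translate into clinical utility.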

Given the novelty of AI, the limited evidence of its successful use to date, the limited regulatory frameworks around it, and that most AI tools depend on nuances of local data (and the clinical workflows that generate these data), ongoing monitoring of AI as it is deployed in health care is critical for ensuring its safe and effective use. Ongoing evaluation should be based on prediction–action pairs, as discussed earlier in this chapter, and should involve assessment of factors such as

- how often the AI tool is accessed and used in the process of care;

- how often recommendations are accepted and the frequency of overrides (with reasons if available);

- in settings where the data leave the EHR, logs of data access, application programming interface (API) calls, and privacy changes;

- measures of clinical safety and benefit, optimally in the form of agreed-upon outcome or process measures;

- organizational metrics relevant to workflow or back-office AI;

- user-reported issues, such as perceived inaccurate recommendations, untimely or misdirected prompts, or undue distractions;

- records of ongoing maintenance work (e.g., data revision requests); and

- model performance against historical data (e.g., loss of model power due to changes in documentation).
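Several of the factors listed above, such as how often recommendations are accepted or overridden, can be tallied directly from an alert log. The sketch below is illustrative only: the event fields (`shown`, `accepted`, `override_reason`) and the function `summarize_alert_log` are hypothetical and do not correspond to any particular EHR or vendor schema.

```python
from collections import Counter


def summarize_alert_log(events):
    """Summarize acceptance and override behavior from alert events.

    Each event is a dict with hypothetical keys:
    'shown' (bool), 'accepted' (bool), 'override_reason' (str or None).
    """
    shown = [e for e in events if e["shown"]]
    accepted = sum(1 for e in shown if e["accepted"])
    overrides = [e for e in shown if not e["accepted"]]
    # Group overrides by stated reason; missing reasons are counted separately.
    reasons = Counter(e["override_reason"] or "not given" for e in overrides)
    n = len(shown)
    return {
        "alerts_shown": n,
        "acceptance_rate": accepted / n if n else None,
        "override_rate": len(overrides) / n if n else None,
        "override_reasons": dict(reasons),
    }


# Hypothetical log entries for illustration.
log = [
    {"shown": True, "accepted": True, "override_reason": None},
    {"shown": True, "accepted": False, "override_reason": "already treated"},
    {"shown": True, "accepted": False, "override_reason": None},
    {"shown": True, "accepted": False, "override_reason": "already treated"},
    {"shown": False, "accepted": False, "override_reason": None},
]
summary = summarize_alert_log(log)
```

A summary of this kind could be produced on a regular schedule and reviewed alongside the clinical outcome and process measures described in the list.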

Clinical Outcome Monitoring

The complexity and extent of local evaluation and monitoring may necessarily vary depending on the way AI tools are deployed into the clinical workflow, the clinical situation, and the type of CDS being delivered, as these will in turn define the clinical risk attributable to the AI tool.

The International Medical Device Regulators Forum (IMDRF) framework for assessing the risk of software as a medical device (SaMD) is a potentially useful approach to developing SaMD evaluation and monitoring strategies tailored to the level of potential risk posed by the clinical situation where the SaMD is employed. Although the IMDRF framework is currently used to identify SaMD that require regulation, its conceptual model is one that might be helpful in identifying the need for evaluating and monitoring AI tools, both in terms of local governance and larger studies. The IMDRF framework focuses on the clinical acuity of the location of care (e.g., intensive care unit versus general preventive care setting), type of decision being suggested (immediately life-threatening versus clinical reminder), and type of decision support being provided (e.g., interruptive alert versus invisible “nudge”). In general, the potential need for evaluation rises in concert with the clinical setting and decision acuity, and as the visibility of the CDS falls (and the opportunity for providers to identify and catch mistakes becomes lower).

For higher-risk AI tools, a focus on clinical safety and effectiveness (from either a noninferiority or superiority perspective) is of paramount importance even as other metrics (e.g., API data calls, user experience information) are considered. High-risk tools will likely require evidence from rigorous studies for regulatory purposes and will certainly require substantial monitoring at the time of and following implementation. For low-risk clinical AI tools used at point of care, or those that focus on administrative tasks, evaluation may rightly focus on process-of-care measures and metrics related to the AI’s usage in practice to define its positive and negative effects. We strongly endorse implementing all AI tools using experimental methods (e.g., randomized controlled trials or A/B testing) where possible. Large-scale pragmatic trials at multiple sites will be critical for the field to grow but may be less necessary for local monitoring and for management of an AI formulary. In some instances, because of costs, time constraints, or other limitations, a randomized trial may not be feasible. In these circumstances, quasi-experimental approaches such as stepped-wedge designs or even carefully adjusted retrospective cohort studies may provide valuable insights. Monitoring outcomes after implementation will permit careful assessment, in the same manner that systems regularly examine drug usage or order sets, and may be able to draw on data that are collected by the AI tool itself to provide a monitoring platform. Recent work has revealed that naive evaluation of AI system performance may be overly optimistic, underscoring the need for more thorough evaluation and validation.

In one such study (Zech et al., 2018), researchers evaluated the ability of a clinical AI application that relied on imaging data to generalize across hospitals. Specifically, they trained a neural network to diagnose pneumonia from patient radiographs in one hospital system and evaluated its diagnostic ability on external radiographs from different hospital systems, with their results showing that performance on external datasets was significantly degraded. The AI application was unable to generalize across hospitals due to differences between the training data and evaluation data, a well-known but often ignored problem termed dataset shift (Quiñonero-Candela et al., 2009; Saria and Subbaswamy, 2019). In this instance, Zech and colleagues (2018) showed that large differences in the prevalence of pneumonia between populations caused performance to suffer. However, even subtle differences between populations can result in significant performance changes (Saria and Subbaswamy, 2019). In the case of radiographs, differences between scanner manufacturers or type of scanner (e.g., portable versus nonportable) result in systematic differences in radiographs (e.g., inverted color schemes or inlaid text on the image). Thus, in the training process, an AI system can be trained to very accurately determine which hospital system (and even which department within the system) a particular radiograph came from (Zech et al., 2018) and to use that information in making its prediction, rather than using more generalizable patient-based data.
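One simple way to see why a difference in prevalence matters is to hold a classifier's sensitivity and specificity fixed and compute its positive predictive value at two sites. This is a simplified illustration of a single mechanism, not a reproduction of the cited study; the prevalence, sensitivity, and specificity values are hypothetical.

```python
def positive_predictive_value(prevalence, sensitivity, specificity):
    """Probability that a positive prediction is correct (Bayes' rule)."""
    true_pos = prevalence * sensitivity
    false_pos = (1 - prevalence) * (1 - specificity)
    return true_pos / (true_pos + false_pos)


# Hypothetical sites: identical sensitivity and specificity,
# but very different disease prevalence.
ppv_training_site = positive_predictive_value(0.30, 0.90, 0.90)
ppv_external_site = positive_predictive_value(0.02, 0.90, 0.90)
```

Under these assumed numbers, roughly four of five positive predictions are correct at the high-prevalence site, while fewer than one in five are correct at the low-prevalence site, even though the model itself is unchanged.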

Clinical AI performance can also deteriorate within a site when practices, patterns, or demographics change over time. As an example, consider the policy by which physicians order blood lactate measurements. Historically, it may have been the case that, at a particular hospital, lactate measurements were only ordered to confirm suspicion of sepsis. A clinical AI tool for predicting sepsis that was trained using historical data from this hospital would be vulnerable to learning that the act of ordering a lactate measurement is associated with sepsis rather than the elevated value of the lactate. However, if hospital policies change and lactate measurements are more commonly ordered, then the association that had been learned by the clinical AI would no longer be accurate. Alternatively, if the patient population shifts, for example, to include more drug users, then elevated lactate might become more common and the value of lactate being measured would again be diminished. In both the case of changing policy and of patient population, performance of the clinical AI application is likely to deteriorate, resulting in an increase of false-positive sepsis alerts.

More broadly, such examples illustrate the importance of careful validation in evaluating the reliability of clinical AI. A key means for measuring reliability is through validation on multiple datasets. Classical algorithms that are applied natively or used for training AI are prone to learning artifacts specific to the site that produced the training data or specific to the training dataset itself. There are many subtle ways that site-specific or dataset-specific bias can occur in real-world datasets. Validation using external datasets will show reduced performance for models that have learned patterns that do not generalize across sites (Schulam and Saria, 2017). Other factors that could influence AI prediction might include insurance coverage, discriminatory practices, or resource constraints. Overall, when there are varying, imprecise measurements or classifications of outcomes (i.e., labeling cases and controls), machine learning methods may exhibit what is known as causality leakage (Bramley, 2017) and label leakage (Ghassemi et al., 2018). An example of causality leakage in a clinical setting would be when a clinician suspects a problem and orders a test, and the AI uses the test itself to generate an alert, which then causes an action. Label leakage is when information about a targeted task outcome leaks back into the features used to generate the model.

While external validation can reveal potential reliability issues related to clinical AI performance, external validation is reactive in nature because differences between training and evaluation environments are found after the fact due to degraded performance. It is more desirable to detect and prevent problems proactively to avoid failures prior to or during training. Recent work in this direction has produced proactive learning methods that train clinical AI applications to make predictions that are invariant to anticipated shifts in populations or datasets (Schulam and Saria, 2017). For example, in the lactate example above, the clinical AI application can learn a predictive algorithm that is immune to shifts in practice patterns (Saria and Subbaswamy, 2019). Doing so requires adjusting for confounding, which is only sometimes possible, for instance, when the data meet certain quality requirements. When they can be anticipated, these shifts can be prespecified by model developers and included in documentation associated with the application. By refraining from learning predictive relationships that are likely to change, performance is more likely to remain robust when deployed at new hospitals or under new policy regimes. Beyond proactive learning, these methods also provide a means for understanding susceptibility to shifts for a given clinical AI model (Subbaswamy and Saria, 2018; Subbaswamy et al., 2019). Such tools have the potential to prevent failures if implemented during the initial phase of approval.

In addition to monitoring overall measures of performance, evaluating performance on key patient subgroups can further expose areas of model vulnerability: High average performance overall is not indicative of high performance across every relevant subpopulation. Careful examination of stratified performance can help expose subpopulations where the clinical AI model performs poorly and therefore poses higher risk. Furthermore, tools that detect individual points where the clinical AI is likely to be uncertain or unreliable can flag anomalous cases. By introducing a manual audit for these individual points, one can improve reliability during use (e.g., Schulam and Saria, 2019; Soleimani et al., 2018). Traditionally, uncertainty assessment was limited to the use of specific classes of algorithms for model development. However, recent approaches have led to wrapper tools that can audit some black box models (Schulam and Saria, 2019). Logging cases flagged as anomalous or unreliable and performing a review of such cases from time to time may be another way to bolster postmarketing surveillance, and FDA requirements for such surveillance could require such techniques.
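As a rough sketch of the two ideas in this paragraph, the following computes accuracy per subgroup and lists individual cases to route to manual audit. The names `stratified_accuracy` and `flag_uncertain` are hypothetical, and flagging predictions that fall close to the decision threshold is a crude stand-in assumed here for illustration; it is not the uncertainty-auditing approach of the cited authors.

```python
from collections import defaultdict


def stratified_accuracy(records, threshold=0.5):
    """records: iterable of (subgroup, label, predicted_probability)."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, label, prob in records:
        totals[group] += 1
        hits[group] += int((prob >= threshold) == bool(label))
    return {group: hits[group] / totals[group] for group in totals}


def flag_uncertain(records, threshold=0.5, margin=0.1):
    """Indices of cases whose probability lies within `margin` of the threshold."""
    return [i for i, (_, _, prob) in enumerate(records)
            if abs(prob - threshold) <= margin]


# Hypothetical toy data: subgroup "A" is predicted well, subgroup "B" is not.
records = [
    ("A", 1, 0.9), ("A", 0, 0.1), ("A", 1, 0.8), ("A", 0, 0.2),
    ("B", 1, 0.45), ("B", 0, 0.55), ("B", 1, 0.7), ("B", 0, 0.3),
]

by_group = stratified_accuracy(records)
overall = sum(int((p >= 0.5) == bool(y)) for _, y, p in records) / len(records)
audit_queue = flag_uncertain(records)
print(overall, by_group, audit_queue)
```

In this toy data the overall accuracy looks acceptable while one subgroup performs no better than chance, which is the pattern that stratified reporting is meant to expose.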

AI Model Maintenance

As discussed above, there is a large body of work indicating that model performance (whether of AI or traditional models) degrades when models are applied to another health care system with systematic differences from the system where they were derived (Koola et al., 2019). Deterioration of model performance can also occur within the same health care system over time, as clinical care environments evolve due to changes in background characteristics of the patients being treated, overall population rates of exposures and outcomes of interest, and clinical practice as new evidence is generated (Davis et al., 2017; Steyerberg et al., 2013). In addition, systematic data shifts can occur if the implementation of AI itself changes clinical care (Lenert et al., 2019).
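One simple way to watch for this kind of deterioration over time is to track the ratio of observed to expected events in each period. The sketch below assumes that ratio as the monitoring statistic and a fixed tolerance of 0.2; both choices, the function names, and the data are illustrative assumptions rather than recommendations from the cited studies.

```python
from collections import defaultdict


def observed_expected_by_period(records):
    """records: iterable of (period, label, predicted_probability).

    Returns {period: observed events / expected events}. Assumes the
    predicted probabilities in each period sum to more than zero.
    """
    observed = defaultdict(float)
    expected = defaultdict(float)
    for period, label, prob in records:
        observed[period] += label
        expected[period] += prob
    return {p: observed[p] / expected[p] for p in sorted(expected)}


def drifted_periods(oe_ratios, tolerance=0.2):
    """Periods whose observed/expected ratio departs from 1 by more than tolerance."""
    return [p for p, ratio in oe_ratios.items() if abs(ratio - 1.0) > tolerance]


# Hypothetical toy data: the model underpredicts events in the last period.
records = [
    ("2017", 1, 0.5), ("2017", 0, 0.5),
    ("2018", 1, 0.25), ("2018", 0, 0.25), ("2018", 0, 0.25), ("2018", 0, 0.25),
    ("2019", 1, 0.25), ("2019", 1, 0.25), ("2019", 1, 0.25), ("2019", 0, 0.25),
]

ratios = observed_expected_by_period(records)
flagged = drifted_periods(ratios)
print(ratios, flagged)
```

A ratio well above 1 means the model underpredicts events in that period. A ratio well below 1 means it overpredicts.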

There are a number of approaches used to account for systematic changes in source data used by AI applications that have largely been adapted from more traditional statistical methods applied to risk models (Moons et al., 2012). These methods range from completely regenerating models on a periodic basis to recalibrating models using a variety of increasingly complex methods. However, there are evolving areas of research into how frequently to update, what volume and types of data are necessary for robust performance maintenance, and how to scale these surveillance and updating activities across what is anticipated to be a high volume of algorithms and models in clinical practice (Davis et al., 2019; Moons et al., 2012). Some of the risks are analogous to those of systems in which Boolean rule–based CDS tools were successfully implemented but the continual addition of guideline-based reminders and CDS in an EHR became unsustainable (Singh et al., 2013). Without automation and the appropriate scaling and standardization of knowledge, management systems for these types of CDS will face severe challenges.
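At the simple end of the recalibration range mentioned above is an intercept update: every prediction is shifted by the same amount on the log-odds scale so that the mean predicted risk matches the observed event rate in recent data. The following is a minimal sketch under that assumption. The function names and data are hypothetical, the probabilities must lie strictly between 0 and 1, and the more complex methods the text alludes to (for example, refitting slopes or full model regeneration) are not shown.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def intercept_update(probs, labels, lo=-10.0, hi=10.0, tol=1e-9):
    """Find the log-odds shift that makes mean predicted risk equal the observed rate.

    Uses bisection, which works because the mean shifted probability
    increases monotonically with the shift.
    """
    target = sum(labels) / len(labels)
    logits = [logit(p) for p in probs]

    def mean_shifted(delta):
        return sum(sigmoid(z + delta) for z in logits) / len(logits)

    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if mean_shifted(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def recalibrate(probs, delta):
    """Apply a log-odds shift to each probability."""
    return [sigmoid(logit(p) + delta) for p in probs]


# Hypothetical recent data: the model predicts a mean risk of 0.5,
# but only 1 of 4 patients had the outcome.
probs = [0.2, 0.4, 0.6, 0.8]
labels = [0, 0, 0, 1]

delta = intercept_update(probs, labels)
updated = recalibrate(probs, delta)
print(delta, sum(updated) / len(updated))
```

An intercept update preserves the ranking of patients, so it corrects systematic over- or underprediction without changing discrimination.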

AI AND INTERPRETABILITY

A lack of transparency and interpretability in AI-derived recommendations is one issue that has received considerable visibility and has often been cited as a limitation for use of AI in clinical applications. Lack of insight into methods and data employed to develop and operate AI models tends to provoke clinicians’ questions about the clinical plausibility of the tools, the extent to which the tools can provide clinical justification and reassurance to the patient and provider making care decisions, and potential liability, including implications for insurance coverage. In addition, there is a tendency to question whether observed associations utilized within the model can be used to identify specific clinical or system-level actions that should be taken, or whether they can reveal novel and unsuspected underlying pathophysiologies. These issues are particularly complex as they relate to risk prediction models that can readily be validated in terms of predictive accuracy but for which the inclusion of data elements may not be based on biological relationships.

Requirements for interpretability are likely to be determined by a number of factors, including

- medical liability and federal guidelines and recommendations for how AI is to be used in health care;

- the medical profession’s and the public’s increasing trust in their reliance on AI to manage clinical information;

- AI tools’ effect on current human interactions and design, which may be prepared for to some extent through advance planning for appropriate adoption and implementation into current workflows (Dudding et al., 2018; Hatch et al., 2018; Lynch et al., 2019; Wachter, 2015); and

- expectations of the general public regarding the safety and efficacy of these systems.

One area of active innovation is where and how AI outputs are synthesized and displayed to the end user, in many cases to assist in interpretability. Innovations include establishing new methods, such as parallel models where one is used for core computation and the other for interpretation (Hara and Hayashi, 2018; Krause et al., 2016; Turner, 2016). Others utilize novel graphical displays and data discovery tools that sit alongside the AI to educate and help users in health care settings as they become comfortable using the recommendations.

There remains a paradox, however, because machine learning produces algorithms based upon features that may not be readily interpretable. In the absence of absolute transparency, stringent standards for performance must be monitored and ensured. We may not understand all of the components upon which an algorithm is based, but if the resulting recommendations are highly accurate, and if surveillance of the performance of the AI system over time is maintained, then we might continue to trust it to perform the assigned task. A burgeoning number of applications for AI in the health care system do not assess end users’ needs for the level of interpretability. Although the most stringent criteria for transparency are within the point-of-care setting, there are likely circumstances under which accuracy may be desired over transparency. Regardless of the level of interpretability of the outputs of the AI algorithms, considerations for users’ requirements should be addressed during development. With this in mind, there are a few key factors related to the use and adoption of AI tools that are algorithmically nontransparent but worthy of consideration by clinicians and health care delivery systems.

Although the need for algorithm transparency at a granular level is probably overstated, descriptors of how data were collected and aggregated are essential, comparable to the inclusion/exclusion criteria of a clinical trial. For models that seek to produce predictions only (i.e., suggest an association between observable data and an outcome), at a minimum we believe that it is important to know the populations for which AI is not applicable. This will make informed decisions about the likely accuracy of use in specific situations possible and help implementers avoid introducing systematic errors related to patient socioeconomic or documentation biases. For example, implementers might consider whether the data were collected in a system similar to their own. The generalizability of data sources is a particularly important consideration when evaluating the suitability of an AI tool, because those that use data or algorithms that have been derived outside the target environment are likely to be misapplied. This applies to potential generalizability limitations among models derived from patient data within one part of a health system and then spread to other parts of that system.

For models that seek to suggest therapeutic targets or treatments—and thus imply a causal link or pathway—a higher level of scrutiny of the data assumptions and model approaches should be required. Closer examination is required because these results are apt to be biased by the socioeconomic and system-related factors mentioned above as well as by well-described issues such as allocation and treatment biases, immortal time biases, and documentation biases. At present, AI tools are incapable of accounting for these biases in an unsupervised fashion. Therefore, for the foreseeable future, this class of AI tools will require robust, prospective study before deployment locally and may require repeated recalibrations and reevaluation when expanded outside their original setting.

Risk of a Digital Divide

It should be evident from this chapter that in this early phase of AI development, adopting this technology requires substantial resources. Because of this barrier, only well-resourced institutions may have access to AI tools and systems, while institutions that serve less affluent and disadvantaged individuals will be forced to forgo the technology. Early on, when clinical AI remains in rudimentary stages, this may not be terribly disadvantageous. However, as the technology improves, the digital divide may widen the significant disparities that already exist between institutions. This would be ironic because AI tools have the potential to improve quality and efficiency where the need is greatest. An additional potential risk is that AI technology may be developed in environments that exclude patients of different socioeconomic, cultural, and ethnic backgrounds, leading to poorer performance in some groups. Early in the process of AI development, it is critical that we ensure that this technology is derived from data gathered from diverse populations and that it be made available to affluent and disadvantaged individuals as it matures.

KEY CONSIDERATIONS

As clinical AI applications become increasingly available, marketing of these tools is apt to intensify and be accompanied by claims regarding improved clinical outcomes or improved efficiency of care, which may or may not be well founded. Health care delivery systems and hospitals will need to take a thoughtful approach in the evaluation, decision making, and adoption of these tools that incorporates considerations regarding organizational governance and postdevelopment technical issues (i.e., maintenance of systems) as well as clinical considerations that are all essential to successful implementation.

Effective IT governance is essential for successful deployment of AI applications. Health systems must create or adapt their general IT governance structures to manage AI implementation.

- The clinical and administrative leadership of health care systems, with input from all relevant stakeholders such as patients, end users, and the general public, must define the near- and far-term states that would be required to measurably improve workflow or clinical outcomes. If these target states are clearly defined, AI is likely to positively affect the health care system through efficient integration into the EHR, population health programs, and ancillary and allied health workflows.

- Before deploying AI, health systems should assess through stakeholder and user engagement, especially patients, consumers, and the general public, the degree to which transparency is required for AI to operate in a particular use case. This includes determining cultural resistance and workflow limitations that may dictate key interpretability and actionability requirements for successful deployment.

- Through IT governance, health systems should establish standard processes for the surveillance and maintenance of AI applications’ performance and, if at all possible, automate those processes to enable the scalable addition of AI tools for a variety of use cases.

- IT governance should engage health care system leadership, end users, and target patients to establish a value statement for AI applications. This will include analyses to ascertain the potential cost savings and/or clinical outcome gains from implementation of AI.

- AI development and implementation should follow established best-practice frameworks in implementation science and software development.

- Because AI remains in early developmental stages, health systems should maintain a healthy skepticism about its advertised benefits. Systems that do not possess strong research and advanced IT capabilities should likely not be early adopters of this technology.

- Health care delivery systems should strive to adopt and deploy AI applications in the context of a learning health system.

- Efforts to avoid introducing social bias in the development and use of AI applications are critical.

REFERENCES

Abdi, J., A. Al-Hindawi, T. Ng, and M. P. Vizcaychipi. 2018. Scoping review on the use of socially assistive robot technology in elderly care. BMJ Open 8(2):e018815.

Bandos, A. I., H. E. Rockette, T. Song, and D. Gur. 2009. Area under the free-response ROC curve (FROC) and a related summary index. Biometrics 65(1):247–256.

Bates, D. W. 2012. Clinical decision support and the law: The big picture. Saint Louis University Journal of Health Law & Policy 5(2):319–324.

Blane, D., E. Brunner, and R. Wilkinson. 2002. Health and social organization: Towards a health policy for the 21st century. New York: Routledge.

Braithwaite, J. 2018. Changing how we think about healthcare improvement. BMJ 361:k2014.