4

Image Processing and Detection

The third session of the workshop, chaired by Chuck Stewart (Rensselaer Polytechnic Institute), discussed issues related to image processing, such as imaging platforms, color and illumination correction, segmentation, recognition, and species detection. Some useful references for this session’s topics, as suggested by the workshop program committee, include Dawkins et al., 2013; Kaeli and Singh, in review; Tolimieri et al., 2008; Treibitz et al., 2012; and Singh et al., 2007. Presentations were made by Clay Kunz (Google), Hanumant Singh (Woods Hole Oceanographic Institution), Ruzena Bajcsy (University of California, Berkeley), and Chuck Stewart.

Clay Kunz, Google

Clay Kunz began by stating that platforms to study fish populations are a solved research problem: there are now a variety of platforms (such as AUVs, diver-carried rigs, towed cameras, remotely operated vehicles, and variable-ballast floats) that can be used for a given application. He explained that the variety of platforms enables the production of significant amounts of video or still imagery—as much as 100 terabytes of image data. Many different research groups are examining this data, leading to a lack of coherence in the community about research methods. The community would benefit, Kunz said, from the automated semantic analysis of image content. However, he posited that techniques used to recognize and classify human faces do not apply well to the classification of fish for two possible reasons:

- Computer vision makes invalid assumptions underwater.

- Locally valid corrections may not generalize to other image groups.

One invalid assumption that can be made in computer vision, explained Kunz, is the geometric calibration of the camera when placed underwater. However, he stated that current lens distortion models can compensate adequately for refraction underwater (Treibitz et al., 2012). With reasonable calibration, one can develop 3D imagery from stereo cameras, develop structure from motion, and fuse mono or stereo imagery with bathymetry to image a fish against the seafloor.

A second class of invalid assumptions in computer vision, said Kunz, is in radiometric calibration, and these issues are more complicated. He said that certain radiometric assumptions in computer vision may no longer be valid, including the following:

- Underwater scattering and absorption,

- Non-diffuse light sources,

- Non-Lambertian surfaces,1 and

- Fluorescence and other adaptations.

To overcome radiometric calibration issues, Kunz suggested choosing the best platform to obtain physical proximity to the scene, using cameras with high dynamic range, using training data, and controlling the light sources as well as possible. He noted that radiometric correction techniques do not necessarily translate well across all underwater environments.

Kunz concluded by noting that today, the perception of fisheries work is that the problems are applied rather than theoretical and do not result in Ph.D. research or scholarly publications. He suggested this mind-set be changed. He also pointed out that with cloud computing, storage is now inexpensive, so data sets can be made more widely accessible. In addition, new types of sensors and higher-fidelity cameras are changing rapidly and in useful ways.

IMAGE UNDERSTANDING UNDERWATER

Hanumant Singh, Woods Hole Oceanographic Institution

Hanumant Singh began his presentation by emphasizing the need for vertical integration of all components that analyze underwater images: sensors, platforms, algorithms, and applications. He indicated that illumination-corrected,

__________________

1 A Lambertian surface is one in which the apparent brightness of the surface is independent of the viewing angle; a non-Lambertian surface brightness does vary by angle.

high-resolution imagery is now readily available. Similarly, a number of vehicles and platforms exist from which one can obtain high-quality data. Singh therefore hypothesized that algorithms provide the greatest opportunity for improvements to underwater image analysis, particularly algorithms in the areas of segmentation and classification.

In Singh’s view, fisheries-independent stock assessments have the following challenges: spatial (how to examine large areas and generalize the counting done in small areas), temporal (how to examine the same areas the following week or year), dynamics and biases (how to avoid counting fish multiple times due to fish attraction and/or avoidance), and processing time (real-time feedback).

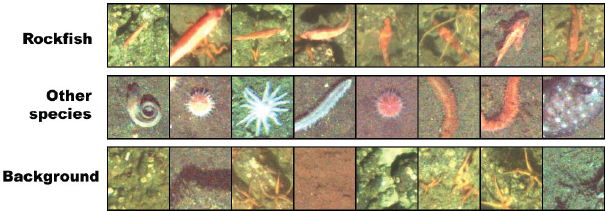

Singh described a specific example in the classification of rockfish. He indicated that rockfish identification and analysis is (or should be) a tractable problem, as rockfish are red and provide high contrast against their surroundings. He pointed out that when imaging rockfish one is also imaging the habitat—i.e., the background against which the fish is imaged. Habitat can be considered a conditional probability: certain types of habitat, such as rubble, mud, or sand, are more likely to result in an image with a fish. In the rockfish example, while their red color is relatively easy to find, they can be confused with other fish species or with elements in the background. This complexity is demonstrated in the images in Figure 4.1. While certain fish are attracted to a camera, AUV, or trawl, and other fish avoid the hardware, rockfish move slowly enough that avoidance is not a significant concern. Singh noted the value of computer-assisted systems, rather than fully automated systems, because even a partial identification would help the fisheries community to measure abundance and classify species.

FIGURE 4.1 Images showing the challenges associated with identifying images of rockfish, even though rockfish are a bright red. The top row shows rockfish images. The middle row show images of other species. The bottom row shows images of background objects. SOURCE: Courtesy of H. Singh, N. Loomis; copyright Woods Hole Oceanographic Institution.

Singh explained that camouflage can affect both human and automated classification. He described work in real-time segmentation of images to find camouflaged marine life, looking specifically at the classification of octopuses and skates. He noted that in counting camouflaged animals a certain number of false positives will need to be tolerated.

Light-field cameras, Singh said, have the potential to help with seeing through turbid water, provide 3D reconstructions, and provide high dynamic range. Calibration may be a concern for light-field cameras because of differences with the air/water interface, although Singh believes this is a solvable problem.

UNDERWATER TELE-IMMERSION: POTENTIAL AND CHALLENGES

Ruzena Bajcsy, University of California, Berkeley

Ruzena Bajcsy explained that current computer vision technology allows real-time, 360-degree capture of the surrounding world. Tele-immersion is the digital capture and representation of the surrounding world. Her vision, she explained, is to develop a Skype of higher order, one in which people could communicate and interact in the same environment when not co-located, and she posed the question of whether such a system would be useful to fisheries scientists. To enable tele-immersion, she explained, three systems are necessary: capture (computer vision), communication (networking to bring two people into the same environment in real time), and display (3D, real-time rendering of the environment).

Bajcsy described a 45-camera system developed at the University of California, Berkeley, to provide a field of view that encompasses a complete human body. Her research group conducted a test exercise in which two people (one at the University of California, Berkeley, and one at the University of Illinois, Urbana-Champaign) danced together. She then suggested extending this concept of tele-immersion to the underwater environment, in which fishermen can interact with the environment and the fish. There may also be teaching and analysis applications to fisheries tele-immersion.

Bajcsy pointed out that challenges associated with tele-immersion systems include the following:

- Large-scale camera systems distributed over networks (though she noted that camera technology is rapidly improving);

- Real-time processing (3D reconstruction and rendering);

- Configuration and coordination of 3D/4D services, including calibration;

- Software synchronization;

- Portability;

- Ease of system maintenance; and

- Real-time operating system services on multi-core architectures.

Challenges in networking include the following:

- Large bandwidth demands,

- Real-time communication needs,

- Multi-stream coordination,

- Synchronizing multiple streams, and

- Different scales.

Challenges in display and rendering include the following:

- Immersive display of 3D video. Bajcsy indicated that reconstruction should be improved for more lifelike rendering and improved scalability. In the dancing example, the dancers’ bodies rendered well, but there was insufficient detail in rendering their faces.

- Merging 3D video with a collaborative virtual workspace.

- Synchronizing virtual workspaces to enable face-to-face virtual conversations.

Bajcsy explained that tele-immersion can have a number of applications, including in the geosciences, in archaeology (to capture and digitally reconstruct information across different institutions as archaeologists remove objects), and in health care (medical data visualization). She noted that a user can interact with himself in the tele-immersive environment via pre-recording.

Bajcsy explained that she analyzes data to determine the minimum number of observations needed to describe a system (she contrasted this to Terzopoulos, who modeled and synthesized fish via a large number of individual bones and muscles). She provided a specific example in which the motion of 12 subjects was captured via cameras and sound recordings. The subjects were asked to do different, simple activities (such as sitting or bending over) while Bajcsy measured the most energetic joints and used that information to classify different activities. She noted that this type of measurement system has medical applications; for instance, one can measure the efficacy of different interventions on the range of movement of patients with muscular dystrophy. Bajcsy provided a second example in which muscle strength is measured with a single electromyography sensor. She said that this system could have medical applications for the aging; as an aging person loses strength in a particular muscle or joint, a device could be customized to supply additional force needed to reinstate some of the original function.

UNDERWATER IMAGING AND DETECTION

Chuck Stewart, Rensselaer Polytechnic Institute

Chuck Stewart explained that HabCam (described by both Dvora Hart and Benjamin Richards) returns 500,000 images per day, most of them uninteresting—in other words, most of the images do not have any marine life in them. A key in underwater imaging and detection is to identify those images that do contain something of interest.

Light intensity is non-uniform on the seafloor, explained Stewart, both because light sources are not uniform and also because light attenuation depends on wavelength. Image illumination can be estimated, and light maps can be “learned” at multiple altitudes above the seafloor, developing a 3D lookup table based on the angle of the HabCam sensor. The only light source is the one carried by HabCam; the lookup tables, therefore, can restore uniformity to the images.

Stewart then described the algorithm used to detect sea scallops in HabCam images, which consists of the following steps:

- Preprocess. Color is replaced with the likelihood of color, which enhances uncommon colors.

- Detect candidate regions that are likely to contain scallops. Four independent extractors from image processing are used in concert.

- Extract large feature vectors. The feature vectors used are about 3,800 units long and include such information as color, edge properties, and texture.

- Classify. Stewart explained that multiple AdaBoost classifiers2 were used to determine whether an object is a brown scallop, white scallop, dead scallop, sand dollar, or clam. The substrate (sand, mud, or gravel) is hand-specified, and that information is used to determine the type of classifier to use.

- Make a final detection decision.

Stewart explained that no one classifier is clearly the best in a particular environment, although they all are efficient and provide reasonably accurate results. Overall detection rates vary from about 60 percent to about 95 percent, depending on the type of training and the substrate. Stewart noted that this success rate is consistent with human classifications from images. Automatic determination of the substrate will lead to automatic selection of classifiers.

Stewart concluded by noting that individual animals on land can be identified in a surprising number of species, including the elephant, jaguar, rhinoceros, seal,

__________________

2 AdaBoost, short for adaptive boosting, is a method of machine learning in which a number of weak, inaccurate classifiers are combined to make a more highly accurate prediction rule (Freund and Shapire, 1997).

whale shark, wild dog, and nautilus. He suggested that the techniques used for recognizing individuals in such cases may be useful in the underwater context as well. He recognized, though, that computer vision techniques cannot be adapted to the underwater fish environment without considering a number of important, and potentially messy, technical details. He also emphasized the use of contests as powerful tools to motivate the study of well-defined problems with clear data sets.