Appendix A

Combining Models

Multiple, diverse models of components or subsystems are commonly linked together to form a collective model of a complex system. The use of multiple models, often referred to as multimodeling, reduces the time needed to develop models, supports validation, and enables multiple teams to take part in the exercise (Carley et al., 2012b). However, multimodeling also requires some special considerations. It can be facilitated by simulation testbeds, workflow systems, and infrastructure that supports linking models together. But existing tools presume a common level of temporal and spatial resolution for all models, which is generally not the case, and they do not consider group resolution level, which is important in social system and geonetwork models. Hence, existing testbeds are insufficient for linking many types of models. In addition, using a model outside the investigation for which it was designed can lead to serious misinterpretations of the results. This risk is greater in a multimodeling framework, because most of the models will be used outside their original contexts.

This appendix describes five methods for linking models (docking, collaboration, interoperation, integration, and coupling), and requirements for developing and using combined models of complex systems.

METHODS FOR LINKING MODELS

Analysts often need to rapidly analyze complex systems and answer diverse questions about those systems. In principle, this functionality could be provided either by a unified model and analysis package or by a federation of models and tools. In a federated system, all models and tools need to be made interoperable by using standardized data formats and exchange languages and by aligning their temporal, spatial, and grouping processes. The approaches commonly used to meet these needs (docking, collaboration, interoperation, integration, and coupling) are described below. These approaches are not mutually exclusive, and two or more may be used in a model-based investigation or in composing a new system out of component models.

Docking

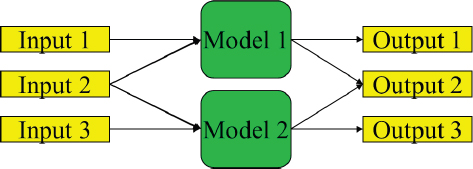

For computational models, docking refers to a type of alignment in which the docked models share some of the same input and generate some of the same output (Axtell et al., 1996; Burton and Obel, 1995; see Figure A.1). Docked models share some input, but each may also have additional unique input; likewise, each generates some output that is comparable across the models and possibly some that is unique to it. If two or more models can be shown to generate comparable output from the same input, they are considered aligned and are said to be docked. Within this scope, any differences in the theories inherent in the models or in the details of how they are programmed do not matter.

Advantages of docking are that it is economical, flexible, and extensible to many models. For sociotechnical models, where traditional methods of validation do not apply, docking is viewed as a form of validation. The idea is that any one theory, or any one instantiation of a theory, might be wrong, but if multiple models with diverse theoretical perspectives are docked, then the suite of models is capturing a robust and possibly overdetermined relation. Finally, docking supports aligning one model with another (Axtell et al., 1996; Burton and Obel, 1995), which clarifies what the modeling assumptions are and how they affect the results. Model alignment helps the user understand the tradeoffs and risks in using one model over another and brings to light the limitations of each docked model. Disadvantages of docking are that the procedure requires participation by the model developers or thorough documentation of the models, contributes nothing to theory development, and does not support reuse.
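To make the docking criterion concrete, the following minimal Python sketch runs two hypothetical models over the same shared inputs and checks whether their shared output agrees within a tolerance. The model functions, their inputs and unique outputs, and the 5 percent tolerance are illustrative assumptions, not part of any published docking procedure.

```python
import math

# Two hypothetical models of adoption in a population. Both take the shared
# inputs (population, contact_rate); model_b also takes a unique input.
def model_a(population, contact_rate):
    adopters = population * (1 - math.exp(-contact_rate))
    # growth_index is an output unique to model A
    return {"adopters": adopters, "growth_index": contact_rate * 10}

def model_b(population, contact_rate, memory_decay=0.0):
    adopters = population * (1 - math.exp(-contact_rate)) * (1 - memory_decay)
    # dropouts is an output unique to model B
    return {"adopters": adopters, "dropouts": population * memory_decay}

def docked(out_a, out_b, shared_outputs, rel_tol=0.05):
    """Return True if every shared output agrees within the relative tolerance."""
    return all(math.isclose(out_a[k], out_b[k], rel_tol=rel_tol) for k in shared_outputs)

# Compare the models across several settings of the shared inputs.
test_inputs = [{"population": 10_000, "contact_rate": r} for r in (0.1, 0.3, 0.5)]
results = [
    docked(model_a(**x), model_b(**x, memory_decay=0.02), ["adopters"])
    for x in test_inputs
]
print(all(results))  # True: shared outputs agree within 5% at every input
```

Only the shared output ("adopters") is compared; the unique outputs are ignored, and nothing in the check depends on how either model computes its result internally.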

Collaboration

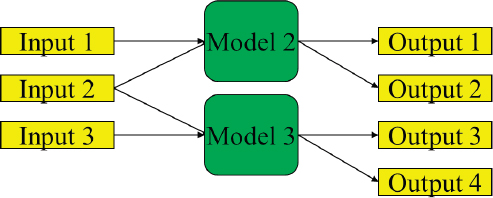

Collaboration is a federated approach in which two models that share input data are used in conjunction to produce a collection of results that can be used together (see Figure A.2). The models must have some input in common, but each may also use additional unique data, and each generates distinct outputs. Advantages of this approach are that it is economical, flexible, and extensible; is itself a form of validation (through triangulation); and supports the use of nonfused data. Disadvantages are that it requires moderate theory development and does not guarantee reuse.

Interoperation

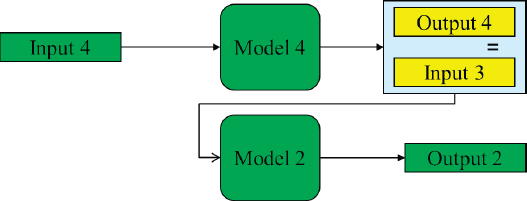

Interoperation occurs when the outputs of one model are the inputs to another. In this case, the models form a chain that implicitly merges their theories (Levis et al., 2011; see Figure A.3). Interoperation can occur at many levels (e.g., Tolk and Muguira, 2003), and it has numerous advantages: a federated system formed by interoperation is generally easy to use, extensible, flexible, highly reusable, and economical to develop, and it supports the development of theory. The key disadvantage is that making models interoperable often requires some code development and some theory development. For models composed out of parts, interoperation is easier if the models are in the same framework; at the same geographic, temporal, and group level of fidelity; and from the same theoretical tradition. In addition, the validity of a composed model depends on whether the component models have been validated and on whether the systems represented by the different models can be treated separately or instead interact in complex ways. The science of model integration and the limits of composability are active areas of research (Davis and Anderson, 2004).
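The chain structure of interoperation can be sketched as follows: the output of one model is passed directly as the input of the next, through a shared exchange format that carries units and temporal resolution so that the downstream model can reject input at the wrong resolution. This is a minimal illustration only; the `Record` format, both model functions, and their parameters are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Record:
    """Hypothetical shared exchange format: a value tagged with unit and time step."""
    name: str
    value: float
    unit: str
    time_step: str  # temporal resolution, e.g., "day" or "year"

def population_model(initial: float, growth_rate: float, years: int) -> Record:
    # Hypothetical upstream model: compound annual growth.
    return Record("population", initial * (1 + growth_rate) ** years, "persons", "year")

def demand_model(pop: Record, per_capita_demand: float) -> Record:
    # Hypothetical downstream model: checks that its input matches what it expects.
    if pop.name != "population" or pop.unit != "persons":
        raise ValueError(f"unexpected input: {pop.name} in {pop.unit}")
    if pop.time_step != "year":
        raise ValueError("demand_model expects annual data")
    return Record("water_demand", pop.value * per_capita_demand, "m3", "year")

# The chain: the output of one model is the input of the next.
pop = population_model(initial=1_000_000, growth_rate=0.02, years=10)
demand = demand_model(pop, per_capita_demand=50.0)
print(round(pop.value), round(demand.value))
```

Making the resolution explicit in the exchange format is one way to surface the misalignments noted above; bridging them (for example, aggregating daily data to annual) would require additional translation code.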

Integration

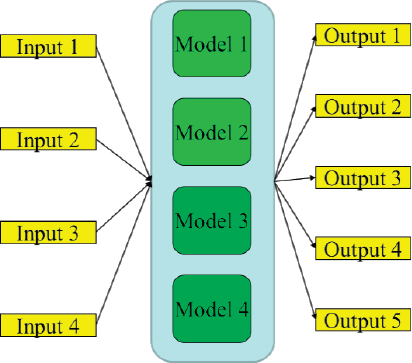

Integration produces a single monolithic system in which all submodels are incorporated into a single model, often by a single programmer (Morgan and Carley, 2012). In such a system, all inputs go into the monolithic model, which, in turn, generates all outputs (see Figure A.4). Monolithic models can be analytically powerful. However, they are often highly complex and require specialized functionalities or metrics, which can create a steep learning curve for analysts.

An advantage of integration is that the overall system may be easier to use and maintain because there is a single user interface and updates and changes are controlled by a single group. The model might also run faster because there are no duplicate subparts, and the overall system can be optimized. Finally, developing a monolithic system can lead to new theory development. The disadvantages are that monolithic systems are costly to develop and extend, difficult to validate and are never completely validated, have low extensibility and low flexibility, require extensive new code development, and are unlikely to be reused.

Integrated models can be modular. However, the difference between a modular integrated system and a system formed through interoperation and docking has to do, in part, with the pedigree of the models and the ease of bringing in new submodels. In a composable system, each of the modules may be from a different theoretical tradition, built by a different model team, and separately validated. In an integrated system, the modules are typically designed from a software performance perspective and are not driven by diverse theoretical conceptions. Integration is a more top-down approach to developing a complex model, whereas interoperation via docking and the other methods mentioned above is a more bottom-up approach. Integrated systems are typically controlled centrally, and it is often more difficult to add new modules from radically different perspectives to them than to bottom-up systems. In principle, both the bottom-up and top-down approaches could result in the same design. However, for simulations of sociotechnical systems, theoretical understanding is still relatively weak, and so the two approaches tend to produce different results. The integrative approach typically results in an early hardening of the model design, which prevents the integration of radical departures from early conceptualizations as new discoveries are made.

Coupling

In some situations, linking the models is insufficient because the models depend on one another. Combining dependent models is referred to as coupling. Coupled models are typically used to understand interactions between subsystems (e.g., see "Coupled Physical-Social System Models" in Chapter 4).

The development of coupled models requires an understanding of all components of the system being coupled and all the modeling paradigms being used (Zeigler et al., 2000). Whereas disciplinary experts may engage more heavily in the development of a subsystem model, those charged with integrating subsystems must understand the various subsystems and the way they are represented in the models. Typically, developing subsystem models with the intent of coupling ensures that common variables, units, spatial resolution, and temporal resolution are used and that the outputs produced by one subsystem are directly usable by another subsystem. Subsystem models that are developed independently and later coupled often require the development of methods for translating information from one subsystem to another or a lengthy redevelopment of one or more components. Developers often retain the ability to operate each subsystem individually, a configuration that allows faster run time when the question of interest is specific to an individual subsystem.

Coupled models are often developed with an interface that handles the transfer of information between subsystem models. For example, the Community Earth System Model has a “coupler” that passes information from one component to another (e.g., precipitation is passed from the atmosphere component to the land component). The Global Change Assessment Model uses a marketplace to pass information from one component to another (e.g., supply of bioenergy is passed from the agriculture module to the energy module via the marketplace).
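The coupler pattern described above can be sketched with two toy components that never call each other directly; a coupler advances them in lockstep and passes the fluxes between them (precipitation from atmosphere to land, evaporation from land back to atmosphere). This is a hypothetical illustration of the pattern, not the Community Earth System Model coupler or its interface; the component classes and rate constants are assumptions.

```python
class Atmosphere:
    """Toy atmosphere component: rains out a fraction of its moisture each step."""
    def __init__(self, moisture=10.0):
        self.moisture = moisture

    def step(self, evaporation):
        self.moisture += evaporation
        precipitation = 0.3 * self.moisture  # assumed 30% rain-out per step
        self.moisture -= precipitation
        return precipitation

class Land:
    """Toy land component: stores water and evaporates a fraction of it each step."""
    def __init__(self, soil_water=5.0):
        self.soil_water = soil_water

    def step(self, precipitation):
        self.soil_water += precipitation
        evaporation = 0.1 * self.soil_water  # assumed 10% evaporation per step
        self.soil_water -= evaporation
        return evaporation

class Coupler:
    """Owns the exchange of fluxes between components."""
    def __init__(self, atmosphere, land):
        self.atm, self.land = atmosphere, land
        self.evaporation = 0.0  # flux carried over from the previous step

    def run(self, n_steps):
        for _ in range(n_steps):
            precipitation = self.atm.step(self.evaporation)
            self.evaporation = self.land.step(precipitation)

coupler = Coupler(Atmosphere(), Land())
coupler.run(50)
# Water held in each component plus the flux in transit is conserved.
total = coupler.atm.moisture + coupler.land.soil_water + coupler.evaporation
print(round(total, 6))  # 15.0
```

Because each component only exposes a `step` method, either one can still be run on its own with prescribed inputs, which preserves the ability to operate subsystems individually.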

There are many challenges in developing and using coupled models. First, these models require interactions between different communities and models, which often have different languages and methods of operating. Second, coupled models are often computationally expensive. As a result, developers are often faced with a choice between including a complex subsystem model, developing an emulator of that subsystem, or excluding the subsystem. Third, coupled models include numerous processes and feedbacks, and so analysis of results can be challenging. Experiments need to be structured carefully to isolate the effect of different mechanisms on various systems.

Choosing an Approach to Linking Models

How models are linked together depends in part on their alignment. Three key dimensions need to be aligned: spatial, temporal, and organizational/group. At the spatial level, the issue is whether the models cover the same spatial region at the same level of granularity. For example, two city-level models of Morocco are highly aligned, whereas one model at the block level and one at the state level, or one generic model and one of Saudi Arabia, are highly unaligned. At the temporal level, the issue is whether the models cover the same time span at the same level of granularity. For example, two monthly models for 2000 are highly aligned, whereas one model at the day level in 1990 and one at the year level from 2000 to 2015 are highly unaligned. At the organizational level, the issue is whether the models cover the same collectives of individuals at the same level of granularity. For example, two models of individuals as separate agents are highly aligned, whereas one model at the small group level and one at the organizational level are highly unaligned.
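The three alignment checks can be sketched by describing each model's coverage and comparing extent and granularity dimension by dimension. This minimal sketch treats alignment as a yes/no question per dimension, whereas in practice it is a matter of degree; the `Coverage` fields and the example values are hypothetical, chosen to mirror the examples in the text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Coverage:
    region: str        # spatial extent, e.g., "Morocco"
    spatial_unit: str  # spatial granularity, e.g., "city"
    start_year: int
    end_year: int
    time_unit: str     # temporal granularity, e.g., "month"
    group_unit: str    # organizational granularity, e.g., "individual"

def alignment(a: Coverage, b: Coverage) -> dict:
    """Per dimension: do the two models cover the same extent at the same granularity?"""
    return {
        "spatial": (a.region, a.spatial_unit) == (b.region, b.spatial_unit),
        "temporal": (a.start_year, a.end_year, a.time_unit)
                    == (b.start_year, b.end_year, b.time_unit),
        "group": a.group_unit == b.group_unit,
    }

m1 = Coverage("Morocco", "city", 2000, 2000, "month", "individual")
m2 = Coverage("Morocco", "city", 2000, 2000, "month", "individual")
m3 = Coverage("Saudi Arabia", "state", 2000, 2015, "year", "organization")
print(alignment(m1, m2))  # {'spatial': True, 'temporal': True, 'group': True}
print(alignment(m1, m3))  # {'spatial': False, 'temporal': False, 'group': False}
```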

Geographic, temporal, and organizational alignment are necessary but may not be sufficient. For example, models might not be in alignment because of differences in the functions or processes used. One agent-based model might maximize a trivial utility function, whereas another might look at more complex matters and reflect cognitive biases. Even if aligned in space, time, and group, they would not be aligned cognitively.

The following questions can be used to help identify the best approach for combining models:

- Are the models operating in the same time window?
  - Yes: docking, interoperation, or integration
  - No: collaboration or interoperation

- Are the models taking into account the same actors?
  - Yes: docking, interoperation, or integration
  - No: collaboration or interoperation

- Are the models considering the same spatial region in the same timeframe?
  - Yes: docking, interoperation, or integration
  - No: collaboration or interoperation
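One way to combine the answers to these questions is to keep only the approaches suggested by every answer, as in the following sketch. Taking the intersection is an assumption made here for illustration; the questions themselves do not prescribe how to combine the answers.

```python
# Approaches suggested by a "yes" (True) or "no" (False) answer to each question.
CANDIDATES = {
    True: {"docking", "interoperation", "integration"},
    False: {"collaboration", "interoperation"},
}

def candidate_approaches(same_time_window: bool, same_actors: bool,
                         same_region_and_timeframe: bool) -> set:
    """Intersect the approaches suggested by each answer."""
    result = {"docking", "collaboration", "interoperation", "integration"}
    for answer in (same_time_window, same_actors, same_region_and_timeframe):
        result &= CANDIDATES[answer]
    return result

print(sorted(candidate_approaches(True, True, True)))   # ['docking', 'integration', 'interoperation']
print(sorted(candidate_approaches(True, False, True)))  # ['interoperation']
```

Under this reading, interoperation is always a candidate, because it appears under both answers to every question; mixed answers narrow the choice to interoperation alone.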


REQUIREMENTS FOR MULTIMODELING TECHNOLOGY

A toolkit to support the development and use of complex system models in a rapid, flexible, and robust manner has seven basic requirements: extensibility, interoperability, a common and extensible ontological framework, a common interchange language, data management, scalability, and robustness (Carley et al., 2007). These requirements are laid out below.

Extensibility

To help analysts answer a changing set of questions, modeling "toolkits need to be easily extensible so that already integrated tools can be refined and new measures and techniques can be added. Building toolkits as modular suites of independent packages enables more distributed software development and allows independent developers to create their own tools and connect them to a growing federation of toolkits. Such an integrated system can be facilitated by the use of common visualization and analysis tools, using XML interchange languages, and providing individual programs as web services, or at a minimum specifying their IO [input and output] in XML" (Carley et al., 2007, p. 1327).

Interoperability

To support interoperability "all tools embedded in a toolchain need to be capable of reading and writing the same data formats and data sets. As a result, output from one tool will be fit for input to other tools. This does not mean that each tool needs to be able to use all the data from the other tools, but each tool needs to be capable of operating on relevant subsets of data without altering the data format. While not a hard requirement for a toolchain, it would be beneficial to agree upon a common interchange language ... for input and output ... to ease the concatenation of existing tools created by various developers. Further key aspects of interoperability are the use of a common ontology for describing data elements, and the ability for tools to be called by other tools through scripts" (Carley et al., 2007, p. 1327).

Ontologies

Models of complex systems seek to represent the primary entities of the system, their relationships, and the attributes of the entities and relationships. Tools are needed to automatically derive ontologies of these entities, relationships, and attributes from the data or from theory. “For relational data, most of the focus has been on connections between agents (social networks). This approach has to be expanded for three reasons. First, multilink data sets became available recently, but there has been little success in defining ontologies with multiple entity classes. Second, most tools to date are built upon the assumption that there is only a single relation type at a time. Third, few data sets contain attributes, and so there has been little attention to what are appropriate attribute classes” (Carley et al., 2007, p. 1327). As a result, relatively few candidate ontologies exist.
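A data structure that supports multiple entity classes, multiple relation types, and attributes on both entities and relations (the capabilities the quoted passage notes most tools lack) might look like the following minimal sketch. The class names, entity classes, and relation types are hypothetical and do not represent any particular published ontology.

```python
from dataclasses import dataclass, field

@dataclass
class Entity:
    entity_id: str
    entity_class: str                          # e.g., "agent", "organization", "location"
    attributes: dict = field(default_factory=dict)

@dataclass
class Relation:
    source: str
    target: str
    relation_type: str                         # e.g., "knows", "member_of", "located_in"
    attributes: dict = field(default_factory=dict)

@dataclass
class MetaNetwork:
    entities: dict = field(default_factory=dict)
    relations: list = field(default_factory=list)

    def add_entity(self, entity):
        self.entities[entity.entity_id] = entity

    def add_relation(self, relation):
        # Both endpoints must already exist, so relations always refer to known entities.
        if relation.source not in self.entities or relation.target not in self.entities:
            raise KeyError("relation refers to an unknown entity")
        self.relations.append(relation)

    def network(self, relation_type, source_class=None, target_class=None):
        """Extract a single-relation network, optionally restricted by entity class."""
        return [
            (r.source, r.target) for r in self.relations
            if r.relation_type == relation_type
            and (source_class is None or self.entities[r.source].entity_class == source_class)
            and (target_class is None or self.entities[r.target].entity_class == target_class)
        ]

net = MetaNetwork()
net.add_entity(Entity("alice", "agent", {"age": 34}))
net.add_entity(Entity("bob", "agent"))
net.add_entity(Entity("acme", "organization"))
net.add_relation(Relation("alice", "bob", "knows", {"strength": 0.8}))
net.add_relation(Relation("alice", "acme", "member_of"))
print(net.network("member_of", "agent", "organization"))  # [('alice', 'acme')]
```

Extracting single-relation views lets existing tools that assume one relation type at a time still operate on a richer, multi-class data set.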

Interchange Language

A common data interchange language ensures the consistent and compatible representation of different networks, or of the same network at different states, and also facilitates data sharing and fusion. Therefore, network data collected using various techniques or by different people, stored and maintained in different databases with different structures, and used as input and output of various tools need to be represented in a common format. A common format or data interchange language, engineered for flexibility and compatibility with a variety of tools, can enable different groups to run the same tools and share results, even when the input data cannot be shared. Interchange languages exist for some classes of models, for example DyNetML for high-dimensional network models (Tsvetovat et al., 2003) and GraphML for simple networks (Brandes et al., 2013), but there are no general interchange requirements for complex system models.
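As an illustration of exchanging a network through a common format, the following sketch writes a small network with one node attribute as GraphML using Python's standard `xml.etree.ElementTree` module and then reads it back, as a second tool might. It covers only a small subset of GraphML (a `key` declaration, nodes with `data`, and edges); the attribute name "role" and the example nodes are hypothetical.

```python
import xml.etree.ElementTree as ET

NS = "http://graphml.graphdrawing.org/xmlns"  # GraphML namespace
ET.register_namespace("", NS)                 # write it as the default namespace

def to_graphml(nodes, edges):
    """Serialize nodes (id -> role) and edges (source, target) to a GraphML string."""
    root = ET.Element(f"{{{NS}}}graphml")
    ET.SubElement(root, f"{{{NS}}}key", attrib={
        "id": "role", "for": "node", "attr.name": "role", "attr.type": "string"})
    graph = ET.SubElement(root, f"{{{NS}}}graph",
                          attrib={"id": "G", "edgedefault": "undirected"})
    for node_id, role in nodes.items():
        node = ET.SubElement(graph, f"{{{NS}}}node", attrib={"id": node_id})
        ET.SubElement(node, f"{{{NS}}}data", attrib={"key": "role"}).text = role
    for source, target in edges:
        ET.SubElement(graph, f"{{{NS}}}edge", attrib={"source": source, "target": target})
    return ET.tostring(root, encoding="unicode")

def from_graphml(text):
    """Parse the subset of GraphML written above back into nodes and edges."""
    root = ET.fromstring(text)
    ns = {"g": NS}
    nodes = {n.get("id"): n.findtext("g:data[@key='role']", namespaces=ns)
             for n in root.iterfind(".//g:node", ns)}
    edges = [(e.get("source"), e.get("target")) for e in root.iterfind(".//g:edge", ns)]
    return nodes, edges

xml_text = to_graphml({"a": "leader", "b": "member"}, [("a", "b")])
print(from_graphml(xml_text))  # ({'a': 'leader', 'b': 'member'}, [('a', 'b')])
```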

Data Storage and Management

A data storage and management system, typically a database, is needed to manage inputs and outputs from multiple models. A database function allows information to be added to stored networks, and Structured Query Language (SQL)-type databases include tools for searching, selecting, and refining data. Because there is no common database structure in the intelligence domain, tools are needed to combine data from different data sets and to convert between interchange languages. "A key requirement here is a common ontology (as previously noted) so that diverse structures can be utilized and data can be rapidly fused together. A second difficulty is that most of the currently available relational data is contained in raw text files or stored in excel files, which complicates augmenting relational data with attributes, such as a person’s age or gender. Utilization of [SQL] databases instead of these other formats will facilitate the handling of relational data" (Carley et al., 2007, p. 1238).
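The advantage of an SQL database over raw text or spreadsheet files can be sketched with Python's built-in `sqlite3` module: relational data and attributes such as age or gender live in linked tables and can be searched, selected, and refined with a single query. The schema, the data-set names, and the values below are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE node (id TEXT PRIMARY KEY, node_class TEXT, age INTEGER, gender TEXT);
CREATE TABLE edge (source TEXT REFERENCES node(id), target TEXT REFERENCES node(id),
                   relation TEXT, source_dataset TEXT);
""")
conn.executemany("INSERT INTO node VALUES (?, ?, ?, ?)", [
    ("p1", "agent", 34, "F"), ("p2", "agent", 51, "M"), ("p3", "agent", 29, "F"),
])
# Edges fused from two hypothetical data sets; provenance is kept on each row.
conn.executemany("INSERT INTO edge VALUES (?, ?, ?, ?)", [
    ("p1", "p2", "knows", "survey_a"),
    ("p2", "p3", "knows", "news_corpus_b"),
    ("p1", "p3", "works_with", "news_corpus_b"),
])
# Select "knows" ties whose source person is over 30, joined with that person's age.
rows = conn.execute("""
SELECT e.source, e.target, n.age
FROM edge AS e JOIN node AS n ON n.id = e.source
WHERE e.relation = 'knows' AND n.age > 30
ORDER BY e.source
""").fetchall()
print(rows)  # [('p1', 'p2', 34), ('p2', 'p3', 51)]
```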

Scalability of Results

Many models are designed and tested with small data sets, or are used to generate a small number of results. In general, however, these models should be analyzed from a response surface perspective. A response surface in a model is formed by the relationship between one or more explanatory variables and one or more response variables (i.e., the relation of inputs to outputs; Box and Wilson, 1951). For simulations, the analyst should conduct a well-designed virtual experiment that systematically collects information on the response surface. When the number of variables is small, designs such as those described by Box and Behnken (1960) can be used. However, as the number of variables increases, more exploratory techniques, such as active nonlinear tests (Miller, 1998), are needed. Once the virtual experiment has been completed, the results are analyzed and the best-fitting nonlinear model is estimated.
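For three factors, a Box-Behnken design can be generated directly: for each pair of factors, take the four combinations of low and high levels (coded -1 and +1) with the remaining factor at its midpoint (0), then add replicated center points. The sketch below builds that design and maps the coded levels onto real parameter ranges. The parameter ranges and the choice of three center points are illustrative assumptions; each decoded point would then be run through the simulation (with replications) before a response surface is fitted.

```python
from itertools import combinations, product

def box_behnken_3(n_center=3):
    """Box-Behnken design for three factors in coded units (-1, 0, +1)."""
    runs = []
    for i, j in combinations(range(3), 2):
        for a, b in product((-1, 1), repeat=2):
            point = [0, 0, 0]
            point[i], point[j] = a, b
            runs.append(tuple(point))
    runs.extend([(0, 0, 0)] * n_center)  # replicated center points
    return runs

def decode(point, ranges):
    """Map coded levels to real parameter values given (low, high) ranges."""
    return tuple(lo + (c + 1) / 2 * (hi - lo) for c, (lo, hi) in zip(point, ranges))

# Hypothetical simulation parameters: contact rate, group size, trust level.
ranges = [(0.1, 0.5), (10, 100), (0.0, 1.0)]
design = box_behnken_3()
print(len(design))  # 15: 12 edge-midpoint runs + 3 center points
settings = [decode(p, ranges) for p in design]  # inputs for each simulation run
```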

Tools supporting response surface methodology are available in many statistical and mathematical packages, such as MATLAB. Analyzing models in this manner dramatically increases the number of replications needed and the overall size of the virtual experiment. Substantial work is typically required to make models sufficiently scalable to generate the response surface in a reasonable amount of time. The TeraGrid (Catlett et al., 2007) can be used to generate enough data to obtain sufficient variation in underlying parameters and ensure robustness of results.

Robustness

Models need to be robust despite data gaps and errors. “There are two aspects of robustness. First, measures should be relatively insensitive to slight modifications of the data. Second, the tools should be able to be run on data sets with diverse types of errors and varying levels of missing data” (Carley et al., 2007, p. 1328).
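The first aspect of robustness, insensitivity of measures to slight modifications of the data, can be checked empirically. The sketch below computes a simple measure (the set of top-k nodes by degree), repeatedly drops a small random fraction of edges, and reports the average overlap between the original and perturbed top-k sets, where 1.0 means the measure never changed. The network, the 5 percent drop rate, k, and the number of trials are hypothetical choices; other perturbations, such as adding spurious edges, could be tested the same way.

```python
import random
from collections import Counter

def degrees(edges):
    counts = Counter()
    for u, v in edges:
        counts[u] += 1
        counts[v] += 1
    return counts

def top_k(edges, k):
    # Highest degree first; ties broken by node name so results are deterministic.
    ranked = sorted(degrees(edges).items(), key=lambda item: (-item[1], item[0]))
    return {node for node, _ in ranked[:k]}

def top_k_stability(edges, k=3, drop_fraction=0.05, trials=200, seed=0):
    """Average overlap between the top-k nodes of the full and perturbed networks."""
    rng = random.Random(seed)
    baseline = top_k(edges, k)
    n_keep = len(edges) - round(drop_fraction * len(edges))
    overlaps = []
    for _ in range(trials):
        perturbed = rng.sample(edges, n_keep)  # drop a random subset of edges
        overlaps.append(len(baseline & top_k(perturbed, k)) / k)
    return sum(overlaps) / trials

# Hypothetical network: three hubs tied to everyone, plus random ties among 27 others.
gen = random.Random(1)
nodes = [f"n{i}" for i in range(30)]
edges = [(hub, other) for hub in nodes[:3] for other in nodes[3:]]
edges += [tuple(gen.sample(nodes[3:], 2)) for _ in range(40)]
print(round(top_k_stability(edges), 3))
```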
