3

Forecasting and Anticipatory Thinking

The first workshop panel addressed research on and opportunities to improve forecasting and anticipatory thinking, or modeling, planning, and preparing for events. Moderator Sallie Keller, Virginia Polytechnic Institute and State University, opened the session by drawing attention to the differences between physical or engineering-based modeling and modeling of social systems. She identified five differences between the two types of models: (1) physical systems often evolve according to well-known rules, while social systems often evolve according to rules that are not well understood; (2) more directly applicable data are often available for physical models; (3) engineered systems are often designed to operate so that the various subprocesses behave linearly, while social systems do not (Keller noted that “humans simply do not have design specifications”); (4) behavior mechanisms in physical systems can often be decomposed because their effects are well-known and independent, while the mechanisms in social systems often interact; and (5) extrapolation of modeling results is difficult for both physical and social systems, but with social systems, this extrapolation may put them on a trajectory that is unlike previous experience. Yet, Keller asserted, despite these challenging differences, physical and social systems are similar enough that modeling techniques for physical systems can still be used successfully to inform modeling of social systems.

RETURNS TO PRECISION

Barbara Mellers, University of Pennsylvania, discussed the idea that analysts’ forecasts and their communication of ambiguities can be improved if such forecasts are provided along with quantitative measures of uncertainty. She stated that numerical estimates using the entire range of a probability scale represent the easiest way to communicate clearly the degree of uncertainty associated with reported findings.

Mellers explained that new research, produced through a program of the Intelligence Advanced Research Projects Activity (IARPA), provides strong support for the use of quantitative measures of uncertainty. She described this program as consisting of four forecasting tournaments, held from 2011 to 2015, with the goal of understanding how to elicit and aggregate forecasts from a large, dispersed crowd. She reported that five research teams participated in the program, each of which designed methods to elicit forecasts about the same resolvable forecasting questions. For these tournaments, IARPA defined accuracy using the Brier scoring rule.1
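To make the metric concrete, the following is a minimal Python sketch of the Brier score for a single yes/no question, on the zero-to-2 scale used in these tournaments. The function names `brier_score` and `mean_brier` are illustrative assumptions and are not taken from the IARPA program.

```python
def brier_score(prob_yes: float, outcome_yes: bool) -> float:
    """Two-category Brier score for one binary forecast.

    0.0 is a perfect forecast; 2.0 is the worst possible score
    (full confidence in the outcome that did not occur).
    """
    if not 0.0 <= prob_yes <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    observed = 1.0 if outcome_yes else 0.0
    # Sum the squared error over both outcome categories ("yes" and "no").
    return (prob_yes - observed) ** 2 + ((1.0 - prob_yes) - (1.0 - observed)) ** 2


def mean_brier(forecasts):
    """Average Brier score over (probability, outcome) pairs."""
    scores = [brier_score(p, occurred) for p, occurred in forecasts]
    return sum(scores) / len(scores)


# A forecaster who says 90% and is right scores close to zero;
# a forecaster who says 0% for an event that happens scores 2.
print(brier_score(0.9, True))
print(brier_score(0.0, True))
```

Lower scores are better, so a forecaster's average over many resolved questions gives a single number that can be tracked over time.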

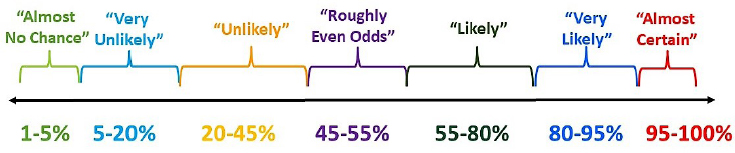

Mellers’ research team, whose Good Judgment Project was a participant in the IARPA forecasting program,2 studied more than 1 million forecasts made by thousands of participants from around the world who attached numerical probabilities to events ranging from pandemics and UN negotiations to leadership change and economic trends. Mellers explained that in making forecasts, intelligence analysts generally use such verbal phrases as “we believe,” “we assess,” and “we judge” or such terms as “low,” “medium,” and “high confidence” that can be difficult to interpret and can result in serious miscommunication. She pointed out that in 2015, the Office of the Director of National Intelligence recommended that analysts use the terms shown in Figure 3-1, which represent a compromise between qualitative and quantitative methods.

Mellers went on to discuss two basic problems with terms expressing uncertainty. The first problem, as she sees it, is that these terms mean different things to different people. Her team asked “superforecasters” from the Good Judgment Project (the top 2% of the forecasters among the thousands studied in the project) how they interpreted the terms shown in Figure 3-1, and found that the plausibility ranges the forecasters attached to the terms were generally much larger than the ranges specified in the analytic standards, with an average difference of 2 to 1. According to Mellers, this finding illustrates her point that such terms are imprecise and often interpreted inconsistently within and across individuals.

The second problem Mellers discussed is that terms used to denote uncertainty cannot be scored for accuracy, and without an accuracy metric, it is virtually impossible for analysts to learn how to improve. To illustrate the importance of an accuracy metric, she described an op-ed piece from

___________________

1 The Brier scoring rule is an accuracy metric for probabilistic predictions that goes from zero, which is perfect, to 2, which is “reverse clairvoyance,” perfect in the opposite sense.

2 Available: https://www.goodjudgment.com/ourStory.html [February 2018].

NOTE: This figure shows unequal intervals of probability across the seven different terms.

SOURCE: Office of the Director of National Intelligence. (2015). Analytic Standards. Intelligence Community Directive 203. Available: https://www.dni.gov/files/documents/ICD/ICD%20203%20Analytic%20Standards.pdf [March 2018]. Presented by Barbara Mellers at the workshop.

The Wall Street Journal written by Bill Gates in 20163 about a book by William Rosen, The Most Powerful Idea in the World, which tells the story of the steam engine and the technological innovations needed to develop it. One of these innovations was a micrometer that allowed inventors to see whether incremental design changes led to engine improvements. The larger lesson from the book, according to Mellers, quoting Gates, is that “you can achieve incredible progress if you set a clear goal and find a measure that will drive progress toward that goal.”

Mellers noted that the type of feedback that can drive progress in forecasting has not yet been achieved by the Intelligence Community (IC). She believes the IC will be unable to judge the usefulness of new analytic tools and training methods without a quantitative accuracy metric. Specifically, she questioned whether the seven-point rating scale shown in Figure 3-1 provides enough distinctions to convey accurately the amount of uncertainty in analysts’ projections.

To address that question, Mellers’ research team considered two questions: (1) How many distinctions did Good Judgment forecasters make when stating their predictions? and (2) How many distinctions did they actually need to state their predictions? To address the first question, Mellers explained, the team simply counted the unique probabilities used by forecasters in the IARPA tournament during a tournament year. She reported that most of the forecasters used between 10 and 30 unique expressions of probability or uncertainty during the course of a tournament year. But she then turned to the question of how many of these expressions of uncertainty the forecasters actually needed. This question was addressed by comparing the original Brier score computed for each forecaster in the IARPA tournament to new Brier scores based on forecasters’ predictions after rounding to a limited number of categories (representing different probability scales, such as that in Figure 3-1); the research considered sets of both three and seven categories of uncertainty. The three-category rounding consisted of confidence ratings of low, medium, or high, and the two seven-category roundings used either equal intervals or the unequal intervals of the terms shown in Figure 3-1.

___________________

3 Gates, B. (2013). My plan to fix the world’s biggest problems. The Wall Street Journal, January 25. Available: https://www.wsj.com/articles/SB10001424127887323539804578261780648285770 [March 2018].

Mellers explained that the new Brier scores computed after rounding were compared with the initial Brier scores for the thousands of forecasters and for the superforecasters. She reported that by limiting the precision of uncertainty that forecasters could use, errors in accuracy were introduced. Use of the seven-category probability scale introduced about 1 to 2 percent of error for forecasters overall. But for superforecasters, additional error increased by 6 to 40 percent. Mellers concluded from these data that having a wider range of distinctions of uncertainty would improve precision. She added that the superforecasters, the very best people, are the ones affected most negatively by being constrained to a narrow scale. She argued for consideration of larger probability scales given the more significant impact on those identified as the most accurate in their forecasts.
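The rounding comparison can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the research team's code: the bin edges, the choice of each bin's midpoint as the rounded value, and the sample forecasts are all hypothetical, and `brier_score` uses the zero-to-2 two-outcome form of the rule.

```python
from bisect import bisect_right


def brier_score(p, occurred):
    """Two-category Brier score (0 = perfect, 2 = worst) for a binary forecast."""
    observed = 1.0 if occurred else 0.0
    # Equivalent to (p - o)^2 + ((1 - p) - (1 - o))^2 for a yes/no question.
    return 2.0 * (p - observed) ** 2


def round_to_bins(p, edges):
    """Replace p with the midpoint of the bin that contains it.

    edges is an ascending list of bin boundaries running from 0.0 to 1.0.
    """
    i = min(bisect_right(edges, p) - 1, len(edges) - 2)
    return (edges[i] + edges[i + 1]) / 2.0


def mean_brier(forecasts, edges=None):
    """Mean Brier score, optionally after rounding each forecast to a coarse scale."""
    total = 0.0
    for p, occurred in forecasts:
        if edges is not None:
            p = round_to_bins(p, edges)
        total += brier_score(p, occurred)
    return total / len(forecasts)


# Hypothetical scales: three equal bins (low/medium/high) and seven equal bins.
THREE_BINS = [0.0, 1 / 3, 2 / 3, 1.0]
SEVEN_EQUAL_BINS = [i / 7 for i in range(8)]

# Hypothetical (probability, outcome) pairs for one well-calibrated forecaster.
forecasts = [(0.97, True), (0.02, False), (0.90, True), (0.08, False), (0.65, True)]

original = mean_brier(forecasts)
for name, edges in [("3 bins", THREE_BINS), ("7 equal bins", SEVEN_EQUAL_BINS)]:
    rounded = mean_brier(forecasts, edges)
    change = 100 * (rounded - original) / original
    print(f"{name}: original={original:.4f} rounded={rounded:.4f} change={change:+.1f}%")
```

Rounding does not have to worsen every individual forecast, so a comparison of this kind is informative only when averaged over many questions and forecasters, as in the tournament data Mellers described.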

Mellers concluded her talk by rejecting the idea advanced by some scholars and practitioners that the extra precision provided by numerical probabilities is simply noise. She also refuted the idea that numerical probabilities impose mental costs on analysts, arguing that the cost of not using numerical probabilities is much greater. She summed up her presentation with these words: “The world is a very messy place, and accurate predictions are extremely hard [to make]. That’s very clear to any of us who have tried to make them. But when the stakes are high, with billions of dollars and thousands of lives on the line, even small increases in precision could yield high returns.”

CHALLENGES FOR ENGAGING IN ANTICIPATORY THINKING

Gary Klein, MacroCognition LLC, defined anticipatory thinking as thinking ahead, looking for potential challenges, and recognizing threats and opportunities. He distinguished anticipatory thinking from prediction and forecasting by explaining that anticipatory thinking includes an element of preparation for possible outcomes, particularly low-probability, high-impact events.

Klein acknowledged that there have been cases in which anticipatory thinking failed and surprises happened, and he noted that some of those errors were extremely costly. As a result, he said, there is great interest in attempting to improve analysts’ performance to limit such surprises. He went on to discuss some common approaches for improving performance and to explain why he believes they are misguided.

The first approach he described is based on the idea that the number of mistakes analysts make simply needs to be reduced. The problem with this approach, he argued, is that reducing errors is only part of the equation in improving performance; the other important component, he said, involves increasing insights and discoveries. He asserted that many organizations fail to balance these two sides of the equation, focusing primarily on reducing mistakes.

The next approach that Klein discussed was the use of rigorous review processes. He maintained that these processes generally involve looking for problems or errors as opposed to looking for discoveries or new ideas.

Klein described reliance on critical-thinking protocols as another misguided practice. He provided the example of asking analysts to list all of their assumptions, with the rationale that errors can usually be traced back to incorrect assumptions that can thereby be identified and addressed. According to Klein, however, no actual data or research supports this idea. He noted that errors can also be based on mistaken assumptions that are unconscious, and that unconscious assumptions are very difficult to list.

Encouraging analysts to follow procedures and tradecraft strictly is, in Klein’s opinion, another misguided practice because it limits curiosity and exploration. “I’ve been told by analysts,” he said, “that some of their major successes came when they violated tradecraft [and made discoveries].” He observed, moreover, that encouraging analysts to follow strict procedures can send the wrong message that errors can be tolerated as long as the procedures were followed.

Klein cited evaluating analysts solely on forecasting accuracy as another misguided practice because being accurate is only part of the forecasting process; it is also important, he said, for forecasts to be useful. Accordingly, he favors a process that encourages analysts to become smarter and more sophisticated and to build richer mental models.

Next, Klein pointed to three problems with the idea of improving anticipatory thinking by using ever larger datasets. First, he observed, more data are already being collected than analysts can possibly handle, and most are irrelevant to the problem at hand. Second, he noted that algorithms can be programmed to analyze the data in large datasets following historical trends, but when situations or assumptions change, the algorithms must be reset. The third problem with large datasets, said Klein, is that a critical event may not be one that happened, but one that did not happen. The skill of an experienced analyst, he emphasized, is to anticipate an event, to watch for it, and to notice if it does not happen, because the absence of an anticipated event may be significant.

Klein also characterized as problematic the prospect of relying on artificial intelligence (AI) for improving analysts’ performance. He credited another workshop participant for the idea that “instead of building smarter machines, we should try to build machines that make us smarter,” which he described as an important difference. One use for AI, he observed, is sorting through large datasets to flag irregularities. An alternative use for AI, he suggested, is to help build analysts’ skill sets, perhaps by learning from analysts with stronger skills. Thus, he said, AI could help analysts be more efficient and effective in searching for information by drawing on effective strategies of proficient analysts.

Klein suggested further that attempting to increase discoveries and insights may also be a misguided way to improve analysts’ performance. He admitted that this suggestion appears paradoxical, but he clarified that plenty of useful insights already exist, and instead, organizational issues that suppress insights need to be addressed. He stated that there have been numerous instances of suppressed speculative insights and discoveries within the IC. Using the fall of the Berlin Wall as an example, he relayed the story of an IC analyst who noticed that the conditions keeping East and West Germany separate had changed and informed his superiors that Germany could reunify. Because this was an unexpected prediction, Klein continued, the analyst’s final product was published with so many caveats that it looked very different from the analyst’s original thesis. When reunification did occur a few years later, everyone was surprised, he said. The important aspect of this example, according to Klein, was that the IC had the insight but suppressed it. He concluded from this incident that it is incumbent on the IC to do a better job of harvesting insights even if they are surprising or inconsistent with existing beliefs.

To improve analyst performance, then, Klein suggested that the IC support analysts’ capacity to engage in speculative thinking. He defined speculative thinking as using abilities necessary to manage complexity, uncertainty, anomalies, and ambiguity. These abilities, according to Klein, are the natural strengths of analysts and are weakened when analysts are forced into procedural mindsets. He identified several aspects of speculative thinking in which analysts should be free to engage:

- Mental simulation: imagining the implications of a given event.

- Abductive reasoning: using analogs and metaphors, as well as hypotheticals, contrasts, counterfactuals, and heuristics.

- Positive heuristics: employing methods of speculative thinking, including availability, representativeness, and anchoring and adjustment.

- Richer mental models: exhibiting curiosity about anomalies, as opposed to simply explaining them away.

- Perspective taking: using the human ability to get inside other people’s heads in order to recognize differences in culture and goals and understand another’s perspective more accurately.

Klein summed up by reiterating the importance of focusing on both aspects of the equation for performance improvement. This means that, instead of putting the majority of the effort into reducing errors, effort should also be channeled into developing ways of helping analysts engage in speculative thinking and increasing their insights.

PREDICTION AND ANTICIPATORY THINKING

Alyson Wilson, North Carolina State University, focused her talk on how interdisciplinary work, such as that done at the Laboratory for Analytic Sciences (LAS), can further the field of prediction and anticipatory thinking. She observed that clear lines no longer exist between research conducted by statisticians and those in the AI community regarding the questions surrounding social science, data management, and decision making.

LAS, Wilson explained, is an academic–industry–government partnership that works at the intersection of technology and the tradecraft of intelligence analysis to enable analytic innovation for the IC. The impetus for the creation of LAS was the need to address questions not unique to the IC, such as how to use the massive amount of data from social media for strategic advantage, which could be asked by almost every company in the United States. It was clear, Wilson continued, that gaining traction on such problems required the type of partnership that became LAS.4 She acknowledged that the work performed at LAS is not necessarily on the “bleeding edge” of research on either the statistics or the social science side. Instead, LAS is attempting to combine the expertise of different disciplines and professions to study substantive problems and advance the field of analytics.

Wilson described a few of the projects currently under way at LAS. The first is aimed at improving the Fragile States Index,5 which provides an indicator of whether a given state entity might collapse. Wilson explained that the Fragile States Index has been widely criticized for, among other things, being a lagging as opposed to a leading indicator. She noted that LAS research teams that include political scientists, anthropologists, and statisticians are working on incorporating more real-time data and applying time-series methods in the hope of converting the Fragile States Index from a lagging to a leading indicator.

___________________

4 There are also 30 to 40 academics from 8 to 12 universities and 8 to 10 industry partners. These groups work together on approximately 40 individual projects. Ninety percent of the work at LAS is unclassified. For more information, see http://ncsu-las.org [April 2018].

5 Available: http://fundforpeace.org/fsi [February 2018].

The second LAS project Wilson described involves studying a straightforward question about transliteration of Korean names in a way that would encourage consistent pronunciation. The research team assembled for this task includes experts in design of experiments and data collection and language analysts. The research has not only produced clear identification of the best transliteration method, but also yielded insight into other issues surrounding accurate translation of the Korean language. Again, Wilson emphasized, this was a step forward.

The third LAS project Wilson discussed addresses data veracity and news media reliability. In the IC, she explained, the people who use and analyze the data are not the same as those who collect the data. As a result, she said, analysts are generally unfamiliar with the data collection process, which can lead to questions about data veracity and utility. Some of the research in this area, according to Wilson, is drawing on open-source data to develop data analysis that could be performed by AI to identify features of media communications that would help analysts recognize propaganda automatically. She stressed that this research has a computational component, using machine learning to process the data and label features, but there is also a component to understand the human decision maker, particularly how people make decisions about the veracity of data.

Looking forward, Wilson presented her thoughts on some future challenges for the IC. She reiterated the point made by Thomas Fingar, Stanford University, at the start of the workshop (see Chapter 2) that collecting more data is not the answer to furthering the field of anticipatory thinking. Improvements cannot be made, she argued, by relying on AI to analyze vast amounts of data or by simply processing the data more rapidly. She characterized the issue as being more about understanding the questions the analyst is asking and assembling the specific data that will support the ability to answer those questions. The challenge of finding the appropriate data, according to Wilson, could be addressed by improvements in collaborative computing. She posed the question, “How do we make the computer work all the time to try to watch what we are doing?” She pointed out that this is both a computational and a social science question. Once a computer system understands a workflow, she proposed, it can anticipate what it needs to do, perform the task, and then make suggestions about what the analyst might need next.

Wilson referred to the talks of both Mellers and Klein, making two additional points about anticipatory thinking. First, she spoke to the question of looking forward, predicting the probability with which a certain event will occur by a certain date. She characterized this as the “forecasting prediction problem,” and acknowledged that a great deal of research is needed in this area. But she also pointed to the problem of anticipatory thinking with regard to generating possible events in the first place, and of finding ways to help analysts think much more broadly about possible futures. She mentioned that looking into the future is one of the seven goals in the 2014 U.S. National Intelligence Strategy6 and contended that research is still needed to identify the appropriate tools for enabling analysts to do this kind of discovery of possible scenarios.

To conclude, Wilson emphasized the importance of shifting the focus of the IC from monitoring current events to anticipating future events, noticing patterns, and staying ahead of the actions of adversaries. To facilitate this shift, she highlighted the need to understand how to train analysts so they can more effectively look forward, generate insights, and use those insights to make accurate predictions about actions that might be taken by adversaries. She closed her presentation by emphasizing that research in such areas as cognition and perception, AI, statistics, and data analytics cannot, in isolation, solve the problems faced by the IC. Rather, she stressed, interplay between the social sciences and such data-centric fields as statistics, AI, and “big data” will be necessary to address the important issues the IC will continue to face.

DISCUSSION

Following the presentations summarized above, panelists participated in a discussion and responded to questions from the audience. Peter Pirolli, Institute for Human & Machine Cognition, asked the panelists to comment on the observation that creativity comes both from the psychological level—possessing expertise in a given area—and from the organizational level, through exposure to diverse ideas. Klein responded by describing a study of 120 examples of insight, in which the data indicated that in two-thirds of those cases, the insight would not have been gained without sufficient experience. Klein and others on the panel did, however, acknowledge a role for additional factors in the generation of insight, such as the flexibility of the individual in considering different ideas and an organizational structure that provides exposure to a variety of thoughts and frameworks.

A workshop attendee asked about the usefulness of the National Intelligence Council’s Global Trends report.7 Fingar expressed the view that being involved in writing the report is an extremely beneficial exercise for analysts because taking such a long-term perspective can change the way they assess problems and formulate questions. At the same time, however, he stressed that it is imperative for the IC analysts to understand both the goals of policy makers and the strategic context in which they are to make decisions so the analysts can communicate information from the Global Trends report and other intelligence products in ways that will be helpful and relevant to policy makers’ mission.

___________________

6 Available: www.dni.gov/files/documents/2014_NIS_Publication.pdf [February 2018].

Danielle Albers Szafir, University of Colorado, asked the panelists how risk might be considered in anticipatory thinking, given that some decisions have a high cost if predictions are wrong. Fingar argued that analysts’ job is simply to convey their best interpretation of the probability that certain events will happen. He stressed that analysts should not be afraid of getting things wrong, remarking, “I’ll take a lot of errors in order to get a little bit of insight, because insight is what really makes a difference.”

Fran Moore, CENTRA Technology, Inc., asked the panelists to comment on strategies for improving the analytic process to include reframing the questions addressed by analysts as necessary—in other words, how do analysts assess whether they are grappling with the right questions? In response, Klein referred to the phenomenon of suppression of ideas. He described a study of groups of analysts working together on a problem, which found that in some cases, one or two analysts had uncovered contrary information but chosen not to report it so as to maintain the harmony of the team.8 Thus, all the groups continued to work on the same questions with the initial analytic plan.

Mellers was asked to provide further detail on superforecasters. She explained that expertise in the various topics and questions they addressed was not necessary; the superforecasters were “supergeneralists” who did well across the board. They excelled at figuring out how to think about questions and where to obtain the information needed to answer them. She also reported that superforecasters worked in dynamic teams that shared information and discussed different strategies. These dynamic interactions, she said, played a role in improving the accuracy of superforecasters’ forecasts. Mellers therefore believes that these superforecaster strategies are teachable skills.

A workshop attendee asked the panelists for thoughts on the value of visual presentations as part of the sense-making and anticipatory thinking processes. The panelists agreed that visualizations are a valuable means of presenting data. Klein suggested that a visual way of presenting the confidence intervals described by Mellers might be preferable to expressing them in terms of probabilities, for example. Chapter 5 summarizes other workshop presentations and discussion that examined visualization tools and limits on human and machine capabilities.

___________________

7 National Intelligence Council. (2017). Global Trends: Paradox of Progress. Washington, DC: Office of the Director of National Intelligence. Available: https://www.dni.gov/files/documents/nic/GT-Full-Report.pdf [March 2018].

8 Snowden, D., Klein, G., Chew, L.P., and Teh, C.A. (2007). A sensemaking experiment: Techniques to achieve cognitive precision. In Proceedings of the 12th International Command and Control Research and Technology Symposium, Newport, RI.

A participant commented that better human–machine partnerships are needed so the cognitive load can be shared between analysts and computers. He asked the panelists to comment on the difficulties involved in developing such systems. Klein offered the example of chess, in which human–machine hybrids perform better than either humans or machines alone. He referred to research currently under way to improve the transparency of machine learning and other AI systems so that perhaps a machine’s output will be understood and trusted. But he also acknowledged that, given the technological advances in the field, uncovering the reasoning of these systems is difficult even for their developers.

Wolfe asked the panelists to predict advances that in the next 10 years will be transformational for the way IC analysts do their work. The answers from the panelists included collaborative computing technologies to aid decision making and improvements in model building that will increase the reliability of judgments. Wilson pointed to the potential for a partnership with AI such that machine-driven data analysis can be incorporated into human decision making, and the user can determine when the machine has something useful to contribute and when it does not. She noted that not every analyst can be expected to be a data scientist or have the ability to carefully tune machine learning models. Therefore, she argued, to make the partnership work, the development of collaborative computing would need to consider who does what best and how to integrate machine output with the intelligence analysis process. Klein added that, to advance collaborative computing in the next 10 years, the AI community needs to consider whether what is built supports analysts in making smarter decisions (and not AI-generated decisions), and the IC needs to consider what requirements collaborative computing would place on the analysts. Mellers commented that humans faced with making risky predictions will check their judgments against models, and there will be opportunities in the coming years to build better hybrid models.
