The first workshop panel focused on the current culture of science as it relates to transparency in proposing and reporting preclinical biomedical research (see Box 3-1 for corresponding workshop session objectives). Four researchers, each fulfilling different roles in the science ecosystem, shared their perspectives on the incentives, disincentives, challenges, and opportunities associated with transparent reporting and replicability in science.

Yarimar Carrasquillo, an investigator at the National Center for Complementary and Integrative Health (NCCIH) at the National Institutes of Health (NIH), shared her perspective as an early career researcher, describing the impact of non-replicability of published studies on setting up a new research program and achieving tenure. Brian Nosek, co-founder of the Center for Open Science, discussed the work of the center to effect behavioral change that better reflects the cultural norms in science and leads to greater openness and reproducibility of research. Arturo Casadevall, professor of molecular microbiology and immunology at Johns Hopkins University and editor-in-chief of mBio, discussed approaches to reduce errors in published papers from a publisher’s perspective, including raising awareness of researchers, posting preprints, reviewer education about errors, and submission of original data to repositories. Carrie Wolinetz, acting chief of staff and associate director for Science Policy in the Office of the Director at NIH, described areas where NIH has been working to implement policies that enhance rigor, replicability, and transparency.

The session was moderated by Alexa McCray, professor of medicine at Harvard Medical School and chair of the National Academies of Sciences, Engineering, and Medicine consensus study committee that released the report Open Science by Design (NASEM, 2018). Researchers are generally not recognized or rewarded for making their data available, McCray observed. She highlighted the first recommendation from the report, which addressed overcoming cultural barriers:

Research institutions should work to create a culture that actively supports Open Science by Design by better rewarding and supporting researchers engaged in open science practices. Research funders should provide explicit and consistent support for practices and approaches that facilitate this shift in culture and incentives. (NASEM, 2018, p. 7)

The consensus study report Reproducibility and Replicability in Science also discusses efforts to “foster a culture that values and rewards openness and transparency,” she said, and describes “guidelines that promote openness, badges and prizes that recognize openness, changes in policies to ensure transparent reporting, new approaches to publishing results, and direct support for replication efforts” (NASEM, 2019, p. 104).

EARLY CAREER INVESTIGATOR PERSPECTIVE

Yarimar Carrasquillo, Investigator, National Center for Complementary and Integrative Health

Carrasquillo emphasized the importance of targeting younger investigators, not only early career faculty and researchers, but also undergraduate students, graduate students, and postdoctoral fellows, when working to raise awareness about the need for transparent reporting of biomedical research. Many of the issues directly affect new investigators, she said, who are under pressure to publish in high-impact journals and to report “flashy science” that, while interesting, is often not biologically relevant. Engaging emerging investigators could help to facilitate the cultural change needed.

Establishing a New Laboratory

Lack of transparency in the reporting of biomedical research affects all researchers, from students to senior investigators, but it presents a particular challenge for early career investigators who are starting a new laboratory. “The tenure clock starts ticking immediately,” Carrasquillo said. New principal investigators are working to build their research program, and they rely heavily on the literature for the information needed to develop methods and establish models for their studies. Many new faculty members find they cannot replicate studies in the literature.

Carrasquillo said her own efforts to establish her research program on pain mechanisms were set back by the inability to replicate published studies on the affective component of pain. Replicating the previous findings took 2 years, she said, because much of the information reported about experimental conditions and analyses, for example, was incomplete. This lengthy setback was frustrating for the trainee who was working to replicate the previous findings, and it could negatively impact Carrasquillo when her program is evaluated. Tenure-track investigators often find themselves revising their research plans because they cannot replicate the original findings. The inability to replicate the prior studies also raises concerns about the validity of the animal models of affective behavior, which she said impacts the ability to translate findings to clinical research.

By contrast, there were other models and methods that Carrasquillo wanted to incorporate into her program that she said were easy to replicate because there were detailed methods papers available (some with videos), and other publications that included raw values and individual data points. Carrasquillo observed that detailed methods papers are underappreciated in general and are not valued as scientific contributions for tenure review. She suggested that promoting and committing to transparent reporting is not enough. Transparency as a behavior needs to be valued and rewarded in concrete ways.

The Tenure Process

The tenure process values the quantity of publications and the impact factors of the journals in which they are published, Carrasquillo said. She reiterated that methods papers are not considered impactful and do not often appear in high-impact journals unless they describe innovative new protocols. Rigor, reproducibility, and transparency are time consuming, she continued, and are not generally rewarded in the tenure or promotion processes. She suggested the need for a system of both rewards and penalties for reproducibility and transparency, or the lack of them. Another challenge for early career investigators is attracting trainees. “Trainees in your lab will compare themselves with trainees in other labs and will resist rigorous processes if proper rewards are not in place,” she said. It should also be recognized that early career investigators have fewer resources and less administrative and technical support compared with established investigators. An early career investigator must often work with trainees individually to review all data and foster a culture of rigorous science and transparency. Again, she said, this takes time, and it should be acknowledged that the research may not progress as quickly as it might in laboratories that do not espouse rigor and transparency, and laboratories that promote rigorous science should be appropriately rewarded. It also takes longer to publish, which is a problem for early career investigators because of the value the tenure process places on volume and impact of publications.

In response to a question, Carrasquillo said she has received support from senior faculty, especially from her scientific director, who encouraged her to draft a paper describing her laboratory’s inability to initially replicate findings previously described in the literature as well as the troubleshooting process required to finally replicate the findings. A paper discussing factors that affect the replicability of experiments and that are known to substantially vary between labs is essential to move the field forward, she added. Although some senior faculty are understanding of replicability challenges and supportive of rigor and quality, it is not clear if a tenure committee would reward high-quality research that resulted in fewer publications.

Creating a Culture Change

Carrasquillo offered the following solutions to help overcome some of the obstacles that early career investigators face:

- Restructure tenure evaluation criteria so that efforts toward reproducibility and transparency are valued (e.g., methods papers, negative data). Consider the rigor of the studies conducted and whether the published findings have been replicated by others in the scientific community.

- Create a system of awards to recognize investigators and trainees who are contributing to a culture of transparency and reproducibility.

Carrasquillo also described how she has been working to promote a culture of reproducibility and transparency in her laboratory. Practices she has established in her laboratory include

- Meeting one on one with her trainees to ensure that experimental procedures are documented and reported in the methods section of publications;

- Recording detailed information about the materials used; and

- Requiring that experiments are performed blind and are replicated, from beginning to end, at least once or twice.

She noted several other practices that have been more challenging to implement, including labeling and organizing raw data so that they are easily accessible; getting trainees to accept that reporting data that do not align perfectly with their model is acceptable and is important for transparency; and having trainees document methods and analyses in real time so that details are not forgotten. She also observed that her field has not yet embraced preregistration of studies.

CULTURE CHANGE ORGANIZATION PERSPECTIVE

Brian Nosek, Co-Founder, Center for Open Science

The experiences described by Carrasquillo are not limited to early career researchers. Nosek described problems with replicability as “pervasive.” As an example, he described the Replicability Project: Cancer Biology, a research project of the Center for Open Science. An analysis was done of the ability to replicate 197 experiments reported in 51 high-impact papers in preclinical cancer biology published from 2010 through 2012.1 None of the 51 papers had all of the associated data available in a repository, Nosek said. A full dataset was readily available for just 3 of the 197 experiments described in the publications. Furthermore, none of the papers provided enough information to design a full protocol for any of the experiments. This emphasizes the core challenge of even having enough information to attempt a replication, he said.

Cultural Norms in Science

Nosek framed the cultural issues of replicability relative to Merton’s norms of science and the associated counternorms (see Table 3-1). In addition to Merton’s four original principles, Nosek added that quality versus quantity is another commonly expressed norm–counternorm.

TABLE 3-1 Norms and Counternorms in Science

| Norms | Counternorms |
|---|---|
| Communality: Open sharing of information with colleagues | Secrecy: Closed, keeping information to oneself |
| Universalism: Evaluate research on its own merit | Particularism: Evaluate research by reputation/past productivity of researcher |
| Disinterestedness: Motivate by knowledge and discovery, not by personal gain | Self-interestedness: Treat science as a competition with other scientists |
| Organized skepticism: Consider all new evidence, even when it might contradict one’s prior work or point of view | Organized dogmatism: Invest career in promoting and defending one’s own most important theories, findings, and innovations |
| Quality: Seek quality contributions | Quantity: Seek high volume |

SOURCE: Nosek presentation, September 25, 2019.

The cultural challenge was illustrated by a survey of 3,300 early- and mid-career NIH awardees. Anderson and colleagues found that 90 percent of those surveyed endorsed the norms of science, and the majority of respondents also believed they personally behaved according to those norms (Anderson et al., 2007). However, the majority perceived the behavior of other scientists to be counternormative. In fact, Nosek explained, although scientists “collectively endorse the norms of science over the counternorms,” the culture of science is “self-interested,” with individuals focusing on advancing their own careers through “publishing in the right journals, presenting the right results, selectively reporting, and ignoring negative results.”

___________________

1 For details, see https://osf.io/e81xl (accessed November 20, 2019).

Changing the Research Culture

Individual researchers, universities, publishers, funders, scientific societies, and other stakeholders populate the complex, decentralized ecosystem of scientific research. Nosek said that “all groups contribute to the incentives and reward structure and all are influenced by it.” Coordination and cooperation are needed to bring about cultural change.

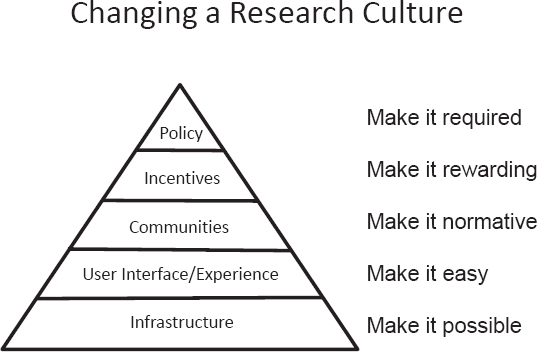

Nosek proposed a pyramid model for effecting cultural change and aligning researcher behavior with the values and norms that the scientific community espouses (see Figure 3-1). The necessary infrastructure to enable the desired behaviors forms the foundation of the pyramid. For successful adoption of behavioral change, that infrastructure should be accessible, easy to use, and adaptable to existing workflows. Making the

SOURCE: Nosek presentation, September 25, 2019.

desired behaviors visible in the scientific community encourages their adoption as norms. While some will readily conform to the new norms, others can be incentivized by rewards that align practically with their goals, Nosek continued. Some will not adapt their behavior unless it is mandated.

As an example, Nosek used preregistration of research as the desired behavior change. The infrastructure that makes preregistration possible includes project tools such as GitHub, Dropbox, Google Drive, Mendeley, and others. Integrating that infrastructure with other web applications, such as OSF Registries, helps to facilitate easy registration as part of routine workflow. Studies that conform to open science norms, such as preregistration, are awarded badges that make the practice visible in the community. Preregistration is now rewarded by more than 200 journals that are offering the Registered Reports publishing format as a submission option. Researchers submit their research plan to a journal for prestudy peer review. If the proposed study is accepted “in principle,” it will be published upon completion regardless of outcome (assuming there are no issues with quality, clarity, or deviation from the registered plan). This means, Nosek explained, that the incentive is now on designing quality studies to answer important questions, not on getting positive results. Nosek reported that “about 60 percent of the articles that have been published so far through Registered Reports have negative results as their primary outcome,” and these papers are cited as frequently as other articles. Finally, at the policy level, the Transparency and Openness Promotion (TOP) guidelines provide a framework for institutions, journals, and funders to use in implementing transparency standards.2 Change is happening, Nosek said, and he shared data showing the increase from 38 preregistered studies in 2012 on OSF.io to a projected total of more than 35,000 in 2019. In response to a question, he clarified that the Registered Reports model specifically includes a commitment to publication as an incentive for preregistration, but other preregistration models are being used that do not use this incentive. Preregistration of research is broadly applicable to hypothesis testing and confirmatory experiments, he added.

Change needs to happen simultaneously, not sequentially, across all areas of the pyramid in Figure 3-1. Nosek said small communities (e.g., disease-specific funders) can have a large impact by changing their practices and then advocating to bring others into alignment.

SCIENTIFIC SOCIETY JOURNAL EDITOR PERSPECTIVE

Arturo Casadevall, Professor of Molecular Microbiology and Immunology, Johns Hopkins University, and Editor-in-Chief, mBio

Problematic figures and images are commonly found across the scientific literature. Casadevall said that “most problems are due to error, not misconduct, and new procedures are emerging to reduce error.” To illustrate the extent of the problem, he described the findings of a systematic analysis initiated by Bik and colleagues (2016). The analysis involved visual inspection of more than 20,000 papers published in 39 journals across 13 publishers from 1995 to 2014. The study found problematic images in about 1 out of every 25 papers analyzed (about 4 percent). The prevalence of problematic images varied across different journals, and there was a steep increase in problematic images after the advent of photo editing software. These findings underestimate the prevalence, Casadevall said, because only photographic images were included in the analysis.

A second study analyzed 960 randomly selected papers that had been published in a single journal, Molecular and Cellular Biology, from 2009 to 2016 (Bik et al., 2018). This study found that more than 6 percent of images were problematic. Most of the problematic images identified were the result of errors, but 10 percent of the publications with problematic images were retracted. Based on the number of retractions, estimates showed that as many as 35,000 published papers could have images that would prompt a retraction. Casadevall pointed out that finding and correcting problematic images in publications is costly and time intensive for journal staff, but increased image screening by journals prior to publication has led to a reduction in the number of these problematic images in published papers.

___________________

2 Further information on the OSF Registries, Open Science Badges, Registered Reports, and TOP is available on the Center for Open Science website, https://cos.io (accessed November 20, 2019).

A similar analysis for errors was done of papers accepted for publication in the Journal of Clinical Investigation (Williams et al., 2019). Of the 200 papers analyzed, 28 percent were found to have “statistical issues” and 27 percent had problematic figures. Casadevall noted that the journal, of which he is a deputy editor, requires that authors submit copies of their original data when the paper is accepted for publication. When the problems with specific papers were investigated further, it was found that 89 percent were “minor transgressions,” 7.5 percent were considered to be “moderate problems,” and 1 percent of the papers had “major problems” that led the editors to rescind the acceptance.

In response to a question, Casadevall said that while the majority of retractions were due to misconduct, it is important to remember that retracted papers have multiple authors and the problems leading to retraction are often caused by a single author. Mechanisms are needed that do not stigmatize authors, but instead encourage retraction and allow for repeating of the studies and republication as appropriate.

Reducing Errors in Publications

An emerging option in biomedical publication is the ability to publicly post a preprint of the manuscript prior to peer review. Preprints increase transparency and allow researchers to rapidly share findings, but they can also help to catch errors before papers are published. Casadevall shared a personal example in which posting a preprint helped them to “avoid an embarrassing error.” After posting, a reader noticed that one of the figures included a photo that had already been published. It was a simple error that was immediately corrected, Casadevall said. The number of preprints posted is increasing each year, and posted manuscripts are often revised based on feedback.

Casadevall described some of the emerging solutions to safeguard the literature (summarized in Box 3-2). In the prepublication phase, as discussed above, preprints allow other researchers in the field to send comments directly to the authors, post them publicly on the preprint server, or both. Increased education is making researchers aware that these types of errors are a problem and teaching them to check for errors before manuscripts are submitted. At the review and publication phases, reviewer education has led to reviewers looking at figures in manuscripts more carefully. There is also enhanced editorial scrutiny, and some journals require that the original data be submitted as a condition of publication.

Errors identified by readers after publication are often disseminated on social media and websites such as PubPeer, Casadevall said, and there can be social media shaming. The blog Retraction Watch has exposed problems in many papers and investigates the reasons behind them. Comments are also submitted directly to journals. There are increasing numbers of retractions, and Casadevall said “scientists are beginning to realize the retracting of a paper is not a career-ending phenomenon.” It should be a part of the research process; there are cases in which a paper is retracted, the studies are repeated, and the corrected data are then published.

Cultural aspects need to be addressed as well. Casadevall expressed concern, for example, that publishing a problematic paper in a high-impact journal is still better for one’s career advancement than publishing a rigorous paper in a lower tier journal, and this is unacceptable, he said.

NATIONAL INSTITUTES OF HEALTH PERSPECTIVE

Carrie Wolinetz, Acting Chief of Staff and Associate Director for Science Policy, Office of the Director, National Institutes of Health

Wolinetz observed that rigor and transparency are sometimes considered to be barriers to scientific progress, and some believe that devoting resources to rigor and ensuring transparency takes resources away from research. NIH considers the tools of transparency to be essential components of science, not separate, she said, and resources should be spent on ensuring high-quality research. As a publicly funded research agency, NIH is accountable to the public, and transparency is a tool for demonstrating that NIH is a good steward of taxpayer dollars and is worthy of the public’s trust. Wolinetz gave several examples of why transparency matters to scientists, the public, and research participants (see Box 3-3).

As a funder, NIH is in a position to influence policy and practice in science. However, “this is not one entity’s problem alone to solve,” Wolinetz said. Stakeholders must work collectively to identify and address the cultural barriers to rigor, transparency, and replicability in the scientific ecosystem. She observed that it is often assumed that “scientists know how to do science and are good at doing science and … are equally good at training others how to do science.” In reality, this is not necessarily true.

Reporting Clinical Trials Results

One area where NIH has been working to implement policies that enhance rigor, replicability, and transparency is the conduct of clinical trials, including the reporting of results (Hudson et al., 2016).3 Transparency starts with the grant solicitation and spans the length of the clinical trial process, from initial study design through reporting of results, she said.

Responsible Data Sharing

Another area of focus for NIH is enabling and incentivizing responsible data sharing, Wolinetz said. In developing future policy in this area, NIH is looking to establish a flexible framework that “sets a floor for good practices” and enables the sharing of diverse types and amounts of data. Researchers seeking NIH funding will be required to provide a plan for how they intend to manage and share their data responsibly. Wolinetz said NIH is considering how plans for data management and sharing might be assessed as part of funding decisions, and how best to implement incentives and accountability measures to ensure commitments to sharing are met. She noted that there is a “push–pull relationship” in the pyramid discussed by Nosek (see Figure 3-1) in that the foundational infrastructure facilitates the implementation of policy, but development of that infrastructure is sometimes resisted until it is required by policy.

NIH released “Proposed Provisions for a Draft NIH Data Management and Sharing Policy” in 2018 to gather input that would inform the development of the draft policy. Wolinetz anticipated that a draft policy would be released for public comment in October 2019, with the intention of finalizing a policy in early 2020.4 Angela Abitua, outreach scientist at Addgene, asked whether the forthcoming NIH policy on responsible data sharing will address the reporting and sharing of biological materials, such as plasmids, antibodies, and cell lines, which are used in the experiments. She observed that many journals have policies requiring the sharing of materials. Wolinetz said that biospecimens and other biological materials have been part of the discussions of sharing beyond data, specifically with respect to validation and sources of biological materials.

___________________

3 See also NIH Policy on the Dissemination of NIH-Funded Clinical Trial Information, https://grants.nih.gov/policy/clinical-trials/reporting/understanding/nih-policy.htm (accessed November 20, 2019).

4 For current information, see https://osp.od.nih.gov/scientific-sharing/nih-data-management-and-sharing-activities-related-to-public-access-and-open-science (accessed November 20, 2019).

Rigor and Transparency

Wolinetz also discussed NIH’s efforts to enhance rigor and reproducibility in biomedical research. She observed that the emphasis is beginning to move from reproducibility to transparency. Biology is complex and “biological experiments might not be reproducible,” she said. Elucidating why an experiment could not be replicated could lead to a new discovery or a new research question. Transparency is essential to being able to replicate an experiment or to understand why it could not be replicated.

One current area of focus for NIH is rigor and reproducibility in animal research. Wolinetz pointed out that a large amount of NIH-funded research involves animal models, which she said are “incredibly important for advancing our understanding of biology and seeking new treatments for human diseases.” Rigor in the design and conduct of experiments using animal models relates not only to designing appropriately powered experiments, but also to whether the animal model chosen is the best model to address the research question. Wolinetz noted that addressing rigor in animal research presents cultural challenges. In many cases, for example, a given animal model has been used by many researchers for many years, and there are numerous publications. Raising concerns that such a model might not be appropriate to answer the questions asked or to model a particular human condition can meet resistance, because the subject-matter experts on that model have a vested interest in continuing to use it. A working group of the NIH Advisory Committee to the Director has been charged with considering rigor in animal research and making recommendations for improvement.5

DISCUSSION

Preserving Mertonian Norms in Science

Richard Sever, co-founder of bioRxiv and medRxiv and executive editor at the Cold Spring Harbor Laboratory Press, observed that the environment for early career investigators is more competitive than ever. The number of doctoral students continues to increase, but the number of tenured faculty positions has remained steady. He suggested that the lack of job security may be related to the lack of willingness to be transparent with one’s research and that “the academic career structure … is now actively blocking transparency.” Casadevall said students should be made aware that scientists are needed to fill many roles outside of academic tenure-track positions.

___________________

5 See https://acd.od.nih.gov/working-groups/eprar.html (accessed November 20, 2019).

Sever also suggested that the obsession with publishing in high-impact journals is one of the causes of the culture shift from the norms of science toward the counternorms. The consequences of not publishing in high-impact journals are perceived as severe (e.g., unemployment). Casadevall said the impact factor obsession is widely acknowledged as a problem, but it persists because scientists are invested in the system. He suggested that nothing will change unless a large number of prominent scientists decide to take action. “The problem is sociological,” he said. Valda Vinson, research editor at Science, added that impact factors were never intended to be used in this way (i.e., as a metric for hiring, tenure, or promotion).

McCray mentioned the Public Library of Science (PLOS) as an example of an initiative taken by scientists to improve openness and transparency. Casadevall described several examples of how he has been working to change these practices locally. As a department chair at Johns Hopkins, for example, he was able to change the hiring process for new faculty so that a curriculum vitae is not ranked by the impact factors of the papers listed. Rather, the content of the papers was considered, and applicants were selected for interviews based on the work described. Similarly, he and others were able to dissuade the tenure and promotions committee from requiring impact factors on a curriculum vitae. He described this as an ongoing battle that must be fought every day, by many people, on multiple levels before change will take hold. Casadevall and McCray encouraged participants to advocate for change in their departments and organizations. Goodman shared that Stanford is also moving away from counting publications and considering the journals they were published in for promotion review, and moving toward reading a sample of papers in depth for quality. The challenge of assessing quality instead of quantity, however, is reaching consensus on what constitutes quality, and how the quality of a candidate’s publications can be assessed efficiently and consistently given that several individuals will each read one paper and write their analysis and recommendation. He observed that peer reviews of a given manuscript submitted for publication can vary widely. Casadevall agreed that robust mechanisms are needed and that methods to assess quality and rigor need to be studied.

Nosek suggested that, while changing the overall structure of publishing is a long-term goal, the focus now should be on incentivizing near-term behavioral changes. Scientists will continue to seek to publish in top-tier journals, he said. What can be changed is how those journals evaluate manuscripts for publication (e.g., implement requirements for rigor, transparency, preregistration) and how those publications are used by others, such as tenure committees (e.g., assess the quality of several papers rather than simply counting the number of publications in high-impact journals).

Facilitating Reproducibility

Jennifer Heimberg, senior program officer at the National Academies, pointed out that all publications in the Journal of Visualized Experiments (JoVE) include video recordings demonstrating the methods reported in the papers. In addition to increasing transparency, she said this approach allows for communication of nuances that might be missed in a written protocol. Casadevall noted that he has published in JoVE and Carrasquillo said that a JoVE paper contributed to the successful replication of a method by her laboratory, but both agreed that the weight of publications in JoVE and other journals of its type is lower than for publications in higher impact journals despite the valuable contribution of JoVE papers to replicability and training.

Guna Rajagopal, vice president of computational sciences, discovery science at Janssen R&D, shared that they and other pharmaceutical research programs often contact the authors of publications to discuss their studies and send company scientists to the authors’ laboratories for several months to work together to address any issues and to reproduce the data, with support from the company. Unfortunately, the resulting information remains within the company due to intellectual property concerns. Casadevall acknowledged this concern and urged Rajagopal to find a way to share the information learned from these replication studies through peer-reviewed publications.

Correcting the Literature

Thomas Curran observed that, in his experience, high-impact journals are not interested in correcting the historical literature. Vinson emphasized that the integrity of the material a journal publishes is “the most important thing,” and she supported efforts to change the metrics used for tenure and hiring decisions. She was very concerned about Curran’s comment and was interested in being provided with examples. A challenge, she said, is that standards evolve, and the journal receives inquiries looking to apply a current standard to papers that were published prior to that standard being implemented. Another challenge is that, although journals do try to address concerns by negotiating corrections with authors, journals defer to the author’s institution to conduct an investigation.

Calling Out Bad Behavior

Carrasquillo observed that early career investigators can be hesitant to call out bad science because it could earn them a reputation as a “troublemaker” and result in them not being asked by journals to review papers anymore, or even in retaliation by other scientists. Casadevall responded that journals value rigorous review, and investigators should not be worried about expressing their concerns about a manuscript. On the other hand, public criticism is often made without having all of the facts. He encouraged researchers to work within the system, gathering data on published errors, publishing them in peer-reviewed journals, and only then discussing the findings publicly, as was done with the analysis of figure problems he described. Wolinetz agreed that many people are hesitant to call out bad behavior by others because the culture in science places extremely high value on reputation. The perception that pointing out bad behavior leads to retaliation and damages reputation is itself a problem, Wolinetz said, because the perception alone is enough to drive behavior and reinforce an undesirable culture, even if the reality is different. Carrasquillo agreed with Casadevall about the need to remove the stigma of “bad behavior” from retractions and for researchers not to take personal offense at errors being flagged.

Sustainability and Cost Considerations

Curran raised the issue of sustainability, noting that in some cases, volumes of data from NIH-supported data acquisition studies have become inaccessible after the end of the study due to a lack of funding at NIH to maintain the online database. Wolinetz agreed and said NIH is aware of these sustainability issues. This example demonstrates the importance of infrastructure and tools for data sharing and transparency. She emphasized that experimentation and infrastructure should not be pitted against each other when it comes to determining funding priorities.

Malcolm Macleod, professor at the University of Edinburgh, observed that there are many who call for improving the quality of science, but few who are prepared to invest the resources and commit to the extensive work that is required to ensure that quality. Simply increasing incentives will not cause the system to self-correct. Casadevall agreed and added that it is expensive for journals to hire staff to address these issues, and doing so could lead to higher journal publishing fees. Correcting errors after publication is even more expensive, he said.

Wolinetz added that the practical realities and downstream consequences of implementing any policy need to be recognized. “Are we willing to pay for the values that we are espousing, and what are the downstream consequences?” For example, to increase rigor, certain standards of statistical analysis could be enforced that would result in the need for more animals per study, which increases the cost per study. Given a fixed amount of funding to award, this results in an agency being able to fund fewer studies. She emphasized the need to think holistically and balance the trade-offs.