2

Methods and Organization

This chapter addresses the methods used by EPA for the systematic reviews in the 2022 Draft Assessment (EPA, 2022a), for synthesis within evidence streams and evidence integration across streams, and for the selection of studies used to derive the reference concentration (RfC) and unit risk estimates. The chapter provides the committee’s assessment of these methods and addresses the organization of the 2022 Draft Assessment. Subsequent chapters provide the committee’s scientific review of the hazard identification conclusions and risk analyses of formaldehyde presented in the Draft Assessment for noncancer and cancer outcomes.

The committee’s approach to assessing the methods underlying the 2022 Draft Assessment encompassed several steps. First, the committee considered whether the methods used were aligned with the general state of practice for systematic review, evidence synthesis, and hazard identification (i.e., evidence integration). Second, the committee considered whether the methods used responded to the advice that National Academies reports had offered the IRIS Program and that was incorporated in the development of the 2022 Draft Assessment. Finally, the committee reviewed the documentation of the methods in the Draft Assessment and its appendices. To evaluate whether the 2022 Draft Assessment was transparent enough to support replicability and evaluation of the process by an independent third party, the committee conducted a case study for the outcome of sensory irritation. To test for replicability, the committee applied the documented steps of the assessment framework as contained in the three volumes of the 2022 Draft Assessment (i.e., the Assessment Overview, the Main Assessment, and the Appendices). It also completed a related case study for sensory irritation involving the calculation of reference concentrations based on two epidemiological studies.

THE STATE OF PRACTICE FOR SYSTEMATIC REVIEW

According to the Institute of Medicine report Finding What Works in Health Care: Standards for Systematic Reviews (IOM, 2011, p. 2), systematic review is “a scientific investigation that focuses on a specific question and uses explicit, prespecified scientific methods to identify, select, assess, and summarize the findings of similar but separate studies.” Systematic review allows for transparency of processes and methods used in an assessment and also provides structure for expert judgment. Expert judgment is integral to the systematic review process, from question formulation, protocol development, study selection, and evaluation through determination of the strength of evidence of each evidence stream. It is particularly influential at the evidence integration step. Throughout the IRIS assessment process, multidisciplinary expertise is needed from individuals able to view evidence holistically and reach judgments regarding its fit within the review schema. Expert judgment is needed to reconcile conflicting evidence and uncertainties, and to reach consensus and closure across the steps of the assessment process.

Comparison of the methods used for the 2022 Draft Assessment against a single gold-standard approach is complicated by the protracted period over which the review was conducted, from 2012 to 2022. During this decade, the state of practice for systematic review related to environmental exposures was evolving, with national and international working groups developing guidance and tools for systematic reviews relating to environmental health. In addition to efforts at EPA, systematic review approaches were being advanced by the Navigation Guide group at the University of California, San Francisco; the Office of Health Assessment and Translation (OHAT) of the National Toxicology Program (NTP); the Texas Commission on Environmental Quality (TCEQ); the European Food Safety Authority (EFSA); and a collaboration of the World Health Organization and the International Labour Organization (Woodruff and Sutton, 2014; Schaefer and Meyers, 2017; Descatha et al., 2018; A. J. Morgan et al., 2018; NTP, 2019). The International Agency for Research on Cancer (IARC) Monographs Preamble approach for evidence integration, which has been adopted by many systematic review frameworks, was also updated during this period (IARC, 2019; Samet et al., 2020).

The 2011 National Research Council (NRC) committee anticipated the evolution of systematic review methods for environmental health and advised EPA that “although the committee suggests addressing some of the fundamental aspects of the approach to generating the draft assessment later in this chapter, it is not recommending that the assessment for formaldehyde await the possible development of a revised approach” (NRC, 2011, p. 151).

The 2014 NRC report Review of EPA’s Integrated Risk Information System (IRIS) Process provides a framework for the IRIS process, showing where systematic review fits into the process and documenting the parallel, but separate, reviews for the three relevant evidence streams: human, animal, and mechanistic. The framework describes the flow of evidence utilization through the steps of evaluation of studies, evidence integration for hazard identification, and derivation of toxicity values. The report was intended to provide overall guidance as EPA moved forward with changes to the IRIS process (NRC, 2014).

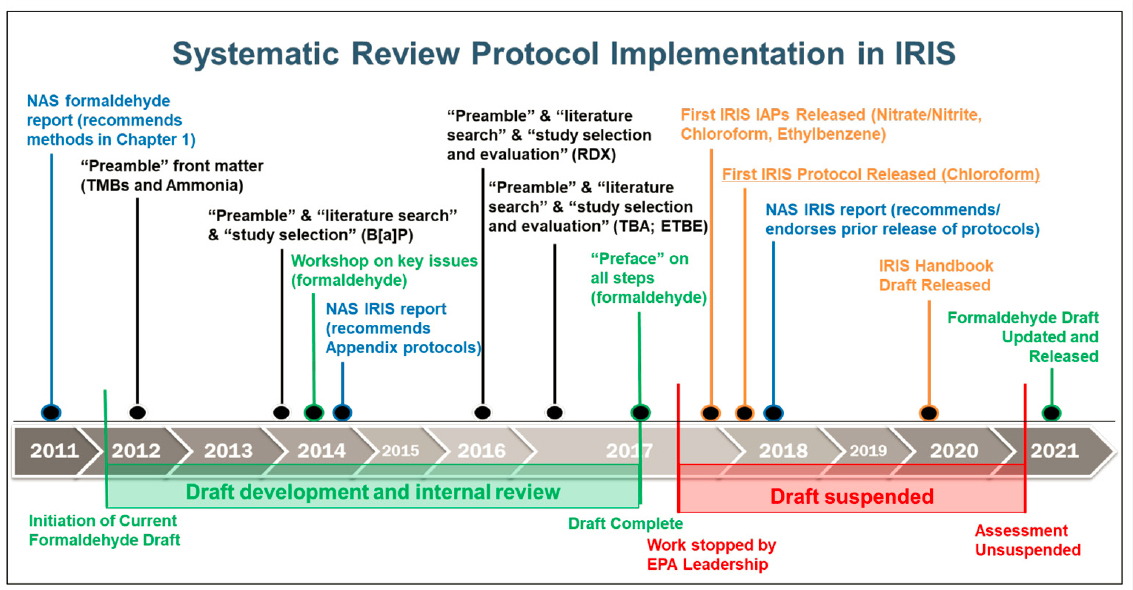

Consequently, the procedures used by the IRIS Program were evolving over the period during which the 2022 Draft Assessment was being developed. The IRIS Handbook (EPA, 2022b), which would provide detailed and codified guidance for developing IRIS assessments and make the IRIS process transparent to stakeholders, was also being prepared during this time (Figure 2-1). The use of prereviewed—that is, publicly released—protocols to document the methods to be used in an IRIS systematic review began in 2018 (EPA, 2018), and the IRIS Handbook was released in draft in 2020 and finalized in 2022 following a review by the National Academies of Sciences, Engineering, and Medicine (NASEM). Further, work on the 2022 Draft Assessment was suspended in 2018 after the draft’s completion in 2017. When EPA resumed work on the draft in 2021, systematic evidence mapping was used to identify newer publications that would potentially be “impactful” if added to the evidence gathered through 2017.

Thus, this committee’s evaluation of the methods used by EPA for hazard assessment and for dose-response assessment is complicated by the 11-year timeframe of the draft’s development, the fluidity of the IRIS Handbook over time, and the continued evolution of systematic review methods for environmental agents generally.

RESPONSE TO THE 2011 NRC REPORT

The 2011 NRC committee reviewed EPA’s 2010 Draft Assessment (NRC, 2011). That committee’s report identified many issues with the methods used and the organization and transparency of the assessment. Subsequent NRC and NASEM committees reviewed the procedures used by the IRIS Program and provided advice on how EPA could resolve many of these issues, recommending the incorporation of systematic review methodology and greater transparency of methods for evidence synthesis and integration (NRC, 2014; NASEM, 2018).

The overall approach to revising the 2010 Draft Assessment was motivated by the general and specific comments of the 2011 NRC report. Appendix D of the 2022 Draft Assessment provides a 37-page response to the comments in the 2011 NRC committee’s report, responding to both the specific and general comments in the 2011 report while also pointing out that the 2022 Draft

SOURCE: EPA’s responses to committee’s questions (see https://www.nationalacademies.org/documents/embed/link/LF2255DA3DD1C41C0A42D3BEF0989ACAECE3053A6A9B/file/D3A22C29743668E583CD8759F633481333EE3E7ECF54?noSaveAs=1 [accessed May 11, 2023]). Abbreviations: B[a]P = benz[a]anthracene; EPA = Environmental Protection Agency; ETBE = ethyl tertiary butyl ether; IAP = IRIS assessment plan; IRIS = Integrated Risk Information System; RDX = hexahydro-1,3,5-trinitro-1,3,5-triazine; TBA = tert-butyl alcohol.

Assessment “is a completely different document.” Given the scope of the revisions and methodological changes, the present committee did not review specific changes in the 2022 Draft Assessment against the recommendations in the 2011 NRC report, although it did find Appendix D to be responsive to the methodological concerns raised in that report.

For example, the committee that prepared the 2011 NRC report recommended that EPA use biologically based dose-response (BBDR) models for formaldehyde (Conolly et al., 2004) in its cancer assessment and discuss the strengths and weaknesses of using the BBDR models. The 2022 Draft Assessment presents rat and human risk estimates based on BBDR modeling to estimate points of departure from the animal nasal cancer data, and EPA explored uncertainties that occur when these models are used for low-dose risk estimation. Ultimately, EPA chose not to use the full rat and human BBDR models to estimate unit risks because its analysis showed instability of estimates in the human extrapolation modeling provided by Conolly et al. (2004). The cancer unit risks are based on human epidemiology data instead.

Finding: The 2022 Draft Assessment responds to the broad intent of the 2011 NRC report.

RESPONSE TO THE 2014 NRC REPORT

The 2014 NRC report provided general guidance on the IRIS Program, offering a framework for its review processes. The report assessed scientific, technical, and process changes made by EPA in response to the 2011 NRC recommendations. The 2014 NRC committee noted that EPA was making significant progress and was incorporating systematic review principles as it implemented changes to the IRIS process. The 2014 NRC report highlighted the standards detailed in the above-mentioned Institute of Medicine report Finding What Works in Health Care: Standards for Systematic Reviews (IOM, 2011), providing specific recommendations for each step of the systematic review process.

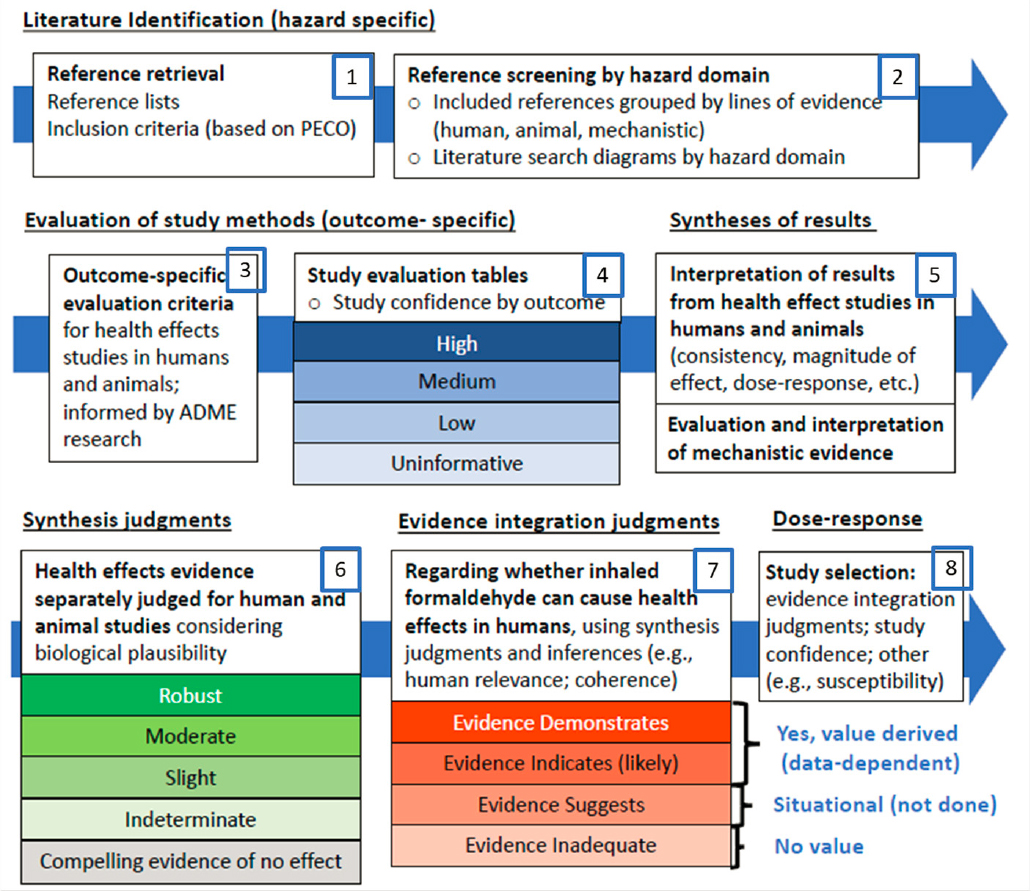

The methods used in the 2022 Draft Assessment encompass eight steps that generally align with the processes described in the 2014 NRC report: evidence identification, evidence evaluation, evidence synthesis, and evidence integration to inform hazard identification and dose-response estimation.

Finding: The 2022 Draft Assessment is responsive to the broad intent of the 2011 NRC review of EPA’s 2010 Draft Assessment and the 2014 NRC review of the IRIS process.

ALIGNMENT OF THE 2022 DRAFT ASSESSMENT METHODS WITH THE STATE OF PRACTICE

The following sections provide the committee’s critique of EPA’s methods for each aspect of the systematic review. Figure 2-2 shows an overview of the EPA process; the committee’s review is framed by these steps, numbered for convenience from 1 to 8. In discussing the steps, the committee provides a brief description of the current state of practice and prior advice to EPA from NRC/NASEM to frame the review that follows.

NOTE: Modified from EPA’s presentation to the committee on October 12, 2022.

DOCUMENTATION OF METHODS

State of Practice

A human health risk assessment of a chemical includes an initial scoping and problem formulation step to inform the development of the research questions and the best evidence-based approach for answering them (NRC, 2009). Problem formulation entails stakeholder engagement as well as a broad literature search, which then can inform more specific research questions. The research questions inform the development of the assessment methods, which are typically documented in a protocol that is established before the assessment begins. The protocol provides comprehensive documentation of inclusion and exclusion criteria for compiling evidence, as well as the methods for the subsequent steps of the systematic review. These steps include evaluating the internal validity of individual studies using appropriate risk-of-bias tools, synthesizing evidence within an evidence stream, and integrating evidence across evidence streams to reach overall hazard conclusions and support the selection of studies for dose-response analyses. The protocol needs to include a level of detail sufficient to support replication of the approach followed.

Documenting planned methods and any deviations from them makes it possible to trace every step of the study compilation, study evaluation, and evidence synthesis and integration processes (Figure 2-2). This level of documentation is the foundation for transparency and has been recommended for systematic reviews in clinical fields since at least 2011 (Cochrane Collaboration, 2011; IOM, 2011). It remains the state of practice, although it was not adopted for systematic reviews in environmental health until 2014, when the Navigation Guide published a protocol for a review of triclosan (Johnson et al., 2016). The IRIS Handbook specifies the publication of an assessment plan, which describes what the assessment will cover, and a systematic review protocol, which describes how the assessment will be conducted (EPA, 2022b).

Prior Advice to EPA from the National Academies

The 2011 NRC committee recommended that the revised EPA assessment include a full discussion of the methods used, including the following elements:

- Clear, concise statements of criteria used to exclude, include, and advance studies for derivation of the RfCs and unit risk estimates.

- Thorough evaluation of all critical studies for strengths and weaknesses using uniform approaches; the findings of these evaluations could be summarized in tables to ensure transparency.

- Clear articulation of the rationales for selection of studies that are used to calculate RfCs and unit risks.

- Indication of the various determinants of “weight” that contribute to the weight-of-evidence descriptions, so that the reader is able to understand what elements (such as consistency) were emphasized in synthesizing the evidence.

The 2014 NRC committee called for the development of a protocol outlining the methods for identifying and evaluating studies, for integrating evidence to reach hazard conclusions, and for supporting dose-response analyses.

The IRIS Handbook was developed over the last decade. The NASEM committee that reviewed the 2020 version of the IRIS Handbook recommended creation of a time-stamped, read-only final version of the protocol before the assessment was performed. The 2022 NASEM committee also recommended clarifying that the protocol would constitute a complete account of planned methods (NASEM, 2022).
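The idea of a time-stamped, read-only protocol can be illustrated with a short Python sketch. When a protocol is finalized, the sketch records a SHA-256 digest of its text together with a UTC timestamp. It can later check whether the text has changed. This is only one possible mechanism, chosen for illustration. It is not a procedure described by EPA or NASEM, and the helper names `freeze_protocol` and `is_unchanged` are hypothetical.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical helpers for illustration; not part of any EPA or NASEM tooling.

def _digest(text: str) -> str:
    """SHA-256 hex digest of the protocol text, encoded as UTF-8."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def freeze_protocol(text: str) -> dict:
    """Record the digest and a UTC timestamp at the moment the protocol is finalized."""
    return {"sha256": _digest(text), "frozen_at": datetime.now(timezone.utc).isoformat()}

def is_unchanged(text: str, record: dict) -> bool:
    """True if the current text still matches the digest recorded when it was frozen."""
    return _digest(text) == record["sha256"]

record = freeze_protocol("Planned methods, version 1.0")
print(is_unchanged("Planned methods, version 1.0", record))  # True
print(is_unchanged("Planned methods, version 1.1", record))  # False
```

Under this approach, any later change to the planned methods appears as a digest mismatch. The change would then be documented as a deviation rather than silently folded into the protocol.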

Approach to Documentation of Methods in the 2022 Draft Assessment

To conduct the case study on sensory irritation (Box 2-1), the committee followed methods that are described across several different documents, as the 2022 Draft Assessment does not document methods in specific protocols for the various outcomes. Instead, EPA describes the assessment methods generally in the preface to the Main Assessment and the Assessment Overview. Specific methods for each health outcome are described in the Main Assessment and the Appendices of the 2022 Draft Assessment. EPA notes that presenting “the assessment methods within the assessment documents rather than in a separate protocol is consistent with the practices within the IRIS Program at the time the formaldehyde assessment was being developed during 2012–2017” (EPA, 2023, p. 2).

The committee asked EPA about the use of protocols and documentation of methods in the 2022 Draft Assessment. EPA provided a table outlining where procedures that map to the steps shown in Figure 2-2 are described in the 2022 Draft Assessment. In response to the committee’s questions, EPA pointed to the descriptions of its methods in the “Preface on Assessment Methods and Organization” and the additional and more detailed health effect–specific methodological considerations provided in the Appendices.

Finding: EPA did not develop a set of specific protocols for the 2022 Draft Assessment in a fashion that would be consistent with the general state of practice that evolved during the prolonged period when the assessment was being developed. Instead, EPA described the assessment methods across the three documents that together make up the 2022 Draft Assessment: The Main Assessment (789 pages), accompanying Appendices (1059 pages), and an Assessment Overview (192 pages).

Conclusion: Prepublished protocols are essential for future IRIS assessments to ensure transparency for systematic reviews in risk assessment.

Finding: The committee’s review of the 2022 Draft Assessment documents the challenges faced by users of the assessment in navigating the voluminous documentation and understanding the methods used and evidence assessed. EPA needs to revise the Draft Assessment to ensure that the methods used for each outcome can easily be found by providing a linked roadmap and merging the descriptions of the methods used for each outcome in a single location.

Recommendation 2.1 (Tier 1): EPA should revise its assessment to ensure that users can find and follow the methods used in each step of the assessment for each health outcome. EPA should eliminate redundancies by providing a single presentation of the methods used in the hazard identification and dose-response processes. A central roadmap and cross-references are also needed to facilitate access to related sections across the different elements of the assessment (e.g., Appendices, Main Assessment) for the different outcomes analyzed. Related Tier 2 recommendations would amplify the impact of this Tier 1 recommendation in improving the assessment.

Recommendation 2.2 (Tier 2): In updating the assessment in line with the Tier 1 Recommendation 2.1, EPA should further clarify the evidence review and conclusions for each health outcome by giving attention to the following:

- Using a common outline to structure the sections for each health outcome in order to provide a coherent organization that has a logical flow, by

  - adding an overview paragraph to guide readers at the start of sections for each of the various health domains, and

  - including hyperlinks to facilitate crosswalking among sections within the document;

- Moving lengthy information that is not directly used to an appendix;

- Including a succinct executive summary in the Main Assessment; and

- Performing careful review and technical editing of the documents for consistency across the multiple parts of the 2022 Draft Assessment, including across the Assessment Overview and Appendices. (The Assessment Overview could be entirely removed if the above recommendations were carried out.)

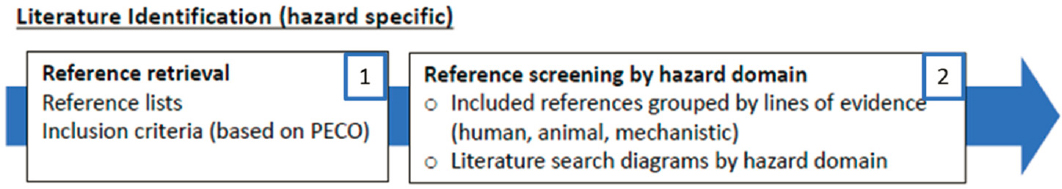

EVIDENCE IDENTIFICATION (STEPS 1 AND 2)

Aspects of EPA’s approach relevant to evidence identification are presented in Figure 2-3.

State of Practice

Evidence identification involves developing a PECO (population, exposure, comparator, outcome[s]) statement to guide the search strategy. As the next step, a trained librarian or informationist should search multiple databases to identify a broad pool of articles to be screened for inclusion. Abstract screening is typically then conducted by at least two reviewers working independently, and decisions are made as to whether to include or exclude each article. These decisions should be documented and made in accordance with criteria for inclusion and exclusion that are established a priori.
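The screening step described above can be sketched in Python as two parts. The first is a PECO statement recorded as structured data. The second is a check that flags every record on which two independent screeners disagree, or that only one of them has screened, so that those records go to consensus. This is a minimal illustration. The class and function names, record IDs, and PECO wording are all hypothetical and are not drawn from the 2022 Draft Assessment.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PECO:
    """Hypothetical a priori inclusion criteria for one review question."""
    population: str
    exposure: str
    comparator: str
    outcomes: tuple[str, ...]

@dataclass(frozen=True)
class Decision:
    include: bool
    reason: str  # the documented a priori criterion the reviewer applied

def find_conflicts(a: dict[str, Decision], b: dict[str, Decision]) -> list[str]:
    """IDs of records the two reviewers decided differently, or that only one screened."""
    conflicts = []
    for rid in sorted(a.keys() | b.keys()):
        da, db = a.get(rid), b.get(rid)
        if da is None or db is None or da.include != db.include:
            conflicts.append(rid)
    return conflicts

# Hypothetical example data.
peco = PECO(
    population="Humans of any age",
    exposure="Inhalation exposure to formaldehyde",
    comparator="Lower or no formaldehyde exposure",
    outcomes=("sensory irritation",),
)
reviewer_a = {"rec1": Decision(True, "meets PECO"),
              "rec2": Decision(False, "exposure not by inhalation")}
reviewer_b = {"rec1": Decision(True, "meets PECO"),
              "rec2": Decision(True, "meets PECO"),
              "rec3": Decision(False, "no original data")}
print(find_conflicts(reviewer_a, reviewer_b))  # ['rec2', 'rec3']
```

Records flagged in this way would be resolved by discussion or by a third reviewer. The resolution would be logged together with the criterion applied, so that each inclusion or exclusion decision remains traceable.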

Prior Advice to EPA from the National Academies

The 2014 NRC committee advised EPA to include a section on evidence identification that “is written in collaboration with information specialists trained in systematic reviews and that includes a search strategy for each systematic-review question being addressed in the assessment. Specifically, the protocols should provide a line-by-line description of the search strategy, the date of the search, and publication dates searched and explicitly state the inclusion and exclusion criteria for studies” (NRC, 2014, p. 59). Additionally, the 2014 committee recommended that evidence identification involve a predetermined search of key sources, follow a search strategy based on empirical research, and be reported in a standardized way that would allow replication by others, and that the search strategies and sources be modified as needed on the basis of new evidence on best practices. The 2014 committee also recommended that contractors who perform the evidence identification for a systematic review adhere to the same standards and provide evidence of experience and expertise in the field.

Approach to Evidence Identification in the 2022 Draft Assessment

The steps EPA followed in conducting the literature search and the PECO assessment for inclusion and exclusion of studies are described in the Main Assessment as well as in the Appendices. For each outcome, the literature search strategy is documented, and inclusion and exclusion criteria based on the PECO statement are detailed in a table. EPA updated its literature searches annually through September 2016. After work on the assessment resumed, systematic evidence mapping was used to search for studies published from 2017 through 2021.

Finding: EPA’s literature search strategies for the 2022 Draft Assessment are adequate and consistent with the state of practice at the time. EPA appears to have sufficiently harmonized the pre- and post-2016 literature searches, which were conducted using two different methods. Although the search strategies are adequately documented, the origins of the various PECO statements are less clear. In particular, across noncancer outcomes, the rationale for excluding studies on the basis of population, exposure, and outcome is not well documented. For the literature searches, EPA used four databases, curated reference lists in published reviews, and “other national or international health assessments of formaldehyde,” consistent with prior NASEM guidance (see Appendix C). As an example, see the literature search documentation for sensory irritation (pp. A-231–232).

Recommendation 2.3 (Tier 2): EPA should expand the text explaining the choices of the elements of the PECO statements.

STUDY EVALUATION (STEPS 3 AND 4)

In this step of the IRIS process (see Figure 2-4), the human and animal studies selected for inclusion are assessed for study quality, including risk of bias.

State of Practice

The state of practice for study evaluation is that each selected study be assessed for risk of bias using a tool that evaluates whether a study may be affected by systematic errors that impact internal validity and cause the results to deviate from the truth. The potential for error in a study can be assessed, but quantitative estimation of the magnitude of any bias may not be possible. Classes of bias include selection bias, information bias (such as misclassification of exposure or outcome), confounding, selective reporting of study findings, missing data, and inappropriate statistical analysis (Frampton et al., 2022). Two reviewers independently evaluate each study, and any disagreements in rating of the evaluation domains are resolved by consensus, as is described in the IRIS Handbook.

GRADE (Grading of Recommendations, Assessment, Development, and Evaluations) is an example of a transparent framework for developing and presenting evidence summaries that provides a systematic approach for making clinical practice recommendations (Guyatt et al., 2008a,b, 2011). GRADE is the most widely adopted tool for classifying the quality of evidence and for making recommendations, and is officially endorsed by more than 100 organizations worldwide.

While GRADE was developed for randomized controlled trials, since 2011 work has been in progress to adapt it for epidemiological and animal studies. Examples include the ROBINS-I tool for nonrandomized (epidemiological) studies (Sterne et al., 2016); its use is explained in Schünemann et al. (2019). Hooijmans’ SYRCLE risk-of-bias tool is typically used to grade risk of bias in animal studies of interventions (Hooijmans et al., 2014). OHAT has adopted these tools for toxicological applications in the OHAT risk-of-bias tool (NTP, 2015), which is based on GRADE. Tools and criteria that guide judgments for each bias domain and for each outcome are preestablished during the protocol development stage, thereby calibrating how judgments are made so that decisions will be transparent and replicable.

Prior Advice to EPA from the National Academies

The 2011 NRC review of EPA’s 2010 Draft Assessment provided considerations for a template for evaluating epidemiologic studies (NRC, 2011, p. 158). The elements include many of the sources of bias that are commonly addressed in risk-of-bias tools, including selection bias, information bias, and confounding (Box 2-2).

Approach to Study Evaluation in the 2022 Draft Assessment

EPA assessed the risk of bias for included studies using a domain-based approach. The domains included were related to study factors that influence internal validity, that is, whether the study is potentially affected by bias, which can lead to under- or overestimation of risk. The domains used for study evaluation are described in the Assessment Overview, the preface of the Main Assessment, and Appendix A. The descriptions are somewhat different in each location. For example, the Assessment Overview does not describe the domains used. Instead, it provides considerations for reviewing different types of research studies: observational epidemiologic studies, controlled human exposure studies, animal toxicological studies, and mechanistic studies. The preface of the Main Assessment (Figure II) describes four domains that are used to summarize the study quality of epidemiological studies: the potential for selection bias, information bias, and confounding, along with other considerations.

Finally, in Appendix A, Section A.5.1 (pp. A-232 to A-233) five domains are described for epidemiological studies:

- Population selection: Recruitment, selection into study, and participation independent of exposure status, reported in sufficient detail to understand how subjects were identified and selected.

- Information bias: A validated instrument for data collection described or citation provided. Outcome ascertainment conducted without knowledge of exposure status. Timing of exposure assessment appropriate for observation of outcomes. Information provided on the distribution and range of exposure with adequate contrast between high and low exposure.

- Potential for confounding: Important potential confounders addressed in study design or analysis. Potential confounding by relevant coexposures addressed.

- Analysis: Appropriateness of analytic approach given the design and data collected; consideration of alternative explanations for findings; presentation of quantitative results.

- Other considerations not otherwise evaluated: Sensitivity of study (exposure levels, exposure contrast, duration of follow-up, sensitivity of outcome ascertainment).

Appendix A, Sections A.5.2–A.5.9, includes the study-specific evaluations and provides study tables (e.g., Table A-34), with one row per study that considers six domains for noncancer endpoints: (1) consideration of participant selection and comparability, (2) exposure measure and range, (3) outcome measure, (4) consideration of likely confounding, (5) analysis and completeness of results, and (6) size. For studies of cancer outcomes, the domains are the same, except that sensitivity is considered instead of size.

For noncancer outcomes, EPA documents how judgments are made for each domain, in a generic sense. It does so in tables documenting risk-of-bias decisions, which give some description of how studies are classified as having a high, medium, or low level of confidence or as not informative. These tables have two columns, one for exposure and one for study design and analysis (see, e.g., Table A-33). The descriptions in the table cells sometimes mention risk-of-bias domains described elsewhere in the document. However, the tables have only two column headings rather than one for each of the six domains. As a result, the criteria for the high, medium, low, and not informative classifications are not documented domain by domain. For cancer outcomes, the risk-of-bias domains are described generally for each outcome. However, there is no clear description of how judgments were made in classifying studies as having high, medium, or low confidence or as not informative for each risk-of-bias domain.
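The documentation gap can be made concrete with a short Python sketch. It assumes a per-study record with one judgment for each of the six noncancer domains listed in Appendix A, with sensitivity replacing size for cancer outcomes. It then reports which domain-level judgments are missing. Several details are illustrative assumptions rather than EPA's actual format: the data layout, the function name `undocumented_domains`, and the reuse of the high/medium/low/not informative labels at the domain level.

```python
# Domains as listed for noncancer endpoints in Appendix A of the 2022 Draft Assessment.
NONCANCER_DOMAINS = (
    "participant selection and comparability",
    "exposure measure and range",
    "outcome measure",
    "consideration of likely confounding",
    "analysis and completeness of results",
    "size",
)
# Cancer outcomes use the same domains, with sensitivity in place of size.
CANCER_DOMAINS = NONCANCER_DOMAINS[:-1] + ("sensitivity",)

# Assumed domain-level labels (hypothetical; mirrors the study-level classification).
LABELS = {"high", "medium", "low", "not informative"}

def undocumented_domains(
    ratings: dict[str, dict[str, str]],
    domains: tuple[str, ...] = NONCANCER_DOMAINS,
) -> dict[str, list[str]]:
    """Map each study ID to the domains that lack a recognized judgment."""
    gaps = {}
    for study, by_domain in ratings.items():
        missing = [d for d in domains if by_domain.get(d) not in LABELS]
        if missing:
            gaps[study] = missing
    return gaps

# Hypothetical example data.
ratings = {
    "study_x": {d: "medium" for d in NONCANCER_DOMAINS},
    # Only two of the six domains have a recorded judgment.
    "study_y": {"exposure measure and range": "medium",
                "analysis and completeness of results": "low"},
}
print(undocumented_domains(ratings))
```

A per-domain layout like this would let a reader trace each study-level confidence rating back to the individual domain judgments that support it.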

Animal studies are also evaluated in terms of domains that would influence internal validity or study sensitivity. Tables in Appendix A describe these domains, as does the text in the sections that precede the tables. For example, Section A.5.1 describes considerations for five general study quality categories: exposure quality, test animals, study design, endpoint evaluation, and data considerations and statistical analyses. The criteria for the epidemiology studies follow the same tabular organization described above (see Table A-57). For animal respiratory pathology, Section A.5.5 addresses additional factors for evaluating these studies, emphasizing certain aspects that would decrease study confidence and noting factors that are of less concern (e.g., methanol coexposure for portal-of-entry effects). Footnotes to the study results tables (e.g., Table A-59) provide characteristics of robust (++), adequate (+), or poor (gray shading) determinations for the individual evaluation domains. Some criteria are found only in the footnotes (e.g., protocol description, protocol relevance to humans), while others are included in different domains from those in the general categories presented in A.5.1 (“General Approaches to Identifying and Evaluating Individual Studies”).

Overall, while outcome-specific criteria used to evaluate studies were generally appropriate, the committee found it difficult to fully understand the final criteria that were applied, as well as the judgments made on overall study confidence for both human and animal studies. Comparable tables for different outcomes may not have sufficiently parallel descriptions.

Finding: EPA provides overall and outcome-specific evaluation criteria that are generally consistent with the common domains for risk-of-bias analysis and their application in practice. However, the criteria are found in several different locations in the documents and in some cases are inconsistently presented and integrated across the documents. As a result, the committee was challenged to reconstruct the study evaluation approach and how the criteria were applied for study evaluation.

Finding: The considerations listed for classification of study confidence and evaluation of each study by at least two independent experts are adequate. However, the committee’s case studies (see Box 2-1 and Appendix C) revealed inconsistencies in how evaluation criteria were described and applied in EPA’s evaluation of human and animal studies.

Recommendation 2.4 (Tier 2): EPA should thoroughly review the 2022 Draft Assessment documents to address issues of consistency and coherence so as to ensure that its methods can be applied and replicated with fidelity. The reviews for each outcome in Chapters 4 and 5 provide more specific guidance.

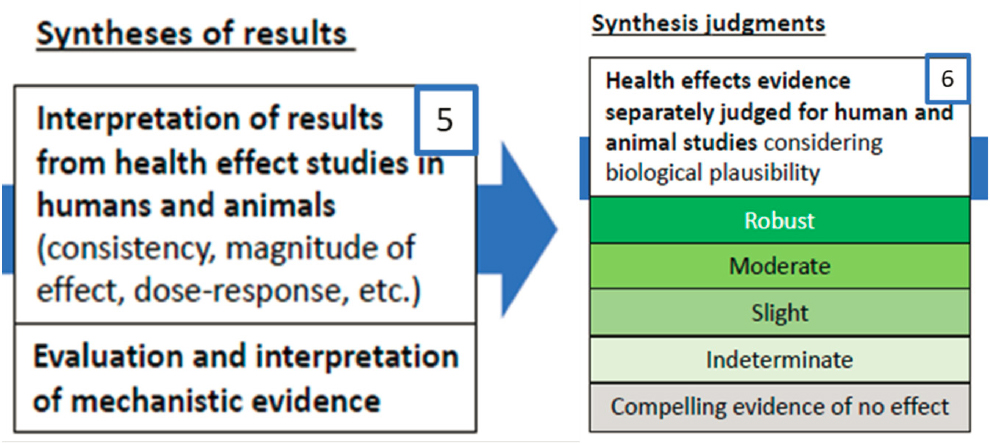

EVIDENCE SYNTHESIS (STEPS 5 AND 6)

Evidence synthesis involves separately interpreting the results from human, animal, and mechanistic studies and reaching judgments as to the strength of evidence in each evidence stream. Biological plausibility is an overarching consideration (Steps 5 and 6; see Figure 2-5).

State of Practice

Evidence synthesis is a process of bringing together data from a set of included studies with the aim of drawing conclusions about a body of evidence (Higgins et al., 2019). The process consists of summarizing study characteristics, quality, and effects, and combining results and exploring differences among the studies (e.g., variability of findings and uncertainties) using qualitative and/or quantitative methods. GRADE is the approach used most commonly in the clinical field for evidence synthesis, and several groups, including the National Academies (NASEM, 2017), are working to adapt it to environmental hazard assessments. The approaches developed by R. L. Morgan and colleagues (2016, 2019) and the Navigation Guide group (Woodruff and Sutton, 2014) are similar to the original GRADE approach, but use slightly different criteria for setting initial levels of certainty and for upgrading and downgrading certainty. The Navigation Guide was designed for human nonrandomized studies, whereas OHAT extended the approach to animal studies. Although approaches to evidence synthesis in environmental health differ slightly, they are converging on common baseline methods, such as the GRADE approach, which brings consistency. Moreover, the committee emphasizes that all of the above-mentioned methods have undergone pilot testing, stakeholder vetting, and peer review and have been made public.
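GRADE and its environmental health adaptations share an upgrade/downgrade logic that can be reduced to a shift along an ordinal scale. The Python sketch below simplifies deliberately. It treats each reason for downgrading or upgrading as moving the rating by a whole number of levels. It omits the judgment-based rules each framework adds, including the different criteria for initial certainty levels noted above. The function name `rate_certainty` is hypothetical.

```python
from typing import Optional

# Ordinal certainty scale, lowest to highest.
CERTAINTY = ("very low", "low", "moderate", "high")

def rate_certainty(initial: str,
                   downgrades: Optional[dict[str, int]] = None,
                   upgrades: Optional[dict[str, int]] = None) -> str:
    """Shift an initial certainty level down/up by whole levels, clamped to the scale.

    Keys name the reason for each adjustment (e.g., "risk of bias");
    values give the number of levels moved (typically 1 or 2).
    """
    if initial not in CERTAINTY:
        raise ValueError(f"unknown certainty level: {initial!r}")
    down = downgrades or {}
    up = upgrades or {}
    if any(v < 0 for v in (*down.values(), *up.values())):
        raise ValueError("adjustments must be non-negative")
    idx = CERTAINTY.index(initial) - sum(down.values()) + sum(up.values())
    return CERTAINTY[max(0, min(idx, len(CERTAINTY) - 1))]

# In original GRADE, evidence from randomized trials starts at "high"
# and evidence from observational studies starts at "low".
print(rate_certainty("low", upgrades={"dose-response gradient": 1}))            # moderate
print(rate_certainty("high", downgrades={"risk of bias": 1, "imprecision": 1}))  # low
```

Recording the reasons as named keys, rather than as bare counts, keeps the basis for each adjustment visible. Transparency of this kind is the property the frameworks above aim for.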

Approach to Evidence Synthesis in the 2022 Draft Assessment

EPA applies a set of considerations and a framework for assessing the strength of evidence in each of the evidence streams within an outcome class, which are described in the preface of the Main Assessment (p. xxxiii). The primary considerations for assessing the strength of evidence for health effects studies in humans and, separately, in animals fall into six categories: (1) risk of bias, sensitivity (across studies); (2) consistency; (3) strength (effect magnitude) and precision; (4) biological gradient/dose-response; (5) coherence; and (6) mechanistic evidence related to biological plausibility. In Table III, EPA describes the information most relevant for informing causality during evidence synthesis for all categories other than risk of bias, sensitivity (across studies). In Table IV, EPA notes what information would increase or decrease the strength of evidence for animal or human data streams.

The Main Assessment presents guidelines on how the literature for a particular endpoint and evidence stream should be considered in assigning a level of strength of evidence. A strength-of-evidence framework is provided for each evidence stream. The considerations for human evidence are given in Table VI, and those for animal evidence in Table VII. These tables describe the considerations required to reach the overall determination for a stream, which is expressed in one of five categories: robust, moderate, slight, indeterminate, or compelling evidence of no effect. The synthesis framework is applied to make the endpoint-specific and evidence stream–specific synthesis judgments.

Finding: In general, the strength-of-evidence categories are appropriate for the human and animal evidence streams and consistent with the state of practice.

EVIDENCE INTEGRATION (STEP 7)

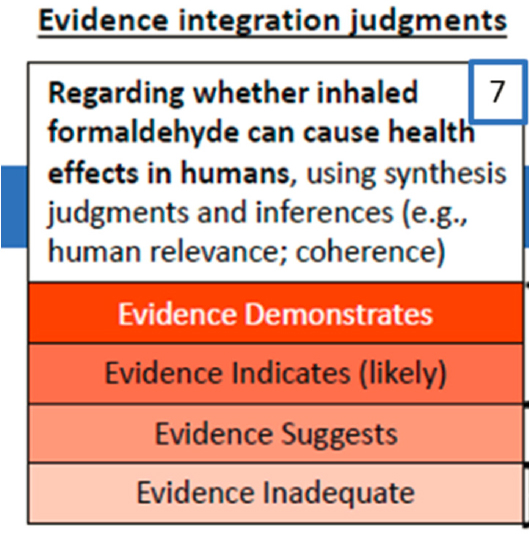

In this step of the IRIS process (see Figure 2-6), the evidence synthesized within the human, animal, and mechanistic streams receives an overall evaluation for the strength of evidence that an agent has caused one or more noncancer or cancer outcomes. This step completes the hazard assessment and classifies the relationship between exposure and health effect into one of four categories: (1) evidence demonstrates, (2) evidence indicates (likely), (3) evidence suggests (but is insufficient to infer), and (4) evidence inadequate.

State of Practice

The state of practice for evidence integration dates to landmark publications in the 1960s. These include the 1964 report of the U.S. Surgeon General’s Advisory Committee on Smoking and Health (Smoking and Health: Report of the Advisory Committee to the Surgeon General) and a paper by Sir Austin Bradford Hill (1965). Both offered comparable guidelines for evaluating the strength of evidence for causation (summarized in Box 2-3). Application of these guidelines is not algorithmic but involves the expert judgment of one or more reviewers or of a panel. Typically, a narrative review aligns the evidence with the guidelines and explains how the evidence fulfills their elements.

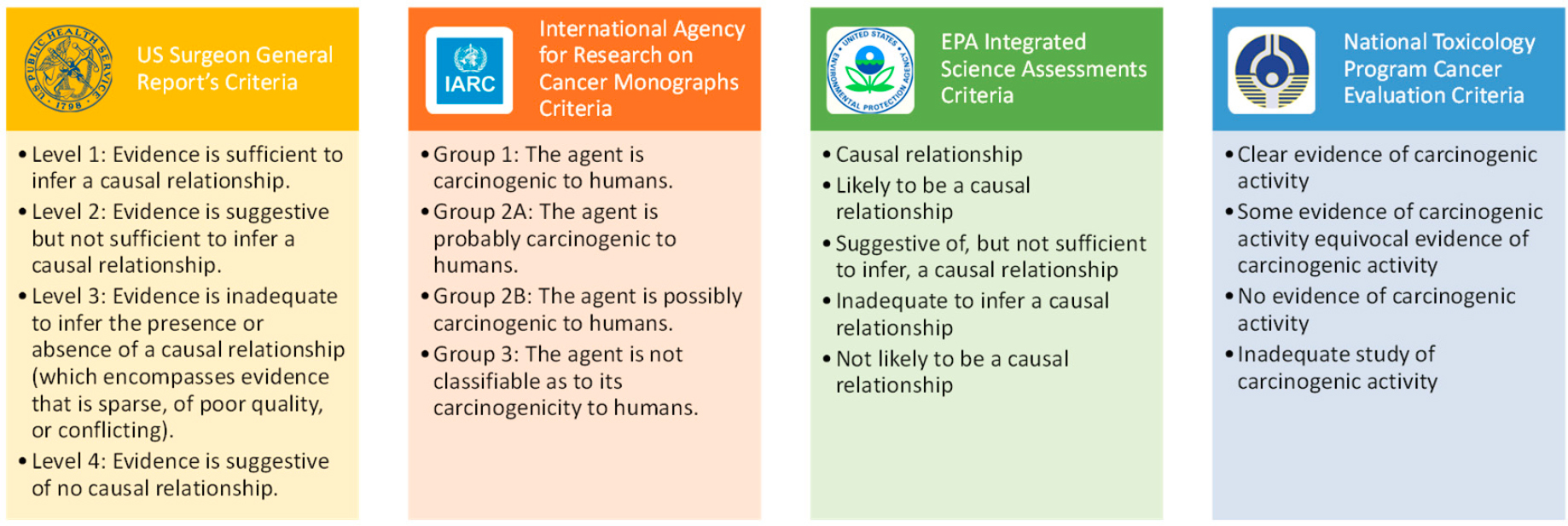

Additionally, the state of practice includes a hierarchical classification of the strength of evidence for causation. Four- and five-level schemes are generally employed, as in the Surgeon General’s reports on smoking and health, IARC’s Monographs on carcinogenicity, and EPA’s Integrated Science Assessments (ISAs) for the criteria air pollutants (Figure 2-7).

As mentioned, the GRADE framework was developed collaboratively by the GRADE Working Group and adopted by the Cochrane Collaboration for clinical evidence (Guyatt et al., 2008b). It is being adapted to decision making in environmental health (Cochrane Collaboration, 2011; R. L. Morgan et al., 2019). In this framework, the quality of evidence (termed certainty) is rated for each outcome. An overall GRADE certainty rating can be applied to a body of evidence across outcomes, usually by taking the lowest certainty among all the outcomes that are critical to decision making.

SOURCE: Warren et al., 2014; EPA, 2015; IARC, 2019; NTP, 2023.
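The GRADE rule for an overall rating, the lowest certainty among the outcomes critical to decision making, reduces to a minimum over an ordered scale. The sketch below assumes that per-outcome ratings already exist and that reviewers have flagged which outcomes are critical. The outcome names are hypothetical.

```python
from enum import IntEnum


class Certainty(IntEnum):
    """GRADE certainty levels, ordered so that a higher value means more certain."""
    VERY_LOW = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4


def overall_certainty(ratings: dict, critical: set) -> Certainty:
    """Return the lowest certainty among the outcomes flagged as critical.

    ratings maps an outcome name to its Certainty. Outcomes not named in
    `critical` (e.g., those rated only "important") do not affect the result.
    """
    if not critical:
        raise ValueError("at least one outcome must be marked critical")
    missing = critical - set(ratings)
    if missing:
        raise ValueError(f"no certainty rating for critical outcome(s): {sorted(missing)}")
    return min(ratings[outcome] for outcome in critical)
```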

Several other systematic review frameworks (e.g., Navigation Guide, OHAT) are consistent with the GRADE approach and yield hierarchical classifications in line with those given in Box 2-4 (NASEM, 2017). Judgments made using GRADE or other frameworks cannot be implemented mechanically; each decision necessarily requires a considerable amount of expert judgment. Two persons evaluating the same body of evidence might reasonably come to different conclusions about its certainty. What GRADE and other frameworks do provide is a reproducible and transparent framework for grading the certainty of evidence (Mustafa et al., 2013).

The NRC committee that conducted the 2014 review of the IRIS Program recommended that EPA maintain its current process of guided expert judgment but make its application of the process more transparent or adopt a structured process for evaluating evidence and rating recommendations. The 2014 committee also recommended that EPA develop templates for structured narrative justifications of the evidence-integration process and conclusions.

Approach to Evidence Integration in the 2022 Draft Assessment

EPA described evidence integration as a two-step process (Figure III of the preamble to the Main Assessment, p. xxxvii). The evidence synthesis step (step 6) differs from the first step of evidence integration (step 7) in one respect: in the latter, mechanistic evidence is considered in making human and animal study judgments. As mentioned above, Tables VI and VII in the preamble provide the criteria for classifying human and animal evidence into five categories, ranging from robust to compelling evidence of no effect. Table V gives examples of how to interpret mechanistic evidence, with columns for the mechanistic inferences considered and their potential specific applications within the assessment. The description of the approach for considering strength of evidence emphasizes having a set of studies evaluated as having high or medium confidence. It also considers the number of studies and the coherence of the research providing mechanistic evidence.

The second step of evidence integration brings together the strength of evidence for the human and animal streams and consideration of mechanistic evidence. The description of this step also mentions the coherence of the evidence streams and information on susceptible populations. This second step leads to an overall four-level classification of whether the evidence (1) demonstrates, (2) indicates, or (3) suggests that a toxicant, in this case inhaled formaldehyde, can cause adverse health effects in humans, or (4) is inadequate to support such a conclusion. Table VIII in the preamble provides an algorithmic approach to using the two evidence streams in tandem to perform the four-level classification. Cancer outcomes entail an additional step. That step uses the cancer descriptors from EPA’s Guidelines for Carcinogen Risk Assessment to translate the four categories into the five levels of those guidelines (Table IX of the preamble, pp. xlvii–xlviii).
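Because Table VIII is described as algorithmic, its general shape can be shown as a lookup from the two stream-level judgments to one of the four hazard conclusions. The mapping below is a hypothetical simplification and is not a transcription of Table VIII. It makes three assumptions:

- human evidence alone can support any of the four conclusions;
- animal evidence alone supports at most "indicates";
- the stronger implied conclusion wins.

It omits mechanistic evidence, coherence, susceptibility, and "compelling evidence of no effect," all of which the preamble brings into the judgment.

```python
from enum import IntEnum


class StreamJudgment(IntEnum):
    """Stream-level strength-of-evidence judgments (cf. Tables VI and VII), weakest first.

    "Compelling evidence of no effect" is deliberately not modeled here.
    """
    INDETERMINATE = 0
    SLIGHT = 1
    MODERATE = 2
    ROBUST = 3


class HazardConclusion(IntEnum):
    """Four-level hazard classification, weakest first."""
    INADEQUATE = 0
    SUGGESTS = 1
    INDICATES = 2
    DEMONSTRATES = 3


# Hypothetical mapping for illustration only; NOT a transcription of Table VIII.
HUMAN_IMPLIES = {
    StreamJudgment.ROBUST: HazardConclusion.DEMONSTRATES,
    StreamJudgment.MODERATE: HazardConclusion.INDICATES,
    StreamJudgment.SLIGHT: HazardConclusion.SUGGESTS,
    StreamJudgment.INDETERMINATE: HazardConclusion.INADEQUATE,
}
ANIMAL_IMPLIES = {
    StreamJudgment.ROBUST: HazardConclusion.INDICATES,
    StreamJudgment.MODERATE: HazardConclusion.SUGGESTS,
    StreamJudgment.SLIGHT: HazardConclusion.SUGGESTS,
    StreamJudgment.INDETERMINATE: HazardConclusion.INADEQUATE,
}


def integrate(human: StreamJudgment, animal: StreamJudgment) -> HazardConclusion:
    """Return the stronger of the conclusions implied by each stream on its own."""
    return max(HUMAN_IMPLIES[human], ANIMAL_IMPLIES[animal])
```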

For each outcome considered, the overall classification of the strength of evidence is supported by a table titled “Evidence Integration Summary for….” The table’s two columns are labeled “Evidence judgment” and “Hazard determination,” and its three rows are for human evidence, animal evidence, and “other inferences.” See, for example, Table 1-4 (p. 1-33), the evidence integration summary for sensory irritation. The “Evidence judgment” column gives the synthesis classification (e.g., robust), and the “Hazard determination” column gives the strength of evidence for the existence of a hazard (e.g., demonstrates). A brief narrative supports the table. Section 1.4, the summary and evaluation of the hazard evaluation, explores susceptibility and compiles the synthesis and hazard identification conclusions from all the evaluated outcomes.

The approach used in the 2022 Draft Assessment for determining the strength of evidence for the existence of a hazard draws on long-standing methods: summarizing evidence within different streams and applying long-used guidelines for evaluating evidence for a causal association. Transparent application of this approach involves carrying out the earlier steps of evidence identification and evaluation with a documented approach and synthesizing and integrating evidence with a specified and replicable protocol.

Finding: In general, the IRIS Program’s approach to evidence integration is appropriate and grounded in methods used by EPA and many other entities for reaching causal conclusions. Expert judgment is involved in this phase of the assessment, and the IRIS Program appears to draw on appropriate multidisciplinary groups to evaluate the evidence.

Finding: The committee identified aspects of the process for further consideration and reformulation. First, the IRIS Program’s schema blurs the distinction between evidence synthesis and integration, combining them into a single step. The summary tables for each health outcome exemplify this, because they include both “evidence judgment” and “hazard determination” (e.g., Table 1-4). A clear distinction between synthesis and assessment of the overall strength of evidence would be preferable conceptually and more consistent with the state of practice.

Recommendation 2.5 (Tier 2):

- The 2022 Draft Assessment should be edited to more sharply demarcate the synthesis and the integration of evidence discussions.

- EPA should expand the narrative descriptions of the evidence integration step, or should follow published methodology while providing detailed explanation of any adaptations.

Given the reliance on expert judgment for both the evidence synthesis and evidence integration aspects of hazard identification, expanded narrative descriptions would be useful for documenting the rationale for the classifications made. The tables are useful but terse, as is the accompanying text.

The committee notes that the terminology adopted for the four-level characterization of the strength of evidence for the existence of a hazard includes descriptors that are not inherently hierarchical. The terms used in the 2022 Draft Assessment (demonstrates, indicates, suggests, or is inadequate) are aligned with the IRIS Handbook. However, they are inconsistent with terms used elsewhere within EPA (e.g., in the Integrated Science Assessments) and outside of EPA (e.g., in the Surgeon General’s reports on smoking and health). The terms are also used inappropriately, in that they imply that it is the evidence itself that “demonstrates” or “indicates.” The use of these terms represents an unnecessary source of inconsistency with the state of practice.

Use of Mechanistic Evidence

Toxicological assessments have typically relied on evidence from human observational (epidemiological) studies and experimental animal studies. However, mechanistic data have been used for several decades to modify or strengthen conclusions based on human or animal studies. In 1982, for instance, the Preamble to the IARC Monographs introduced the possibility of “upgrading” overall classifications based on results from short-term genotoxicity assays. In 1991, an IARC expert group proposed principles and procedures for mechanistic “upgrades” and “downgrades” based on the extent of mechanistic understanding. Current evidence integration frameworks, including that of IARC (2019), include approaches for drawing hazard identification conclusions in the absence of evidence from human observational studies or experimental animal studies. Mechanistic evidence has increased in volume and complexity over the past several decades and is the predominant form of evidence for some agents. This shift in the mix of evidence available to support hazard conclusions has been enabled by advances in molecular biology, automation, and ultrasensitive analytical methods. These advances have produced a large influx of in vitro and computational studies that provide insights into mechanisms of toxic response. Indeed, the number of in vitro and computational studies is growing exponentially. That growth is supported by the many agency roadmaps for transitioning away from animal-based toxicology studies, including EPA’s own New Approach Methods (NAM) work plan (EPA, 2021). Yet despite recommendations from the National Academies that date back to 2007 (NRC, 2007, 2009; NASEM, 2017), there are few examples of the use of EPA’s NAM data to inform risk assessment decision making.
Recently, a committee of the National Academies urged EPA to create a framework for assessing and gaining trust in new approach methods. It recommended that these methods be transitioned from laboratory evaluation to inclusion in systematic-review-based risk assessments (NASEM, 2023).

EPA’s inclusion of mechanistic evidence as a separate evidence stream is appropriate, but EPA faced substantial challenges in considering this evidence, especially in the context of systematic review. First, generally accepted frameworks for systematically identifying, screening, and evaluating mechanistic data have only recently been advanced, tested, and formalized (Smith et al., 2016). Second, the available studies are voluminous and variable in quality. Risk-of-bias and study quality tools were developed and validated in intervention research and are being adapted and validated for observational and animal toxicological studies. As of this writing, however, their application to mechanistic evidence is still in its infancy. This presented a particular challenge in 2011, when work on the formaldehyde assessment started. EPA has pioneered the use of mechanistic evidence, and the committee evaluated the use of this evidence in the formaldehyde assessment with these challenges in mind.

In the 2022 Draft Assessment, EPA classifies mechanistic studies as in vitro studies or as modeling results and assesses them as a separate evidence stream (e.g., Section F.2.4, “Literature Inventory”). For the pre-2016 literature searches, the search approach was developed as outlined in the health effect–specific population, exposure, comparator, and outcome (PECO) criteria (Appendix A, Section A.5). For literature published from 2016 to 2021, EPA used systematic evidence map methodology to identify the most recent relevant publications that might alter conclusions about hazards or toxicity values. EPA termed these studies “impactful.” Studies identified in the systematic evidence map as possibly impactful were incorporated into the updated 2022 Draft Assessment. EPA stated that it used a literature search approach identical to that outlined in the health effect–specific PECOs for the earlier searches (Appendix A, Section A.5). The searches were conducted in the same databases, with the exception of ToxNet, which ceased to exist in 2019. For the 2022 Draft Assessment, EPA used a process that relied on information gathered from the literature inventory and on expert judgment by two reviewers. The term “impactfulness” was newly introduced in the 2022 Draft Assessment and defined as follows:

More apical endpoints and those most directly related to the mechanistic uncertainties identified in the 2017 draft as most relevant to drawing hazard or dose-response judgments were considered more impactful. The specifics of this consideration vary depending on the health outcome(s) of interest. In some cases, this relevance determination relates to the potential human relevance of the endpoints, while in others this relates to an ability to infer adversity.
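The PECO-based screening step described above can be sketched as a simple eligibility filter. The example is hypothetical. It assumes that reviewers have already tagged each record with one value per PECO element, and its example criteria are invented for illustration rather than taken from Appendix A. It does not model the systematic evidence map or the two-reviewer judgment of "impactfulness."

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Peco:
    """Eligibility criteria for one health effect: the accepted values for each PECO element."""
    populations: frozenset
    exposures: frozenset
    comparators: frozenset
    outcomes: frozenset


@dataclass(frozen=True)
class Record:
    """A citation that a reviewer has tagged with one value per PECO element."""
    citation: str
    population: str
    exposure: str
    comparator: str
    outcome: str


def screen(records, peco: Peco):
    """Split records into those that meet every PECO element and those that do not."""
    included, excluded = [], []
    for r in records:
        meets = (r.population in peco.populations
                 and r.exposure in peco.exposures
                 and r.comparator in peco.comparators
                 and r.outcome in peco.outcomes)
        (included if meets else excluded).append(r)
    return included, excluded
```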

Finding: Overall, the committee found that EPA has been thorough and transparent in identifying the scientific literature with regard to mechanistic evidence. However, the definition of “impactfulness” and how this concept was applied are not well described. Similarly, the term “other inferences” is used in the sections on integration of cancer evidence, but the term is not explained.

Recommendation 2.6 (Tier 2): To increase the transparency of the evaluation of mechanistic data, EPA should clarify key terms (e.g., “impactfulness,” “other inferences”) and their application to specific studies. “Impactfulness” can be defined (in Table F-12 and elsewhere), and “other inferences” can be explained in discussing the approach to evidence integration in the “Preface on Assessment Methods and Organization.”

Finding on Evidence Integration

Finding: Evidence integration is the critical last step in hazard identification, and transparency is essential, requiring a sufficiently comprehensive narrative documenting the decision-making process. In completing its determination of hazard identification in the 2022 Draft Assessment, EPA drew on long-standing approaches for inferring causation, including the Bradford Hill considerations, first enumerated in a 1965 publication (Hill, 1965). Nonetheless, the 2022 Draft Assessment deviates from those long-established approaches in several respects, including (1) blurring of the boundary between evidence synthesis and integration, and (2) the choice of terminology used to describe the strata in the four-level schema for classifying the strength of evidence. Additionally, in some instances the narratives concerning the evidence integration step are too terse to explain fully why EPA came to its confidence conclusions.

DOSE-RESPONSE ASSESSMENT (STEP 8)

The final step in EPA’s process is the selection of studies for derivation of RfCs and cancer unit risk values. This component of EPA’s process is depicted in Figure 2-8.

State of Practice

Dose-response analysis and derivation of RfCs are typically conducted using high-quality studies in which the exposure is associated with the outcome. An RfC is an estimate of the amount of a substance in the air that a person can inhale daily over a lifetime without experiencing an appreciable risk of adverse health effects. Study selection is critical for RfC estimation, and the rationale for selection should be described clearly within the assessment. Modeling can then be used to estimate an effective dose (ED) for cancer effects or a benchmark dose (BMD) for noncancer effects. If the effects are nonmutagenic, a BMD approach can be applied for both cancer and noncancer effects (EPA, 2005a). When modeling is not possible because of a lack of data, a no observed adverse effect level (NOAEL) or a lowest observed adverse effect level (LOAEL) is used. Next, an RfC or a unit risk for cancer effects is calculated. An RfC is often derived by applying uncertainty factors to the BMD, the benchmark dose lower bound (BMDL), the NOAEL, or the LOAEL. A unit risk is typically derived by linear extrapolation from the point of departure. While the NOAEL–LOAEL approach is still used, the BMD approach is preferred because it uses dose-response information more fully and reduces uncertainty (NRC, 2014).
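The quantities described above can be related in a short Python sketch, which rests on several assumptions:

- a logistic model with already-fitted parameters;
- an extra-risk benchmark response (BMR) of 10%;
- hypothetical uncertainty factors.

EPA's practice is to fit several candidate models and use the lower confidence bound (BMDL). Computing that bound requires a likelihood-based interval, as produced, for example, by EPA's Benchmark Dose Software, so the sketch does not compute it. The unit-risk function shows linear extrapolation from the point of departure to the origin. All function names are hypothetical.

```python
import math


def bmd_logistic(a: float, b: float, bmr: float = 0.10) -> float:
    """Dose giving extra risk `bmr` under P(d) = 1 / (1 + exp(-(a + b*d))).

    Extra risk is (P(d) - P(0)) / (1 - P(0)); solving for d inverts the logit.
    This is the central estimate (BMD), not the lower bound (BMDL).
    """
    if b <= 0:
        raise ValueError("slope b must be positive (response increases with dose)")
    if not 0.0 < bmr < 1.0:
        raise ValueError("bmr must lie strictly between 0 and 1")
    p0 = 1.0 / (1.0 + math.exp(-a))      # background response at zero dose
    target = p0 + bmr * (1.0 - p0)       # response at which extra risk equals bmr
    return (math.log(target / (1.0 - target)) - a) / b


def rfc(point_of_departure: float, uncertainty_factors: dict) -> float:
    """Divide a point of departure (e.g., a BMDL or NOAEL) by the composite uncertainty factor."""
    return point_of_departure / math.prod(uncertainty_factors.values())


def unit_risk(bmr: float, pod_lower_bound: float) -> float:
    """Slope of a straight line from the point of departure (at risk `bmr`) to the origin."""
    return bmr / pod_lower_bound
```

With a = -2 and b = 0.5, for example, the BMD at 10% extra risk is about 1.3 dose units. Dividing a point of departure of 30 by a composite uncertainty factor of 30 gives 1.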

Approach to Dose-Response Assessment in the 2022 Draft Assessment

EPA used its causal judgments to determine when to complete a dose-response assessment. For each noncancer outcome for which EPA judged that the “evidence demonstrates” or “evidence indicates,” a dose-response assessment was conducted. Similarly, for each cancer outcome for which the weight of evidence led to a determination of “carcinogenic” or “likely to be carcinogenic,” a dose-response assessment was carried out. A study was then selected for dose-response and RfC derivation. The approach to dose-response analysis differed for noncancer and cancer outcomes, according to methods that are fully described in EPA guidance documents.
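The decision rule in the preceding paragraph can be written as a small function. The cancer strings assume the full descriptor wording of EPA's 2005 Guidelines for Carcinogen Risk Assessment ("Carcinogenic to Humans," "Likely to Be Carcinogenic to Humans"), which the text abbreviates. The function and constant names are hypothetical.

```python
NONCANCER_TRIGGERS = {"evidence demonstrates", "evidence indicates"}
CANCER_TRIGGERS = {"carcinogenic to humans", "likely to be carcinogenic to humans"}


def needs_dose_response(outcome_type: str, conclusion: str) -> bool:
    """Return True when a hazard conclusion triggers a dose-response assessment."""
    conclusion = conclusion.strip().lower()  # compare descriptors case-insensitively
    if outcome_type == "noncancer":
        return conclusion in NONCANCER_TRIGGERS
    if outcome_type == "cancer":
        return conclusion in CANCER_TRIGGERS
    raise ValueError(f"unknown outcome type: {outcome_type!r}")
```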

EPA presents the considerations for study inclusion for dose-response assessment in the preface of the Main Assessment, Table X. The categories are overall confidence conclusion, study confidence, population, and exposure information. Each category includes one or more considerations important for study selection. Additional considerations for study selection are described in the preface and in Section 2.1. These considerations include the accuracy of the formaldehyde exposure assessment, the severity of the observed effects, and the exposure levels analyzed, as well as a requirement that the study be of medium or high quality. The committee conducted a case study to determine whether EPA's choice of studies for deriving the RfC affected the result. The case study compared RfCs derived from Hanrahan et al. (1984) and Liu et al. (1991) (Box 2-5).
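One way to document how inclusion considerations apply to each candidate study is to record each criterion's result separately rather than only a final yes or no. The sketch below does this for three of the considerations named above: study confidence, exposure information, and population. The field names are hypothetical, and the judgments themselves remain reviewer inputs rather than anything the code decides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyAppraisal:
    """Reviewer judgments for one candidate study (hypothetical fields)."""
    citation: str
    confidence: str              # overall study confidence: "high", "medium", or "low"
    quantitative_exposure: bool  # exposure information adequate for dose-response modeling
    relevant_population: bool    # population judged relevant to the outcome


def criteria_results(s: StudyAppraisal) -> dict:
    """Return the result of each consideration separately, keyed by a readable label."""
    return {
        "medium or high confidence": s.confidence.lower() in {"high", "medium"},
        "quantitative exposure information": s.quantitative_exposure,
        "relevant population": s.relevant_population,
    }


def eligible(s: StudyAppraisal) -> bool:
    """A study is eligible only if it satisfies every recorded consideration."""
    return all(criteria_results(s).values())
```

Keeping per-criterion results shows which consideration excluded a study, not merely that it was excluded.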

Finding: The committee found EPA’s considerations for study inclusion to be reasonable, although the discussion of those considerations in multiple places in the documents made it more difficult to determine what the considerations were and how they were applied.

Finding: Although EPA provides criteria for study inclusion in the dose-response assessment, it does not discuss how these criteria were applied to the specific studies chosen for dose-response analysis. Using the Hanrahan et al. (1984) study as a test case, the committee identified inconsistencies between the characteristics of the study and EPA's criteria for studies that would be selected for dose-response analysis.

Recommendation 2.7 (Tier 2): EPA should consider using information from studies that are complementary to each other to derive benchmark concentrations for outcomes of interest (see also Appendix D). For example, multiple studies can be complementary by widening the exposure scale, broadening the age groups, and including vulnerable or susceptible groups.

Recommendation 2.8 (Tier 2): Given that EPA has requested additional information from some study authors, the authors of the Liu et al. (1991) study could be approached for additional information that would help EPA reconstruct an overall dose-response graph (see also Appendix D).

REFERENCES

Cochrane Collaboration. 2011. Cochrane handbook for systematic reviews of interventions version 5.1.0, edited by J. P. T. Higgins and S. Green. London, United Kingdom.

Conolly, R. B., J. S. Kimbell, D. Janszen, P. M. Schlosser, D. Kalisak, J. Preston, and F. J. Miller. 2004. Human respiratory tract cancer risks of inhaled formaldehyde: Dose-response predictions derived from biologically-motivated computational modeling of a combined rodent and human dataset. Toxicological Sciences 82(1):279–296.

Descatha, A., G. Sembajwe, M. Baer, F. Boccuni, C. Di Tecco, C. Duret, B. A. Evanoff, D. Gagliardi, I. Ivanov, N. Leppink, A. Marinaccio, L. L. M. Hanson, A. Ozguler, F. Pega, J. Pell, F. Pico, A. Prüss-Ustün, M. Ronchetti, Y. Roquelaure, E. Sabbath, G. A. Stevens, A. Tsutsumi, Y. Ujita, and S. Iavicoli. 2018. WHO/ILO work-related burden of disease and injury: Protocol for systematic reviews of exposure to long working hours and of the effect of exposure to long working hours on stroke. Environment International 119:366–378.

EPA (Environmental Protection Agency). 2005a. Guidelines for carcinogen risk assessment. Washington, DC: Risk Assessment Forum.

EPA. 2015. Preamble to the integrated science assessments (ISA). Washington, DC: U.S. Environmental Protection Agency. EPA/600/R-15/067.

EPA. 2018. Systematic review protocol for the IRIS Chloroform Assessment (Inhalation) (Preliminary assessment materials). Washington, DC. https://cfpub.epa.gov/ncea/iris_drafts/recordisplay.cfm?deid=338653 (accessed July 12, 2023).

EPA. 2021. New approach methods work plan. Washington, DC. https://www.epa.gov/system/files/documents/2021-11/nams-work-plan_11_15_21_508-tagged.pdf (accessed July 12, 2023).

EPA. 2022a. IRIS Toxicological Review of Formaldehyde-Inhalation, External Review Draft. Washington, DC. https://iris.epa.gov/Document/&deid=248150 (accessed September 18, 2023).

EPA. 2022b. ORD staff handbook for developing IRIS assessments. Washington, DC: EPA Office of Research and Development. https://cfpub.epa.gov/ncea/iris_drafts/recordisplay.cfm?deid=356370 (accessed July 12, 2023).

EPA. 2023. EPA Responses to NASEM panel questions for the January 30, 2023 public meeting. Washington, DC. https://www.nationalacademies.org/documents/embed/link/LF2255DA3DD1C41C0A42D3BEF0989ACAECE3053A6A9B/file/D3A22C29743668E583CD8759F633481333EE3E7ECF54?noSaveAs=1 (accessed July 12, 2023).

Frampton, G. K., P. Whaley, M. G. Bennett, G. Bilotta, J. Dorne, J. Eales, K. L. James, C. Kohl, M. Land, B. Livoreil, D. Makowski, E. Muchiri, G. Petrokofsky, N. Randall, and K. A. Schofield. 2022. Principles and framework for assessing the risk of bias for studies included in comparative quantitative environmental systematic reviews. Environmental Evidence 11(1).

Glass, T. A., S. N. Goodman, M. A. Hernán, and J. M. Samet. 2013. Causal inference in public health. Annual Review of Public Health 34(1):61–75.

Guyatt, G. H., A. D. Oxman, R. Kunz, G. E. Vist, Y. Falck-Ytter, and H. J. Schünemann. 2008a. What is “quality of evidence” and why is it important to clinicians? BMJ 336(7651):995–998.

Guyatt, G. H., A. D. Oxman, G. E. Vist, R. Kunz, Y. Falck-Ytter, P. Alonso-Coello, and H. J. Schünemann. 2008b. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ 336(7650):924–926.

Guyatt, G. H., A. D. Oxman, H. J. Schünemann, P. Tugwell, and J. A. Knottnerus. 2011. GRADE guidelines: A new series of articles in the Journal of Clinical Epidemiology. Journal of Clinical Epidemiology 64(4):380–382.

Hanrahan, L. P., K. Dally, H. R. Anderson, M. S. Kanarek, and J. S. Rankin. 1984. Formaldehyde vapor in mobile homes: A cross sectional survey of concentrations and irritant effects. American Journal of Public Health 74(9):1026–1027.

Higgins, J. P. T., J. López-López, B. J. Becker, S. W. Davies, S. Dawson, J. M. Grimshaw, A. M. Price-Whelan, T. H. Moore, E. Rehfuess, J. D. Thomas, and D. M. Caldwell. 2019. Synthesising quantitative evidence in systematic reviews of complex health interventions. BMJ Global Health 4(Suppl 1):e000858.

Hill, A. B. 1965. The environment and disease: Association or causation? Proceedings of the Royal Society of Medicine 58(5):295–300.

Hooijmans, C. R., M. M. Rovers, R. B. M. De Vries, M. Leenaars, M. Ritskes-Hoitinga, and M. W. Langendam. 2014. SYRCLE’s risk of bias tool for animal studies. BMC Medical Research Methodology 14(1):43.

IARC (International Agency for Research on Cancer). 2019. Preamble. IARC monographs on the identification of carcinogenic hazards to humans. Lyon, France: World Health Organization.

IOM (Institute of Medicine). 2011. Finding what works in health care: Standards for systematic reviews. Washington, DC: The National Academies Press. https://www.ncbi.nlm.nih.gov/pubmed/24983062 (accessed July 12, 2023).

Johnson, P. A., E. Koustas, H. M. Vesterinen, P. Sutton, D. S. Atchley, A. N. Kim, M. A. Campbell, J. Donald, S. Sen, L. Bero, L. Zeise, and T. J. Woodruff. 2016. Application of the Navigation Guide systematic review methodology to the evidence for developmental and reproductive toxicity of triclosan. Environment International 92–93:716–728.

Liu, K. S., F. Y. Huang, S. B. Hayward, J. Wesolowski, and K. Sexton. 1991. Irritant effects of formaldehyde exposure in mobile homes. Environmental Health Perspectives 94:91–94.

Morgan, A. J., N. J. Reavley, A. M. Ross, L. S. Too, and A. F. Jorm. 2018. Interventions to reduce stigma towards people with severe mental illness: Systematic review and meta-analysis. Journal of Psychiatric Research 103:120–133.

Morgan, R. L., K. A. Thayer, L. Bero, N. Bruce, Y. Falck-Ytter, D. Ghersi, G. Guyatt, C. Hooijmans, M. Langendam, D. Mandrioli, R. A. Mustafa, E. A. Rehfuess, A. A. Rooney, B. Shea, E. K. Silbergeld, P. Sutton, M. S. Wolfe, T. J. Woodruff, J. H. Verbeek, A. C. Holloway, N. Santesso, and H. J. Schünemann. 2016. GRADE: Assessing the quality of evidence in environmental and occupational health. Environment International 92–93:611–616. https://doi.org/10.1016/j.envint.2016.01.004.

Morgan, R. L., B. Beverly, D. Ghersi, H. J. Schünemann, A. A. Rooney, P. Whaley, Y. Zhu, and K. A. Thayer. 2019. GRADE guidelines for environmental and occupational health: A new series of articles in Environment International. Environment International 128:11–12.

Mustafa, R. A., N. Santesso, J. Brozek, E. A. Akl, S. D. Walter, G. Norman, K. Kulasegaram, R. Christensen, G. H. Guyatt, Y. Falck-Ytter, S. Chang, M. H. Murad, G. E. Vist, T. Lasserson, G. Gartlehner, V. Shukla, X. Sun, C. Whittington, P. N. Post, E. Lang, K. Thaler, I. Kunnamo, H. Alenius, J. J. Meerpohl, A. C. Alba, I. F. Nevis, S. Gentles, M. C. Ethier, A. Carrasco-Labra, R. Khatib, G. Nesrallah, J. Kroft, A. Selk, R. Brignardello-Petersen, and H. J. Schünemann. 2013. The GRADE approach is reproducible in assessing the quality of evidence of quantitative evidence syntheses. Journal of Clinical Epidemiology 66(7):736–742.e5. https://doi.org/10.1016/j.jclinepi.2013.02.004.

NASEM (National Academies of Sciences, Engineering, and Medicine). 2017. Application of systematic review methods in an overall strategy for evaluating low-dose toxicity from endocrine active chemicals. Washington, DC: The National Academies Press.

NASEM. 2018. Progress toward transforming the Integrated Risk Information System (IRIS) program. Washington, DC: The National Academies Press.

NASEM. 2022. Review of U.S. EPA’s ORD staff handbook for developing IRIS Assessments: 2020 Version. Washington, DC: The National Academies Press.

NASEM. 2023. Building confidence in new evidence streams for human health risk assessment: Lessons learned from laboratory mammalian toxicity tests. Washington, DC: The National Academies Press. https://doi.org/10.17226/26906.

NRC (National Research Council). 2007. Toxicity testing in the 21st century: A vision and a strategy. Washington, DC: The National Academies Press. https://doi.org/10.17226/11970.

NRC. 2009. Science and decisions: Advancing risk assessment. Washington, DC: The National Academies Press.

NRC. 2011. Review of the Environmental Protection Agency’s draft IRIS assessment of formaldehyde. Washington, DC: The National Academies Press.

NRC. 2014. Review of EPA’s Integrated Risk Information System (IRIS) process. Washington, DC: The National Academies Press.

NTP (National Toxicology Program). 2015. OHAT risk of bias rating tool for human and animal studies. Washington, DC: Department of Health and Human Services, Public Health Service.

NTP. 2019. Handbook for conducting a literature-based health assessment using OHAT approach for systematic review and evidence integration. Washington, DC: Department of Health and Human Services, Public Health Service.

NTP. 2023. Cancer evaluation criteria. Research Triangle Park, NC: U.S. Department of Health and Human Services, Public Health Service. https://ntp.niehs.nih.gov/whatwestudy/testpgm/cartox/criteria.

Samet, J. M., W. A. Chiu, V. Cogliano, J. Jinot, D. Kriebel, R. M. Lunn, F. A. Beland, L. Bero, P. Browne, L. Fritschi, J. Kanno, D. W. Lachenmeier, Q. Lan, G. Lasfargues, F. L. Curieux, S. Peters, P. Shubat, H. Sone, M. A. White, J. Williamson, M. Yakubovskaya, J. Siemiatycki, P. A. White, K. Z. Guyton, M. K. Schubauer-Berigan, A. L. Hall, Y. Grosse, V. Bouvard, L. Benbrahim-Tallaa, F. El Ghissassi, B. Lauby-Secretan, B. Armstrong, R. Saracci, J. Zavadil, K. Straif, and C. P. Wild. 2020. The IARC monographs: Updated procedures for modern and transparent evidence synthesis in cancer hazard identification. Journal of the National Cancer Institute 112(1):30–37.

Schaefer, H. R. and J. L. Myers. 2017. Guidelines for performing systematic reviews in the development of toxicity factors. Regulatory Toxicology and Pharmacology 91:124–141.

Schünemann, H. J., C. Cuello, E. A. Akl, R. A. Mustafa, J. J. Meerpohl, K. Thayer, R. L. Morgan, G. Gartlehner, R. Kunz, S. V. Katikireddi, J. A. C. Sterne, J. P. T. Higgins, and G. H. Guyatt. 2019. GRADE guidelines: 18. How ROBINS-I and other tools to assess risk of bias in nonrandomized studies should be used to rate the certainty of a body of evidence. Journal of Clinical Epidemiology 111:105–114.

Smith, M. T., K. Z. Guyton, C. F. Gibbons, J. M. Fritz, C. J. Portier, I. Rusyn, D. M. DeMarini, J. C. Caldwell, R. J. Kavlock, P. F. Lambert, S. S. Hecht, J. R. Bucher, B. W. Stewart, R. A. Baan, V. J. Cogliano, and K. Straif. 2016. Key characteristics of carcinogens as a basis for organizing data on mechanisms of carcinogenesis. Environmental Health Perspectives 124(6):713–721.

Sterne, J. A. C., M. A. Hernán, B. C. Reeves, J. Savović, N. D. Berkman, M. Viswanathan, D. Henry, D. G. Altman, M. T. Ansari, I. Boutron, J. R. Carpenter, A. Chan, R. Churchill, J. J. Deeks, A. Hróbjartsson, J. J. Kirkham, P. Jüni, Y. K. Loke, T. D. Pigott, C. R. Ramsay, D. Regidor, H. R. Rothstein, L. Sandhu, P. L. Santaguida, H. J. Schünemann, B. Shea, I. Shrier, P. Tugwell, L. Turner, J. C. Valentine, H. Waddington, E. Waters, G. A. Wells, P. F. Whiting, and J. P. T. Higgins. 2016. ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ 355:i4919.

Surgeon General’s Advisory Committee on Smoking and Health. 1964. Smoking and health: Report of the Advisory Committee to the Surgeon General of the Public Health Service. Washington, DC: Department of Health, Education, and Welfare, Public Health Service. http://purl.access.gpo.gov/GPO/LPS107851 (accessed July 12, 2023).

Warren, G. W., A. J. Alberg, A. S. Kraft, and K. M. Cummings. 2014. The 2014 Surgeon General’s report: The health consequences of smoking—50 years of progress: A paradigm shift in cancer care. Cancer 120(13):1914–1916.

Woodruff, T. J., and P. Sutton. 2014. The Navigation Guide systematic review methodology: a rigorous and transparent method for translating environmental health science into better health outcomes. Environmental Health Perspectives 122(10):1007–1014.