7

Integrating the Social and Behavioral Sciences (SBS) into the Design of a Human–Machine Ecosystem

With the next generation of artificial intelligence (AI), technologies and tools used to help filter and analyze data would not only be inserted into the current work of the intelligence analyst as they are now but also would transform the very way intelligence analysis is conducted. As a recent report on AI notes, “the field of AI [research] is shifting toward building intelligent systems that can collaborate effectively with people, and that are more generally human-aware,1 including creative ways to develop interactive and scalable ways for people to teach robots” (2015 Study Panel of the One Hundred Year Study of Artificial Intelligence, 2016, p. 9). Future technology could support the design of a human–machine ecosystem for intelligence analysis: an ecosystem composed of human analysts and autonomous AI agents, supported by other technologies that could work in true collaboration. This ecosystem could transform intelligence analysis by

- proactively addressing core analytic challenges more comprehensively than humans alone could, by, for example, systematically monitoring large volumes of data and mining large archives of potentially relevant background material;

- reaching across controlled-access networks within the Intelligence Community (IC) efficiently and securely; and

- identifying patterns and associations in data more rapidly than

___________________

1 Systems that are human-aware “specifically model, and are specifically designed for, the characteristics of the people with whom they are meant to interact” (p. 17).

humans alone could do, and in real time, uncovering connections that previously would not have been detectable.

The design and implementation of a successful human–machine ecosystem will depend on research from the SBS. Given the increasing sophistication of AI applications and the many possible modes of human–technology partnering, there are many unanswered questions about how best to integrate humans with AI agents in analytic tasks. Existing SBS research will be relevant, but the complexity of a human–machine ecosystem will pose new kinds of challenges for the human analyst, challenges that will require new research if the IC is to take advantage of this fundamental technological opportunity.

This chapter explores key questions about human–machine interactions that need to be addressed if the development of new AI collaborators is to produce trusted teammates, not simply assistive devices. The committee’s objective was not to propose a plan for developing a human–machine ecosystem, but to describe the SBS research the IC will need if it is to create, use, and maintain one. We begin with an overview of what would be different if such an ecosystem were developed for the IC: a look at the nature of the agents and technologies that would orchestrate the work and at how intelligence analysis would be transformed. We then turn to what is needed to exploit this opportunity. We examine primary insights from SBS fields that can guide designers, engineers, and computer scientists, at all stages from design to implementation, in creating technologies that will interact optimally with human analysts and support high-functioning systems of human and machines.

We also consider what new research will be needed to bring this opportunity to fruition. For simplicity, the discussion is divided into four domains: (1) human capacities, (2) human–machine interaction, (3) human–technology teaming, and (4) human–system integration. Although this list appears to suggest a hierarchy of work from studies of human capacity to system integration, an optimal research program will require the synergistic interplay of research in each of these domains as knowledge accumulates. Continuous research in each domain will form components of a larger research program that supports the development of an operational human–machine ecosystem to support intelligence analysis.

We note that the set of research topics that could potentially advance the development of a human–machine ecosystem is vast. We identified many other lines of inquiry that might be pursued, and this chapter offers a foundation for what would likely need to be an ongoing program of research. The chapter ends with conclusions about how the IC might move forward to pursue this opportunity, including ideas for planning and conducting the research that is needed, as well as key ethical considerations.

A NEW FORM OF ANALYTIC WORK

The development of a human–machine ecosystem for intelligence analysis would not alter the essential sensemaking challenge described in Chapter 4, but the nature of the activities an analyst might carry out in collaboration with AI agents would be very different. The primary benefits of such a system would lie in its capacity to marry capabilities that are uniquely human—those that presumably no machine could ever replace—with the computing power that outperforms human capacity for some tasks. By doing so, the human–machine ecosystem could, for example, filter and analyze vast quantities of data at an exponentially faster rate than would be possible for any team of humans; reveal questions, connections, and patterns that humans would likely or certainly miss; process a range of inputs—from text in multiple languages to geospatial data—that would require diverse expertise far beyond what a team of individual humans could offer; and tirelessly perform certain functions 24 hours a day.2

Many of the potential benefits relate to the availability of vast and constantly growing quantities and types of data. As discussed in Chapter 5, there are many computational approaches with potential application to issues of interest to the intelligence analyst, all of which require considerable computing power. Some types of data will need to be collected and integrated over long periods, while other streams of information will need to be monitored continuously for new data of value. Automated data collection, monitoring, and analysis supported by new AI techniques would offer means of exploiting large datasets. However, not all analytic work can be automated or turned over to AI. The human analyst will still play a critical role in information processing and decision making, exercising the complex capacity to make judgments in the face of the high levels of uncertainty and risk associated with intelligence analysis.

The key characteristic of a human–machine ecosystem would be the integration of contributions of multiple agents and technologies. There are many ways to describe such agents and technologies, but we distinguish here between those that have agency (humans and autonomous systems or AI) and those that simply provide services or information (e.g., cameras, sensing devices, algorithms for automatic data collection or interpretation). A simple depiction of a human–machine ecosystem in Figure 7-1 shows that people are an integral part of operations. The figure portrays three analytic teams that correspond to the three analytic lenses depicted in Figure 4-1 in Chapter 4. These teams, as well as individual agents, are working in collaboration with and connected to other teams through AI systems.

___________________

2 Several of the white papers received by the committee (see Chapter 1) provided valuable suggestions about what improved human–machine interactions might offer (see Dien et al., 2017; Phillips et al., 2017; Sagan and McCormick, 2017).

NOTE: This figure is a simple illustration of a human–machine ecosystem in which analysts work in collaboration with and are connected through AI systems. It shows three teams from the hypothetical illustration of analytic work in Chapter 4. The figure also reflects the fact that analysts will be able to work individually while remaining connected to the ecosystem. A variety of sensors (shown surrounding the ecosystem) provide information to the human and AI agents for a number of different purposes, from monitoring and analyzing data pertinent to intelligence analysis to collecting and processing data from interactions between analysts and AI to improve performance.

An agent has been defined as “anything that can be viewed as perceiving its environment through sensors and acting upon that environment through effectors” (Russell and Norvig, 2010, p. 34). Human agents are capable of perceiving through their senses; thinking with their brains; and acting with their hands, mouths, and other parts of the body. Machines that are designed as intelligent agents are able to perceive their environment through sensors (e.g., cameras, infrared rangefinders) and use actuators, motors, and embedded algorithms to make decisions and perform actions. Many intelligent machines (e.g., vacuums, robots for assembly lines, game systems) have been developed to accomplish finite, rule-based tasks.

Most machines perform automated functions, which means they are designed to complete a task or set of tasks in a predictable fashion and with predictable outcomes, usually with a human operator performing any tasks necessary before or after the automated sequence. A machine has autonomy when it can use knowledge it has accumulated from experience, together with sensory input and its built-in knowledge, to perform an action; that is, it has the flexibility to identify a course of action in response to a unique circumstance (National Research Council, 2014; Russell and Norvig, 2010). In a human–machine ecosystem, some machines would need to have that capability. Autonomous systems suitable for intelligence analysis may not be available now, but they are coming. We use the term “semiautonomous agents” for such machines to stress the essential involvement of humans in critical decisions.

These semiautonomous agents would receive inputs from their environment through sensors and supporting technologies, as well as from other agents; select action(s) in pursuit of goals; and then influence their environment either by passing information along or by engaging in physical actions. For example, a useful semiautonomous agent might be a robot capable of physically moving material from one place to another, or an agent capable of alerting an analyst of an event requiring attention through vibrations on a device such as a smartphone. Ideally, these agents would learn from feedback and from their own experiences so they could adapt future actions to improve performance within the ecosystem. There is a long history of research and AI development improving the capability of machines to learn (McCorduck, 2004).

Semiautonomous machines could work with human agents, augmenting working memory, for example, or indicating potentially useful information during an analytic task. They could also work independently, performing complex monitoring functions or data analysis that were beyond human capabilities, or taking on tasks normally performed by humans when the workload became excessive. Either way, they would need to be able to interact regularly with other semiautonomous and human agents, as well as other types of machines.

Other machines in the ecosystem would not have the capacity for adaptive operations; they would be designed to provide necessary and predictable information or services, automatically or on request, to support the work of human and semiautonomous agents. We refer to these types of machines either as sensors (devices useful for monitoring the state of the human and semiautonomous agents, as well as the environment within the ecosystem) or as tools or supporting technologies (devices that will be helpful in acquiring or processing data or executing analyses across many forms of information3).

The IC already uses many kinds of tools to track a broad range of security threats. This capacity will only expand as it becomes possible to implement more and improved automated analyses or data processing tools to monitor broad areas of interest. Semiautonomous AI agents could foreseeably be of particular benefit in meeting the challenge of identifying significant intersections in a vast range of data and analytic output and, as discussed in Chapter 5, their connections to sociopolitical developments and emerging threats. They would require the capacity to integrate critical information—such as data and findings culled from images, communications, environmental measurements, and other collected intelligence—into the workflow of human agents to help them uncover significant connections. (Box 7-1 illustrates the possible effect on information available to analysts.)

In a human–machine ecosystem, analysts would work collaboratively with these semiautonomous AI agents to conduct the analytic activities of sensemaking. Table 7-1 illustrates some of the specific ways in which analytic activities carried out by a human–machine ecosystem would differ from those carried out in the traditional manner.

RESEARCH DOMAINS

If AI technology becomes powerful and autonomous enough to support an ecosystem for intelligence analysis, that system will be useful only to the extent that human analysts benefit from and are able to take advantage of the assistance it offers. SBS research is essential to ensuring that developers of such an ecosystem for the IC understand the strengths and limitations of human agents. Numerous disciplines—such as cognitive science, communications, human factors, human–systems integration, neuroscience, and psychology—contribute to this understanding of human characteristics and their interactions with machines. Most of the questions of interest

___________________

3 Information of relevance to intelligence analysis includes varied types of data, such as those from satellite surveillance and open-source communications and the tracking of critical supply chains, environmental measurements, and indicators of disease contagion.

TABLE 7-1 Comparison of Analytic Activities Conducted Traditionally and in a Human–Machine Ecosystem

| Analytic Activity of an Individual Analyst | Traditional Human Process | Process in a Human–Machine Ecosystem (HME) |

|---|---|---|

| Maintain Inventory of Important Questions |

|

|

| Stay Abreast of Current Information |

|

|

| Analyze Assembled Information |

|

|

| Communicate Intelligence and Analysis to Others |

|

|

| Analytic Activity of an Individual Analyst | Traditional Human Process | Process in a Human–Machine Ecosystem (HME) |

|---|---|---|

| Sustain and Build Expertise in Analytic Area (when time permits) |

|

|

NOTE: Descriptions of analysts’ activities are based on discussion in Chapter 4.

will require interdisciplinary work that brings researchers together both from across the SBS disciplines and with those in AI fields and computer science. The discussion here divides the needed research into four domains: (1) human capacities, (2) human–machine interaction, (3) human–technology teaming, and (4) human–system integration. Although it is not possible to discuss all the relevant research questions comprehensively within the scope of this study, we offer in each domain some of the critical areas that should be investigated. As noted above, the optimal research program will require the synergistic interplay of research in each of these domains as knowledge accumulates.

Human Capacities

While human agents bring sophisticated and contextualized reasoning to the process of analysis and inference, they are also limited in significant ways in their ability to process information. Understanding those limits, particularly in the context of intelligence analysis, is an important step in designing the technologies to augment them. The limitations to humans’ capacity for perception, attention, and cognition are at the root of many errors, both in everyday life and in expert settings such as medicine (Krupinski, 1996; Nodine and Kundel, 1987; Waite et al., 2016), and there is every reason to believe that the same is true for intelligence analysis. While existing research provides insights into these limitations, the complexity of an environment of human–machine teams for intelligence analysis would require more detailed understanding, derived from many research areas, of how these limitations should be factored into a system’s design. In this section, we illustrate the possibilities with a discussion of two such areas: we explore findings from vision sciences that shed light on human limits in attention and memory, and review the literature on workload to consider what is known about how individuals manage interruptions and multiple tasks.

Fundamental Capacity Limits

Humans have finite capabilities. At a perceptual level, many of these limits are fairly self-evident, and centuries of research and development have been devoted to extending human capacity. For example, human acuity is limited to resolving details of about 1 minute of arc (a unit of measure for angles), so microscopes and telescopes were invented to bring small or distant objects into view. Other devices (e.g., infrared night-vision glasses) were created to detect and render visible electromagnetic radiation outside the range of wavelengths of 400–700 nanometers, which humans cannot see. Microphones and amplifiers allow humans to detect otherwise

inaudible sounds, while other devices extend their chemical senses of taste and smell. And the seismograph that detects a remote earthquake or underground nuclear blast can be thought of as an enhancement of humans’ sense of touch.

Attention limits are less fully understood than perceptual limits, and accordingly, less progress has been made in developing the technologies to support them. In fact, research has shown that humans are imperfectly aware of their own attention limits. Many people can recognize that they will fail to see something if it is too small to resolve or if the lights are out, but it may be less obvious to them that they can fail to notice a fairly dramatic change between two visible instances of the same thing (e.g., missing that someone previously had a beard [Simons and Levin, 1998] or failing to perceive an object right in front of them [Cohen et al., 2016; Simons and Chabris, 1999]).

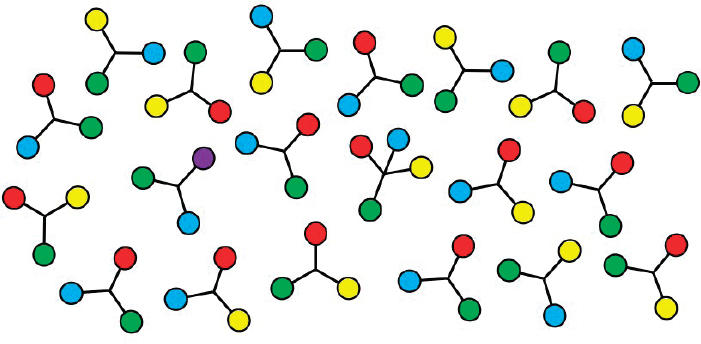

Figure 7-2 can be used to illustrate the complex relationship between attention and what is seen. In this figure, all the colors and lines seemingly can be seen at a glance, yet the presence of particular structures, such as one with four disks instead of three, or one with a blue, a yellow, and a red disk, is not immediately apparent. What makes attentional limits less intuitive than perceptual limits is that some version of “everything” can be seen clearly in this image. In this figure, the presence of a single purple

NOTE: This figure illustrates that some visual search tasks are easier than others. Finding the purple target is relatively easy because there is only one, and it is distinct. Finding a three-disk structure that includes blue, yellow, and red disks requires more effort, even though it is easy to confirm the correct colors once one has identified the right item. When the target is defined by a combination of colors, attentional scrutiny is required.

SOURCE: Redrawn from Wolfe (2003).

disk is immediately obvious because there is only one (although even in this case, one might not notice the purple disk until it became relevant). What is not obvious is that one needs to direct attention to a specific question to be sure of its specific features (its colors or orientation)—that is, attention is generally limited to one or maybe a few objects or locations at any moment. For the rest of what is presently in view, the visual system is generating something like a statistical statement. In this case, the statistical statement might be, “There are red, green, blue, and yellow circles, mostly in triangular structures (with one exception).” This estimate is surprisingly rough. For instance, only attentional scrutiny would identify whether there is a vertical line in the figure.

Interestingly, however, the perceptual experience is not so rough. Instead, people experience a “grand illusion” (Noë et al., 2000) that they are seeing a coherent visual world filled with meaningful, recognized objects. One way of conceptualizing this phenomenon is to say that people “see” their “unconscious inference” (Helmholtz, 1924) about the state of the world. This mismatch between the perceptual experience and what is actually held in attention is what leads people to miss something in plain sight.

Attention and memory are closely intertwined elements of cognitive performance, and there are related capacity limits in these domains. Failures of attention could be thought of as failures of memory as opposed to failures of attention. That is, the observer might have seen and recognized something, but then have simply failed to remember it for long enough to report it. Note that from a practical point of view, it matters little whether these errors are described as “blindness” or “amnesia” since versions of these errors (e.g., failure to see a tumor in a medical scan or a missile site in a satellite image) would have the same adverse consequences either way.

Limits to retention in long-term memory are familiar, but limits to short-term or working memory can feel more surprising (Cowan, 2001). There is considerable debate among researchers about whether the latter capacity should be understood as a continuous “resource” or a set of discrete “slots” (Suchow et al., 2014), but there is agreement that the capacity is small. Researchers have found a variety of ways to demonstrate the significant limits to what humans can successfully hold in short-term memory (e.g., see Pylyshyn and Storm, 1988; Vogel and Machizawa, 2004).

These findings could have implications for the design of a human–machine ecosystem, but additional foundational research is needed on the nature of the limits on attention and memory and their significance in IC contexts. Meanwhile, researchers pursuing the design of a human–machine ecosystem can use what is already known in developing technologies, systems, or processes that may augment these human capacities.

Managing Workload

The literature on workload demands, task switching, and interruptions and their effects on human performance is highly relevant to the design of communication protocols and priorities in a human–machine ecosystem. In this environment, the types of workload demands on individual analysts would be different than in the current analytic workflow. Many agents would likely be operating in the work environment, with other agents asynchronously providing new information to be assessed and possibly interrupting the task of another agent.

A promising research avenue is to seek ways to better characterize how and when the products of semiautonomous agents and supporting technologies can best be conveyed to human analysts. A large network of semiautonomous AI agents could generate notifications or push information to human analysts on a random, and sometimes rapid, schedule. From the point of view of the human, however, this capability could have costs as well as benefits. If the semiautonomous agents were to uncover evidence of unusually high-priority risks, this information likely should be pushed to the responsible human analysts as quickly as possible. Otherwise, in lower-risk situations, it would be important to schedule information transfer so as not to stress the human analyst and to increase the information’s usability. Human analysts may also initiate searches for relevant data or inputs from other agents. Research questions of interest include how best to interrupt analysts to transfer information and how analysts would manage switching between tasks in a human–machine ecosystem.

Interruptions. The current literature on interruptions and work fragmentation suggests that people who are interrupted can sometimes compensate by working harder when they return to the interrupted task, though there may be costs in terms of stress and frustration (Bawden and Robinson, 2008; Mark et al., 2008). In other cases, interruptions simply degrade human performance. Interruption is a frequent occurrence for information workers in many work environments (for a review, see Mark et al. [2005]), but are all interruptions the same, and what are their costs? Do people spontaneously interrupt their work patterns, and if so, why? Can external cues help keep things on track (Smith et al., 2003)? These questions require new research.

Work fragmentation, with correspondingly short work episodes, may damage performance, especially on complex problems. Even when an interruption delivers task-relevant information, switching between tasks takes time (Braver et al., 2003; Monsell, 2003; Pashler, 2000). If the current task involves several complicated steps, an interruption may require going back several steps to reinstate context (Altman et al., 2014). Recursive

interruptions, in which an interruption to handle a second task is in turn interrupted by a third, can pose high demands for recovery. On the other hand, some research has shown that multitasking can sometimes improve efficiency (Grier et al., 2008).

Researchers have coded the activities of individuals in their natural work environments and explored the costs of interruption and task switching (Mark et al., 2005, 2016). Such studies indicate that cycling between multiple tasks is common, and that interruptions are most detrimental if the interrupted task is complex, if the interruption does not occur at a natural breakpoint, and if the interruption requires a switch to a different work unit. Individuals who recover well following an interruption often note the current state of information within the interrupted task for later use or analysis (Mark et al., 2005). These observations have implications for the design of protocols for interaction between humans and machines.

Switching between tasks. A classic literature has examined when and how humans divide attention between tasks. Sometimes, tasks can be carried out concurrently without loss; more often, however, performance must be traded off between tasks (Sperling and Dosher, 1986; Sperling and Melcher, 1978; Wickens, 2008, 2010), and in overload situations, operators often carry out tasks and subtasks sequentially (Wickens et al., 2015). Some of the loss in performance caused by switching between tasks can be reduced with training or practice (Monsell, 2003; Strobach et al., 2012). Research in this area has focused on workload and task switching in laboratory tasks involving rapid stimulus classifications, such as alternating color or orientation judgments, where the switching to another task is triggered by the stimulus (Bailey and Konstan, 2006; Pashler, 2000). In voluntary task switching, also studied in laboratory settings (Arrington and Logan, 2004, 2005), operators who are instructed to switch between two tasks at their own rate generally prefer to avoid switch costs. However, research on factors that might be especially important for a human–machine ecosystem, such as the difficulty or priority of a task that might affect task choice, is sparse (Gutzwiller, 2014).

Task threading (a combination of concurrent and sequential task execution), task switching, and interruption have also been studied in human factors research, often using tasks related to flight deck or other complex operations scenarios. Such studies have consisted of observing how individual human agents (e.g., a pilot) facing a computer screen (e.g., on a flight deck) manage their duty cycle with two to four tasks, each with different incentives and time demands. Models predicting the pattern of switching between tasks include preferences for easy, interesting, or high-priority tasks (Gutzwiller et al., 2014; Wickens et al., 2015). These studies also have identified connections between task switching and the human agent’s

need for breaks (Helton and Russell, 2015). Other measured aspects of individual cognitive capacities or functions, such as working memory or perceptual abilities, and their interactions with task complexity or stress may be correlated with an individual’s ability to perform in these complex environments (Kane and Engle, 2003; Kane et al., 2007; Oberlander et al., 2007; Unsworth and Engle, 2007).

When work cycles between tasks in relatively rapid episodes, the workload demands can be visible in various physiological measures, such as pupil diameter or other measures of workload or related stress, such as heart rate (Adamczyk and Bailey, 2004; Bailey and Konstan, 2006; Haapalainen et al., 2010; Iqbal et al., 2004, 2005). (See the discussion of applications of neuroscience later in this chapter.)

Human–Machine Interaction

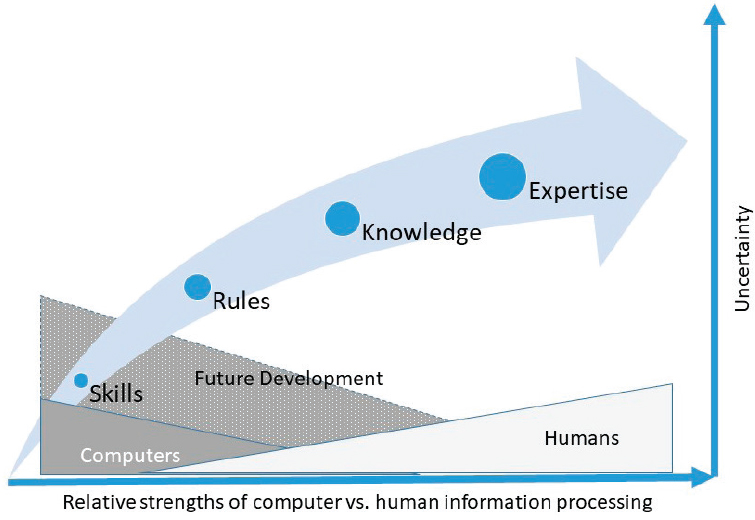

Research dating back decades has explored human–machine interaction, focusing primarily on the respective strengths and weaknesses of humans and machines and means of assigning tasks accordingly (see, e.g., Endsley, 1987; Kaber et al., 2005; National Research Council, 1951; Parasuraman, 2000; Parasuraman et al., 2000). More recent frameworks for human–machine interaction are helping system designers think about the possibilities for collaboration between humans and machines (Chen and Barnes, 2014). As shown in Figure 7-3, for example, Cummings (2014) drew on Rasmussen’s (1983) taxonomy of skills, rules, and knowledge-based behaviors to illustrate the synergy between computers and humans in relation to type of task and degree of uncertainty. Figure 7-3 shows that skills-based and rules-based tasks lend themselves to automation or execution by machines and that knowledge-based and expertise-based tasks are best performed by humans. However, the figure also indicates that the degree

NOTE: Skills-based tasks are defined as sensory–motor actions that can become highly automatic through training and practice. Rules-based tasks are actions guided by a set of procedures. Knowledge-based tasks are actions aided by mental models developed over time through repeated experience. Expertise-based tasks are actions predicated on previous knowledge-based tasks and dependent on significant experience in the presence of uncertainty.

SOURCE: Adapted from Cummings (2014).

of uncertainty inherent in the task at hand will help determine whether it can be fully automated, should be fully under human control, or can best be executed by human–machine interaction.

For repetitive or routine tasks, where the uncertainty is low and sensor reliability is high, machines have advantages over humans. For higher-level cognitive tasks, humans still outperform machines when judgment and intuition are key. However, current and projected advances in the reasoning capabilities of AI and machine learning show promise for enabling machines to take on more knowledge-based and even expertise-based tasks (Cummings, 2014; 2015 Study Panel of the One Hundred Year Study of Artificial Intelligence, 2016). With these advances, machines will be able to work alongside humans as teammates, changing the roles that both play and requiring a new understanding of how human–machine interactions can be most effective.

For intelligence analysis in the age of data overload, human analysts are likely to need assistance from machines in a number of ways. Examples include (1) providing decision support (e.g., helping to store critical information, search datasets, scan multiple images, monitor real-time data streams for anomalies, or recover situational awareness when switching between tasks); (2) generating data visualizations from large datasets in ways that help analysts discover patterns and critical information; and (3) augmenting forecasting capabilities to improve the IC’s ability to anticipate events. This section explores some of the questions that need to be considered and research needed to improve human–machine interaction for intelligence analysis, including research on applications of neuroscience, a field that is advancing rapidly and providing tools and methods.

Decision Support

A wide range of research has investigated technologies that support decision making, much of it focused on medical decision support. Greenes (2014) provides an overview of the topic’s scope within the medical field, which ranges from deep learning algorithms that support classification of images (e.g., Amir and Lehmann, 2016; Fraioli et al., 2010) to efforts to leverage big data methods so that genomic data on patients can be applied to support precision medical care (e.g., Hoffman et al., 2016). AI systems have also been developed to advise human decision makers. Examples of these applications include moving ships and cranes around a container port (Bierwirth and Meisel, 2015), moving energy around the grid (Ferruzzi et al., 2016), and maintaining supply chains (O’Rourke, 2014). At the level of individual consumers there are such applications as rules for conversing with one’s car (Strayer et al., 2016) and even options for presenting online reviews that could shape one’s choice of a restaurant (Zhang et al.,

2017). Efforts in the security realm include automating airport screening (Hättenschwiler et al., 2018).

For all of these applications, implementation requires making choices about the specific nature of the human–machine interactions involved, and the same will be true for applications of AI to intelligence analysis. Many different rules for transferring information between human and AI agents are possible. For example, does the AI offer its information before, during, or after the human’s initial decision? Different rules will produce different outcomes. Moreover, the nature of the task influences rule decisions and outcomes. For instance, if the task is to detect or predict something rare, even a good AI system is likely to produce many more false-positive than true-positive findings. As discussed further below, the interaction rule on how these findings are presented and used can affect the human user’s attitude toward and trust in the AI (Hoff and Bashir, 2015). Should the AI agent present only that information most likely to be useful, posing the risk of failing to draw attention to seemingly less important but actually critical pieces of information, or should the AI agent be programmed to deliver many possibilities to the human analyst, who would then have to separate out the useful material? Can the AI agent be programmed to base its behavior on the prior decision making of the human analyst? For instance, if the human agent were labeling all the information being provided by the AI agent as uninteresting, might the AI agent become more permissive in an effort to make the outputs of the task more comprehensive to increase its chances of providing useful information? There are no straightforward answers to such questions, and seeking those answers and developing ultimate rules for specific contexts will be a rich area for future research.

The remainder of this section reviews some of what is known about perception errors, rare events, biases, and trust to highlight the range of issues that need to be considered in developing the rules of interaction between humans and machines.

Perception errors. An error in perception might involve detecting something that is not present (a false-positive error) or failing to detect something that is present (a false-negative or miss error). Most existing research has focused on the latter class of errors. A useful taxonomy of such errors comes from work in medical image perception (Nodine and Kundel, 1987, p. 1):

- Sampling errors (also called “search” errors [Krupinski, 1996]) occur when experts fail to look in the right location or sample the right information. These are cases in which, in a world of too much information, the information that turned out to be relevant was simply never examined.

- Recognition errors are those in which the target was seen or the information reviewed, but no stimulus attracted any particular attention, and no action was taken. In these cases, the relevant information was examined but not regarded as important.

- Decision errors are those in which the observer recognizes that the stimulus or information might be important, but makes the wrong decision about what to do with that information.

Decisions depend on criteria (Macmillan and Creelman, 2005), either formally set by some standard or internally judged by the observer. A false-negative (or miss) error is made when the observer perceives a target and takes a conservative position by incorrectly concluding that it is not a target, whereas a false-positive error is made when the observer takes a more liberal position by incorrectly concluding that something is a target when it is not. The placement of a decision criterion is of particular importance for detection of rare events (see below). At an airport security checkpoint, for example, the same bag might pass through on one day but be sent for secondary inspection on another if the alert level were raised. The bag has not changed, but the decision criterion has. Signal detection theory (Green and Swets, 1966) makes it clear that shifting decision criteria simply changes the mix of errors made by observers and does not eliminate the possibility of errors.

Rare events. Many of the targets that intelligence analysts try to detect are rare events. It would be desirable, for example, to detect the warning signs of a terrorist’s intentions or of a coup d’état. But, the likelihood that any individual will become a terrorist is very, very small, and coups d’état are also quite rare. Detecting events that are naturally rare is more complicated then detecting more common ones. Research has shown that the nature of human cognition predisposes a person to miss rare events. Humans are, at least in a rough sense, Bayesian decision makers (Maloney and Zhang, 2010): they take the prior probabilities of an event or stimulus into account when making decisions. Missing the signs of a rare event can therefore be considered a form of decision error because humans are typically biased against deciding that they are detecting a rare event (after all, it is rare).

Laboratory studies of rare events in the contexts of screening mammography (Evans et al., 2013) and baggage screening (Mitroff and Biggs, 2014; Wolfe et al., 2013) have shown that low-prevalence targets are more frequently missed (Wolfe et al., 2005) and that using more conservative decision criteria is an important factor in these cases. This research also has shown that observers more readily abandon the search for a rare than for a more common target (Cain et al., 2013; Tuddenham, 1962; Wolfe and Van Wert, 2010). Other research has demonstrated that observers are less

vigilant in monitoring the world for rare events (Colquhoun and Baddeley, 1967; Mackworth, 1970; Thomson et al., 2015).

Rare events take different forms, falling on a continuum from predictable to unpredictable. The occurrence of breast cancer in a breast cancer screening program is an example of a predictable rare event: breast cancer will occur, but it will be rare (about 3 to 5 cases in 1,000 women screened in a North American population [Lee et al., 2016]). At the other end of the continuum are so-called “black swan” events.4 Obviously, truly unpredictable events will by definition, be impossible to predict. Early detection of predictable rare events, from cancer to coups d’état, is a more tractable but still difficult problem.

Technology would appear to offer a solution to the problem of detecting rare events since a computer does not get bored. An algorithm’s decision criteria can be set and will not drift to a more conservative value in the face of such events (Horsch et al., 2008). Yet even without a shift in the decision criteria, some false alarms will still occur. The extent of false alarms is one aspect of human–machine interaction that plays into human trust of technology, discussed further below.

Biases. Cognitive biases are thought processes that produce errors in decisions, as when an individual holds on to beliefs or ways of knowing in spite of contrary information (Gilovich and Griffin, 2002). Humans use information from prior experiences to understand current ones; they can often find connections and use related experience successfully in new applications. However, this ability to retrieve useful information from memory quickly is also subject to a number of biases. Individuals can, for example, be predisposed to search for information that is aligned with knowledge they already possess or that confirms a working hypothesis. While having hypotheses can be useful for sorting information, a person can become anchored to a particular working hypothesis and as a result, filter out discrepant information that may in fact be useful (Tversky and Kahneman, 1974; Yudkowsky, 2011).

Teams of people are just as susceptible to bias as individuals are. Consider the information-pooling bias—the tendency for a team to share and discuss information all members share over information known only to one team member (Stasser et al., 2000). In intelligence analysis, the capacity to consider other information that may be vital to the analysis is essential. Researchers have identified a number of simple cognitive prompts to guide

___________________

4 A black swan event is a metaphor for something that was not predicted but happened nonetheless. For example, the Fukushima nuclear reactor accident in Japan has been called a black swan event since officials believed they had prepared for all extreme threats to the reactor (Achenbach, 2011).

people to think more strategically about their decisions (e.g., Heath et al., 1998; Klein, 2007; Wittenbaum et al., 2004). Further research is needed to determine whether such prompts could work for the tasks of intelligence analysis and whether interactions with machines can be optimized to ensure that additional information critical to the problem at hand is reviewed and shared among analysts as appropriate.

Like humans, moreover, machines and AI systems are subject to errors and decision biases. In an increasingly digitized world, data mining algorithms are used to make sense of emerging streams of behavioral and other data (Portmess and Tower, 2014). Machine learning algorithms are used to identify inaccurate information automatically at the source (e.g., fraud alerts on credit cards) (Mittelstadt et al., 2016), and personalization and filtering algorithms are used to facilitate access to particular information for users (Newell and Marabelli, 2015). The issue, however, is that developers and users of such algorithms may, intentionally or inadvertently, insert bias into the algorithms’ operational parameters (Caliskan et al., 2017; Nakamura, 2013). Algorithms will reflect the gender, racial, socioeconomic, and other biases that are reflected in training data. For example, domestic U.S. criminal justice applications of machine learning and AI, such as facial recognition algorithms used in policing, have been found to be biased against African Americans (Garvie et al., 2016; Klare et al., 2012). (See Box 7-2 and Appendix D for more detail, and see Osoba and Welser [2017] and National Academies of Sciences, Engineering, and Medicine [2017a, 2018a] for further discussion of AI errors, bias, and associated risks.) This issue will continue to grow in importance as algorithms become more complex, and as they engage in semiautonomous interactions with other algorithms and are used to augment and/or replace analyses and decisions once the purview of humans.

Although research is under way to examine bias in training datasets and algorithms, one issue yet to be addressed is cultural bias, an issue of significance for the IC. Because data for training datasets are frequently collected in countries where relevant research is taking place, these algorithms are often biased to be more successful with certain cultures and geographic regions than others (Chen and Gomes, 2018). Analysts using computational models to assist in understanding and predicting the intent, behavior, and actions of adversaries may derive skewed results because of unrecognized cultural bias in the computational design process. Understanding of cultural nuances will be important in extending algorithmic applications to semantic and narrative analyses of use to the IC. For example, non-English data must be carefully defined and categorized for use in computational models to avoid mirror imaging and biasing the model toward one’s own cultural norms.5

___________________

5 Mirror imaging denotes analysts’ assumption that people being studied think and act like the analysts themselves, including their gender, race, culture, and so on (Witlin, 2008).

Designers can take steps to address and mitigate machine-based biases before algorithms and technologies are put into use by incorporating SBS insights on complex cultural, political, and social phenomena into their designs.

Trust. Lack of trust on the part of human agents will limit the potential of a human–machine ecosystem. On the other hand, excessive trust can lead to complacency and failure to intervene when the performance of technology declines (Cummings et al., 2008) or it is used for circumstances beyond its design (Parasuraman and Riley, 1997; Lee and Moray, 1994; Hoffman et al., 2013). Machine-based biases and errors will likely affect a human’s ability to trust machines completely, as demonstrated by the rare event example in Box 7-3.

The problem of low-prevalence events could adversely affect operations in a human–machine ecosystem. For example, an ecosystem’s detection of

two novel, otherwise undetected factors linking activities and information of the sort described earlier in Box 7-1 could be very useful. But if these two factors were embedded in a list of 100 spurious connections between activities, analysts might disregard the information. Given the vast number of possible interactions that might be uncovered by a human–machine ecosystem, the development of collaborative technologies capable of providing advice with high positive predictive value (i.e., number of correct conclusions/all conclusions) will be a daunting technical challenge.

Another challenge concerns the need to understand the reasoning behind the machine’s connections or conclusions. This issue has been termed “explainable AI” and is being examined by a number of research programs.6 In intelligence analysis or other types of decision making, it is not enough just to flag a connection or an anomaly; it would be useful if the machines could explain how they reached their findings.

The challenge for SBS research, then, is to understand how humans can make the best use of imperfect information from AI agents and supporting

___________________

6 See more information on Defense Advanced Research Projects Agency’s AI program at https://www.darpa.mil/program/explainable-artificial-intelligence [January 2019].

technologies. If the human–machine interactions are designed well, a feedback protocol will be in place to support both humans and machines in assessing any results critically, to allow more flexibility than human agents simply accepting or rejecting machine outputs.

Static and Dynamic Data Visualization

A human–machine ecosystem for intelligence analysis will use semiautonomous AI agents and supporting technologies to process massive digital datasets as an aid in discovering relevant patterns, including many that change over time. Because human analysts will continue to play critical roles in analysis and decision making, it will be important to consider human characteristics in the design and selection of ways to perceive the data. Sophisticated data visualizations7 are increasingly available in the public domain. Innovative new ways to present analyses of data are also likely to benefit the IC.

There are many approaches to data visualization. Textbooks and manuals have been written on the subject, many inspired by informal analysis of effectiveness, visual interest, and aesthetics (Tufte, 2003, 2006; Tufte and Graves-Morris, 2014; Tufte and Robins, 1997; Tufte et al., 1998). Visualization methods have been developed for statistical and computer applications (see, e.g., Rahlf, 2017). Indeed, the history of cartographic and visualization methods is long (Fayyad et al., 2002; Friendly and Denis, 2001; Fry, 2007; Keller et al., 1994; Steele and Iliinsky, 2010).

The volume and complexity of data available in the age of digital human behavior pose new challenges in analysis and visualization. Analyzing trends in time-varying multivariate data—that is, coding multiple variables at different times—creates special challenges for visualization and understanding, especially as large-scale time-varying simulations (Lee and Shen, 2009) are incorporated into analysis. As the volume of data potentially relevant both to research and to situations of interest to intelligence analysts increases, so do the challenges of how best to analyze and display these data for human interpretation and comprehension. Effectively conveying information to the human analyst may help support understanding, and therefore trust in the outputs of AI agents.

Researchers in the field of modern data visualization study how best to visualize data to support human perception and reasoning. An early literature on interpretation of simple graphs (e.g., Boy et al., 2014; Dujmović et al., 2010; Halford et al., 2005; Kosslyn, 1989; Wainer, 1992) focused on the distributions of single variables, differences in means, or interactive effects of variables. The aim of emerging work on data visualization is to support analysis and communication of more complex information. For example, researchers have proposed a rank ordering of different graphical formats to indicate how well they convey information about correlations

___________________

7 The term “data visualization” refers to the representation of information or data analysis in the form of a chart, diagram, picture, etc.

among variables (Harrison et al., 2014; Kay and Heer, 2016). Other recent advances include the following:

- Research has been conducted on the relative effectiveness of different visual features, including the relative discriminability of different CIE colors8 at different spatial scales (Stone et al., 2014); the effectiveness of coding by position, orientation, size, and color/luminance to reveal central tendencies, outliers, trends, and clusters in data (Healey, 1996; Szafir et al., 2016a); and how display size can influence the effectiveness of visualization (Shupp et al., 2009; Yost and North, 2006).

- Research has also been carried out on novel ways of visualizing word usage in text. Configurable color fields, such as clusters of color patterns, can help reveal patterns of word usage in documents, allowing comparison of those patterns across documents even when the relevant patterns may not be known a priori (Szafir et al., 2016b). In addition, complex word co-occurrence patterns based on frequently occurring words or word combinations can be used to derive the “topics” appearing in different bodies of text (Alexander et al., 2014; Dou et al., 2011). Keywords have been used to query financial transaction histories (Chang et al., 2007).

- Visualization of networks as graphs of connected points (nodes) or as points in multidimensional spaces in which distance conveys similarity has supported research on social networks. Study of the dynamics of social networks that change over time can potentially be aided by time animation displays or corresponding computer simulations of potential cascades of outcomes.

In practice, intelligence analysts will likely focus on the problems to be solved, not what can be accomplished with a specific visualization tool. Thus the most useful design focus will be on making the interactions with data tools natural, obvious, and transparent, permitting the analyst to move easily between different visualization applications (Shapiro, 2010; Steele and Iliinsky, 2010). Other predictable challenges may involve the fusion of multiple sources of data and treatment of missing data (Buja et al., 2008), both ongoing topics of study.

___________________

8 The CIE color model is a color space model created by the International Commission on Illumination, known as the Commission Internationale de l’Elcairage (CIE).

A combination of research in the vision sciences, the behavioral sciences, and human factors has the potential to advance understanding of how people extract meaning from a data visualization, resulting in more effective techniques and design principles. Research on visual perception and attention can be mined to improve the functionality of data visualization. Relevant topics include studies of the perception of basic visual properties (e.g., color, shape), visual search, and working memory.

Forecasting Models and Tools

A well-designed human–machine ecosystem has the potential to transform predictive forecasting—a domain that has long been central to the IC. Forecasting (the so-called “Holy Grail” of intelligence analysis) is the reliable anticipation of future events. Large-scale data sources and the

increasing complexity of intelligence problems will challenge the design of future forecasting systems. In addition, designers will have to determine how best to integrate information processed by semiautonomous AI agents and automated detection systems with human judgment. At present, forecasting methods with a 75–80 percent success rate are considered rather good, yet this success rate is often built on easy-to-moderate cases. Human analysts or algorithmic models may also score well on easy calls, but not so well on situations that are more difficult to predict. The IC needs forecasting methods that succeed with challenging problems. Recent SBS research has made significant progress toward (1) understanding how humans make predictions and improving the probability estimates of human forecasts and (2) incorporating human behavior into forecasting models. The next generation of forecasting research can build on this work to improve precision for those difficult problems with which the analyst most needs help.

Human forecasters. Human judgments and decisions, including the ability of humans to assess and manipulate probabilities, have been studied for decades, notably since the World War II era (Luce, 2005; Luce and Raiffa, 2012). Forecasting the future is a complex effort, though people do it often as they consider decisions in their personal lives (e.g., the likelihood of needing major medical treatment in the next year); in business (e.g., what kinds of dresses will sell); or in economic predictions (e.g., the economic consequences of a shift in monetary policy). The centrality of forecasting to intelligence analysis was recently highlighted by an Intelligence Advanced Research Projects Activity (IARPA) research initiative on geopolitical forecasting, which sponsored competitive forecasting tournaments.9 These tournaments were focused on such questions as: How likely is it that unrest in region R will explode into violence, and on what time scale? Will country C develop enriched nuclear stockpiles of a critical size? Will a regional outbreak of infectious disease D be transmitted to the United States? Will the capacity to generate clean fresh water be outpaced by population growth in arid region Z?

The initial 4-year round of IARPA funding covered five academic research groups that participated in these tournaments, producing a wealth of research on potential approaches to improving geopolitical forecasting (Tetlock, 2017). The research groups focused directly on understanding the probability estimates made by humans and how best to aggregate them. The research topics included quantitative evaluation of probability estimation, the technical issues underlying the transformation and/or aggregation of probability estimates from several individuals, the characteristics of

___________________

9 For more information on IARPA’s Aggregative Contingent Estimation program, see https://www.iarpa.gov/index.php/research-programs/ace [November 2018].

individual forecasters who are very successful, and whether selection or training could improve the accuracy of forecasts. This research program yielded valuable information for the IC, predicting yes-or-no answers to geopolitical questions relevant to its work. For example: Will Italy’s Silvio Berlusconi resign, lose reelection/confidence vote, or otherwise vacate office before October 1, 2011 (Satopää et al., 2014a)?10

The IARPA tournaments led to a number of key conclusions, resulting especially from the prominent work of one of the research groups—the Good Judgment Project (Mellers et al., 2014). This group may have outperformed others precisely because it focused on the training and selection of individuals and teams, as well as the use of technical models of probability estimation and aggregation.11 The training directed individuals to recognize potential biases of human probability estimates and reasoning (Fischhoff et al., 1978; Fong et al., 1986; Slovic et al., 1980). The group showed that certain interventions—such as providing individual training, increasing the interchange within teams, and the selection of “superforecasters” (the top few percent of performers)—all can improve the performance of both individuals and teams12 (Mellers et al., 2014).

One question for the next generation of research is how AI might be integrated into forecasting teams (see discussion of human–technology teaming below). To contribute effectively to team forecasts, AI and the automated models from which it would draw information would have to do a better job of incorporating human behavior into their predictions.

Incorporating human behavior into forecasting models. Research on human behavior, whether cognitive or social, has become increasingly relevant to forecasting. Many forecasting tools used in everyday life are based on physical models (e.g., forecasting of storm paths from weather models). Many of these physical models help predict impacts on humans but do not incorporate impacts of human behavior on the system. However, some tools have incorporated human behavior in their models. For example, a model used to predict the future geospatial distribution of valley fever—

___________________

10 Each human forecaster participating in a tournament would provide a probability estimate—e.g., 0.70 for a yes-no event—with the event being coded as 1 or 0 for whether it was predicted to occur or not. Brier (1950) scoring was used to assess the calibration of the forecasted probability (e.g., BS = ERj = 1(fi – oi)2, where R is the number of outcomes—2 as the problems were binary, fi is the forecasted probability, and oi is the outcome).

11 Other IARPA-funded groups focused on methods for aggregating and transforming probability estimates to correct for biases near the endpoints (i.e., underestimation near 1 and overestimation near 0) that may emerge from asymmetric noise distributions [Turner et al., 2014; Erev et al., 1994; Baron et al., 2014]).

12 The team performance was measured by the median probability estimate of an interacting group of individuals.

which tends to expand north and east from its area of greatest incidence in the southwestern United States when air and soils become warmer—incorporates both the impacts of human behavior on soil disturbance and climate factors (Gorris et al., 2018).

Two relevant examples were highlighted in the workshops held for this study (NASEM, 2018d; see Chapter 1). One of these examples, parallel to the valley fever case, shows how physical models of Zika virus vectors based on seasonal wetness, temperature, and mosquito proliferation identify baseline risk, but can be made more accurate when such human factors as risk behaviors, the mobility of infected individuals, or the potential impacts of available sociopolitical responses are also modeled (Monaghan et al., 2016). Other inputs might include indicators derived from data mining of social messaging (Twitter, Facebook, etc.), used to provide online estimates of outbreak severity. In the second example, indicators of human behavior are introduced into analytic estimates of human water insecurity in regions of water scarcity and population increase identified by the United Nations, many in the Middle East. Researchers from the Massachusetts Institute of Technology (MIT) forecasted regional water poverty by estimating the likelihood of large-scale water infrastructure projects based on such observable human indicators as local decision making, permits, and funding (Siddiqi et al., 2016). This example illustrates the benefits of specifying the most useful human indicators to include in a forecasting model.

Both of these examples start with models based in physical or biological mechanisms that become more sophisticated in one of three ways: (1) by incorporating further model modules to account for important aspects of human behavior (e.g., population mobility, exposure epidemiology, physical consequences of policy initiatives); (2) by measuring key inputs based on associated aspects of human behavior (e.g., disease contagion or measures of social unrest harvested from social media); or (3) by incorporating new questions or new indicators based on deconstruction of a problem (e.g., physical precursors of water projects, analysis of the availability of precursor supplies in an economic supply chain).

Data analytics and statistical models are tools that underpin the majority of forecasting. Traditionally, empirical models have been built from intentional observational studies, using statistically designed data collections. The data revolution and the explosion of data science have opened up new data sources not traditionally used in empirical models (Keller et al., 2016, 2017; NASEM, 2017b). Today, access to administrative data (i.e., data collected to administer a program, business, or institution) and opportunity data (e.g., embedded sensors, social media, Internet entries) are routinely being accessed to support analyses, even within the IC and U.S. Department of Defense (DoD) contexts (NASEM, 2017b). New quantitative paradigms are being developed to manage the diversity of data and the

challenges associated with repurposing massive amounts of non–statistically designed data sources for analysis (National Research Council, 2013). The IC has a growing program in open-source intelligence making use of such nontraditional data sources (Williams and Blum, 2018).

The IC has a long history of implementing and understanding engineering and physical systems modeling. The challenges highlighted in this report will require the IC to build corresponding competency in social systems modeling to support forecasting and sensemaking. The transition from physical/engineering-based modeling to social systems modeling is not straightforward (NASEM, 2016), however, for the following reasons:

- Physical systems often evolve according to well-known rules, so that uncertainties in predictions based on such models can be narrowed down to uncertainties in model parameters, initial conditions, and residual errors. By contrast, social systems often evolve according to rules that are not well understood, making the difference between such models and real life highly uncertain at times; quantifying this uncertainty can be challenging.

- A substantial amount of direct data is available for many physical systems with which to calibrate these models and estimate uncertainties in model-based predictions. Data for many social system models, on the other hand, are not readily available, and it may not be possible to produce those data directly (e.g., inducing strong emotions such as hatred in a human subject is unethical). Data may therefore need to be repurposed for use in supporting models of social systems.

- Engineered systems are often designed to operate so that their various subprocesses behave linearly, with minimal interaction, and operate within their designed specifications. Complex interactions and feedbacks are often the focus for many social systems, and the humans that are central to social systems do not have design specifications.

- Behavior mechanisms in physical systems can be modeled relatively easily because their effects are well known and independent (e.g., behavior of materials for yielding, fatigue, fracture, and creep. In contrast, mechanisms in human systems often interact (e.g., sadness, depression, addiction), making modeling more difficult.

- Extrapolation is difficult for physical and social systems. However, extrapolation is frequently required in many social system settings since the complexities of these systems often put them on a trajectory that is unlike previous experience.

___________________

13 More information on IARPA’s Hybrid Forecasting Competition can be found at https://www.iarpa.gov/index.php/research-programs/hfc?id=661 [November 2018].

14 Also, strong claims have been made about the calibrating force of the proper scoring method, such as the Brier score (Brier, 1950; Mellers et al., 2015; Tetlock et al., 2014), and it is not clear whether training and calibration of human forecasters would be equally effective in multinomial or expanded probability assessment problems, or indeed how best to aggregate estimates from multiple sources.

Applications of Neuroscience

Projected progress in neuroscience could be particularly relevant to the development of human–machine ecosystems, optimizing human–machine interactions. Developing work on the neurobiological relationships that underlie emotion, motivation, and cognition and influence cognitive processes (e.g., decision making) and their determinants is yielding possibilities for identifying and tracking physiological responses that signal mental and emotional states and improving task performance. Related work on interfaces between the human brain and computer technology, while further from implementation, show promise for significantly increasing the efficiency of technology-supported work.

Monitoring physiological responses. As an example of the application of neuroscience, neurotechnologies such as functional neural imaging (functional magnetic resonance imaging [fMRI]15 and functional near-infrared spectroscopy [fNIRS]16) provide tools with which to identify neural correlates of various dimensions of analytic thinking, such as inductive reasoning, pattern detection, cognitive flexibility, cognitive bias, open-mindedness, and even creativity. Mapping based on experimental results could produce a catalog of neural correlates of analytic thinking—that is, regions or networks of the brain that are active during a particular dimension of analytic thinking. Such mapping could provide a basis for vetting various human–machine interactions anticipated in a human–machine ecosystem.

Another important contribution of neuroscience is in the development of strategies and sensors designed to measure and potentially mitigate mental and physical fatigue in the workplace (see also the discussion of supports for the analytic workforce in Chapter 8). Recent advances have been made in developing tools for monitoring the physiological state of human agents and enhancing the interactions between humans and machines. Further developments in this area are likely to provide tools that can be used both in SBS research to study strategies for improving operations in a human–machine ecosystem and within the ecosystem itself as a way of monitoring the state of the environment and providing feedback to agents.

___________________

15 fMRI is used to measure changes in blood flow across the brain as an indication of changes in neural activity. Used in hospitals and imaging facilities, it measures changes in neural activity with high spatial resolution, but the size of the device does not allow for deployment in field settings.

16 fNIRS is used to measure changes in blood flow and changes in oxygenation of blood in discrete regions of the brain as an indication of neural activity. It measures changes in neural activity with moderate spatial resolution, and the size and portability of the device allow for deployment in field settings.

A wide range of portable, noninvasive biological sensors now available can monitor such physiological parameters as respiration and heart rate and a number of other biological signals.17 Many of these parameters are highly sensitive to stress, and can serve as important markers of specific aspects of stress, performance, and fatigue in human agents. Output from a number of these sensors has been used in developing explicit measures of cognitive workload (Liu et al., 2018). Biological markers, for example, including emerging skin-sensor measures of metabolic and neuroendocrine status (Rohrbaugh, 2017), could be valuable in identifying workload effects and provide important tools for use in studies of strategies for mitigating fatigue.

Although direct contact between a human agent and the sensor is necessary for some of these measures, work on the development of remote sensors that do not have that limitation is under way. Such sensors could be used to monitor physiological parameters as diverse as heart rate and heart rate variability, respiration, electrodermal activity, and other responses in people who are moving and are at a distance from the sensor.18

The development and deployment of these technologies will pose a wide range of ethical, legal, and social challenges (discussed further below). The use of biological sensors without the consent of those whose responses were being measured would pose clear questions, for example, but their use could also foster various forms of dependence among those who consented. Individuals who relied on data from the sensors to monitor their states and regulate their activities might become overreliant on the technology and less skillful at monitoring their own physiological responses (Bhardwaj, 2013; Lu, 2016; Noah et al., 2018). And widespread use of biosensors would contribute to concerns about a state in which too many actions are under surveillance (Shell, 2018; Rosenbalt et al., 2014; Moore and Piwek, 2017), as well as raise questions about the “quantified self”—the idea that all understanding of human behaviors is reduced to the data being tracked (Swan, 2013).

Interfaces between the human brain and computers. Research in neurotechnology and human–machine interactions has also explored interfaces between the human brain and computers; current applications of this work tend to be limited to the laboratory and are not yet practical for general use (Nicolas-Alonso and Gomez-Gil, 2012). A human operator using a

___________________

17 Other physiological parameters that can be monitored include heart rate variability; cardiovascular performance derived from impedance cardiography; sympathetic and parasympathetic activity indexed by pupillometry; localized cerebral blood flow revealed by near-infrared spectroscopy; and electrodermal, electroencephalographic, electromyographic, and neuroendocrine responses.

18 Other responses include photoplethysmographic, pupillometric, oculometric (eye-tracking), and pneumographic (respiratory) responses (Rohrbaugh, 2017).

keyboard and perhaps a mouse to enter and receive information through visual displays and possibly auditory communications represents a rather cumbersome and inefficient mode of interaction. The ability of the computer to interpret the thoughts of an operator would have significant implications for intelligence analysis, especially in combination with AI. Indeed, developments in brain–computer interfaces are rapidly emerging, making “thoughts” available as input to software applications. Instead of using typing or voice to issue data, an operator can deliver commands or information to a computer program simply by thinking. Current applications of this technology include devices that monitor the attention an individual devotes to a task and those that detect a selection that currently would be indicated by a mouse click. Emerging applications interface with virtual reality to convey feedback of reactions to the system through thoughts. Eventually, the brain–computer communication could be two-way, allowing a computer to induce perception in the brain of an operator.

DoD and the broader scientific community are increasingly interested in enhancing such interfaces. In support of President Obama’s BRAIN (Brain Research through Advancing Innovative Neurotechnologies) initiative, DoD sponsored the Systems-Based Neurotechnology for Emerging Therapies (SUBNETS) program.19 This program entailed a multi-institutional effort (the University of California-San Francisco, Massachusetts General Hospital, Lawrence Livermore National Laboratory, and Medtronics) to develop and deploy brain–computer interfaces (including invasive procedures) to advance health. More recently, DARPA sponsored the Towards a High-Resolution, Implantable Neural Interface project in support of the 2016 Neural Engineering System Design program.20 Although many of these efforts involve invasive methods, noninvasive approaches are increasingly viable and currently in development. One such system is envisioned as a wearable interactive smart system that can provide environmental and contextual information via multimodal sensory channels (visual, auditory, haptic); read the physiological, affective, and cognitive state of the operator; and assist in focusing attention on relevant items and facilitate decision making. Although this capability may be somewhat fanciful, and perhaps unrealistic with current technology and neurobiological understanding, ongoing interdisciplinary efforts are moving in this direction.

___________________

19 See https://www.darpa.mil/program/systems-based-neurotechnology-for-emerging-therapies [July 2018].

20 See https://www.darpa.mil/news-events/2017-07-10 [July 2018].

Human–Technology Teaming

Intelligence analysts generally work in analytic teams and collaborate regularly with their colleagues (see Chapter 4), and a key objective for a human–machine ecosystem is for analysts to work productively with technological teammates. A variety of research sheds light on the nature of teams in the workplace, some in the security context (see Box 7-4), and points to research directions for better understanding of human–machine teaming. This section reviews the science of teamwork and then explores its applications to teams involving autonomous or semiautonomous AI systems.

The Science of Teamwork

A rich literature describes research on teamwork and the factors that make human teams effective (Salas et al., 2008). The science of teams and teamwork gained impetus in July 1988 when the USS Vincennes accidentally took down an Iranian Airbus, killing 290 passengers. This incident was attributed in part to poor team decision making under stress. The Department of the Navy established a research program—TADMUS (Tactical Decision Making Under Stress)21—to identify research in human factors and training that could be useful in preventing such incidents. This program focused significant attention on team training (Cannon-Bowers and Salas, 1998). DoD subsequently supported significant research on

___________________

21 See http://all.net/journal/deception/www-tadmus.spawar.navy.mil/www-tadmus.spawar.navy.mil/TADMUS_Program_Background.html [June 2018].

the science of teams (Goodwin et al., 2018). In 2016, for example, the Army Research Office funded a Multi-University Research Initiative on the network science of teams, and in 2018, the Army Research Laboratory announced its Strengthening Teamwork for Robust Operations in Novel Groups (STRONG) program, focused on human–agent teaming.

The research base in this area has grown as recognition of the importance of understanding and improving teamwork has spread to other sectors, including medicine, energy, and academia. Foundational work established the nature of a team: a special type of group whose members have different but interdependent roles on the team (Salas et al., 1992). The study of teams within academia has come to be known as team science, which focuses on examining such questions as which team features influence scientific productivity (e.g., number of publications) and scientific impact (e.g., number of citations) (for a review, see Hall et al. [2018]). A 2015 National Academies report, Enhancing the Effectiveness of Team Science (National Research Council, 2015) addresses collaboration among teams of scientists, which often operate across disciplines. The interdisciplinary field of computer-supported cooperative work (Grudin, 1994) has developed to address the integration of computing technologies into teams, and most recently, advances in AI have led to work on the teaming of humans and autonomous agents or robots (McNeese et al., 2017).

Individuals almost always are members of multiple teams concurrently (O’Leary et al., 2011). In some cases, this multiteam membership enriches the performance of all teams because individuals serve as conduits of best practices across teams (Lungeanu et al., 2018). Research also has examined teams of teams, treating them as multiteam systems (DeChurch et al., 2012). This research has focused on the dilemma that the overarching goals of the system (e.g., investing resources to share intelligence relevant to other teams) are often not well aligned with the local goals of each team (e.g., investing resources in collecting and acting on intelligence within a particular team), which makes for inherent tension. This research has led to conceptualizing multiteam systems as ecosystems of networked groups (Poole and Contractor, 2012).

Research has also yielded practical guidance on how best to assemble human teams, how to train and lead teams, and how such outside influences as stress influence teamwork (Cannon-Bowers and Salas, 1998; Contractor, 2013). Significant research has also been carried out in the area of team cognition (Salas and Fiore, 2004). This work indicates that teammates who share knowledge about the task and the team (what has been referred to as shared mental models) are better able to coordinate implicitly (Entin and Serfaty, 1999; Fiore et al., 2001). For large or spatially distributed teams, individual team members likely can hold only partial understandings of the tasks and teammates at any given time. In such situations, cognition at the